Comments (4)

Hi Jan, thank you for the question, again an insightful one :)

Maybe you are referring to re-shuffling the observations during a rollout?

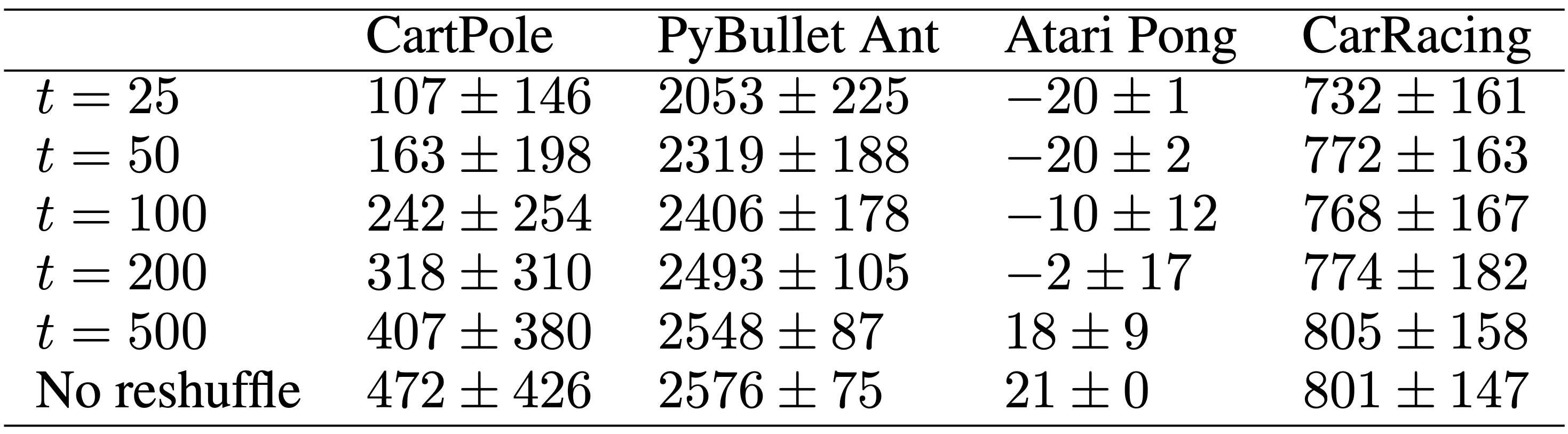

If this is the case, you are partially right: by the time the observations are reshuffled, self.hx has accumulated information about the previous observations, so the agent's performance is disrupted once the observations no longer arrive in the same order. However, in our experiments where we reshuffle the observations every t steps, we found that AttentionNeuron was able to "reset" its internal state and recover its performance (see the table below), though the recovery speed depends on the underlying task.
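To make the effect concrete, here is a minimal toy sketch, not the code from the repository. It assumes a hypothetical `ToyAttentionNeuron` in which each input slot keeps one scalar recurrent state in `hx` (a leaky tanh update standing in for the LSTM), the keys are those states, the queries are fixed scalars, and the values are the raw observations. All names, weights, and the constant observation vector are made up for illustration.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyAttentionNeuron:
    """Toy stand-in: one recurrent state per input slot produces that slot's key."""

    def __init__(self, num_inputs, queries=(0.5, -1.0), decay=0.5):
        self.hx = [0.0] * num_inputs  # per-slot state, plays the role of self.hx
        self.queries = queries        # fixed queries, independent of the input
        self.decay = decay            # assumption: how much old state is kept

    def step(self, obs):
        # Each slot updates its state from whatever signal arrives at that slot.
        self.hx = [math.tanh(self.decay * h + x) for h, x in zip(self.hx, obs)]
        out = []
        for q in self.queries:
            weights = softmax([q * k for k in self.hx])
            out.append(sum(w * x for w, x in zip(weights, obs)))
        return out


def rollout(obs, steps, order_at):
    """Run the toy layer; order_at(t) gives the input ordering used at step t."""
    agent = ToyAttentionNeuron(len(obs))
    return [agent.step([obs[i] for i in order_at(t)]) for t in range(steps)]


def max_gap(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


OBS = [1.0, -1.0, 0.5, -0.5]  # constant toy observation
IDENTITY = [0, 1, 2, 3]
REVERSED = [3, 2, 1, 0]

baseline = rollout(OBS, 100, lambda t: IDENTITY)
fixed_shuffle = rollout(OBS, 100, lambda t: REVERSED)
mid_shuffle = rollout(OBS, 100, lambda t: IDENTITY if t < 20 else REVERSED)

# Same ordering for the whole rollout: outputs match the baseline.
gap_fixed = max(max_gap(a, b) for a, b in zip(baseline, fixed_shuffle))
# Reshuffle at t=20: stale per-slot states meet signals from other sensors.
gap_at_shuffle = max_gap(baseline[20], mid_shuffle[20])
# Long after the reshuffle the stale state has decayed away.
gap_later = max_gap(baseline[99], mid_shuffle[99])
print(gap_fixed, gap_at_shuffle, gap_later)
```

With these toy settings the gap for a fixed ordering stays at floating-point noise, the gap at the reshuffle step is clearly non-zero, and it shrinks back to noise later because the influence of the stale state is at least halved at every step. How fast a trained LSTM forgets is task dependent, which is consistent with the recovery speed varying across tasks.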

from brain-tokyo-workshop.

Thank you for the quick reply! Yes, I am referring to the re-shuffling of the observations during a rollout.

I actually have 2 additional questions related to this.

- In your paper, you provide generic mathematical definitions of the AttentionNeuron layer and you state that [formula missing]. However, shouldn't it be [formula missing]? Since there is an LSTM cell and its hidden states.

- Do you think it would be possible to have a setup where the agent only looks at the current observation and the previous action (IMO this would be a truly permutation invariant agent w.r.t. current observation)? Or in other words, an agent that would give you exactly the same performance no matter how often you reshuffle during your rollout.

[EDIT]

- For non-vision tasks (Ant and CartPole) we used an LSTM, but for vision tasks (Pong and CarRacing) we used stacked frames and MLPs to avoid large computational graphs. Admittedly, we could have used separate and more precise notations for f_k and f_v, but we thought a generic formula (though less accurate) was better for conveying the general idea of the paper.

- For this question, I can only reason from experience. Learning a PI agent that depends only on (o_t, a_{t-1}) is hard. For example, if your control signal is force/torque, it affects the dynamics at second order (i.e., force->velocity->position), so what o_t reflects may not be the result of applying a_{t-1}. You may wonder whether (o_t, a_{t-1}, a_{t-2}) would work; I think it depends on the task. For CartPole, I was able to train the agent by stacking k=4 observations, and I guess k<4 may work as well (I didn't try, though). For Ant or other locomotion tasks, contact and friction with the ground will likely require a larger k. On the other hand, it may be possible for other tasks, and it is an exciting direction for future work.
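As a rough illustration of the trade-off between k and reshuffling, here is a toy sketch under stated assumptions, not the setup used in the paper. Each input slot stacks its last k readings, a hypothetical fixed weight vector `key_weights` turns that stack into an attention score, and the previous action is left out for brevity. The signal, the weights, and the rotation used as the "reshuffle" are all made up.

```python
import math
from collections import deque


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rollout(signal, k, shuffle_every):
    """Attention pooling where each input slot sees its own last k readings.

    shuffle_every=0 keeps the ordering fixed; otherwise the ordering is rotated
    by one position every `shuffle_every` steps (a deterministic reshuffle).
    """
    n = len(signal[0])
    order = list(range(n))
    windows = [deque([0.0] * k, maxlen=k) for _ in range(n)]
    key_weights = [0.7 ** j for j in range(k)]  # hypothetical fixed weights
    outputs = []
    for t, obs in enumerate(signal):
        if shuffle_every and t > 0 and t % shuffle_every == 0:
            order = order[1:] + order[:1]
        shuffled = [obs[i] for i in order]
        for window, x in zip(windows, shuffled):
            window.append(x)  # the oldest reading falls out automatically
        scores = [sum(w * f for w, f in zip(key_weights, window))
                  for window in windows]
        weights = softmax(scores)
        outputs.append(sum(w * x for w, x in zip(weights, shuffled)))
    return outputs


# Toy signal: 4 sensors, 40 steps.
signal = [[math.sin(0.3 * t + i) for i in range(4)] for t in range(40)]

# k=1: the output depends only on the current observation, so reshuffling
# at every single step changes nothing.
k1_fixed = rollout(signal, k=1, shuffle_every=0)
k1_shuffled = rollout(signal, k=1, shuffle_every=1)
k1_gap = max(abs(a - b) for a, b in zip(k1_fixed, k1_shuffled))

# k=4: a reshuffle at t=10 mixes readings from different sensors inside each
# stack, and the stacks are clean again once k readings have arrived (t=13).
k4_fixed = rollout(signal, k=4, shuffle_every=0)
k4_shuffled = rollout(signal, k=4, shuffle_every=10)
k4_gap_at_shuffle = abs(k4_fixed[10] - k4_shuffled[10])
k4_gap_recovered = max(abs(k4_fixed[t] - k4_shuffled[t]) for t in range(13, 20))
print(k1_gap, k4_gap_at_shuffle, k4_gap_recovered)
```

In this toy, k=1 is exactly invariant to reshuffling at any frequency, while a larger k is disturbed for k-1 steps after each reshuffle. That only speaks to invariance; whether an agent with a small k can be trained to solve the task is the separate difficulty described above.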

Let me know if the above answers your questions :)

Makes perfect sense!

Thank you again!!!
