Git repo for Analysis Services samples and community projects

A customizable QPU AutoScale solution for Azure Analysis Services (AAS) that supports scaling up/down as well as in/out

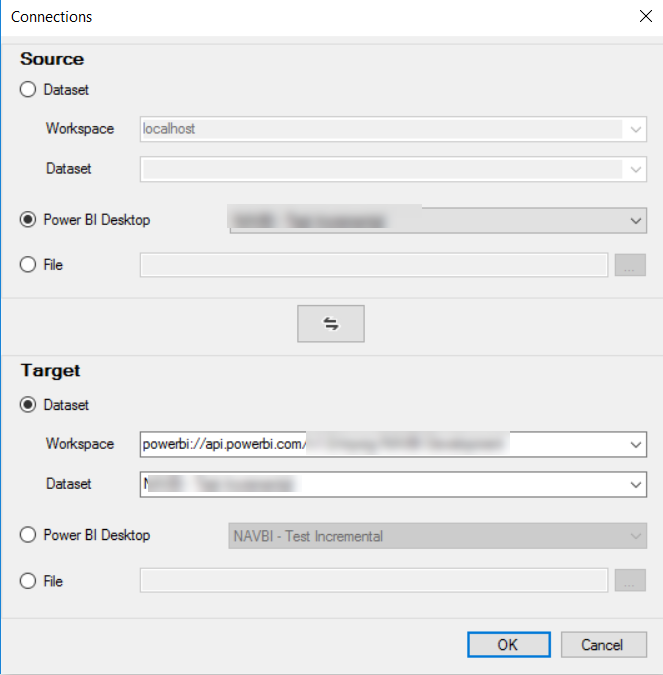

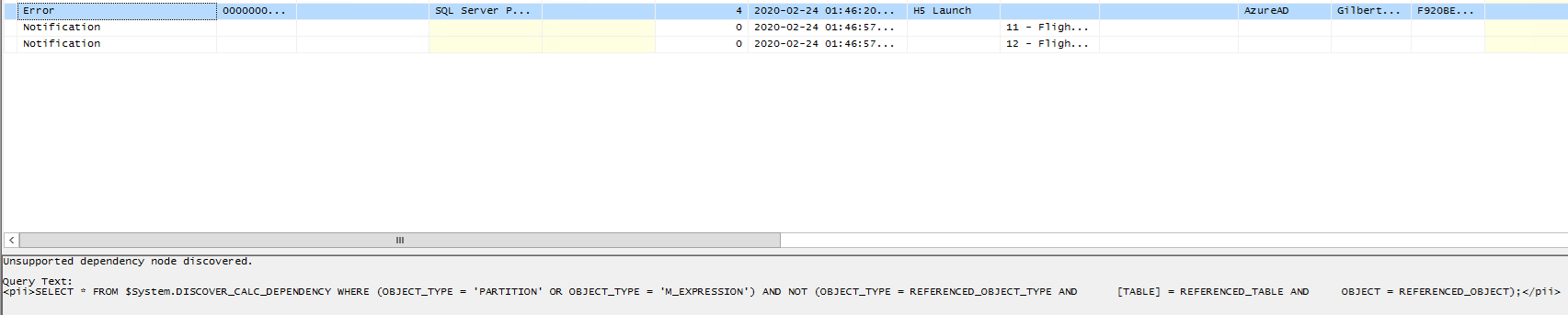

ALM Toolkit is a schema diff tool for tabular models

Automated partition management of Analysis Services tabular models

Real-time monitoring of Analysis Services memory usage broken out by database

The ASTrace tool captures a Profiler trace and writes it to a SQL Server table without requiring a GUI

The AsXEventSample project shows how to collect streaming xEvents

Sample U-SQL scripts that demonstrate how to process a TPC-DS data set in Azure Data Lake.

Python script to reassemble job graph events from Analysis Services.

A curated set of rules covering best practices for tabular model performance and design which can be run from Tabular Editor's Best Practice Analyzer.

Metadata Translator can translate the names, descriptions, and display folders of the metadata objects in a semantic model by using Azure Cognitive Services.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.