UniPose: Unified Human Pose Estimation in Single Images and Videos.

NEW!: BAPose: Bottom-Up Pose Estimation with Disentangled Waterfall Representations

Our novel framework for bottom-up multi-person pose estimation achieves state-of-the-art results on several datasets. The pre-print of our new method, BAPose, is available at the following link: BAPose pre-print. Full code for the BAPose framework is scheduled to be released in the near future.

NEW!: UniPose+: A unified framework for 2D and 3D human pose estimation in images and videos

Our novel and improved UniPose+ framework for pose estimation achieves state-of-the-art results on several datasets. UniPose+ is available at the following link: UniPose+ at PAMI. Full code for the UniPose+ framework is scheduled to be released in the near future.

NEW!: OmniPose: A Multi-Scale Framework for Multi-Person Pose Estimation

Our novel framework for multi-person pose estimation achieves state-of-the-art results on several datasets. The pre-print of our new method, OmniPose, is available at the following link: OmniPose pre-print. Full code for the OmniPose framework is scheduled to be released in the near future. GitHub: https://github.com/bmartacho/OmniPose.

We propose UniPose, a unified framework for human pose estimation based on our "Waterfall" Atrous Spatial Pooling architecture, which achieves state-of-the-art results on several pose estimation metrics. UniPose incorporates contextual segmentation and joint localization to estimate the human pose in a single stage with high accuracy, without relying on statistical post-processing methods. The Waterfall module in UniPose leverages the efficiency of progressive filtering in the cascade architecture while maintaining multi-scale fields-of-view comparable to spatial pyramid configurations. Additionally, we extend our method to UniPose-LSTM for multi-frame processing, which achieves state-of-the-art results for temporal pose estimation in video. Our results on multiple datasets demonstrate that UniPose, with a ResNet backbone and the Waterfall module, is a robust and efficient architecture for pose estimation, obtaining state-of-the-art results in single-person pose detection for both single images and videos.
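To illustrate what single-stage joint localization without statistical post-processing can look like at the output end, the sketch below turns one confidence map per joint into image coordinates by taking each map's peak. It is a minimal pure-Python sketch, not the UniPose code: it assumes the network emits one H x W heatmap per joint, and the names `heatmap_peak`, `decode_joints`, and the `stride` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumption: one H x W grid of per-pixel confidences for a single joint.
Heatmap = Sequence[Sequence[float]]


def heatmap_peak(heatmap: Heatmap) -> Tuple[int, int, float]:
    """Return (row, col, score) of the highest-scoring pixel in one heatmap."""
    best = (0, 0, float("-inf"))
    for r, row in enumerate(heatmap):
        for c, value in enumerate(row):
            if value > best[2]:
                best = (r, c, value)
    return best


def decode_joints(heatmaps: Sequence[Heatmap], stride: int = 1) -> List[Tuple[int, int, float]]:
    """Map each joint heatmap to (x, y, score) in input-image pixels.

    `stride` is a hypothetical downsampling factor between the input image and
    the heatmap grid; the real UniPose output resolution may differ.
    """
    joints = []
    for hm in heatmaps:
        r, c, score = heatmap_peak(hm)  # plain argmax, no statistical post-processing
        joints.append((c * stride, r * stride, score))
    return joints


if __name__ == "__main__":
    hm_a = [[0.0, 0.1, 0.0], [0.2, 0.9, 0.1], [0.0, 0.0, 0.0]]
    hm_b = [[0.0, 0.0, 0.8], [0.0, 0.1, 0.0], [0.0, 0.0, 0.0]]
    print(decode_joints([hm_a, hm_b], stride=4))  # [(4, 4, 0.9), (8, 0, 0.8)]
```

A plain argmax keeps the decoding deterministic and cheap; sub-pixel refinement or temporal smoothing would be separate, optional steps on top of this.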

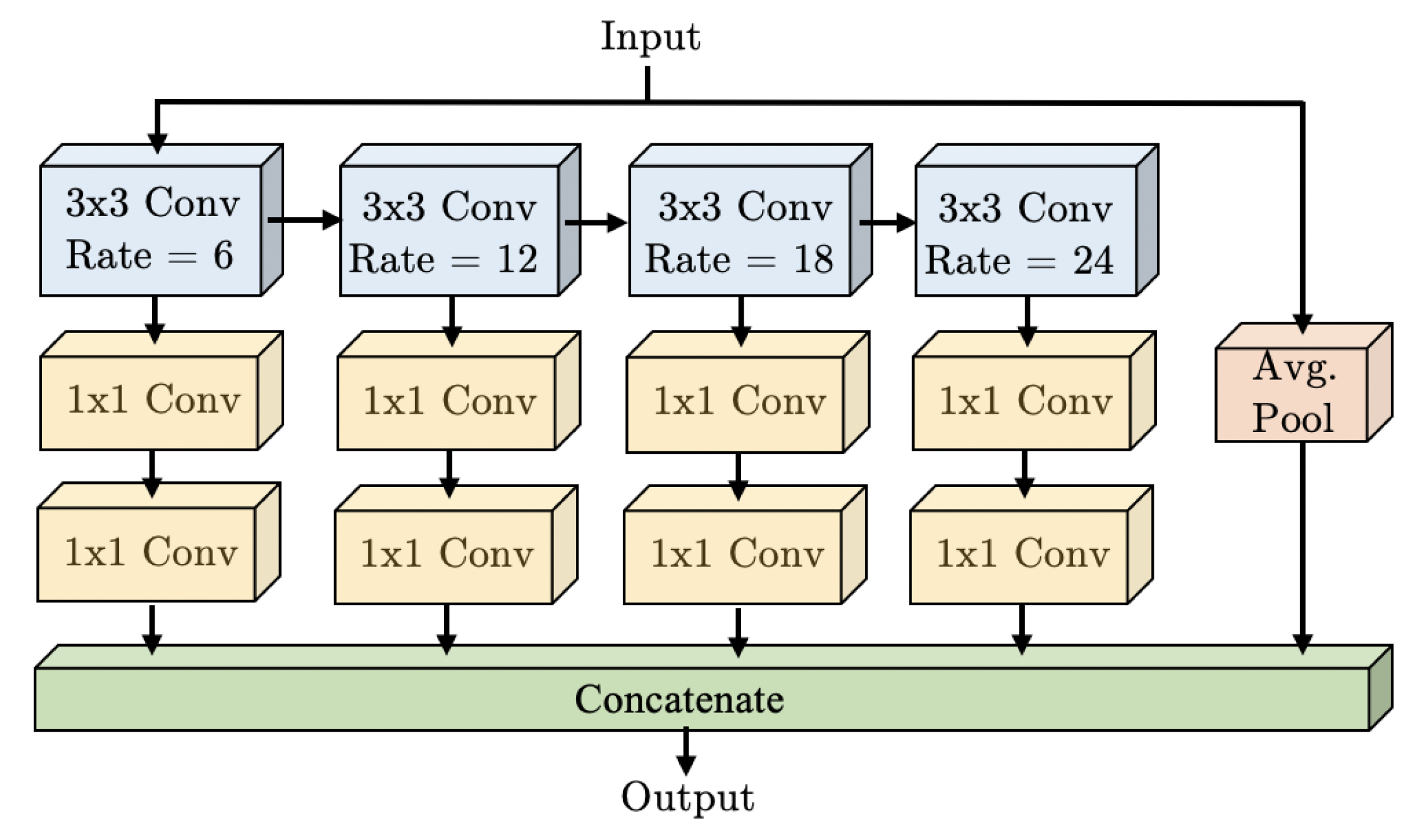

We propose the “Waterfall Atrous Spatial Pooling” (WASP) module, shown in Figure 3. WASP is a novel architecture built on atrous convolutions that leverages both the larger field-of-view of the Atrous Spatial Pyramid Pooling (ASPP) configuration and the reduced model size of the cascade approach.
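The trade-off described above can be checked with a little arithmetic. The sketch below compares the receptive field (in feature-map pixels) and the atrous-convolution weight count of parallel, ASPP-style branches against waterfall branches, where each branch is fed by the previous one. It assumes stride-1 3x3 atrous convolutions with dilation rates {6, 12, 18, 24}, a 2048-channel backbone output (as produced by the last ResNet stage), and 256 channels per branch; these values and all function names are illustrative assumptions, not the exact UniPose configuration.

```python
from typing import List, Sequence


def atrous_rf(dilation: int, kernel: int = 3) -> int:
    """Effective kernel size of one stride-1 atrous convolution."""
    return dilation * (kernel - 1) + 1


def aspp_fields(rates: Sequence[int], kernel: int = 3) -> List[int]:
    """Parallel branches: each sees the input through a single atrous conv."""
    return [atrous_rf(d, kernel) for d in rates]


def wasp_fields(rates: Sequence[int], kernel: int = 3) -> List[int]:
    """Waterfall branches: branch i is fed by branch i-1, so fields accumulate."""
    fields, rf = [], 1
    for d in rates:
        rf += atrous_rf(d, kernel) - 1  # stacking a stride-1 conv adds (k_eff - 1)
        fields.append(rf)
    return fields


def branch_weights(rates: Sequence[int], c_in: int, c_out: int,
                   kernel: int = 3, waterfall: bool = True) -> int:
    """Weights in the atrous convs only (biases, 1x1 convs and pooling ignored)."""
    total = 0
    for i in range(len(rates)):
        # In the waterfall, only the first branch reads the wide backbone output.
        src = c_out if (waterfall and i > 0) else c_in
        total += src * c_out * kernel * kernel
    return total


if __name__ == "__main__":
    rates = [6, 12, 18, 24]
    print(aspp_fields(rates))  # [13, 25, 37, 49]
    print(wasp_fields(rates))  # [13, 37, 73, 121]
    print(branch_weights(rates, 2048, 256, waterfall=False))  # 18874368
    print(branch_weights(rates, 2048, 256, waterfall=True))   # 6488064
```

Under these assumptions, the waterfall branches still cover a wide range of fields-of-view while using roughly a third of the atrous-convolution weights, because only the first branch reads the 2048-channel input.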

Examples of UniPose pose estimation on single images and videos are shown in Figure 4.

Figure 4: Pose estimation samples for UniPose in images and videos.

Link to the published article at CVPR 2020.

Datasets:

Datasets used in this paper and required for training, validation, and testing can be downloaded directly from the dataset websites below:

LSP Dataset: https://sam.johnson.io/research/lsp.html

MPII Dataset: http://human-pose.mpi-inf.mpg.de/

PennAction Dataset: http://dreamdragon.github.io/PennAction/

BBC Pose Dataset: https://www.robots.ox.ac.uk/~vgg/data/pose/

Pre-trained Models:

The pre-trained weights can be downloaded here.

Contact:

Bruno Artacho:

Website: https://www.brunoartacho.com

Andreas Savakis:

Website: https://www.rit.edu/directory/axseec-andreas-savakis

Citation:

Artacho, B.; Savakis, A. UniPose: Unified Human Pose Estimation in Single Images and Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

BibTeX:

@InProceedings{Artacho_2020_CVPR,
  title = {UniPose: Unified Human Pose Estimation in Single Images and Videos},
  author = {Artacho, Bruno and Savakis, Andreas},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2020},
}