BuildBuddy is an open source Bazel build event viewer, result store, and remote cache. It helps you collect, view, share and debug build events in a user-friendly web UI.

It's written in Golang and React and can be deployed as a Docker image. It's available as a cloud-hosted service, and can also be deployed to your own cloud provider or run on-prem. BuildBuddy's core is open sourced in this repo under the MIT License.

Getting started with BuildBuddy is simple. Just add these two lines to your .bazelrc file.

.bazelrc

build --bes_results_url=https://app.buildbuddy.io/invocation/

build --bes_backend=grpcs://remote.buildbuddy.io

This will print a BuildBuddy URL linking to your build results at the beginning and end of every Bazel invocation. You can Command-click or double-click these URLs to open the results in a browser.

Want more? Get up and running quickly with our fully managed BuildBuddy Cloud service. It's free for individuals, open source projects, and teams of up to 3.

If you'd like to host your own instance on-prem or in the cloud, check out our documentation.

Our documentation gives you a full look at how to set up and configure BuildBuddy.

If you have any questions, join the BuildBuddy Slack channel or e-mail us at [email protected]. We’d love to chat!

- Build summary & logs - a high-level overview of the build, including who initiated it, how long it took, how many targets were affected, etc. The build log makes it easy to share stack traces and errors with teammates, which simplifies collaborative debugging.

- Target overview - quickly see which targets and tests passed / failed and dig into more details about them.

- Detailed timing information - BuildBuddy invocations include a "Timing" tab, which pulls the Bazel profile logs from your build cache and displays them in a human-readable format.

- Invocation details - see all of the explicit flags, implicit options, and environment variables that affect your build. This is particularly useful when a build works on one machine but not another: you can compare the two invocations and see what's different.

- Build artifacts - get a quick view of all of the build artifacts generated by this invocation so you can easily access them. Clicking on a build artifact downloads it when using either the built-in BuildBuddy cache or a third-party cache running in gRPC mode that supports the bytestream API, like bazel-remote.

-

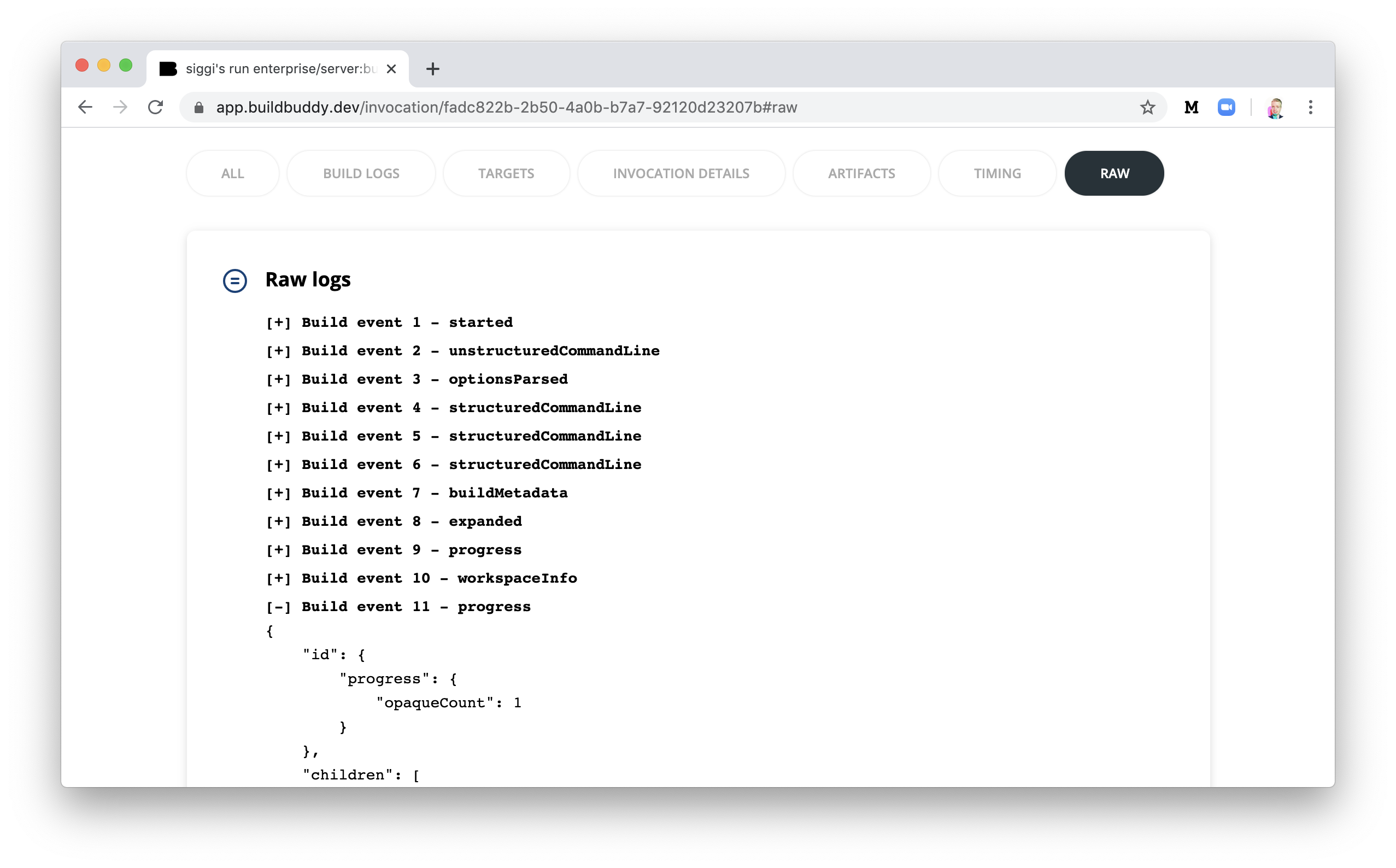

Raw logs - you can really dig into the details here. This is a complete view of all of the events that get sent up via Bazel's build event protocol. If you find yourself digging in here too much, let us know and we'll surface that info in a nicer UI.

- Remote cache support - BuildBuddy comes with an optional built-in Bazel remote cache that implements the gRPC remote caching APIs. This allows BuildBuddy to optionally collect build artifacts, timing profile information, test logs, and more. Alternatively, BuildBuddy supports third-party caches running in gRPC mode that support the bytestream API, like bazel-remote.

- Viewable test logs - BuildBuddy surfaces test logs directly in the UI when you click on a test target (gRPC remote cache required).

- Dense UI mode - if you want more information density, BuildBuddy has a "Dense mode" that packs more information into every square inch.

-

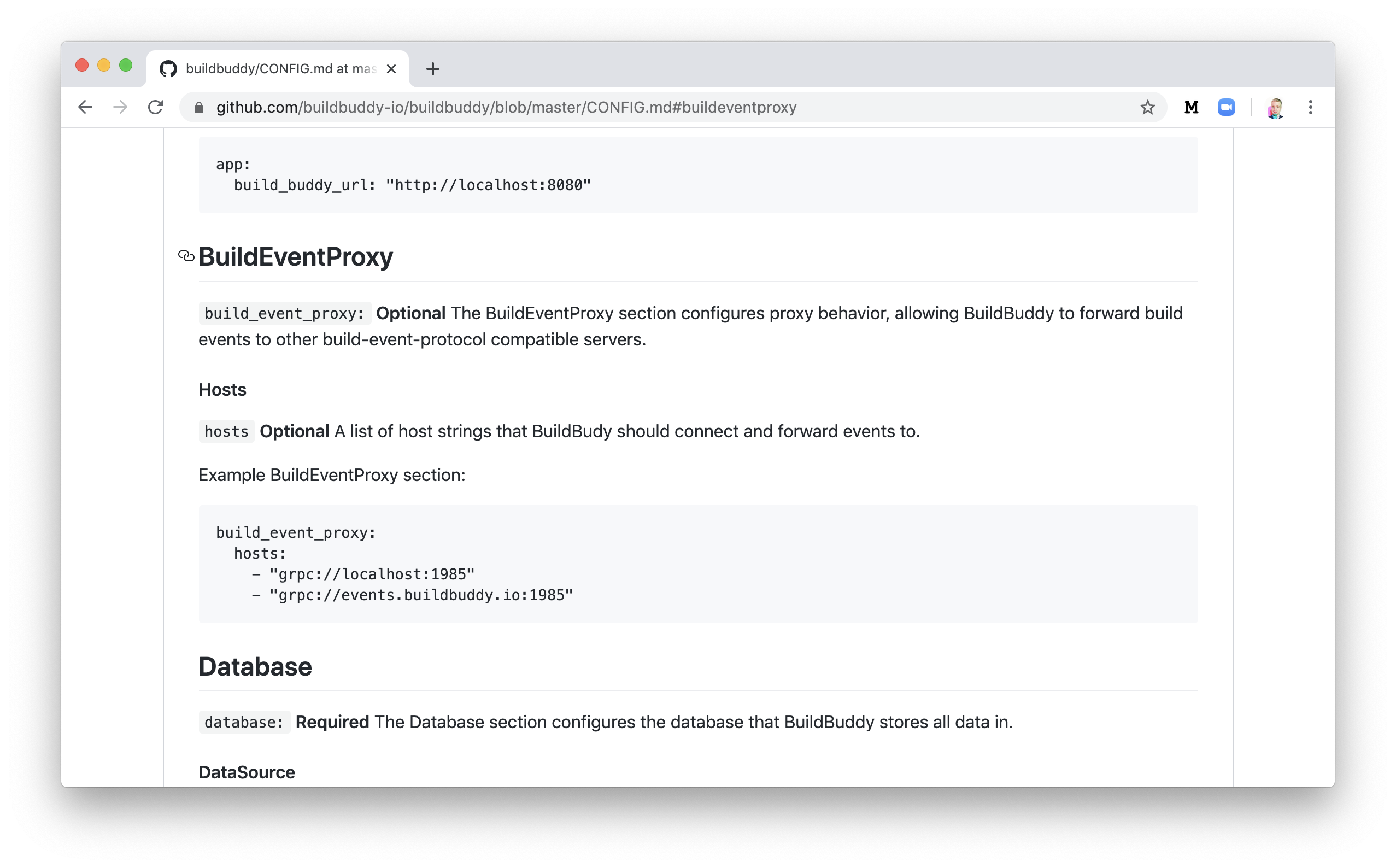

BES backend multiplexing - if you're already pointing your

bes_backendflag at another service. BuildBuddy has abuild_event_proxyconfiguration option that allows you to specify other backends that your build events should be forwarded to. See the configuration docs for more information. -

Slack webhook support - BuildBuddy allows you to message a Slack channel when builds finish. It's a nice way of getting a quick notification when a long running build completes, or a CI build fails. See the configuration docs for more information.
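
As a rough sketch of the remote cache support described above, the cache can be enabled with Bazel's standard `--remote_cache` flag alongside the two getting-started lines. The cache endpoint shown here is an assumption: it simply reuses the `remote.buildbuddy.io` host from `--bes_backend`, so check the documentation for the address that applies to your deployment.

```
# .bazelrc
# Build event upload, as in the getting-started snippet.
build --bes_results_url=https://app.buildbuddy.io/invocation/
build --bes_backend=grpcs://remote.buildbuddy.io

# Assumption: the remote cache is served from the same host as the BES backend.
build --remote_cache=grpcs://remote.buildbuddy.io
```

With a gRPC cache configured, features that depend on it, such as downloadable build artifacts, the "Timing" tab, and viewable test logs, have the data they need.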