Learning to drive from a world on rails

Dian Chen, Vladlen Koltun, Philipp Krähenbühl

arXiv technical report (arXiv 2105.00636)

This repo contains code for our paper Learning to drive from a world on rails.

ProcGen code coming soon.

If you find our repo or paper useful, please cite us as

@inproceedings{chen2021learning,

title={Learning to drive from a world on rails},

author={Chen, Dian and Koltun, Vladlen and Kr{\"a}henb{\"u}hl, Philipp},

booktitle={ICCV},

year={2021}

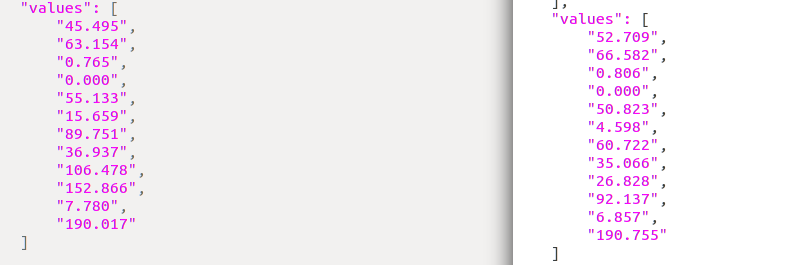

}- We have released the pre-computed Q values in our dataset! Check DATASET.md for details.

- Check out our website for demo videos!

- To run CARLA and train the models, make sure you are using a machine with at least a mid-range GPU.

- Please follow INSTALL.md to set up the environment.

- Please refer to RAILS.md on how to train our World-on-Rails agent.

- Please refer to LBC.md on how to train the LBC agent.

If you are evaluating the pretrained weights, make sure you launch CARLA with -vulkan!
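A minimal sketch of starting the CARLA server with the Vulkan backend from Python. It assumes a Linux CARLA package whose launcher script is CarlaUE4.sh; the install location (carla_root, and the CARLA_ROOT environment variable used in the demo) is a hypothetical placeholder, and the helper names are illustrative, not part of this repo.

```python
import os
import subprocess


def carla_launch_command(carla_root):
    """Return the argv that starts the CARLA server with the Vulkan backend.

    Assumption: carla_root is a hypothetical path to a Linux CARLA package
    that ships the CarlaUE4.sh launcher.
    """
    return [os.path.join(carla_root, "CarlaUE4.sh"), "-vulkan"]


def launch_carla(carla_root):
    """Start the server in the background; call .terminate() on the result when done."""
    return subprocess.Popen(carla_launch_command(carla_root))


if __name__ == "__main__":
    # CARLA_ROOT is a hypothetical environment variable used only for this demo.
    print(carla_launch_command(os.environ.get("CARLA_ROOT", "/opt/carla")))
```

Building the argument list separately from launching keeps the command easy to inspect before actually starting the simulator.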

Leaderboard models:

python evaluate.py --agent-config=[PATH TO CONFIG]

NoCrash models:

python evaluate_nocrash.py --town={Town01,Town02} --weather={train, test} --agent-config=[PATH TO CONFIG] --resume

- Use the default --agent for RAILS, and --agent=autoagents/lbc_agent for LBC.
- To print a readable table, use

python -m scripts.view_nocrash_results [PATH TO CONFIG.YAML]

We also release the data we trained on for the leaderboard. Check out DATASET.md for more details.
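The NoCrash command takes one town and one weather split per run, where the braces list the allowed values. A hypothetical helper like the one below (not part of this repo) enumerates all four town/weather combinations; the config path is a placeholder, and passing agent appends the --agent flag, as needed for LBC.

```python
import itertools
import shlex

# Allowed values taken from the evaluate_nocrash.py command line above.
TOWNS = ["Town01", "Town02"]
WEATHERS = ["train", "test"]


def nocrash_commands(agent_config, agent=None):
    """Build one evaluate_nocrash.py invocation per (town, weather) pair.

    Hypothetical helper: agent_config is a placeholder config path, and
    agent (e.g. "autoagents/lbc_agent") is only added when given, so RAILS
    keeps the script's default agent.
    """
    cmds = []
    for town, weather in itertools.product(TOWNS, WEATHERS):
        cmd = [
            "python", "evaluate_nocrash.py",
            f"--town={town}",
            f"--weather={weather}",
            f"--agent-config={agent_config}",
            "--resume",
        ]
        if agent is not None:
            cmd.append(f"--agent={agent}")
        cmds.append(cmd)
    return cmds


if __name__ == "__main__":
    for cmd in nocrash_commands("path/to/config.yaml"):
        # shlex.join (Python 3.8+) renders a shell-safe command string.
        print(shlex.join(cmd))
```

Each printed line can be run directly, or each list can be passed to subprocess.run(cmd, check=True) to run the sweep sequentially.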

The leaderboard code is built on the original leaderboard repo.

The scenario_runner code is from the original scenario_runner repo.

The GPS coordinate conversion code in waypointer.py is built on Marin Toromanoff's leaderboard submission.

This repo is released under the MIT License (please refer to the LICENSE file for details). The leaderboard repo, on which our leaderboard folder builds, is also under the MIT License.