ESPnet is an end-to-end speech processing toolkit covering end-to-end speech recognition, text-to-speech, speech translation, speech enhancement, speaker diarization, spoken language understanding, and so on. ESPnet uses PyTorch as its deep learning engine and follows Kaldi-style data processing, feature extraction/format, and recipes to provide a complete setup for various speech processing experiments.

- 2019 Tutorial at Interspeech

- 2021 Tutorial at CMU

- 2022 Tutorial at CMU

- Usage of ESPnet (ASR as an example)

- Add new models/tasks to ESPnet

- Support numbers of ASR recipes (WSJ, Switchboard, CHiME-4/5, Librispeech, TED, CSJ, AMI, HKUST, Voxforge, REVERB, Gigaspeech, etc.)

- Support numbers of TTS recipes in a similar manner to the ASR recipe (LJSpeech, LibriTTS, M-AILABS, etc.)

- Support numbers of ST recipes (Fisher-CallHome Spanish, Libri-trans, IWSLT'18, How2, Must-C, Mboshi-French, etc.)

- Support numbers of MT recipes (IWSLT'14, IWSLT'16, the above ST recipes etc.)

- Support numbers of SLU recipes (CATSLU-MAPS, FSC, Grabo, IEMOCAP, JDCINAL, SNIPS, SLURP, SWBD-DA, etc.)

- Support numbers of SE/SS recipes (DNS-IS2020, LibriMix, SMS-WSJ, VCTK-noisyreverb, WHAM!, WHAMR!, WSJ-2mix, etc.)

- Support voice conversion recipe (VCC2020 baseline)

- Support speaker diarization recipe (mini_librispeech, librimix)

- Support singing voice synthesis recipe (ofuton_p_utagoe_db, opencpop, m4singer, etc.)

- State-of-the-art performance in several ASR benchmarks (comparable/superior to hybrid DNN/HMM and CTC)

- Hybrid CTC/attention based end-to-end ASR

- Fast/accurate training with CTC/attention multitask training

- CTC/attention joint decoding to boost monotonic alignment decoding

- Encoder: VGG-like CNN + BiRNN (LSTM/GRU), sub-sampling BiRNN (LSTM/GRU), Transformer, Conformer, Branchformer, or E-Branchformer

- Decoder: RNN (LSTM/GRU), Transformer, or S4

- Attention: Dot product, location-aware attention, variants of multi-head

- Incorporate RNNLM/LSTMLM/TransformerLM/N-gram trained only with text data

- Batch GPU decoding

- Data augmentation

- Transducer based end-to-end ASR

- Architecture:

- Search algorithms:

- Greedy search constrained to one emission by timestep.

- Default beam search algorithm [Graves, 2012] without prefix search.

- Alignment-Length Synchronous decoding [Saon et al., 2020].

- Time Synchronous Decoding [Saon et al., 2020].

- N-step Constrained beam search modified from [Kim et al., 2020].

- modified Adaptive Expansion Search based on [Kim et al., 2021] and NSC.

- Features:

- Unified interface for offline and streaming speech recognition.

- Multi-task learning with various auxiliary losses:

- Encoder: CTC, auxiliary Transducer and symmetric KL divergence.

- Decoder: cross-entropy w/ label smoothing.

- Transfer learning with an acoustic model and/or language model.

- Training with FastEmit regularization method [Yu et al., 2021].

Please refer to the tutorial page for complete documentation.

- CTC segmentation

- Non-autoregressive model based on Mask-CTC

- ASR examples for supporting endangered language documentation (Please refer to egs/puebla_nahuatl and egs/yoloxochitl_mixtec for details)

- Wav2Vec2.0 pre-trained model as Encoder, imported from FairSeq.

- Self-supervised learning representations as features, using upstream models in S3PRL in frontend.

- Set frontend to s3prl

- Select any upstream model by setting the frontend_conf to the corresponding name.

- Transfer Learning:

- Easy usage and transfer from models previously trained by your group or from models in the ESPnet Hugging Face repository.

- Documentation and toy example runnable on colab.

- Streaming Transformer/Conformer ASR with blockwise synchronous beam search.

- Restricted Self-Attention based on Longformer as an encoder for long sequences

- OpenAI Whisper model, robust ASR based on large-scale, weakly-supervised multitask learning
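The hybrid CTC/attention training and joint decoding listed above both rest on a linear interpolation of a CTC term and an attention term. The following is a minimal sketch of that idea only: the function names, the argument names, and the default weight of 0.3 are illustrative assumptions, not ESPnet's actual API or recommended settings.

```python
def multitask_loss(loss_ctc: float, loss_att: float, ctc_weight: float = 0.3) -> float:
    """Interpolate the CTC loss and the attention loss (training objective)."""
    if not 0.0 <= ctc_weight <= 1.0:
        raise ValueError("ctc_weight must be in [0, 1]")
    return ctc_weight * loss_ctc + (1.0 - ctc_weight) * loss_att


def joint_score(logp_ctc, logp_att, ctc_weight=0.3, logp_lm=0.0, lm_weight=0.0):
    """Score one hypothesis in joint decoding; all inputs are log-probabilities.

    The optional language model term corresponds to fusing an LM trained
    only on text data.
    """
    return ctc_weight * logp_ctc + (1.0 - ctc_weight) * logp_att + lm_weight * logp_lm


# Hypothetical per-batch loss values, used only to show the call.
print(multitask_loss(loss_ctc=10.0, loss_att=20.0, ctc_weight=0.3))
```

Setting the weight to 0 reduces both functions to the pure attention model, and setting it to 1 reduces them to pure CTC.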

Demonstration

- Real-time ASR demo with ESPnet2

- Gradio Web Demo on Hugging Face Spaces. Check out the Web Demo

- Streaming Transformer ASR Local Demo with ESPnet2.

- Architecture

- Tacotron2

- Transformer-TTS

- FastSpeech

- FastSpeech2

- Conformer FastSpeech & FastSpeech2

- VITS

- JETS

- Multi-speaker & multi-language extension

- Pre-trained speaker embedding (e.g., X-vector)

- Speaker ID embedding

- Language ID embedding

- Global style token (GST) embedding

- Mix of the above embeddings

- End-to-end training

- End-to-end text-to-wav model (e.g., VITS, JETS, etc.)

- Joint training of text2mel and vocoder

- Various language support

- En / Jp / Zh / De / Ru / And more...

- Integration with neural vocoders

- Parallel WaveGAN

- MelGAN

- Multi-band MelGAN

- HiFiGAN

- StyleMelGAN

- Mix of the above models

Demonstration

- Real-time TTS demo with ESPnet2

- Integrated to Hugging Face Spaces with Gradio. See demo:

To train the neural vocoder, please check the following repositories:

- Single-speaker speech enhancement

- Multi-speaker speech separation

- Unified encoder-separator-decoder structure for time-domain and frequency-domain models

- Encoder/Decoder: STFT/iSTFT, Convolution/Transposed-Convolution

- Separators: BLSTM, Transformer, Conformer, TasNet, DPRNN, SkiM, SVoice, DC-CRN, DCCRN, Deep Clustering, Deep Attractor Network, FaSNet, iFaSNet, Neural Beamformers, etc.

- Flexible ASR integration: working as an individual task or as the ASR frontend

- Easy to import pre-trained models from Asteroid

- Both the pre-trained models from Asteroid and the specific configuration are supported.

Demonstration

- State-of-the-art performance in several ST benchmarks (comparable/superior to cascaded ASR and MT)

- Transformer-based end-to-end ST (new!)

- Transformer-based end-to-end MT (new!)

- Transformer and Tacotron2-based parallel VC using Mel spectrogram

- End-to-end VC based on cascaded ASR+TTS (Baseline system for Voice Conversion Challenge 2020!)

- Architecture

- Transformer-based Encoder

- Conformer-based Encoder

- Branchformer based Encoder

- E-Branchformer based Encoder

- RNN based Decoder

- Transformer-based Decoder

- Support Multitasking with ASR

- Predict both intent and ASR transcript

- Support Multitasking with NLU

- Deliberation encoder based 2 pass model

- Support using pre-trained ASR models

- Hubert

- Wav2vec2

- VQ-APC

- TERA and more ...

- Support using pre-trained NLP models

- BERT

- MPNet And more...

- Various language support

- En / Jp / Zh / Nl / And more...

- Supports using context from previous utterances

- Supports using other tasks like SE in a pipeline manner

- Supports Two Pass SLU that combines audio and ASR transcript

Demonstration

- Performing noisy spoken language understanding using a speech enhancement model followed by a spoken language understanding model.

- Performing two-pass spoken language understanding where the second pass model attends to both acoustic and semantic information.

- Integrated to Hugging Face Spaces with Gradio. See SLU demo on multiple languages:

- End to End Speech Summarization Recipe for Instructional Videos using Restricted Self-Attention [Sharma et al., 2022]

- Framework merge from Muskits

- Architecture

- RNN-based non-autoregressive model

- Xiaoice

- Tacotron-singing

- DiffSinger (in progress)

- VISinger

- VISinger 2 (and its variations with different vocoder architectures)

- Support multi-speaker & multilingual singing synthesis

- Speaker ID embedding

- Language ID embedding

- Various language support

- Jp / En / Kr / Zh

- Tight integration with neural vocoders (the same as TTS)

- Support HuBERT Pre-training:

- Example recipe: egs2/LibriSpeech/ssl1

- Architecture

- wav2vec-U (with different self-supervised models)

- wav2vec-U 2.0 (in progress)

- Support PrefixBeamSearch and K2-based WFST decoding

- Reproduces Whisper-style training from scratch using public data: OWSM

- Supports multiple tasks in a single model

- Multilingual speech recognition

- Any-to-any speech translation

- Language identification

- Utterance-level timestamp prediction (segmentation)

- Flexible network architecture thanks to Chainer and PyTorch

- Flexible front-end processing thanks to kaldiio and HDF5 support

- Tensorboard-based monitoring

See ESPnet2.

- Independent from Kaldi/Chainer, unlike ESPnet1

- On-the-fly feature extraction and text processing when training

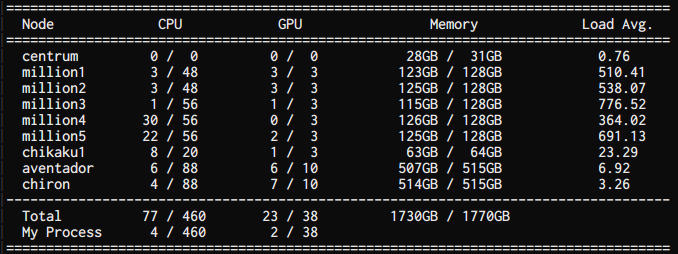

- Supporting both DistributedDataParallel and DataParallel

- Supporting multiple nodes training and integrated with Slurm or MPI

- Supporting Sharded Training provided by fairscale

- A template recipe that can be applied to all corpora

- Possible to train any size of corpus without CPU memory error

- ESPnet Model Zoo

- Integrated with wandb

- If you intend to do full experiments, including DNN training, then see Installation.

- If you just need the Python module only:

# We recommend you install PyTorch before installing espnet following https://pytorch.org/get-started/locally/

pip install espnet

# To install the latest

# pip install git+https://github.com/espnet/espnet

# To install additional packages

# pip install "espnet[all]"

If you use ESPnet1, please install chainer and cupy.

pip install chainer==6.0.0 cupy==6.0.0 # [Option]

You might need to install some packages depending on each task. We prepared various installation scripts at tools/installers.

- (ESPnet2) Once installed, run wandb login and set --use_wandb true to enable tracking runs using W&B.

Go to docker/ and follow the instructions.

Thank you for taking the time for ESPnet! Any contributions to ESPnet are welcome, and feel free to post any questions or requests in the issues. If it's your first ESPnet contribution, please follow the contribution guide.


We list the character error rate (CER) and word error rate (WER) of major ASR tasks.

| Task | CER (%) | WER (%) | Pre-trained model |

|---|---|---|---|

| Aishell dev/test | 4.6/5.1 | N/A | link |

| ESPnet2 Aishell dev/test | 4.1/4.4 | N/A | link |

| Common Voice dev/test | 1.7/1.8 | 2.2/2.3 | link |

| CSJ eval1/eval2/eval3 | 5.7/3.8/4.2 | N/A | link |

| ESPnet2 CSJ eval1/eval2/eval3 | 4.5/3.3/3.6 | N/A | link |

| ESPnet2 GigaSpeech dev/test | N/A | 10.6/10.5 | link |

| HKUST dev | 23.5 | N/A | link |

| ESPnet2 HKUST dev | 21.2 | N/A | link |

| Librispeech dev_clean/dev_other/test_clean/test_other | N/A | 1.9/4.9/2.1/4.9 | link |

| ESPnet2 Librispeech dev_clean/dev_other/test_clean/test_other | 0.6/1.5/0.6/1.4 | 1.7/3.4/1.8/3.6 | link |

| Switchboard (eval2000) callhm/swbd | N/A | 14.0/6.8 | link |

| ESPnet2 Switchboard (eval2000) callhm/swbd | N/A | 13.4/7.3 | link |

| TEDLIUM2 dev/test | N/A | 8.6/7.2 | link |

| ESPnet2 TEDLIUM2 dev/test | N/A | 7.3/7.1 | link |

| TEDLIUM3 dev/test | N/A | 9.6/7.6 | link |

| WSJ dev93/eval92 | 3.2/2.1 | 7.0/4.7 | N/A |

| ESPnet2 WSJ dev93/eval92 | 1.1/0.8 | 2.8/1.8 | link |

Note that the performance of the CSJ, HKUST, and Librispeech tasks was significantly improved by using a wide network (#units = 1024) and, where necessary, large subword units, as reported by RWTH.

If you want to check the results of the other recipes, please check egs/<name_of_recipe>/asr1/RESULTS.md.
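For readers unfamiliar with the two metrics in the table above, the sketch below shows how WER and CER are commonly computed: the edit distance between reference and hypothesis, divided by the reference length. It is an illustration, not the scoring tool used by the recipes; the function names are hypothetical, and ignoring spaces for CER is an assumption that varies between setups.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(ref, hyp):
    """Edit distance divided by the reference length, in percent."""
    if len(ref) == 0:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(ref, hyp) / len(ref)


ref = "THE SALE OF THE HOTELS"
hyp = "THE SALE OF HOTELS"  # one word missing

wer = error_rate(ref.split(), hyp.split())  # word-level tokens
cer = error_rate(list(ref.replace(" ", "")), list(hyp.replace(" ", "")))  # characters, spaces ignored
print(f"WER = {wer:.1f}%, CER = {cer:.1f}%")  # WER = 20.0%, CER = 16.7%
```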


You can recognize speech in a WAV file using pre-trained models.

Go to a recipe directory and run utils/recog_wav.sh as follows:

# go to the recipe directory and source path of espnet tools

cd egs/tedlium2/asr1 && . ./path.sh

# let's recognize speech!

recog_wav.sh --models tedlium2.transformer.v1 example.wav

where example.wav is a WAV file to be recognized.

The sampling rate must be consistent with that of data used in training.
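A quick way to verify the sampling rate before running recognition is to read it from the WAV header. The sketch below uses only Python's standard wave module; the helper names and the 16000 Hz value in the usage note are assumptions for illustration, so check the recipe of the model you use for its actual rate.

```python
import wave


def wav_sample_rate(path: str) -> int:
    """Return the sampling rate (Hz) stored in a PCM WAV header."""
    with wave.open(path, "rb") as f:
        return f.getframerate()


def check_sample_rate(path: str, expected_hz: int) -> None:
    """Raise ValueError if the file's rate differs from the expected rate."""
    actual = wav_sample_rate(path)
    if actual != expected_hz:
        raise ValueError(
            f"{path}: {actual} Hz, but the model expects {expected_hz} Hz; resample first"
        )
```

For example, check_sample_rate("example.wav", 16000) returns silently when the file matches and raises otherwise.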

Available pre-trained models in the demo script are listed below.

| Model | Notes |

|---|---|

| tedlium2.rnn.v1 | Streaming decoding based on CTC-based VAD |

| tedlium2.rnn.v2 | Streaming decoding based on CTC-based VAD (batch decoding) |

| tedlium2.transformer.v1 | Joint-CTC attention Transformer trained on Tedlium 2 |

| tedlium3.transformer.v1 | Joint-CTC attention Transformer trained on Tedlium 3 |

| librispeech.transformer.v1 | Joint-CTC attention Transformer trained on Librispeech |

| commonvoice.transformer.v1 | Joint-CTC attention Transformer trained on CommonVoice |

| csj.transformer.v1 | Joint-CTC attention Transformer trained on CSJ |

| csj.rnn.v1 | Joint-CTC attention VGGBLSTM trained on CSJ |


We list results from three different models on WSJ0-2mix, which is one of the most widely used benchmark datasets for speech separation.

| Model | STOI | SAR | SDR | SIR |

|---|---|---|---|---|

| TF Masking | 0.89 | 11.40 | 10.24 | 18.04 |

| Conv-Tasnet | 0.95 | 16.62 | 15.94 | 25.90 |

| DPRNN-Tasnet | 0.96 | 18.82 | 18.29 | 28.92 |


We list 4-gram BLEU of major ST tasks.

| Task | BLEU | Pre-trained model |

|---|---|---|

| Fisher-CallHome Spanish fisher_test (Es->En) | 51.03 | link |

| Fisher-CallHome Spanish callhome_evltest (Es->En) | 20.44 | link |

| Libri-trans test (En->Fr) | 16.70 | link |

| How2 dev5 (En->Pt) | 45.68 | link |

| Must-C tst-COMMON (En->De) | 22.91 | link |

| Mboshi-French dev (Fr->Mboshi) | 6.18 | N/A |

| Task | BLEU | Pre-trained model |

|---|---|---|

| Fisher-CallHome Spanish fisher_test (Es->En) | 42.16 | N/A |

| Fisher-CallHome Spanish callhome_evltest (Es->En) | 19.82 | N/A |

| Libri-trans test (En->Fr) | 16.96 | N/A |

| How2 dev5 (En->Pt) | 44.90 | N/A |

| Must-C tst-COMMON (En->De) | 23.65 | N/A |

If you want to check the results of the other recipes, please check egs/<name_of_recipe>/st1/RESULTS.md.


(New!) We made a new real-time E2E-ST + TTS demonstration in Google Colab. Please access the notebook from the following button and enjoy the real-time speech-to-speech translation!

You can translate speech in a WAV file using pre-trained models.

Go to a recipe directory and run utils/translate_wav.sh as follows:

# Go to recipe directory and source path of espnet tools

cd egs/fisher_callhome_spanish/st1 && . ./path.sh

# download example wav file

wget -O - https://github.com/espnet/espnet/files/4100928/test.wav.tar.gz | tar zxvf -

# let's translate speech!

translate_wav.sh --models fisher_callhome_spanish.transformer.v1.es-en test.wav

where test.wav is a WAV file to be translated.

The sampling rate must be consistent with that of data used in training.

Available pre-trained models in the demo script are listed below.

| Model | Notes |

|---|---|

| fisher_callhome_spanish.transformer.v1 | Transformer-ST trained on Fisher-CallHome Spanish Es->En |


| Task | BLEU | Pre-trained model |

|---|---|---|

| Fisher-CallHome Spanish fisher_test (Es->En) | 61.45 | link |

| Fisher-CallHome Spanish callhome_evltest (Es->En) | 29.86 | link |

| Libri-trans test (En->Fr) | 18.09 | link |

| How2 dev5 (En->Pt) | 58.61 | link |

| Must-C tst-COMMON (En->De) | 27.63 | link |

| IWSLT'14 test2014 (En->De) | 24.70 | link |

| IWSLT'14 test2014 (De->En) | 29.22 | link |

| IWSLT'14 test2014 (De->En) | 32.2 | link |

| IWSLT'16 test2014 (En->De) | 24.05 | link |

| IWSLT'16 test2014 (De->En) | 29.13 | link |

ESPnet2

You can listen to the generated samples in the following URL.

Note that in the generation, we use Griffin-Lim (wav/) and Parallel WaveGAN (wav_pwg/).

You can download pre-trained models via espnet_model_zoo.

You can download pre-trained vocoders via kan-bayashi/ParallelWaveGAN.

ESPnet1

NOTE: We are moving to ESPnet2-based development for TTS. Please check the latest results in the above ESPnet2 results.

You can listen to our samples on the demo page espnet-tts-sample. Here we list some notable ones:

- Single English speaker Tacotron2

- Single Japanese speaker Tacotron2

- Single other language speaker Tacotron2

- Multi English speaker Tacotron2

- Single English speaker Transformer

- Single English speaker FastSpeech

- Multi English speaker Transformer

- Single Italian speaker FastSpeech

- Single Mandarin speaker Transformer

- Single Mandarin speaker FastSpeech

- Multi Japanese speaker Transformer

- Single English speaker models with Parallel WaveGAN

- Single English speaker knowledge distillation-based FastSpeech

You can download all of the pre-trained models and generated samples:

Note that in the generated samples, we use the following vocoders: Griffin-Lim (GL), WaveNet vocoder (WaveNet), Parallel WaveGAN (ParallelWaveGAN), and MelGAN (MelGAN). The neural vocoders are based on the following repositories.

- kan-bayashi/ParallelWaveGAN: Parallel WaveGAN / MelGAN / Multi-band MelGAN

- r9y9/wavenet_vocoder: 16-bit mixture-of-logistics WaveNet vocoder

- kan-bayashi/PytorchWaveNetVocoder: 8-bit softmax WaveNet vocoder with noise shaping

If you want to build your own neural vocoder, please check the above repositories. kan-bayashi/ParallelWaveGAN provides a manual on how to decode ESPnet-TTS model features with neural vocoders.

Here we list all of the pre-trained neural vocoders. Please download and enjoy the generation of high-quality speech!

| Model link | Lang | Fs [Hz] | Mel range [Hz] | FFT / Shift / Win [pt] | Model type |

|---|---|---|---|---|---|

| ljspeech.wavenet.softmax.ns.v1 | EN | 22.05k | None | 1024 / 256 / None | Softmax WaveNet |

| ljspeech.wavenet.mol.v1 | EN | 22.05k | None | 1024 / 256 / None | MoL WaveNet |

| ljspeech.parallel_wavegan.v1 | EN | 22.05k | None | 1024 / 256 / None | Parallel WaveGAN |

| ljspeech.wavenet.mol.v2 | EN | 22.05k | 80-7600 | 1024 / 256 / None | MoL WaveNet |

| ljspeech.parallel_wavegan.v2 | EN | 22.05k | 80-7600 | 1024 / 256 / None | Parallel WaveGAN |

| ljspeech.melgan.v1 | EN | 22.05k | 80-7600 | 1024 / 256 / None | MelGAN |

| ljspeech.melgan.v3 | EN | 22.05k | 80-7600 | 1024 / 256 / None | MelGAN |

| libritts.wavenet.mol.v1 | EN | 24k | None | 1024 / 256 / None | MoL WaveNet |

| jsut.wavenet.mol.v1 | JP | 24k | 80-7600 | 2048 / 300 / 1200 | MoL WaveNet |

| jsut.parallel_wavegan.v1 | JP | 24k | 80-7600 | 2048 / 300 / 1200 | Parallel WaveGAN |

| csmsc.wavenet.mol.v1 | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | MoL WaveNet |

| csmsc.parallel_wavegan.v1 | ZH | 24k | 80-7600 | 2048 / 300 / 1200 | Parallel WaveGAN |

If you want to use the above pre-trained vocoders, make sure your feature settings exactly match theirs.
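One way to guard against a feature mismatch is to compare the TTS feature settings with the vocoder's row in the table above before decoding. The sketch below does this for jsut.parallel_wavegan.v1 (24 kHz, mel range 80-7600 Hz, FFT / shift / window of 2048 / 300 / 1200 points). The dictionary key names and the helper are hypothetical and do not correspond to a specific ESPnet config file.

```python
# Feature settings of jsut.parallel_wavegan.v1, copied from the table above.
# The key names are illustrative only.
VOCODER_SETTINGS = {
    "fs": 24000,
    "fmin": 80,
    "fmax": 7600,
    "n_fft": 2048,
    "n_shift": 300,
    "win_length": 1200,
}


def find_mismatches(tts_settings: dict, vocoder_settings: dict) -> dict:
    """Return {key: (tts_value, vocoder_value)} for every setting that differs."""
    return {
        key: (tts_settings.get(key), expected)
        for key, expected in vocoder_settings.items()
        if tts_settings.get(key) != expected
    }


# A hypothetical TTS setup whose frame shift does not match the vocoder.
tts_settings = {
    "fs": 24000,
    "fmin": 80,
    "fmax": 7600,
    "n_fft": 2048,
    "n_shift": 256,
    "win_length": 1200,
}
print(find_mismatches(tts_settings, VOCODER_SETTINGS))  # {'n_shift': (256, 300)}
```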

ESPnet2

ESPnet1

NOTE: We are moving to ESPnet2-based development for TTS. Please check the latest demo in the above ESPnet2 demo.

You can try the real-time demo in Google Colab. Please access the notebook from the following button and enjoy the real-time synthesis.

We also provide a shell script to perform synthesis.

Go to a recipe directory and run utils/synth_wav.sh as follows:

# Go to recipe directory and source path of espnet tools

cd egs/ljspeech/tts1 && . ./path.sh

# We use an upper-case char sequence for the default model.

echo "THIS IS A DEMONSTRATION OF TEXT TO SPEECH." > example.txt

# let's synthesize speech!

synth_wav.sh example.txt

# Also, you can use multiple sentences

echo "THIS IS A DEMONSTRATION OF TEXT TO SPEECH." > example_multi.txt

echo "TEXT TO SPEECH IS A TECHNIQUE TO CONVERT TEXT INTO SPEECH." >> example_multi.txt

synth_wav.sh example_multi.txt

You can change the pre-trained model as follows:

synth_wav.sh --models ljspeech.fastspeech.v1 example.txt

Waveform synthesis is performed with the Griffin-Lim algorithm and neural vocoders (WaveNet and ParallelWaveGAN). You can change the pre-trained vocoder model as follows:

synth_wav.sh --vocoder_models ljspeech.wavenet.mol.v1 example.txt

WaveNet vocoder provides very high-quality speech, but it takes time to generate.

See more details or available models via --help.

synth_wav.sh --help
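Because the default model expects an upper-case character sequence, it can help to normalize the input text before writing the file passed to synth_wav.sh. The helper below is a hypothetical convenience sketch, not part of ESPnet; it only upper-cases each sentence and writes one sentence per line, as in the shell example above.

```python
from pathlib import Path


def write_synth_input(sentences, path="example.txt"):
    """Write one upper-cased sentence per line and return the written lines."""
    lines = [s.strip().upper() for s in sentences if s.strip()]
    Path(path).write_text("".join(line + "\n" for line in lines), encoding="utf-8")
    return lines
```

Calling write_synth_input(["This is a demonstration of text to speech."]) produces the same example.txt as the echo command above.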

- Transformer and Tacotron2-based VC

You can listen to some samples on the demo webpage.

- Cascade ASR+TTS as one of the baseline systems of VCC2020

The Voice Conversion Challenge 2020 (VCC2020) adopts ESPnet to build an end-to-end based baseline system. In VCC2020, the objective is intra/cross-lingual nonparallel VC. You can download converted samples of the cascade ASR+TTS baseline system here.


We list the performance on various SLU tasks and datasets using the metric reported in the original dataset paper

| Task | Dataset | Metric | Result | Pre-trained Model |

|---|---|---|---|---|

| Intent Classification | SLURP | Acc | 86.3 | link |

| Intent Classification | FSC | Acc | 99.6 | link |

| Intent Classification | FSC Unseen Speaker Set | Acc | 98.6 | link |

| Intent Classification | FSC Unseen Utterance Set | Acc | 86.4 | link |

| Intent Classification | FSC Challenge Speaker Set | Acc | 97.5 | link |

| Intent Classification | FSC Challenge Utterance Set | Acc | 78.5 | link |

| Intent Classification | SNIPS | F1 | 91.7 | link |

| Intent Classification | Grabo (Nl) | Acc | 97.2 | link |

| Intent Classification | CAT SLU MAP (Zh) | Acc | 78.9 | link |

| Intent Classification | Google Speech Commands | Acc | 98.4 | link |

| Slot Filling | SLURP | SLU-F1 | 71.9 | link |

| Dialogue Act Classification | Switchboard | Acc | 67.5 | link |

| Dialogue Act Classification | Jdcinal (Jp) | Acc | 67.4 | link |

| Emotion Recognition | IEMOCAP | Acc | 69.4 | link |

| Emotion Recognition | swbd_sentiment | Macro F1 | 61.4 | link |

| Emotion Recognition | slue_voxceleb | Macro F1 | 44.0 | link |

If you want to check the results of the other recipes, please check egs2/<name_of_recipe>/asr1/RESULTS.md.

ESPnet1

CTC segmentation determines utterance segments within audio files. Aligned utterance segments constitute the labels of speech datasets.

As a demo, we align the start and end of utterances within the audio file ctc_align_test.wav, using the example script utils/asr_align_wav.sh.

For preparation, set up a data directory:

cd egs/tedlium2/align1/

# data directory

align_dir=data/demo

mkdir -p ${align_dir}

# wav file

base=ctc_align_test

wav=../../../test_utils/${base}.wav

# recipe files

echo "batchsize: 0" > ${align_dir}/align.yaml

cat << EOF > ${align_dir}/utt_text

${base} THE SALE OF THE HOTELS

${base} IS PART OF HOLIDAY'S STRATEGY

${base} TO SELL OFF ASSETS

${base} AND CONCENTRATE

${base} ON PROPERTY MANAGEMENT

EOF

Here, utt_text is the file containing the list of utterances.

Choose a pre-trained ASR model that includes a CTC layer to find utterance segments:

# pre-trained ASR model

model=wsj.transformer_small.v1

mkdir ./conf && cp ../../wsj/asr1/conf/no_preprocess.yaml ./conf

../../../utils/asr_align_wav.sh \

--models ${model} \

--align_dir ${align_dir} \

--align_config ${align_dir}/align.yaml \

${wav} ${align_dir}/utt_text

Segments are written to aligned_segments as a list of file/utterance names, utterance start and end times in seconds, and a confidence score.

The confidence score is a probability in log space that indicates how well the utterance was aligned. If needed, remove bad utterances:

min_confidence_score=-5

awk -v ms=${min_confidence_score} '{ if ($5 > ms) {print} }' ${align_dir}/aligned_segments

The demo script utils/asr_align_wav.sh uses an already pre-trained ASR model (see the list above for more models).

For aligning large audio files, it is recommended to use models with RNN-based encoders (such as BLSTMP) rather than Transformer models, which have high memory consumption on longer audio data.

The sample rate of the audio must be consistent with that of the data used in training; adjust with sox if needed.

A full example recipe is in egs/tedlium2/align1/.

ESPnet2

CTC segmentation determines utterance segments within audio files. Aligned utterance segments constitute the labels of speech datasets.

As a demo, we align the start and end of utterances within the audio file ctc_align_test.wav.

This can be done either directly from the Python command line or using the script espnet2/bin/asr_align.py.

From the Python command line interface:

# load a model with character tokens

from espnet_model_zoo.downloader import ModelDownloader

d = ModelDownloader(cachedir="./modelcache")

wsjmodel = d.download_and_unpack("kamo-naoyuki/wsj")

# load the example file included in the ESPnet repository

import soundfile

speech, rate = soundfile.read("./test_utils/ctc_align_test.wav")

# CTC segmentation

from espnet2.bin.asr_align import CTCSegmentation

aligner = CTCSegmentation( **wsjmodel , fs=rate )

text = """

utt1 THE SALE OF THE HOTELS

utt2 IS PART OF HOLIDAY'S STRATEGY

utt3 TO SELL OFF ASSETS

utt4 AND CONCENTRATE ON PROPERTY MANAGEMENT

"""

segments = aligner(speech, text)

print(segments)

# utt1 utt 0.26 1.73 -0.0154 THE SALE OF THE HOTELS

# utt2 utt 1.73 3.19 -0.7674 IS PART OF HOLIDAY'S STRATEGY

# utt3 utt 3.19 4.20 -0.7433 TO SELL OFF ASSETS

# utt4 utt 4.20 6.10 -0.4899 AND CONCENTRATE ON PROPERTY MANAGEMENT

Aligning also works with fragments of the text.

For this, set the gratis_blank option that allows skipping unrelated audio sections without penalty.

It's also possible to omit the utterance names at the beginning of each line by setting kaldi_style_text to False.

aligner.set_config( gratis_blank=True, kaldi_style_text=False )

text = ["SALE OF THE HOTELS", "PROPERTY MANAGEMENT"]

segments = aligner(speech, text)

print(segments)

# utt_0000 utt 0.37 1.72 -2.0651 SALE OF THE HOTELS

# utt_0001 utt 4.70 6.10 -5.0566 PROPERTY MANAGEMENT

The script espnet2/bin/asr_align.py uses a similar interface. To align utterances:

# ASR model and config files from pre-trained model (e.g., from cachedir):

asr_config=<path-to-model>/config.yaml

asr_model=<path-to-model>/valid.*best.pth

# prepare the text file

wav="test_utils/ctc_align_test.wav"

text="test_utils/ctc_align_text.txt"

cat << EOF > ${text}

utt1 THE SALE OF THE HOTELS

utt2 IS PART OF HOLIDAY'S STRATEGY

utt3 TO SELL OFF ASSETS

utt4 AND CONCENTRATE

utt5 ON PROPERTY MANAGEMENT

EOF

# obtain alignments:

python espnet2/bin/asr_align.py --asr_train_config ${asr_config} --asr_model_file ${asr_model} --audio ${wav} --text ${text}

# utt1 ctc_align_test 0.26 1.73 -0.0154 THE SALE OF THE HOTELS

# utt2 ctc_align_test 1.73 3.19 -0.7674 IS PART OF HOLIDAY'S STRATEGY

# utt3 ctc_align_test 3.19 4.20 -0.7433 TO SELL OFF ASSETS

# utt4 ctc_align_test 4.20 4.97 -0.6017 AND CONCENTRATE

# utt5 ctc_align_test 4.97 6.10 -0.3477 ON PROPERTY MANAGEMENT

The output of the script can be redirected to a segments file by adding the argument --output segments.

Each line contains the file/utterance name, utterance start and end times in seconds, and a confidence score; optionally also the utterance text.

The confidence score is a probability in log space that indicates how well the utterance was aligned. If needed, remove bad utterances:

min_confidence_score=-7

# here, we assume that the output was written to the file `segments`

awk -v ms=${min_confidence_score} '{ if ($5 > ms) {print} }' segments

See the module documentation for more information.
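The awk filter above can also be written in Python, which is convenient when the segments are post-processed in the same script that produced them. This is a minimal sketch assuming the line format shown earlier (name, file, start, end, confidence score, optional text); the function name is hypothetical, and the stricter threshold of -5 in the usage lines is chosen only to make the example drop one line.

```python
def filter_segments(lines, min_confidence_score=-7.0):
    """Keep lines whose fifth whitespace-separated field exceeds the threshold."""
    kept = []
    for line in lines:
        fields = line.split()
        # Skip malformed lines that have no confidence column.
        if len(fields) >= 5 and float(fields[4]) > min_confidence_score:
            kept.append(line.rstrip("\n"))
    return kept


# The two fragment alignments printed in the example above.
example = [
    "utt_0000 utt 0.37 1.72 -2.0651 SALE OF THE HOTELS",
    "utt_0001 utt 4.70 6.10 -5.0566 PROPERTY MANAGEMENT",
]
for line in filter_segments(example, min_confidence_score=-5.0):
    print(line)  # only utt_0000 passes the -5 threshold
```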

For aligning large audio files, it is recommended to use models with RNN-based encoders (such as BLSTMP) rather than Transformer models, which have high memory consumption on longer audio data.

The sample rate of the audio must be consistent with that of the data used in training; adjust with sox if needed.

Also, we can use this tool to provide token-level segmentation information if we prepare a list of tokens instead of that of utterances in the text file. See the discussion in #4278 (comment).

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{hayashi2020espnet,

title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},

booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7654--7658},

year={2020},

organization={IEEE}

}

@inproceedings{inaguma-etal-2020-espnet,

title = "{ESP}net-{ST}: All-in-One Speech Translation Toolkit",

author = "Inaguma, Hirofumi and

Kiyono, Shun and

Duh, Kevin and

Karita, Shigeki and

Yalta, Nelson and

Hayashi, Tomoki and

Watanabe, Shinji",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-demos.34",

pages = "302--311",

}

@article{hayashi2021espnet2,

title={Espnet2-tts: Extending the edge of tts research},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Yoshimura, Takenori and Wu, Peter and Shi, Jiatong and Saeki, Takaaki and Ju, Yooncheol and Yasuda, Yusuke and Takamichi, Shinnosuke and Watanabe, Shinji},

journal={arXiv preprint arXiv:2110.07840},

year={2021}

}

@inproceedings{li2020espnet,

title={{ESPnet-SE}: End-to-End Speech Enhancement and Separation Toolkit Designed for {ASR} Integration},

author={Chenda Li and Jing Shi and Wangyou Zhang and Aswin Shanmugam Subramanian and Xuankai Chang and Naoyuki Kamo and Moto Hira and Tomoki Hayashi and Christoph Boeddeker and Zhuo Chen and Shinji Watanabe},

booktitle={Proceedings of IEEE Spoken Language Technology Workshop (SLT)},

pages={785--792},

year={2021},

organization={IEEE},

}

@inproceedings{arora2021espnet,

title={{ESPnet-SLU}: Advancing Spoken Language Understanding through ESPnet},

author={Arora, Siddhant and Dalmia, Siddharth and Denisov, Pavel and Chang, Xuankai and Ueda, Yushi and Peng, Yifan and Zhang, Yuekai and Kumar, Sujay and Ganesan, Karthik and Yan, Brian and others},

booktitle={ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7167--7171},

year={2022},

organization={IEEE}

}

@inproceedings{shi2022muskits,

author={Shi, Jiatong and Guo, Shuai and Qian, Tao and Huo, Nan and Hayashi, Tomoki and Wu, Yuning and Xu, Frank and Chang, Xuankai and Li, Huazhe and Wu, Peter and Watanabe, Shinji and Jin, Qin},

title={{Muskits}: an End-to-End Music Processing Toolkit for Singing Voice Synthesis},

year={2022},

booktitle={Proceedings of Interspeech},

pages={4277--4281},

url={https://www.isca-speech.org/archive/pdfs/interspeech_2022/shi22d_interspeech.pdf}

}

@inproceedings{lu22c_interspeech,

author={Yen-Ju Lu and Xuankai Chang and Chenda Li and Wangyou Zhang and Samuele Cornell and Zhaoheng Ni and Yoshiki Masuyama and Brian Yan and Robin Scheibler and Zhong-Qiu Wang and Yu Tsao and Yanmin Qian and Shinji Watanabe},

title={{ESPnet-SE++: Speech Enhancement for Robust Speech Recognition, Translation, and Understanding}},

year=2022,

booktitle={Proc. Interspeech 2022},

pages={5458--5462},

}

@article{gao2022euro,

title={{EURO}: {ESPnet} Unsupervised ASR Open-source Toolkit},

author={Gao, Dongji and Shi, Jiatong and Chuang, Shun-Po and Garcia, Leibny Paola and Lee, Hung-yi and Watanabe, Shinji and Khudanpur, Sanjeev},

journal={arXiv preprint arXiv:2211.17196},

year={2022}

}

@article{peng2023reproducing,

title={Reproducing Whisper-Style Training Using an Open-Source Toolkit and Publicly Available Data},

author={Peng, Yifan and Tian, Jinchuan and Yan, Brian and Berrebbi, Dan and Chang, Xuankai and Li, Xinjian and Shi, Jiatong and Arora, Siddhant and Chen, William and Sharma, Roshan and others},

journal={arXiv preprint arXiv:2309.13876},

year={2023}

}