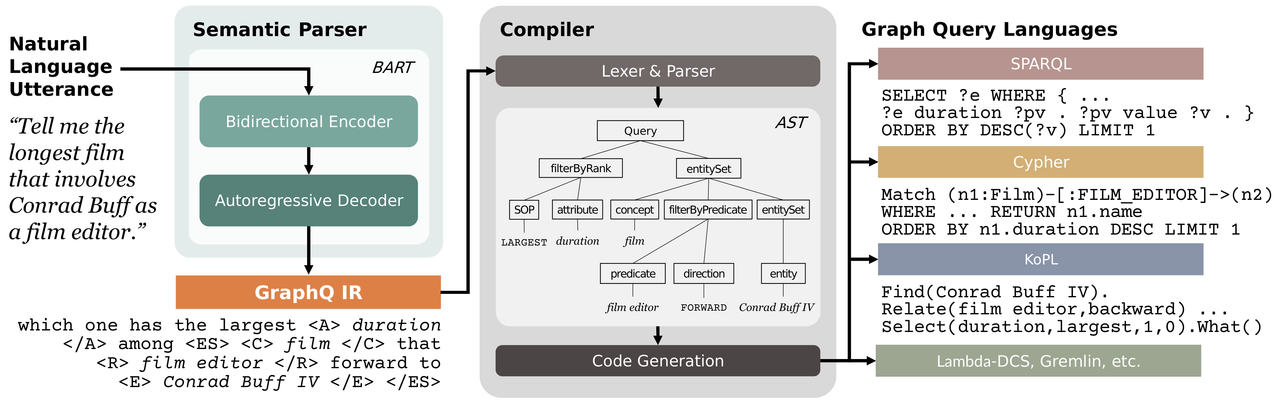

This repository contains source code for the EMNLP 2022 Long Paper "GraphQ IR: Unifying the Semantic Parsing of Graph Query Languages with One Intermediate Representation".

All required packages and versions can be found in the environment configuration file environment.yml, or you may simply build an identical conda environment like this:

conda env create -f environment.yml

conda activate graphqir

As for our implemented source-to-source compiler, please refer to GraphQ Trans for its setup and usage.

In our experiments, we used the BART-base pretrained model for implementing the neural semantic parser. To reproduce, you may download the model checkpoint here.

For KQA Pro, you may follow their documentation to set up the database backend. After starting the service, replace the url in data/kqapro/utils/sparql_engine.py with your own.

For GrailQA, you may follow Freebase Setup to set up the database backend. After starting the service, replace the url in data/grailqa/utils/sparql_executer.py with your own.

Experiments are conducted on 4 semantic parsing benchmarks KQA Pro, Overnight, GrailQA and MetaQA-Cypher.

This dataset contains the parallel data of natural language questions and the corresponding logical forms in SPARQL and KoPL. It can be downloaded via the official website as provided by Cao et al. (2022).

This dataset contains the parallel data of natural language questions and the corresponding logical forms in Lambda-DCS in 8 sub-domains as prepared by Wang et al. (2015). The data and the evaluator can be accessed here as provided by Cao et al. (2019).

To pull the dependencies for running the Overnight experiments, please run:

./pull_dependency_overnight.shThis dataset contains the parallel data of natural language questions and the corresponding logical forms in SPARQL. It can be downloaded via the offical website as provided by Gu et al. (2020). To focus on the sole task of semantic parsing, we replace the entity IDs (e.g. m.06mn7) with their respective names (e.g. Stanley Kubrick) in the logical forms, thus eliminating the need for an explicit entity linking module.

Please note that such replacement can cause inequivalent execution results. Thus, the performance reported in our paper may not be directly comparable to the other works.

To pull the dependencies for running the GrailQA experiments, please run:

./pull_dependency_grailqa.shThis dataset contains the parallel data of natural language questions and the corresponding logical forms in Cypher. The original data is available here as prepared by Zhang et al. (2017). We used a rule-based method to create its Cypher annotation for low-resource evaluation.

To pull the dependencies for running the MetaQA-Cypher experiments, please run:

./pull_dependency_metaqa.shThroughout the experiments, we suggest to structure the files as follows:

GraphQ_IR/

└── data/

├── kqapro/

│ ├── data/

│ │ ├── kb.json

│ │ ├── train.json

│ │ ├── val.json

│ │ └── test.json

│ ├── utils/

│ ├── config_kopl.py

│ ├── config_sparql.py

│ └── evaluate.py

├── overnight/

│ ├── data/

│ │ ├── *_train.tsv

│ │ └── *_test.tsv

│ ├── evaluator/

│ └── config.py

├── grailqa/

│ ├── data/

│ │ ├── ontology/

│ │ ├── train.json

│ │ ├── val.json

│ │ └── test.json

│ ├── utils/

│ └── config.py

├── metaqa/

│ ├── data/

│ │ └── *shot/

│ │ ├── train.json

│ │ ├── val.json

│ │ └── test.json

│ └── config.py

├── bart-base/

├── utils/

├── preprocess.py

├── train.py

├── inference.py

├── corrector.py

├── cfq_ir.py

└── module-classes.txt

To simplify, here we take the NL-to-SPARQL semantic parsing task over the KQA Pro dataset as an example.

For other datasets or different target languages, you may simply modify the arguments --input_dir, --output_dir , and --config accordingly.

For running the BART baseline experiments, remove the argument --ir_mode.

For running the experiments with CFQ IR (Herzig et al., 2021), set --ir_mode to cfq. Please note that CFQ IR is only applicable to those datasets with SPARQL as the target query language (i.e., KQA Pro, GrailQA) .

python -m preprocess \

--input_dir ./data/kqapro/data/ \ # path to raw data

--output_dir ./exp_files/kqapro/ \ # path for saving preprocessed data

--model_name_or_path ./bart-base/ \ # path to pretrained model

--config ./data/kqapro/config_sparql.py \ # path to data-specific configuration file

--ir_mode graphq # or "cfq" for CFQ IR / removed for running baseline python -m torch.distributed.launch --nproc_per_node=8 -m train \

--input_dir ./exp_files/kqapro/ \ # path to preprocessed data

--output_dir ./exp_results/kqapro/ \ # path for saving experiment logs & checkpoints

--model_name_or_path ./bart-base/ \ # path to pretrained model

--config ./data/kqapro/config_sparql.py \ # path to data-specific configuration file

--batch_size 128 \ # 128 for KQA Pro; 64 for Overnight & GrailQA & MetaQA-Cypher

--ir_mode graphq # or "cfq" for CFQ IR / removed for running baseline python -m inference \

--input_dir ./exp_files/kqapro/ \ # path to preprocessed data

--output_dir ./exp_results/kqapro/ \ # path for saving inference results

--model_name_or_path ./bart-base/ \ # path to pretrained model

--ckpt ./exp_results/kqapro/checkpoint-best/ \ # path to saved checkpoint

--config ./data/kqapro/config_sparql.py \ # path to data-specific configuration file

--ir_mode graphq # or "cfq" for CFQ IR / removed for running baseline If you find our work helpful, please cite it as follows:

@article{nie2022graphq,

title={GraphQ IR: Unifying Semantic Parsing of Graph Query Language with Intermediate Representation},

author={Nie, Lunyiu and Cao, Shulin and Shi, Jiaxin and Tian, Qi and Hou, Lei and Li, Juanzi and Zhai, Jidong},

journal={arXiv preprint arXiv:2205.12078},

year={2022}

}