Event-based camera data representations (in some papers also called "Event Stacking").

Some popular representations and, where available, their demo code.

If you know of other representations (paper or code), please tell me or open a pull request to this repo. Many thanks!

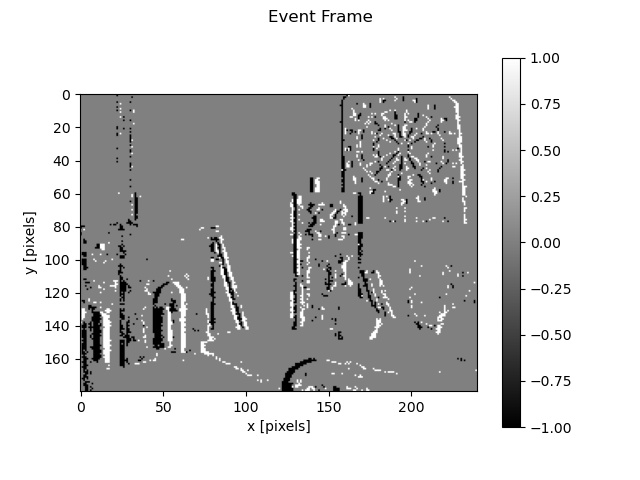

The event frame is the simplest representation. Considering polarity, each pixel in the image takes only the value +1, 0, or -1, meaning that a positive event, no event, or a negative event occurred there.
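A minimal NumPy sketch of an event frame, assuming events are given as arrays `x`, `y` (integer pixel coordinates) and `p` (polarity in {-1, +1}). The function name `event_frame` is hypothetical and not part of this repo. How to resolve a pixel that fired with both polarities is also an assumption: this sketch takes the sign of the net polarity, while other implementations keep the most recent event.

```python
import numpy as np


def event_frame(x, y, p, height, width):
    """Event frame with values in {+1, 0, -1} (sketch).

    x, y: integer pixel coordinates; p: polarity in {-1, +1}.
    Assumption: a pixel that received both polarities takes the sign of its
    net polarity (0 if they cancel out).
    """
    acc = np.zeros((height, width), dtype=np.int64)
    # np.add.at is unbuffered, so repeated (y, x) pairs are all accumulated
    np.add.at(acc, (y, x), p)
    return np.sign(acc).astype(np.int8)
```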

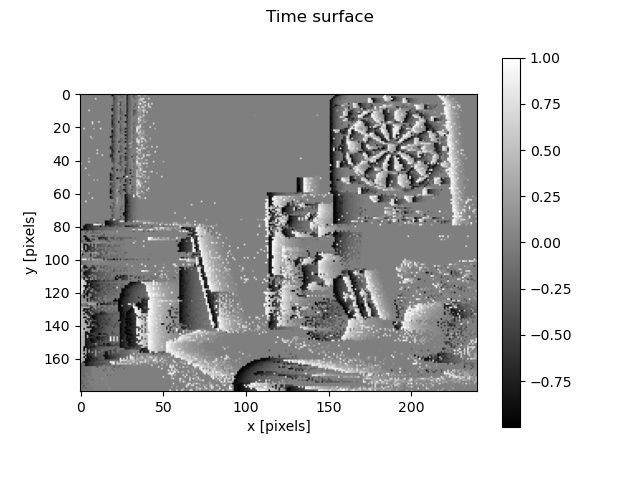

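Time-surface, also called the surface of active events (SAE), includes both spatial and temporal information. The value of each pixel is

$$T(x, y) = \exp\left(-\frac{t - t_{last}(x, y)}{\tau}\right)$$

where $t_{last}(x, y)$ is the timestamp of the most recent event at pixel $(x, y)$, $\tau$ is the decay parameter, and $t$ is the reference time, which can be 'local' or 'global'.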

Check this paper for more details.
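A minimal sketch of the exponential time surface with a global reference time, assuming event arrays `x`, `y` (integer pixel coordinates) and `t` (timestamps in seconds). The function name `time_surface` is hypothetical. Pixels that never fired before `t_ref` are set to 0.

```python
import numpy as np


def time_surface(x, y, t, height, width, t_ref, tau):
    """Exponentially decaying time surface (sketch).

    t_ref: reference time (e.g. the timestamp of the last event, i.e. 'global').
    tau: decay constant, in the same unit as t.
    """
    x, y, t = np.asarray(x), np.asarray(y), np.asarray(t, dtype=np.float64)
    t_last = np.full((height, width), -np.inf)
    mask = t <= t_ref  # ignore events after the reference time
    # np.maximum.at keeps the latest timestamp per pixel, even for repeated pixels
    np.maximum.at(t_last, (y[mask], x[mask]), t[mask])
    # exp(-inf) = 0 for pixels without any event
    return np.exp(-(t_ref - t_last) / tau)
```

For a local time surface, one would instead set `t_ref` to the timestamp of the current event and evaluate only a small patch around it.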

Draw time-surface as a 2D image

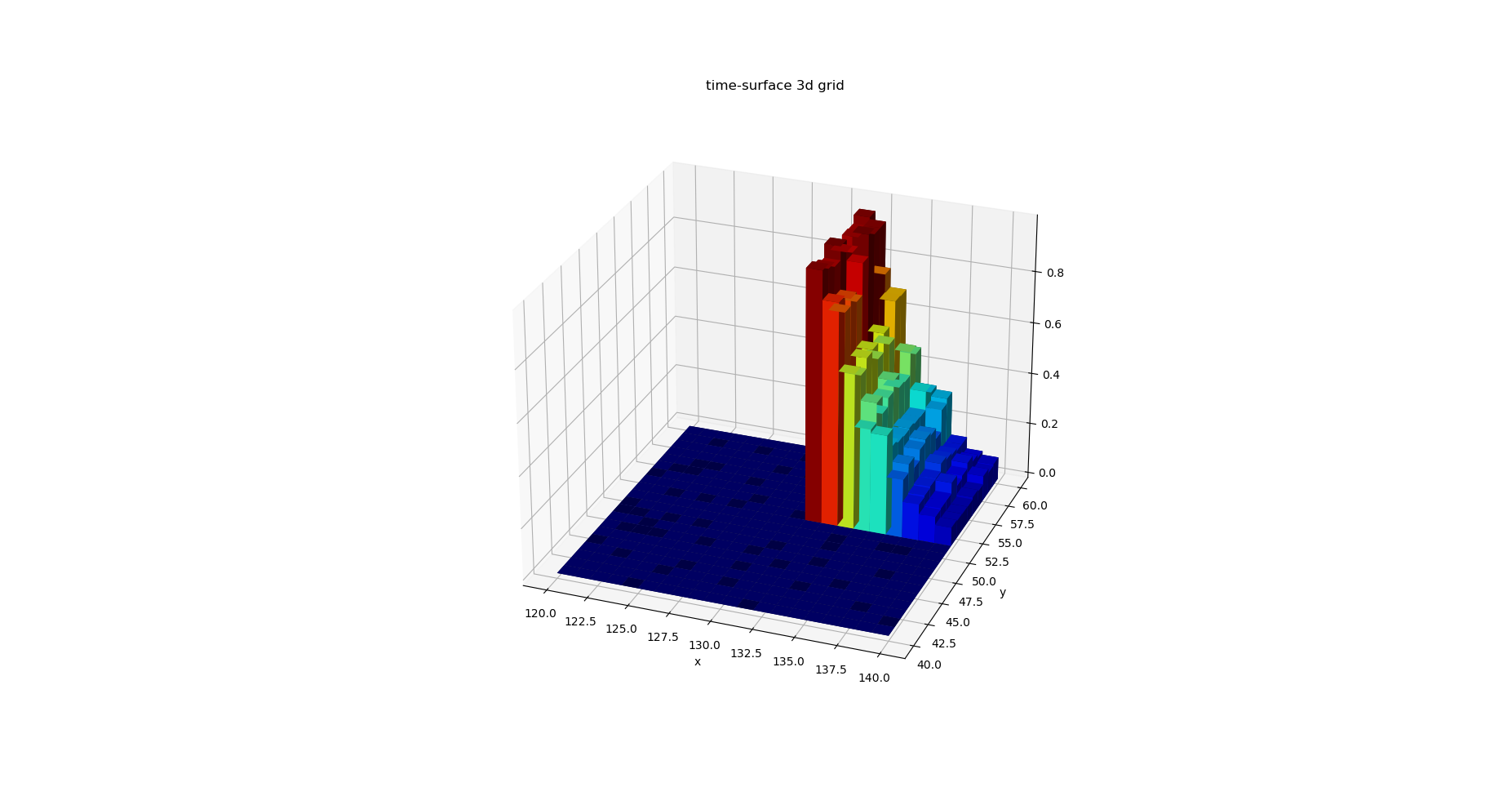

Draw time-surface as a 3D grid

To plot the time surface as a 3D grid, see the `plot_3d_grid.py` file.

Note that plotting the 3D grid needs a lot of memory, so try to plot only a patch of the full image.

Also, since a global reference time is used for the whole image, the local time surface may not look "smooth".

There are many adaptive/modified time surfaces. I list some of them here as I come across them in papers.

- Varying decay parameter.

For the naive TS, the decay parameter $\tau$ is constant. Some works instead use $\tau = \max\left(\tau_u - \frac{1}{n} \sum_{i=0}^{n}(t-t_{last,i}), \tau_l\right)$, which makes the TS decay faster in densely textured scenes and slower in low-texture scenes. Reference: [14].
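The adaptive decay parameter can be sketched as below. Which timestamps enter the average (for example, the latest timestamps of all active pixels or of a neighborhood) is an assumption here, and the 1/n-normalized sum is computed as a plain mean over the supplied values. The name `adaptive_tau` is hypothetical.

```python
import numpy as np


def adaptive_tau(t, t_last, tau_u, tau_l):
    """Adaptive decay parameter (sketch of the formula above).

    t: current reference time.
    t_last: 1-D array of last-event timestamps used in the average
            (which pixels contribute is an assumption).
    tau_u, tau_l: upper bound and lower clamp for tau.
    """
    # Mean elapsed time since the last events (the normalized sum)
    mean_dt = np.mean(t - np.asarray(t_last, dtype=np.float64))
    # Subtract from the upper bound, then clamp from below at tau_l
    return max(tau_u - mean_dt, tau_l)
```

The returned value replaces the constant $\tau$ in the time-surface formula.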

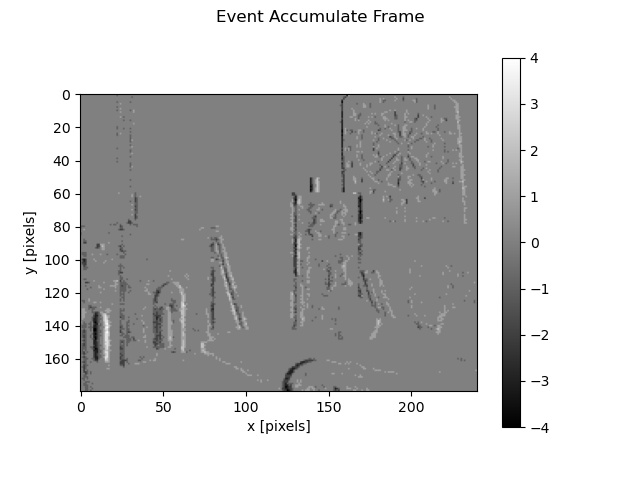

Spatio-temporal voxel grid, sometimes called Stacking Based on Time (SBT) or Stacking Based on Number (SBN).

First proposed in Alex Zhu's paper [2].

Widely used for "stacking" in learning-based methods, such as E2VID [3], the reconstruction method in [4], and HDR imaging [5].

These methods all stack events into frames for network processing, but they differ slightly. In Zhu's method (and in E2VID), timestamp information is preserved when drawing the gray-scale image, whereas SBT/SBN in [4] only accumulate polarity and ignore the timestamps, and [5] stacks positive and negative polarities separately, doubling the number of frames. I guess the choice of stacking depends mainly on the network architecture.

No reference code yet. It will be added if I find an elegant implementation.
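Until then, here is a rough NumPy sketch (not a reference implementation) of the stacking variants described above: a Zhu/E2VID-style voxel grid that splits each event's polarity between the two nearest temporal bins with linear weights, plus SBT (equal-duration slices) and SBN (equal event-count slices) that only sum polarity. The function names and the event layout (`x`, `y` integer coordinates, `t` timestamps, `p` polarity in {-1, +1}, all NumPy arrays, time-sorted for SBN) are assumptions. The separate-polarity stacking of [5] is not shown; it would amount to running the same stacking on positive and negative events separately.

```python
import numpy as np


def _norm_time(t):
    """Map timestamps linearly to [0, 1]."""
    t = np.asarray(t, dtype=np.float64)
    span = max(t.max() - t.min(), 1e-9)  # avoid division by zero
    return (t - t.min()) / span


def voxel_grid(x, y, t, p, num_bins, height, width):
    """Voxel grid: polarity split between the two nearest temporal bins."""
    grid = np.zeros((num_bins, height, width))
    tn = (num_bins - 1) * _norm_time(t)  # in [0, num_bins - 1]
    left = np.floor(tn).astype(int)
    frac = tn - left
    for b, w in ((left, 1.0 - frac), (left + 1, frac)):
        valid = b < num_bins  # right neighbour of the last bin has weight 0
        np.add.at(grid, (b[valid], y[valid], x[valid]), p[valid] * w[valid])
    return grid


def sbt(x, y, t, p, num_frames, height, width):
    """SBT: equal-duration time slices; polarity is summed, timestamps dropped."""
    frames = np.zeros((num_frames, height, width))
    idx = np.minimum((_norm_time(t) * num_frames).astype(int), num_frames - 1)
    np.add.at(frames, (idx, y, x), p)
    return frames


def sbn(x, y, p, num_frames, height, width):
    """SBN: slices with (nearly) equal event counts; assumes time-sorted events."""
    frames = np.zeros((num_frames, height, width))
    idx = (np.arange(len(p)) * num_frames) // len(p)
    np.add.at(frames, (idx, y, x), p)
    return frames
```

In the voxel grid, each event contributes a total weight of 1 across bins, so its relative position in time survives as the ratio between neighbouring bins; SBT and SBN keep only which slice an event fell into.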

First proposed by Yin Bi in his ICCV 2019 and TIP 2020 papers [8, 9]. It also appears in Yongjian Deng's paper [6] and Simon Schaefer's paper [10], both in CVPR 2022.

The key idea is to use a 3D graph to organize the event stream for further processing (such as classification).

Steps:

1. Voxelize the event stream.
2. Select the N most important voxels (based on the number of events in each voxel) to denoise.
3. Calculate a 2D histogram in each voxel as its feature vector.
4. The 3D coordinate of each voxel, together with its feature vector, forms a vertex in the graph.
5. Data association and further processing can then be handled on the graph (see the papers for more details).
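Steps 1 to 4 can be sketched in NumPy as follows. The voxel sizes `vx`, `vy`, `vt`, the function name, and the choice of a per-voxel histogram of event positions (counts, ignoring polarity) are assumptions for illustration; the exact features and the graph edges of step 5 differ between [6], [8], [9], and [10].

```python
import numpy as np


def voxel_graph_vertices(x, y, t, vx, vy, vt, n_keep):
    """Graph vertices from an event stream, following steps 1-4 (sketch).

    x, y: integer pixel coordinates; t: timestamps.
    vx, vy: voxel size in pixels; vt: voxel duration (same unit as t).
    n_keep: number of voxels to keep (the N in step 2).
    Returns voxel coordinates (n, 3) and flattened histograms (n, vy * vx).
    """
    t = np.asarray(t, dtype=np.float64)
    # Step 1: voxel index (ix, iy, it) of every event
    vox = np.stack([x // vx, y // vy, ((t - t.min()) // vt).astype(int)], axis=1)
    keys, inverse, counts = np.unique(vox, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # keep 1-D across NumPy versions
    # Step 2: keep the n_keep densest voxels; sparse voxels are treated as noise
    keep = np.argsort(-counts, kind="stable")[:n_keep]
    coords, features = [], []
    for k in keep:
        sel = inverse == k
        # Step 3: 2D histogram of the events' positions inside the voxel
        hist = np.zeros((vy, vx))
        np.add.at(hist, (y[sel] % vy, x[sel] % vx), 1)
        coords.append(keys[k])
        features.append(hist.ravel())
    # Step 4: each vertex is a 3D voxel coordinate plus its feature vector
    return np.array(coords), np.array(features)
```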

First proposed in Yeongwoo Nam's CVPR 2022 paper [7].

Traditional SBT or SBN methods may cause events to overwrite each other when the scene is dense, especially in autonomous driving. The proposed method uses M stacks, each covering half the duration of the previous stack. Older events are not preserved, since their information is less important than that of the new ones.

The multiple stacks can then be processed by another network (for example, to 'generate' a sharp map as described in [7]).
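A sketch of the multi-stack idea as described above, assuming every stack ends at the newest event, stack m covers the most recent `duration / 2**m` of the window, and each stack accumulates polarity. These choices and the function name are assumptions; see [7] for the exact stacking.

```python
import numpy as np


def multi_density_stacks(x, y, t, p, num_stacks, height, width):
    """Stacks ending at the newest event, each spanning half the previous duration."""
    t = np.asarray(t, dtype=np.float64)
    t_end = t.max()
    duration = t_end - t.min()
    stacks = np.zeros((num_stacks, height, width))
    for m in range(num_stacks):
        # Stack m keeps only the events in the most recent duration / 2**m
        sel = t >= t_end - duration / 2**m
        # stacks[m] is a view, so np.add.at writes into stacks directly
        np.add.at(stacks[m], (y[sel], x[sel]), p[sel])
    return stacks
```

Because later stacks cover shorter windows, recent events appear in every stack while older ones drop out, so dense recent motion is less likely to be overwritten.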

The BEHI (Binary Event History Image) representation was first proposed in Wang's IROS 2022 paper [11]. It draws all events (without polarity) in one frame over the whole history time.
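BEHI is simple enough to sketch in a few lines, assuming integer pixel arrays `x`, `y` that cover the whole history window; `behi` is a hypothetical name.

```python
import numpy as np


def behi(x, y, height, width):
    """Binary Event History Image: 1 wherever any event occurred (sketch)."""
    img = np.zeros((height, width), dtype=np.uint8)
    # Repeated pixels all write the same value, so assignment order does not matter
    img[y, x] = 1
    return img
```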

Tencode was first proposed in Huang's WACV 2023 paper [12]. This method encodes both polarity and timestamp into an RGB image: the on/off polarities are encoded in the R and B channels, and the timestamp in the G channel. Given a time duration
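A hedged sketch of a Tencode-style image. The mapping of the timestamp to the G channel is not given above, so this sketch assumes a linear map of `t` within `[t_start, t_start + delta_t]` to [0, 255] and lets the latest event at a pixel overwrite earlier ones; on events go to R and off events to B. The exact formula in [12] may differ, and the names are hypothetical.

```python
import numpy as np


def tencode(x, y, t, p, height, width, t_start, delta_t):
    """Tencode-style RGB image (sketch): R = on, B = off, G = timestamp."""
    img = np.zeros((height, width, 3), dtype=np.uint8)
    # Visit events in time order so the latest event at a pixel wins
    for i in np.argsort(t, kind="stable"):
        if not (t_start <= t[i] <= t_start + delta_t):
            continue  # outside the encoding window
        # Assumed linear timestamp-to-intensity map
        g = int(round(255 * (t[i] - t_start) / delta_t))
        img[y[i], x[i]] = (255, g, 0) if p[i] > 0 else (0, g, 255)
    return img
```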

Proposed in Tobias Fischer's ICRA 2023 paper [13] and used for place recognition.

- C++ version may be added later.

- Some other representations will be added later.

Some of the code is inspired by TU Berlin's course: https://github.com/tub-rip/events_viz

[1]. Liu et al. Adaptive Time-Slice Block-Matching Optical Flow Algorithm for Dynamic Vision Sensors. BMVC 2018.

[2]. Zihao Zhu et al. Unsupervised Event-based Optical Flow using Motion Compensation. ECCVW 2018.

[3]. H. Rebecq, R. Ranftl, V. Koltun, and D. Scaramuzza. High Speed and High Dynamic Range Video with an Event Camera. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 6, pp. 1964–1980, June 2021. doi: 10.1109/TPAMI.2019.2963386.

[4]. M. Mostafavi, L. Wang, and K.-J. Yoon. Learning to Reconstruct HDR Images from Events, with Applications to Depth and Flow Prediction. International Journal of Computer Vision, 129, 900–920, 2021.

[5]. Yunhao Zou, Yinqiang Zheng, Tsuyoshi Takatani, and Ying Fu. Learning To Reconstruct High Speed and High Dynamic Range Videos From Events.

[6]. Yongjian Deng, Hao Chen, Hai Liu, and Youfu Li. A Voxel Graph CNN for Object Classification With Event Cameras. CVPR 2022.

[7]. Yeongwoo Nam, Mohammad Mostafavi, Kuk-Jin Yoon, and Jonghyun Choi. Stereo Depth From Events Cameras: Concentrate and Focus on the Future. CVPR 2022.

[8]. Yin Bi, Aaron Chadha, Alhabib Abbas, Eirina Bourtsoulatze, and Yiannis Andreopoulos. Graph-based object classification for neuromorphic vision sensing. IEEE Int. Conf. Comput. Vis. (ICCV), 2019

[9]. Yin Bi, Aaron Chadha, Alhabib Abbas, Eirina Bourtsoulatze, and Yiannis Andreopoulos. Graph-based spatio-temporal feature learning for neuromorphic vision sensing. IEEE Transactions on Image Processing, 29:9084–9098, 2020.

[10]. Simon Schaefer, Daniel Gehrig, and Davide Scaramuzza. AEGNN: Asynchronous Event-Based Graph Neural Networks. CVPR 2022.

[11]. Ziyun Wang, Fernando Cladera Ojeda, Anthony Bisulco, Daewon Lee, Camillo J. Taylor, Kostas Daniilidis, et al. EV-Catcher: High-Speed Object Catching Using Low-Latency Event-Based Neural Networks. IEEE Robotics and Automation Letters, 7(4), pp. 8737–8744, 2022. DOI: 10.1109/LRA.2022.3188400.

[12]. Ze Huang, Li Sun, Cheng Zhao, Song Li, and Songzhi Su. EventPoint: Self-Supervised Interest Point Detection and Description for Event-based Camera. WACV 2023.

[13]. Tobias Fischer and Michael Milford. How Many Events Do You Need? Event-based Visual Place Recognition Using Sparse But Varying Pixels. ICRA 2023.

[14]. S. Zhu, Z. Tang, M. Yang, et al. Event Camera-based Visual Odometry for Dynamic Motion Tracking of a Legged Robot Using Adaptive Time Surface. arXiv preprint arXiv:2305.08962, 2023.