A permissive synthetic data library from Gretel.ai

If you want to quickly discover gretel-synthetics, follow the tutorials!

Check out additional examples here.

This section guides you through installing gretel-synthetics and the dependencies that are not installed directly by the Python package manager.

By default, we do not install certain core requirements. Depending on which model(s) you plan to use, install the following dependencies separately from gretel-synthetics:

- TensorFlow: used by the LSTM model; we recommend version 2.11.x
- Torch: used by Timeseries DGAN and ACTGAN (for ACTGAN, Torch is installed by SDV); we recommend version 2.0
- SDV (Synthetic Data Vault): used by ACTGAN; we recommend version 0.17.x

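These dependencies can be installed as follows (quote version specifiers such as `sdv<0.18` so the shell does not treat `<` as input redirection):

```bash
pip install tensorflow==2.11  # for LSTM
pip install "sdv<0.18"        # for ACTGAN
pip install torch==2.0        # for Timeseries DGAN
```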
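Before choosing a model, it can help to confirm which of these optional packages are already installed and whether they match the recommended versions. The following is a minimal stdlib-only sketch, not part of gretel-synthetics: the helper name `check_optional_dependencies` is hypothetical, and the prefix comparison is a deliberate simplification of full PEP 440 version matching.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Recommended release prefixes, copied from the dependency list in this section.
RECOMMENDED: Dict[str, str] = {
    "tensorflow": "2.11",  # LSTM
    "torch": "2.0",        # Timeseries DGAN (and ACTGAN via SDV)
    "sdv": "0.17",         # ACTGAN
}


def check_optional_dependencies(
    recommended: Dict[str, str] = RECOMMENDED,
) -> Dict[str, Tuple[Optional[str], bool]]:
    """Map each package to (installed version or None, matches recommended prefix)."""
    report: Dict[str, Tuple[Optional[str], bool]] = {}
    for package, prefix in recommended.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            report[package] = (None, False)
            continue
        # Compare release components, so "2.0.1+cu118" matches "2.0" but "2.01" does not.
        wanted = prefix.split(".")
        matches = installed.split(".")[: len(wanted)] == wanted
        report[package] = (installed, matches)
    return report


if __name__ == "__main__":
    for pkg, (installed, ok) in check_optional_dependencies().items():
        if installed is None:
            print(f"{pkg}: not installed")
        else:
            print(f"{pkg}: {installed} ({'matches' if ok else 'differs from'} recommendation)")
```

Since the check only reads installed package metadata, it is cheap to run in any environment before deciding which of the three dependencies still needs to be installed.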

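To install the gretel-synthetics package from source, first clone the repo and then run:

```bash
pip install -U .
```

Alternatively, install the released package from PyPI:

```bash
pip install gretel-synthetics
```

Then install Jupyter and start a notebook server:

```bash
pip install jupyter
jupyter notebook
```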

When the UI launches in your browser, navigate to examples/synthetic_records.ipynb and get generating!

If you want to install gretel-synthetics locally and use a GPU (recommended):

- Create a virtual environment (e.g., using conda):

  ```bash
  conda create --name tf python=3.9
  ```

- Activate the virtual environment:

  ```bash
  conda activate tf
  ```

- Run the setup script:

  ```bash
  ./setup-utils/setup-gretel-synthetics-tensorflow24-with-gpu.sh
  ```

The last step will install all the necessary software packages for GPU usage, TensorFlow 2.8, and gretel-synthetics.

Note that this script works only on Ubuntu 18.04; you might need to modify it for other OS versions.

The Timeseries DGAN module contains a PyTorch implementation of a DoppelGANger model that is optimized for time-series data. As with TensorFlow, you will need to install PyTorch manually:

```bash
pip install torch==2.0
```

This notebook shows basic usage on a small data set of smart home sensor readings.

ACTGAN (Anyway CTGAN) is an extension of the popular CTGAN implementation that adds functionality such as improved memory usage, automatic detection and transformation of columns, and more.

To use this model, you will need to install SDV manually:

```bash
pip install "sdv<0.18"
```

Keep in mind that this will also install several dependencies like PyTorch that SDV relies on, which may conflict with PyTorch versions installed for use with other models like Timeseries DGAN.

The ACTGAN interface is a superset of the CTGAN interface. To see the additional features, please take a look at the ACTGAN demo notebook in the examples directory of this repo.

This package allows developers to quickly get immersed in synthetic data generation through the use of neural networks. The more complex pieces of working with libraries like TensorFlow and differential privacy are bundled into friendly Python classes and functions. There are two high-level modes that can be used.

The simple mode trains line by line on an input text file. When generating data, the generator yields a custom object that can be used in a variety of ways depending on your use case. This notebook demonstrates this mode.
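Because the generator yields objects one at a time, a consumer can filter them and stop early without generating more than it needs. The sketch below assumes a hypothetical `GeneratedLine` type with `text` and `valid` fields and uses a fake generator in place of a trained model; the object actually yielded by gretel-synthetics may have different fields.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class GeneratedLine:
    """Hypothetical stand-in for a generated-record object (not the library's class)."""
    text: str
    valid: bool


def fake_generator(samples: List[str]) -> Iterator[GeneratedLine]:
    """Simulate a generator that lazily yields one object per synthesized line."""
    for sample in samples:
        # Toy validity rule for the demo: exactly two comma-separated fields.
        yield GeneratedLine(text=sample, valid=sample.count(",") == 1)


def take_valid(lines: Iterable[GeneratedLine], limit: int) -> List[str]:
    """Collect the text of up to `limit` valid lines, then stop consuming."""
    if limit <= 0:
        return []
    collected: List[str] = []
    for line in lines:
        if not line.valid:
            continue
        collected.append(line.text)
        if len(collected) >= limit:
            break  # the generator is not advanced any further
    return collected


records = take_valid(fake_generator(["a,1", "b,2", "broken", "c,3"]), limit=2)
print(records)  # ['a,1', 'b,2']
```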

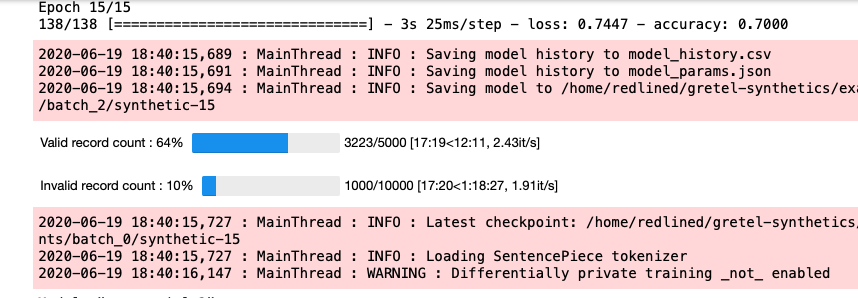

This library supports CSV files and DataFrames natively using the DataFrame "batch" mode. This module provides a wrapper around the simple mode that is geared toward tabular data. Additionally, it can handle a large number of columns by breaking the input DataFrame up into "batches" of columns and training a model on each batch. This notebook shows an overview of using this library with DataFrames natively.
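Conceptually, batch mode groups the columns, trains one model per group, and (presumably) joins each group's generated columns back into full rows. The plain-Python sketch below illustrates only the split and rejoin steps, using lists of dicts instead of DataFrames so it runs without pandas. All function names are hypothetical, and how the library actually sizes batches and reassembles output is an assumption not described here.

```python
from typing import Dict, List, Sequence

Record = Dict[str, object]


def batch_columns(columns: Sequence[str], batch_size: int) -> List[List[str]]:
    """Split column names into consecutive groups of at most `batch_size`."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    cols = list(columns)
    return [cols[i : i + batch_size] for i in range(0, len(cols), batch_size)]


def split_by_batch(records: List[Record], batches: List[List[str]]) -> List[List[Record]]:
    """Project every record onto each column batch (one sub-table per model)."""
    return [[{col: rec[col] for col in batch} for rec in records] for batch in batches]


def merge_batches(per_batch: List[List[Record]]) -> List[Record]:
    """Rejoin per-batch rows column-wise; zip() stops at the shortest batch."""
    merged: List[Record] = []
    for parts in zip(*per_batch):
        row: Record = {}
        for part in parts:
            row.update(part)
        merged.append(row)
    return merged


rows = [
    {"name": "ann", "age": 34, "city": "Paris"},
    {"name": "bob", "age": 51, "city": "Rome"},
]
batches = batch_columns(["name", "age", "city"], batch_size=2)  # [["name", "age"], ["city"]]
tables = split_by_batch(rows, batches)  # one sub-table per batch
print(merge_batches(tables) == rows)  # True
```

Keeping each batch narrow bounds the width of the input each model sees, which is the motivation the paragraph gives for batching wide tables.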

There are four primary components to be aware of when using this library.

- Configurations. Configurations are classes that are specific to the underlying ML engine used to train and generate data. For example, `TensorFlowConfig` creates all the necessary parameters to train a model based on TensorFlow. `LocalConfig` is aliased to `TensorFlowConfig` for backwards compatibility with older versions of the library. A model is saved to a designated directory, which can optionally be archived and used later.

- Tokenizers. Tokenizers convert input text into integer-based IDs that are used by the underlying ML engine. A tokenizer can be created and passed to the training input. This is optional; if no tokenizer is specified, a default one is used. You can find an example here that uses a simple char-by-char tokenizer to build a model from an input CSV. When training in a non-differentially private mode, we suggest using the default SentencePiece tokenizer, an unsupervised tokenizer that learns subword units (e.g., byte-pair encoding (BPE) [Sennrich et al.] and the unigram language model [Kudo]) for faster training and increased accuracy of the synthetic model.

- Training. Training combines the configuration and tokenizer to build a model, which is stored in the designated directory and can be used to generate new records.

- Generation. Once a model is trained, any number of new lines or records can be generated. Optionally, a record validator can be provided to ensure that the generated data meets any necessary constraints. See our notebooks for examples of validators.
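A record validator is essentially a function that inspects one generated line and decides whether it satisfies your constraints. The sketch below uses a made-up three-column schema, and both function names are hypothetical. Whether gretel-synthetics expects a validator to return a boolean or to raise an exception on invalid input is not specified here, so check the validator notebooks before adapting it.

```python
from typing import Callable, Iterable, List


def validate_record(line: str) -> bool:
    """Hypothetical constraint: name,age,city with non-empty name/city and age in 0..120."""
    fields = line.split(",")
    if len(fields) != 3:
        return False
    name, age, city = fields
    if not name or not city:
        return False
    try:
        age_value = int(age)
    except ValueError:
        return False
    return 0 <= age_value <= 120


def keep_valid(lines: Iterable[str], validator: Callable[[str], bool]) -> List[str]:
    """Drop generated lines that the validator rejects."""
    return [line for line in lines if validator(line)]


generated = ["ann,34,Paris", "bob,x,Rome", "cy,200,Oslo", "dee,5"]
print(keep_valid(generated, validate_record))  # ['ann,34,Paris']
```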

In addition to the four primary components, the gretel-synthetics package also ships with a set of utilities that are helpful for training advanced synthetics models and evaluating synthetic datasets.

Some of these utilities carry large dependencies, so they are shipped as an optional extra called `utils`. To install these dependencies, run:

```bash
pip install "gretel-synthetics[utils]"
```

For additional details, please refer to the Utility module API docs.

Differential privacy support for our TensorFlow mode is built on the great work being done by the Google TF team and their TensorFlow Privacy library.

When using DP, we currently recommend the character tokenizer, because its vocabulary contains only single-character tokens. This removes the risk of sensitive data being memorized as whole tokens that could be replayed during generation.
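To see why a character tokenizer limits memorization, note that its vocabulary can only hold single characters, so no multi-character string (such as a name or account number) can ever become a token of its own. The toy sketch below maps text to integer IDs this way; `CharTokenizer` is a hypothetical class for illustration, not the tokenizer class shipped with gretel-synthetics.

```python
from typing import Dict, List


class CharTokenizer:
    """Toy character-level tokenizer: each vocabulary entry is a single character."""

    def __init__(self, corpus: str) -> None:
        # Sorting makes the character -> ID mapping deterministic.
        self.vocab: List[str] = sorted(set(corpus))
        self.char_to_id: Dict[str, int] = {ch: i for i, ch in enumerate(self.vocab)}

    def encode(self, text: str) -> List[int]:
        # Raises KeyError for characters that never appeared in the corpus.
        return [self.char_to_id[ch] for ch in text]

    def decode(self, ids: List[int]) -> str:
        return "".join(self.vocab[i] for i in ids)


tok = CharTokenizer("alice,bob\n")
print(tok.encode("abc"))  # [2, 3, 4]
```

A subword tokenizer trained on the same corpus could, in contrast, learn a frequent value such as `alice` as one token; with the character tokenizer the vocabulary above stays at nine single characters.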

There are also a few notable configuration options:

- `predict_batch_size` should be set to 1.
- `dp` should be enabled.
- `learning_rate`, `dp_noise_multiplier`, `dp_l2_norm_clip`, and `dp_microbatches` can be adjusted to achieve various epsilon values.
- `reset_states` should be disabled.
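The options above can be collected into a keyword-argument dictionary and sanity-checked before training. The parameter names come from this section; the numeric values are placeholders for illustration, not recommendations, and `dp_setting_problems` is a hypothetical helper. Passing such a dictionary to the configuration class (for example, `TensorFlowConfig`) is an assumption to verify against its API docs, and other required arguments, such as where the training data and model are stored, are omitted.

```python
from typing import Any, Dict, List

# Illustrative values only (not recommendations); the last four are the epsilon knobs.
dp_params: Dict[str, Any] = {
    "dp": True,
    "predict_batch_size": 1,
    "reset_states": False,
    "learning_rate": 0.001,
    "dp_noise_multiplier": 1.1,
    "dp_l2_norm_clip": 1.0,
    "dp_microbatches": 1,
}


def dp_setting_problems(params: Dict[str, Any]) -> List[str]:
    """List deviations from the DP guidance in this section (hypothetical helper)."""
    problems: List[str] = []
    if params.get("dp") is not True:
        problems.append("dp should be enabled")
    if params.get("predict_batch_size") != 1:
        problems.append("predict_batch_size should be set to 1")
    # A missing key counts as "not disabled", since no library default is assumed here.
    if params.get("reset_states") is not False:
        problems.append("reset_states should be disabled")
    return problems


print(dp_setting_problems(dp_params))  # []
```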

Please see our example notebook for training a DP model based on the Netflix Prize dataset.