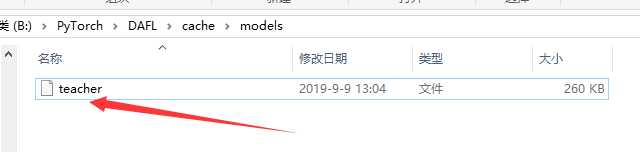

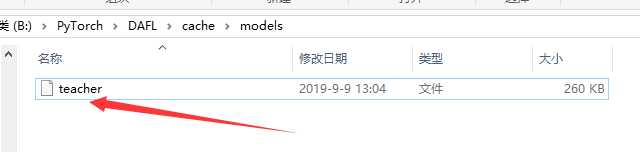

The "teacher" model trained successfully, but running `DAFL-train.py` fails with the errors below. Does anybody know what is going on? Thank you!

```
"D:\Program Files\Python368\python.exe" B:/PyTorch/DAFL/DAFL-train.py
[Epoch 0/200] [loss_oh: 0.306945] [loss_ie: -0.662719] [loss_a: -1.541127] [loss_kd: 1.733359]
[Epoch 0/200] [loss_oh: 0.391318] [loss_ie: -0.769967] [loss_a: -1.384207] [loss_kd: 1.527557]
```

```
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 105, in spawn_main
    exitcode = _main(fd)
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 114, in _main
    prepare(preparation_data)
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 225, in prepare
    _fixup_main_from_path(data['init_main_from_path'])
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 277, in _fixup_main_from_path
    run_name="__mp_main__")
  File "D:\Program Files\Python368\lib\runpy.py", line 263, in run_path
    pkg_name=pkg_name, script_name=fname)
  File "D:\Program Files\Python368\lib\runpy.py", line 96, in _run_module_code
    mod_name, mod_spec, pkg_name, script_name)
  File "D:\Program Files\Python368\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "B:\PyTorch\DAFL\DAFL-train.py", line 190, in <module>
    for i, (images, labels) in enumerate(data_test_loader):
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 193, in __iter__
    return _DataLoaderIter(self)
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 469, in __init__
    w.start()
  File "D:\Program Files\Python368\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "D:\Program Files\Python368\lib\multiprocessing\context.py", line 223, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "D:\Program Files\Python368\lib\multiprocessing\context.py", line 322, in _Popen
    return Popen(process_obj)
  File "D:\Program Files\Python368\lib\multiprocessing\popen_spawn_win32.py", line 33, in __init__
    prep_data = spawn.get_preparation_data(process_obj._name)
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 143, in get_preparation_data
    _check_not_importing_main()
  File "D:\Program Files\Python368\lib\multiprocessing\spawn.py", line 136, in _check_not_importing_main
    is not going to be frozen to produce an executable.''')
RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.
```
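Judging from the traceback, this is the Windows `spawn` start method at work: each worker process re-runs the main script under the name `__mp_main__` (the `_fixup_main_from_path` frame), so a DataLoader loop sitting at module level, like the one at line 190, runs again inside the worker and tries to start workers of its own, which `_check_not_importing_main` rejects. A minimal standard-library sketch of the idiom the message recommends is below; `square` and `main` are hypothetical names, and `multiprocessing.Pool` stands in for the DataLoader's worker processes.

```python
import multiprocessing as mp
from typing import List


def square(x: int) -> int:
    # Worker functions must be defined at module top level so that a
    # spawned child, which re-imports this file, can find them by name.
    return x * x


def main() -> List[int]:
    # Anything that starts processes (here a Pool; in a training script,
    # iterating a DataLoader with num_workers > 0) goes in a function that
    # is only called from the guarded block below.
    with mp.Pool(processes=2) as pool:
        return pool.map(square, range(5))


if __name__ == "__main__":
    # Under "spawn" each child imports this file as "__mp_main__", so this
    # block is skipped in the children and they never start processes.
    mp.freeze_support()  # no effect unless the script is frozen on Windows
    print(main())
```

Run as a script, this should print `[0, 1, 4, 9, 16]`. Calling `main()` at module level instead would make every spawned child hit the same bootstrapping `RuntimeError` on Windows.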

```
Traceback (most recent call last):
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 511, in _try_get_batch
    data = self.data_queue.get(timeout=timeout)
  File "D:\Program Files\Python368\lib\multiprocessing\queues.py", line 105, in get
    raise Empty
queue.Empty

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "B:/PyTorch/DAFL/DAFL-train.py", line 190, in <module>
    for i, (images, labels) in enumerate(data_test_loader):
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 576, in __next__
    idx, batch = self._get_batch()
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 553, in _get_batch
    success, data = self._try_get_batch()
  File "D:\Program Files\Python368\lib\site-packages\torch\utils\data\dataloader.py", line 519, in _try_get_batch
    raise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str))
RuntimeError: DataLoader worker (pid(s) 16596) exited unexpectedly

Process finished with exit code 1
```
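The "DataLoader worker exited unexpectedly" error comes from the parent process noticing that its worker died. For a script shaped like `DAFL-train.py`, moving the module-level test loop behind an `if __name__ == '__main__':` guard might look like the sketch below. It assumes the loop can be wrapped in functions; `build_test_loader`, `evaluate`, and the random `TensorDataset` are hypothetical stand-ins, since the real dataset and model code are not shown here. The other common workaround is `num_workers=0`, which loads batches in the main process so no worker is ever started, usually at some cost in loading speed.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def build_test_loader(num_workers: int) -> DataLoader:
    # Hypothetical stand-in for data_test_loader: random MNIST-sized tensors
    # instead of the dataset the real script loads from disk.
    images = torch.randn(64, 1, 28, 28)
    labels = torch.randint(0, 10, (64,))
    return DataLoader(TensorDataset(images, labels), batch_size=16,
                      shuffle=False, num_workers=num_workers)


def evaluate(loader: DataLoader) -> int:
    # Same loop shape as line 190; here it only counts the samples seen.
    seen = 0
    for i, (images, labels) in enumerate(loader):
        seen += images.size(0)
    return seen


def main() -> None:
    # Workaround 1: no worker processes at all.
    print(evaluate(build_test_loader(num_workers=0)))
    # Workaround 2: keep worker processes; safe because main() is only
    # called from the guarded block below, never when a worker re-imports
    # this file.
    print(evaluate(build_test_loader(num_workers=2)))


if __name__ == "__main__":
    main()
```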