Hi,

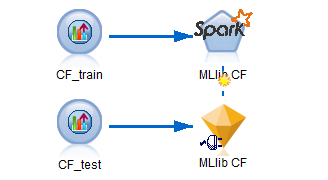

I am getting an error when I run the MLlib_CF extension. I am using Analytic Server (AS) 2.1, and I have verified that the Spark client is installed and running. Thank you.

This is the error message:

```
[2016-01-04 10:53:05] Error executing ASL: Error on executing the python script to generate the output DataModel with cause:
Traceback (most recent call last):
  File "/opt/ibm/spss/analyticserver/2.1/ae_wlpserver/usr/servers/aeserver/as-temp/1520dc54ceb-8e170d8b310502fe/as.py", line 16, in
    df = ascontext.getSparkInputData()
  File "/opt/ibm/spss/analyticserver/2.1/ae_wlpserver/usr/servers/aeserver/configuration/scripts/com.spss.ibm.pythonscript.zip/spss/pyspark/as_context.py", line 98, in getSparkInputData
  File "/usr/iop/current/spark-client/python/pyspark/sql/context.py", line 346, in createDataFrame
    rows = rdd.take(10)
  File "/usr/iop/current/spark-client/python/pyspark/rdd.py", line 1277, in take
    res = self.context.runJob(self, takeUpToNumLeft, p, True)
  File "/usr/iop/current/spark-client/python/pyspark/context.py", line 897, in runJob
    allowLocal)
  File "/usr/iop/current/spark-client/python/lib/py4j-0.8.2.1-src.zip/py4j/java_gateway.py", line 538, in __call__
  File "/usr/iop/current/spark-client/python/lib/py4j-0.8.2.1-src.zip/py4j/protocol.py", line 300, in get_return_value
py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.runJob.
: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 35.0 failed 4 times, most recent failure: Lost task 0.3 in stage 35.0 (TID 235, bigivm): org.apache.spark.SparkException:
Error from python worker:
  /usr/bin/python: No module named pyspark
PYTHONPATH was:
  /hadoop/yarn/local/filecache/10/spark-assembly.jar
java.io.EOFException
	at java.io.DataInputStream.readInt(DataInputStream.java:392)
	at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:163)
	at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:86)
	at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:62)
	at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:130)
	at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:73)
	at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:277)
	at org.apache.spark.rdd.RDD.iterator(RDD.scala:244)
	at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:63)
	at org.apache.spark.scheduler.Task.run(Task.scala:70)
	at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:213)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
	at java.lang.Thread.run(Thread.java:745)
Driver stacktrace:
	at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1273)
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1264)
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1263)
	at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
	at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)
	at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1263)
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:730)
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:730)
	at scala.Option.foreach(Option.scala:236)
	at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:730)
	at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1457)
	at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1418)
	at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:48)
.
```
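The key part of the log is that the Python worker on `bigivm` was launched as `/usr/bin/python` with a PYTHONPATH containing only `/hadoop/yarn/local/filecache/10/spark-assembly.jar`, and it could not import `pyspark`. As a hedged diagnostic sketch (an assumption on my part, not a procedure from the Analytic Server or Spark documentation), the same import can be attempted directly on a worker node with that exact PYTHONPATH. The helper `can_import` is hypothetical and written only for this check.

```python
import os
import subprocess
import sys


def can_import(module, pythonpath, python=sys.executable):
    """Return True if `python` can import `module` when PYTHONPATH is set
    to exactly `pythonpath`.

    Hypothetical diagnostic helper (not part of Spark or Analytic Server);
    it only mimics the interpreter + PYTHONPATH pair reported in the log.
    """
    env = dict(os.environ)
    env["PYTHONPATH"] = pythonpath  # replace, do not append, like the log shows
    # Discard the child's output; only the exit status matters here.
    with open(os.devnull, "w") as devnull:
        returncode = subprocess.call(
            [python, "-c", "import " + module],
            env=env,
            stdout=devnull,
            stderr=devnull,
        )
    return returncode == 0


if __name__ == "__main__":
    # Values copied from the worker error in the log; adjust per node.
    worker_python = "/usr/bin/python"
    worker_path = "/hadoop/yarn/local/filecache/10/spark-assembly.jar"
    if os.path.exists(worker_python):
        ok = can_import("pyspark", worker_path, worker_python)
        print("pyspark importable: %s" % ok)
```

The script avoids `subprocess.run` and `print(..., ...)` with multiple arguments so it should also run under an older Python 2 interpreter on the node. If it reports `False` on the worker, that would suggest the problem is reproducible outside Analytic Server.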