|

|

|

|

|

|

|

|---|---|---|---|---|---|---|

| Junting Pan | Cristian Canton Ferrer | Kevin McGuinness | Noel O'Connor | Jordi Torres | Elisa Sayrol | Xavier Giro-i-Nieto |

A joint collaboration between:

|

|

|

|

|

|

|---|---|---|---|---|---|

| Insight Centre for Data Analytics | Dublin City University (DCU) | Microsoft | Barcelona Supercomputing Center | Universitat Politecnica de Catalunya (UPC) |

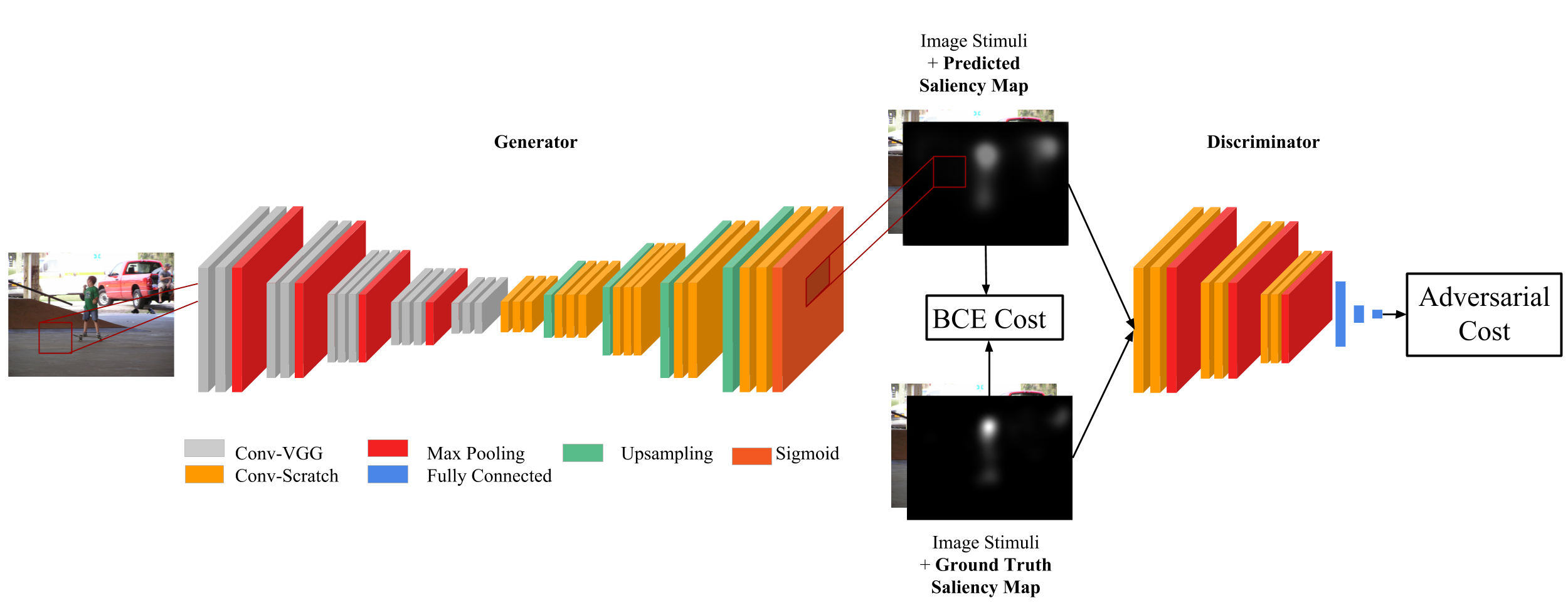

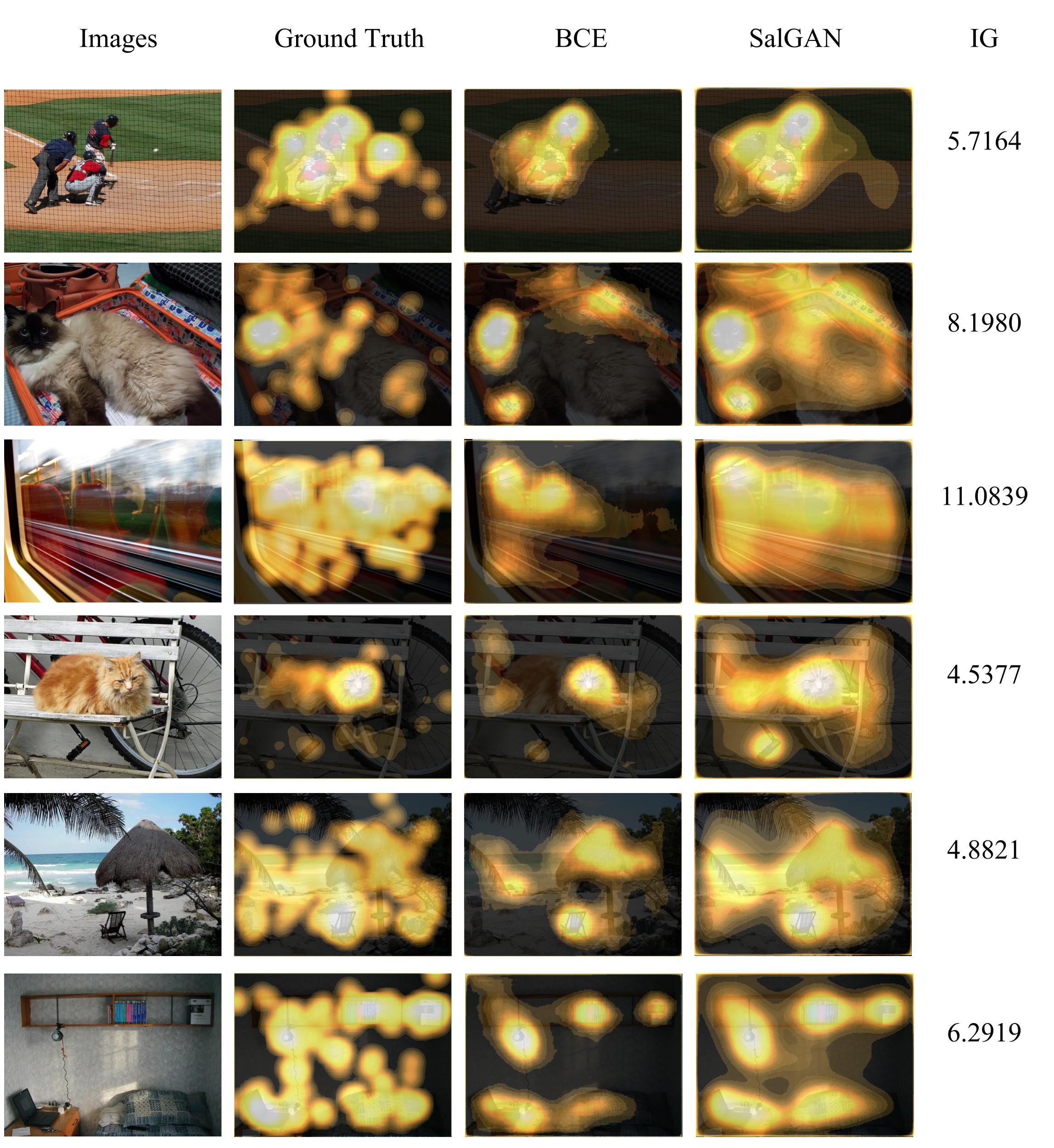

We introduce SalGAN, a deep convolutional neural network for visual saliency prediction trained with adversarial examples. The first stage of the network consists of a generator model whose weights are learned by back-propagation computed from a binary cross entropy (BCE) loss over downsampled versions of the saliency maps. The resulting prediction is processed by a discriminator network trained to solve a binary classification task between the saliency maps generated by the generative stage and the ground truth ones. Our experiments show how adversarial training allows reaching state-of-the-art performance across different metrics when combined with a widely-used loss function like BCE.

<iframe src="//www.slideshare.net/slideshow/embed_code/key/5cXl80Fm2c3ksg" width="595" height="485" frameborder="0" marginwidth="0" marginheight="0" scrolling="no" style="border:1px solid #CCC; border-width:1px; margin-bottom:5px; max-width: 100%;" allowfullscreen> </iframe>Find the extended pre-print version of our work on arXiv. The shorter extended abstract presented as spotlight in the CVPR 2017 Scene Understanding Workshop (SUNw) is available here.

Please cite with the following Bibtex code:

@InProceedings{Pan_2017_SalGAN,

author = {Pan, Junting and Canton, Cristian and McGuinness, Kevin and O'Connor, Noel E. and Torres, Jordi and Sayrol, Elisa and Giro-i-Nieto, Xavier and},

title = {SalGAN: Visual Saliency Prediction with Generative Adversarial Networks},

booktitle = {arXiv},

month = {January},

year = {2017}

}

You may also want to refer to our publication with the more human-friendly Chicago style:

Junting Pan, Cristian Canton, Kevin McGuinness, Noel E. O'Connor, Jordi Torres, Elisa Sayrol and Xavier Giro-i-Nieto. "SalGAN: Visual Saliency Prediction with Generative Adversarial Networks." arXiv. 2017.

The parameters to run SalGAN can be downloaded here:

If you wanted to train the model, you will also need this additional file

As explained in our paper, our networks were trained on the training and validation data provided by SALICON.

Two different dataset were used for test:

Our paper presents two convolutional neural networks, one correspends to the Generator (Saliency Prediction Network) and the another is the Discriminator for the adversarial training. To compute saliency maps only the Generator is needed.

SalGAN is implemented in Lasagne, which at its time is developed over Theano.

pip install -r https://raw.githubusercontent.com/imatge-upc/saliency-salgan-2017/master/requirements.txt

We have prepared this Docker container with all necessary dependencies for computing saliency maps with SalGAN. You will need to use nvidia-docker.

Using the container is like connecting via ssh to a machine. To start an interactive session run:

>> sudo nvidia-docker run -it --entrypoint='bash' -w /home/ evamohe/salgan

This will open a terminal within the container located in the '/home' folder.

Yo will find Salgan code in "/home/salgan". So if you want to test the installation, within the container, run:

>> cd /home/salgan/scripts

>> THEANO_FLAGS=mode=FAST_RUN,device=gpu0,floatX=float32,lib.cnmem=0.5,optimizer_including=cudnn python 03-predict.py

That will process the sample images located in "/home/salgan/images" and store them in "/home/salgan/saliency". To exit the container, run:

>> exit

You migh want to process your own data with your own custom scripts. For that, you can mount different local folders in the container. For example:

>> sudo nvidia-docker run -v $PATH_TO_MY_CODE:/home/code -v $PATH_TO_MY_DATA:/home/data -it --entrypoint='bash' -w /home/

will open a new session in the container, with '/home/code' and '/home/data' folders that will be share with your computer. If you edit your code locally, the changes will be updated automatically in the container. Similarly, all the files generated in '/home/data' will be available in your original data folder.

To train our model from scrath you need to run the following command:

THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32,lib.cnmem=1,optimizer_including=cudnn python 02-train.py

In order to run the test script to predict saliency maps, you can run the following command after specifying the path to you images and the path to the output saliency maps:

THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32,lib.cnmem=1,optimizer_including=cudnn python 03-predict.py

With the provided model weights you should obtain the follwing result:

|

|

|---|

Download the pretrained VGG-16 weights from: vgg16.pkl

Bat-Orgil Batsaikhan and Catherine Qi Zhao from the University of Minnesota released a PyTorch implementation in 2018 as part of their poster "Generative Adversarial Network for Videos and Saliency Map".

We would like to especially thank Albert Gil Moreno and Josep Pujal from our technical support team at the Image Processing Group at the UPC.

|

|

|---|---|

| Albert Gil | Josep Pujal |

| We gratefully acknowledge the support of NVIDIA Corporation with the donation of the GeoForce GTX Titan Z and Titan X used in this work. |  |

| The Image ProcessingGroup at the UPC is a SGR14 Consolidated Research Group recognized and sponsored by the Catalan Government (Generalitat de Catalunya) through its AGAUR office. |  |

| This work has been developed in the framework of the projects BigGraph TEC2013-43935-R and Malegra TEC2016-75976-R, funded by the Spanish Ministerio de Economía y Competitividad and the European Regional Development Fund (ERDF). |  |

| This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under grant number SFI/12/RC/2289. |  |

If you have any general doubt about our work or code which may be of interest for other researchers, please use the public issues section on this github repo. Alternatively, drop us an e-mail at mailto:[email protected].

<script> (function(i,s,o,g,r,a,m){i['GoogleAnalyticsObject']=r;i[r]=i[r]||function(){ (i[r].q=i[r].q||[]).push(arguments)},i[r].l=1*new Date();a=s.createElement(o), m=s.getElementsByTagName(o)[0];a.async=1;a.src=g;m.parentNode.insertBefore(a,m) })(window,document,'script','https://www.google-analytics.com/analytics.js','ga'); ga('create', 'UA-7678045-13', 'auto'); ga('send', 'pageview'); </script>