I am passionate about computer vision, deep learning and software design.

In no particular order, things I am interested in: computer vision, software, martial arts, API design, Neapolitan pizza, procedural generation, pen & paper, developer experience, technical writing, reading, pose estimation, and more that I might find the time to add later 😅.

If you want to reach out, feel free to message me on Twitter (@holylorenzo)!

Here on GitHub, I mainly work on building better tooling for deep learning in Julia. As part of this, I've authored several Julia libraries, including:

- FastAI.jl, a high-level interface for complete deep learning projects, approved by the fastai creators (see the announcement post),

- FluxTraining.jl, an extensible training loop with best practices,

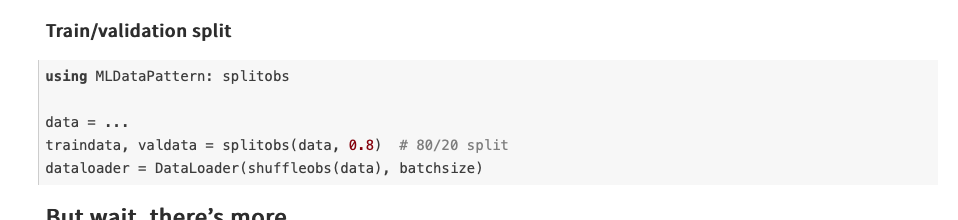

- DataLoaders.jl, an efficient, parallel data loader for larger-than-memory datasets,

- DataAugmentation.jl, high-performance, composable data augmentations for 2D and 3D spatial data like images, segmentation masks, and keypoints,

- Pollen.jl, a documentation system for building beautiful documentation pages for your libraries (see this example page).

I'm also a member of the FluxML organization. If you're interested in contributing, come say hi on the Zulip channel or on the biweekly ML ecosystem call (find the link here).