Describe the bug

The PurviewOut function fails with Forbidden when it loads entities to Purview.

To Reproduce

Steps to reproduce the behavior:

- Set up a connector-only deployment

- Run a notebook that produces lineage
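To tell a Purview-side authorization problem apart from a connector problem, the payload the function logs under "Sending this payload to Purview" can be replayed directly against the Purview Atlas v2 bulk entity endpoint. The sketch below is a minimal, hypothetical helper (not part of the connector) that only builds the request so it can be inspected before sending. It assumes the account name is a placeholder and that a bearer token for the Purview data plane has already been acquired with the same service principal the function uses. Note that the logged sparkPlanDescription value appears without escaped quotes, so it has to be re-escaped (or trimmed) before the payload can be parsed and replayed.

```python
import json
import urllib.error
import urllib.request

# Purview data-plane Atlas v2 bulk entity endpoint; "account" is a placeholder.
BULK_ENTITY_URL = "https://{account}.purview.azure.com/catalog/api/atlas/v2/entity/bulk"


def build_bulk_entity_request(account: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST of an entities payload to Purview."""
    return urllib.request.Request(
        BULK_ENTITY_URL.format(account=account),
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code (403 means Forbidden)."""
    try:
        with urllib.request.urlopen(request, timeout=30) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises for 4xx/5xx responses; the status code is on the exception.
        return err.code
```

For example, send(build_bulk_entity_request("<purview-account>", token, payload)) returning 403 from a machine inside the virtual network linked to the private endpoint would point at role assignments rather than networking. This is a diagnostic heuristic, not a confirmed root cause.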

Expected behavior

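Lineage from the notebook run should appear in the Purview portal. Instead, no lineage shows up, and every attempt by the PurviewOut function to load entities ends with Return Code: Forbidden.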

Logs

```
2022-06-28 15:50:11.071  Information  Executing 'Functions.PurviewOut' (Reason='(null)', Id=a35694d7-3354-4584-99c1-b941a6db96bc)
2022-06-28 15:50:11.071  Information  Trigger Details: PartionId: 0, Offset: 1635232, EnqueueTimeUtc: 2022-06-28T15:50:11.0590000Z, SequenceNumber: 233, Count: 1
2022-06-28 15:50:11.076  Information  Token to access Purview was generated
2022-06-28 15:50:11.077  Information  Got Purview Client!
2022-06-28 15:50:11.077  Information  Enter PurviewOut
2022-06-28 15:50:11.153  Error        OlToPurviewParsingService-GetPurviewFromOlEvent
2022-06-28 15:50:11.154  Information  PurviewOut: {"entities": [{"typeName":"spark_application","guid":"-1","attributes":{"name":"order_data_merge","appType":"notebook","qualifiedName":"notebook://users/[email protected]/order_data_merge"}},{"typeName":"spark_process","guid":"-2","attributes":{"name":"/Users/[email protected]/Order_data_merge/mnt/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data","qualifiedName":"sparkprocess://https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta:https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data","columnMapping":"","executionId":"0","currUser":"","sparkPlanDescription":"{"_producer":"https://github.com/OpenLineage/OpenLineage/tree/0.8.2/integration/spark","_schemaURL":"https://openlineage.io/spec/1-0-2/OpenLineage.json#/$defs/RunFacet","plan":[{"class":"org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand","num-children":0,"query":[{"class":"org.apache.spark.sql.catalyst.plans.logical.Union","num-children":2,"children":[0,1]},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3142,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3143,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_details","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3144,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3145,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3146,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3152,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3153,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_description","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3154,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3155,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3156,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false}],"dataSource":null,"options":null,"mode":null}]}","inputs":[{"typeName":"azure_datalake_gen2_path","uniqueAttributes":{"qualifiedName":"https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta"}},{"typeName":"azure_datalake_gen2_path","uniqueAttributes":{"qualifiedName":"https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_two_data_delta"}}],"outputs":[{"typeName":"azure_datalake_gen2_path","uniqueAttributes":{"qualifiedName":"https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data"}}]},"relationshipAttributes":{"application":{"qualifiedName":"notebook://users/[email protected]/order_data_merge","guid":"-1"}}}]}
2022-06-28 15:50:11.154  Information  Calling SendToPurview
2022-06-28 15:50:11.154  Information  Not found Attribute columnMapping on { "typeName": "spark_application", "guid": "-1", "attributes": { "name": "order_data_merge", "appType": "notebook", "qualifiedName": "notebook://users/[email protected]/order_data_merge" } } i is not a Process Entity!
2022-06-28 15:50:11.154  Information  New Entity Initialized in the process with a passed Purview Client: Nome:order_data_merge - qualified_name:notebook://users/[email protected]/order_data_merge - Guid:-1000
2022-06-28 15:50:11.375  Information  New Entity Initialized in the process with a passed Purview Client: Nome:merged_order_data - qualified_name:https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data - Guid:-1002
2022-06-28 15:50:11.417  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.418  Information  outputs Entity: https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data Type: azure_datalake_gen2_path, Not found, Creating Dummy Entity
2022-06-28 15:50:11.418  Information  Entities to load: { "entities": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1000, "attributes": { "name": "order_one_data_delta", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta", "data_type": "azure_datalake_gen2_path", "description": "Data Assets order_one_data_delta" }, "relationshipAttributes": {} }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1001, "attributes": { "name": "order_two_data_delta", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_two_data_delta", "data_type": "azure_datalake_gen2_path", "description": "Data Assets order_two_data_delta" }, "relationshipAttributes": {} }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1002, "attributes": { "name": "merged_order_data", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "data_type": "azure_datalake_gen2_path", "description": "Data Assets merged_order_data" }, "relationshipAttributes": {} } ] }
2022-06-28 15:50:11.418  Information  Sending this payload to Purview: {"entities": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1000, "attributes": { "name": "order_one_data_delta", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta", "data_type": "azure_datalake_gen2_path", "description": "Data Assets order_one_data_delta" }, "relationshipAttributes": {} }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1001, "attributes": { "name": "order_two_data_delta", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_two_data_delta", "data_type": "azure_datalake_gen2_path", "description": "Data Assets order_two_data_delta" }, "relationshipAttributes": {} }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "guid": -1002, "attributes": { "name": "merged_order_data", "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "data_type": "azure_datalake_gen2_path", "description": "Data Assets merged_order_data" }, "relationshipAttributes": {} } ]}
2022-06-28 15:50:11.458  Error        5c6c45d9-f3ba-437c-b1af-37370288541f
2022-06-28 15:50:11.494  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.494  Information  Processes to load: { "entities": [ { "typeName": "spark_application", "guid": -1000, "attributes": { "name": "order_data_merge", "qualifiedName": "notebook://users/[email protected]/order_data_merge", "data_type": "spark_application", "description": "Data Assets order_data_merge" }, "relationshipAttributes": {} }, { "typeName": "spark_process", "guid": -1001, "attributes": { "name": "/Users/[email protected]/Order_data_merge/mnt/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "qualifiedName": "sparkprocess://https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta:https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "columnMapping": "", "executionId": "0", "currUser": "", "sparkPlanDescription": "{"_producer":"https://github.com/OpenLineage/OpenLineage/tree/0.8.2/integration/spark","_schemaURL":"https://openlineage.io/spec/1-0-2/OpenLineage.json#/$defs/RunFacet","plan":[{"class":"org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand","num-children":0,"query":[{"class":"org.apache.spark.sql.catalyst.plans.logical.Union","num-children":2,"children":[0,1]},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3142,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3143,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_details","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3144,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3145,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3146,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3152,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3153,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_description","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3154,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3155,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3156,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false}],"dataSource":null,"options":null,"mode":null}]}", "inputs": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta" } }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_two_data_delta" } } ], "outputs": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data" } } ] }, "relationshipAttributes": { "application": { "qualifiedName": "notebook://users/[email protected]/order_data_merge", "guid": -1000 } } } ] }
2022-06-28 15:50:11.495  Information  Sending this payload to Purview: {"entities": [ { "typeName": "spark_application", "guid": -1000, "attributes": { "name": "order_data_merge", "qualifiedName": "notebook://users/[email protected]/order_data_merge", "data_type": "spark_application", "description": "Data Assets order_data_merge" }, "relationshipAttributes": {} }, { "typeName": "spark_process", "guid": -1001, "attributes": { "name": "/Users/[email protected]/Order_data_merge/mnt/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "qualifiedName": "sparkprocess://https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta:https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data", "columnMapping": "", "executionId": "0", "currUser": "", "sparkPlanDescription": "{"_producer":"https://github.com/OpenLineage/OpenLineage/tree/0.8.2/integration/spark","_schemaURL":"https://openlineage.io/spec/1-0-2/OpenLineage.json#/$defs/RunFacet","plan":[{"class":"org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand","num-children":0,"query":[{"class":"org.apache.spark.sql.catalyst.plans.logical.Union","num-children":2,"children":[0,1]},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3142,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3143,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_details","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3144,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3145,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3146,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false},{"class":"org.apache.spark.sql.execution.datasources.LogicalRelation","num-children":0,"relation":null,"output":[[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_id","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3152,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_date","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3153,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"product_description","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3154,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"quantity","dataType":"integer","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3155,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}],[{"class":"org.apache.spark.sql.catalyst.expressions.AttributeReference","num-children":0,"name":"order_total","dataType":"string","nullable":true,"metadata":{},"exprId":{"product-class":"org.apache.spark.sql.catalyst.expressions.ExprId","id":3156,"jvmId":"46c5a018-e302-415c-b122-71819d519700"},"qualifier":[]}]],"isStreaming":false}],"dataSource":null,"options":null,"mode":null}]}", "inputs": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_one_data_delta" } }, { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/int/md_supradata_insights/purview/lineage/open_lineage_test/order_two_data_delta" } } ], "outputs": [ { "typeName": "purview_custom_connector_generic_entity_with_columns", "uniqueAttributes": { "qualifiedName": "https://dtdeveusadls.dfs.core.windows.net/cur/md_supradata_insights/purview/lineage/open_lineage_test/merged_order_data" } } ] }, "relationshipAttributes": { "application": { "qualifiedName": "notebook://users/[email protected]/order_data_merge", "guid": -1000 } } } ]}
2022-06-28 15:50:11.564  Error        92710849-1f88-4934-9b9e-f8e2ea3f0baf
2022-06-28 15:50:11.600  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.659  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.697  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.736  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.772  Error        Error Loading to Purview: Return Code: Forbidden - Reason:Forbidden
2022-06-28 15:50:11.772  Information  Executed 'Functions.PurviewOut' (Succeeded, Id=a35694d7-3354-4584-99c1-b941a6db96bc, Duration=701ms)
```
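The log shows a token being generated and then every load failing with Forbidden. An HTTP 403 generally means the caller was identified but is not allowed to perform the operation, so one useful check is which identity and audience the token actually carries, and whether that object ID holds a Purview data-plane role (for example Data Curator) on the target collection. The sketch below is a minimal, hypothetical helper for inspecting a token locally. It assumes you can obtain a token with the same credentials the function uses. It does not validate the signature, and the expected audience https://purview.azure.net is an assumption about how the connector requests its token.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Return the claims from a JWT's payload segment WITHOUT verifying the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a JWT: expected three dot-separated segments")
    segment = parts[1]
    # JWT segments are base64url without padding; restore it before decoding.
    segment += "=" * (-len(segment) % 4)
    return json.loads(base64.urlsafe_b64decode(segment))


def identity_summary(claims: dict) -> dict:
    """Pick out the claims that matter when Purview answers 403 Forbidden."""
    return {
        "aud": claims.get("aud"),  # resource the token was issued for
        "appid": claims.get("appid") or claims.get("azp"),  # client ID (v1: appid, v2: azp)
        "oid": claims.get("oid"),  # object ID that needs a Purview role assignment
        "tid": claims.get("tid"),  # tenant ID
    }
```

If aud is not the Purview resource, or the oid has no role on the collection that should hold these entities, that would be consistent with the Forbidden responses above.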

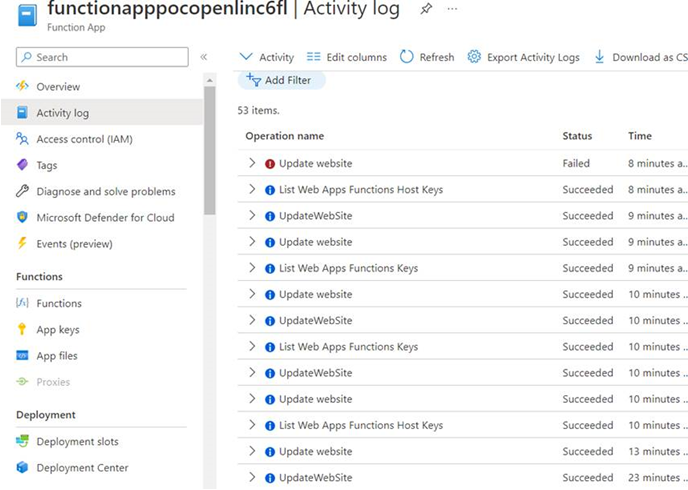

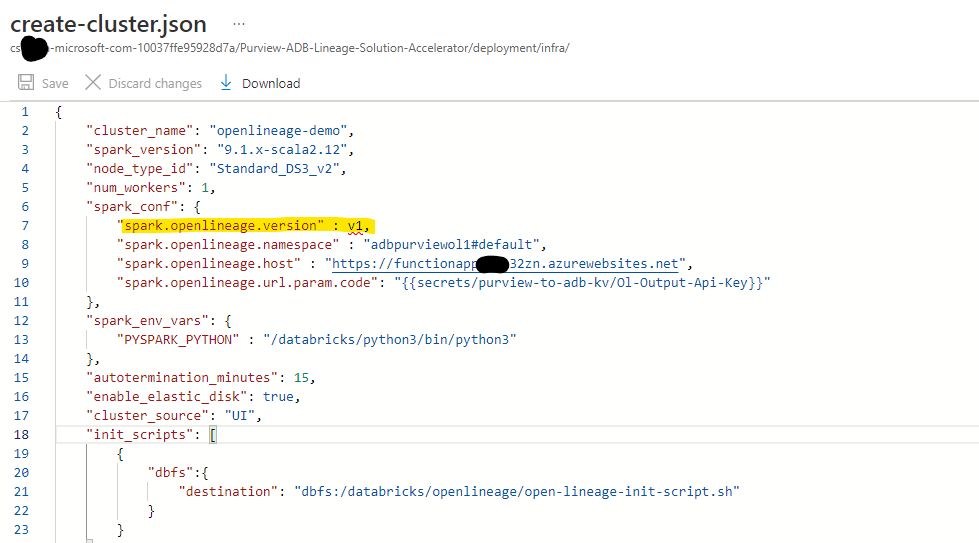

Screenshots

If applicable, add screenshots to help explain your problem.

Environment

- OpenLineage Version: 0.8.2

- Databricks Runtime Version: 7.3

Additional context

The Purview account is configured with a private endpoint.
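Because the Purview account has a private endpoint, another plausible cause (an assumption, not something these logs confirm) is that the function reaches Purview over the public network while public network access is disabled, which Purview can also reject with 403. One quick check is whether the account host name resolves to a private IP address from the function's network. A minimal standard-library sketch; the helper names are hypothetical and the host name in the usage note is a placeholder.

```python
import ipaddress
import socket
from typing import List


def resolved_addresses(hostname: str) -> List[str]:
    """Return the distinct IP addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def all_private(addresses: List[str]) -> bool:
    """True only if there is at least one address and every one is in a private range."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)
```

For example, all_private(resolved_addresses("<purview-account>.purview.azure.com")) returning False when run from the function's network would suggest that the privatelink.purview.azure.com private DNS zone is not linked to that network, or that the Function App is not integrated with the virtual network.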