This is for the 2020 NaNoGenMo. See here for the 2021 repo!

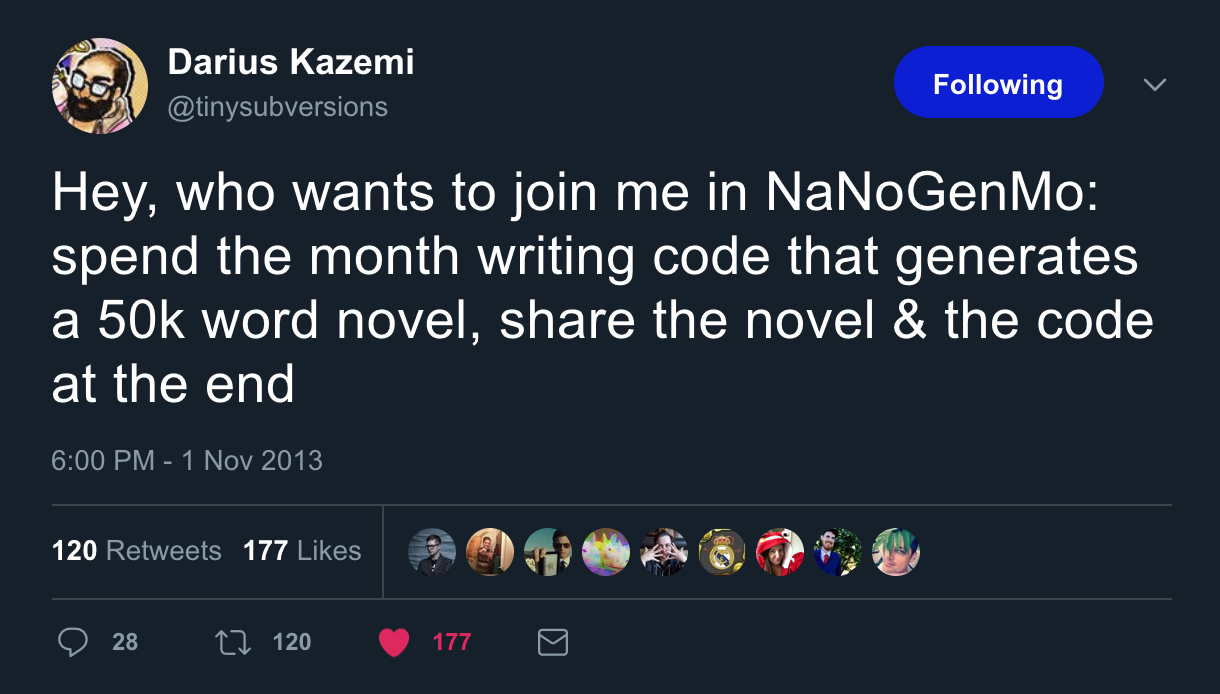

National Novel Generation Month is based on an idea Darius tweeted on a whim, where people are challenged to write code that writes a novel.

This is the 2020 edition. For previous years see:

Spend the month of November writing code that generates a novel of 50k+ words. This is in the spirit of National Novel Writing Month's interesting definition of a novel as 50,000 words of fiction.

The only rule is that you share at least one novel and also your source code at the end.

The source code does not have to be licensed in a particular way, so long as you share it. The code itself does not need to be on GitHub, either. We use this repo as a place to organize the community. (Convenient because many programmers have GitHub accounts and the Issues section works like a forum with excellent syntax highlighting.)

The "novel" is defined however you want. It could be 50,000 repetitions of the word "meow" (and yes it's been done!). It could literally grab a random novel from Project Gutenberg. It doesn't matter, as long as it's 50k+ words.
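For illustration only, here is a minimal sketch of the "meow" approach mentioned above, with a check against the 50k-word threshold. The function names (`meow_novel`, `count_words`) are made up for this example, and counting whitespace-separated tokens is an assumption about what counts as a "word", not an official rule.

```python
TARGET_WORDS = 50_000  # the 50k+ threshold from the rules above


def meow_novel(word_count: int = TARGET_WORDS) -> str:
    """Return a 'novel' made of the word 'meow' repeated word_count times."""
    return " ".join(["meow"] * word_count)


def count_words(text: str) -> int:
    """Count whitespace-separated tokens (an assumed definition of 'word')."""
    return len(text.split())


if __name__ == "__main__":
    novel = meow_novel()
    # Fail loudly if the output falls short of the threshold.
    assert count_words(novel) >= TARGET_WORDS
    with open("novel.txt", "w", encoding="utf-8") as f:
        f.write(novel)
```

A word-count check like this is handy in any generator, since it lets you confirm the output qualifies before you share it.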

Please try to respect copyright. We're not going to police it, as ultimately it's on your head if you want to just copy/paste a Stephen King novel or whatever, but the most useful/interesting implementations are going to be ones that don't engender lawsuits.

This activity starts at 12:01am GMT on Nov 1st and ends at 12:01am GMT on Dec 1st.

Open an issue on this repo and declare your intent to participate. If you already have some inkling of the kind of project you'll be doing, please title your issue accordingly. You may continually update the issue as you work over the course of the month. Feel free to post dev diaries, sample output, etc.

If you're attempting more than one project, feel free to open a new issue for each additional project and keep it up to date as well.

Also feel free to comment on other participants' issues.

Official admins for NaNoGenMo are @dariusk, @hugovk, @MichaelPaulukonis, and @mewo2. We'll be doing our best to keep the issues section well organized and tagged.

There's an open issue where you can add resources (libraries, corpuses, APIs, techniques, etc).

There are already a ton of resources on the old resources threads from 2013, 2014, 2015, 2016, 2017, 2018, and 2019.

You might want to check out corpora, a repository of public domain lists of things: animals, foods, names, occupations, countries, etc.
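As a rough sketch of how lists like these might feed a generator, the example below fills a sentence template from small word lists until a word target is reached. The lists are hypothetical stand-ins typed inline, not the actual contents or file layout of corpora, and the template and function names are invented for this example.

```python
import random

# Hypothetical stand-ins for lists you might load from a resource like corpora.
ANIMALS = ["otter", "heron", "badger", "lynx"]
FOODS = ["dumplings", "rye bread", "plum jam", "lentil soup"]
OCCUPATIONS = ["cartographer", "beekeeper", "locksmith", "glassblower"]


def sentence(rng: random.Random) -> str:
    """Fill a fixed template with random picks from each list."""
    return (
        f"The {rng.choice(OCCUPATIONS)} fed {rng.choice(FOODS)} "
        f"to the {rng.choice(ANIMALS)}."
    )


def generate(min_words: int = 50_000, seed: int = 2020) -> str:
    """Append sentences until the text has at least min_words words."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    sentences = []
    words = 0
    while words < min_words:
        s = sentence(rng)
        sentences.append(s)
        words += len(s.split())
    return " ".join(sentences)


if __name__ == "__main__":
    text = generate()
    print(len(text.split()), "words")
    print(text[:200])
```

Seeding the random generator is a design choice for reproducibility: anyone running your shared source code with the same seed gets the same novel.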

Have fun!