sadnow / animationkit-ai

AnimationKit: AI Upscaling & Interpolation using Real-ESRGAN+RIFE

License: Other

This toggle would allow for automatic backup and retrieval of AnKit and its dependencies to a user-specified location.

360Diffusion did this on a small scale, but not full root directory backups.

https://github.com/sadnow/S2ML-Art-Generator/blob/main/S2ML_Art_Generator.ipynb did this, but I believe it actually made the program operate directly on files in the Drive root directory.

Due to file-speed bottlenecks, it may be more efficient to copy Drive backup files to the Colab session on each run, or to copy them to RAM. Because individual file transfers are the bottleneck, it might be wisest to have this function save a single zip file of the root directory rather than copying files individually.

Backing up /content/ using the usual methods means that all of Google Drive will also be backed up, since Drive is mounted inside /content/.

Potential solution: Download dependencies, create a zip archive of /content/, then mount Drive
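A minimal sketch of the zip step, in case Drive is already mounted by the time the backup runs. It assumes the Drive mount sits in a top-level folder named `drive` under /content/; the function name `zip_tree` and the `exclude_dirs` parameter are hypothetical, not part of AnKit.

```python
import os
import zipfile


def zip_tree(src_dir, zip_path, exclude_dirs=("drive",)):
    """Zip src_dir into zip_path, skipping top-level folders named in exclude_dirs.

    Returns the number of files written to the archive.
    """
    src_dir = os.path.abspath(src_dir)
    zip_path = os.path.abspath(zip_path)
    count = 0
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, dirs, files in os.walk(src_dir):
            if root == src_dir:
                # Prune excluded top-level folders (e.g. the Drive mount point).
                dirs[:] = [d for d in dirs if d not in exclude_dirs]
            for name in files:
                full = os.path.join(root, name)
                if full == zip_path:
                    continue  # never add the archive to itself
                zf.write(full, os.path.relpath(full, src_dir))
                count += 1
    return count
```

Something like `zip_tree("/content", "/content/ankit_backup.zip")` followed by a single copy of the zip to Drive would avoid the per-file transfer bottleneck; the archive name here is only a placeholder.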

Real-ESRGAN offers a semi-newish inference_realesrgan_video.py, which does some of the previous ffmpeg work AnKit was doing by default (and also supports the newer anime-video models). However, AnKit's original chaining method (automatically transitioning from Source frames /mp4 > Real-ESRGAN > RIFE > Output destination) may still have uses with alternate image or video up-scaling tech, if/when future implementations ever occur.

This issue tracks the two planned implementations for chaining methods (which will be distinguished as method_a and method_b in code):

Hurdle: "error: unrecognized arguments: --netscale 4"

Originally posted by @sadnow in #2 (comment)

Method B chaining is the first method being tested with keep_audio (#11). If a user inputs an mp4 that doesn't contain audio, they will receive this error:

Stream map '1:a:0' matches no streams.

To ignore this, add a trailing '?' to the map.

We want to have AnKit verify whether a file contains sound and, if it does not (while keep_sound is checked), automatically disable keep_sound to prevent the error from occurring.
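One possible way to do the check, sketched with ffprobe (which ships with ffmpeg). The helper names `probe_streams`, `has_audio_stream` and `resolve_keep_audio` are hypothetical, and the toggle is called keep_audio here although it is also written as keep_sound above.

```python
import json
import subprocess


def has_audio_stream(probe):
    """Return True if parsed ffprobe JSON lists at least one audio stream."""
    return any(s.get("codec_type") == "audio" for s in probe.get("streams", []))


def probe_streams(path):
    """Run ffprobe on path and return its stream info as a dict."""
    out = subprocess.run(
        ["ffprobe", "-v", "error", "-show_streams", "-of", "json", path],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


def resolve_keep_audio(keep_audio, probe):
    """Disable keep_audio when the source has no audio to copy."""
    return keep_audio and has_audio_stream(probe)
```

In the notebook this might be called as `keep_audio = resolve_keep_audio(keep_audio, probe_streams(input_mp4))`, where `input_mp4` stands for whatever variable holds the source path. The alternative is the one ffmpeg itself suggests: write the map as `1:a:0?` so a missing audio stream is ignored.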

I LOVE THIS NOTEBOOK! Major props. One question, the first few videos came out H265 and perfect, but after a few times running the notebook with different videos/input frame folders, the video fails to compress properly? Example, the video will save to my drive and will be available for download, but once I download and attempt to play the video, it won't open on any media player, yet will play while still loaded in my drive? I'm thinking that it could be a compression issue, but any help will be appreciated as I LOVE this notebook, if I haven't said it again. Thanks in advance, and amazing work!

Since my temporary departure from developments 2-3 months ago, a variety of bugs have sprung up, likely as a result of ever-changing dependencies. This thread will track the status of bringing AnKit back to its previous functioning state.

Some programs output as 1.jpg, 2.jpg, 3.jpg, etc., whereas others output as 001.jpg, 002.jpg, etc.

Right now, the non-botched build does the former.

I need to make it auto-recognize the flow of a directory and have it sort accordingly.
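A rough sketch of a sort that handles both naming schemes without having to detect which one a directory uses: order by the numeric value of the frame number rather than by the string. The names `frame_sort_key` and `sort_frames` are made up for illustration.

```python
import re


def frame_sort_key(filename):
    """Sort key: the last run of digits in the name (as an int), then the name."""
    stem = filename.rsplit(".", 1)[0]  # drop the extension so 'mp4' etc. is ignored
    numbers = re.findall(r"\d+", stem)
    return (int(numbers[-1]) if numbers else -1, filename)


def sort_frames(filenames):
    """Return filenames in frame order, whether or not they are zero-padded."""
    return sorted(filenames, key=frame_sort_key)
```

With this key, `10.jpg` sorts after `2.jpg`, and `010.jpg` after `002.jpg`; files without any number sort first.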

a16434f hides the following options:

"'RealESRGANv2-anime-xsx2','RealESRGANv2-animevideo-xsx2-nousm','RealESRGANv2-animevideo-xsx2','RealESRGANv2-anime-xsx4','RealESRGANv2-animevideo-xsx4-nousm','RealESRGANv2-animevideo-xsx4'"

Originally posted by @sadnow in #5 (comment)

These newer Real-ESRGAN functions will be tested and integrated. Prior functions will be preserved and left as a toggle for users.

For documentation, I guess I could refer to this as Method-A & Method-B AnKit Chaining. Sounds fancy.

Reference links: https://github.com/xinntao/Real-ESRGAN/blob/master/docs/anime_video_model.md

https://stackoverflow.com/questions/678236/how-to-get-the-filename-without-the-extension-from-a-path-in-python?rq=1

--- Code overhaul

This would also keep_duration. Feature originally attempted in d8d62fd but abandoned due to confusing coding practices

For implementing Method A compatibility, see the ffmpeg examples on https://github.com/xinntao/Real-ESRGAN/blob/master/docs/anime_video_model.md

hmm, getting the following error since this afternoon:

Creating /content/frames_storage/upscaled_frame_storage ...
/content/Real-ESRGAN
Traceback (most recent call last):
  File "inference_realesrgan.py", line 128, in <module>
    main()
  File "inference_realesrgan.py", line 84, in main
    from gfpgan import GFPGANer
  File "/usr/local/lib/python3.7/dist-packages/gfpgan/__init__.py", line 2, in <module>
    from .archs import *
  File "/usr/local/lib/python3.7/dist-packages/gfpgan/archs/__init__.py", line 10, in <module>
    _arch_modules = [importlib.import_module(f'gfpgan.archs.{file_name}') for file_name in arch_filenames]
  File "/usr/local/lib/python3.7/dist-packages/gfpgan/archs/__init__.py", line 10, in <listcomp>
    _arch_modules = [importlib.import_module(f'gfpgan.archs.{file_name}') for file_name in arch_filenames]
  File "/usr/lib/python3.7/importlib/__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "/usr/local/lib/python3.7/dist-packages/gfpgan/archs/gfpganv1_cleanonnx_arch.py", line 8, in <module>
    from .stylegan2_cleanonnx_arch import StyleGAN2GeneratorCleanONNX
ModuleNotFoundError: No module named 'gfpgan.archs.stylegan2_cleanonnx_arch'

Investigating.

In the following gist, I'm using the following for splitframes:

!ffmpeg -r 23.98 -pattern_type glob -i '*.png' -c:v libx264 -r 23.98 -pix_fmt yuv420p '/content/frames_storage/init_frame_storage/frames2video.mp4' #documented example realsrgan-video.md

Bug occurs with individual_frames on.

Attempted workarounds:

Occurs in https://gist.github.com/sadnow/0da9e55b7ae5e3ae2203560cd22d1cc8

Temporary bandaid: I am disabling fps autodetect for individual_frames configs & setting to 24.
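For reference, a small sketch of how the fallback could be folded into the autodetect itself rather than disabling it. ffprobe reports frame rates as fractions such as `24000/1001`; the function name `parse_frame_rate` and the idea of passing it a missing or unusable value for frame folders are assumptions, not how AnKit currently works.

```python
from fractions import Fraction


def parse_frame_rate(rate, fallback=24.0):
    """Convert an ffprobe-style rate such as '24000/1001' to a float.

    Returns fallback when the value is missing, malformed, or not positive.
    """
    try:
        value = float(Fraction(rate))
    except (TypeError, ValueError, ZeroDivisionError):
        return fallback
    return value if value > 0 else fallback
```

A folder of individual frames has no container to probe, so the caller would pass `None` (or whatever the probe returned) and get 24 back, matching the bandaid above.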

This saves a tiny bit of space in the UI.

"inference_realesrgan.py: error: unrecognized arguments: --model_path /content/Real-ESRGAN/experiments/pretrained_models/RealESRGAN_x4plus.pth --netscale 4

/content/Real-ESRGAN/results"

Re-evaluating available params

Originally posted by @sadnow in #2 (comment)

This is an evolution of the previous classes and functions AnKit utilized pre-2.5.

Rough draft:

import os
import shutil

class SadKit:
    def __init__(self):
        ## instantiating the 'Inner' classes
        #self.debug = self.Debug()
        self.path = self.Path()
        self.image_tools = self.Image_tools()
        #Ank = self.AnimationKit_colab
        # self.dprint = self.dprint
        #dprint = self.debug_print

    def debug_print(self, msg): #debug print
        print(msg) #make this colored eventually
        #code to clear screen

    class Image_tools:
        def frames2video(self, input):
            #!ffmpeg -framerate $_target_fps -pattern_type glob -i '*.png' -y '/content/frames2video.mp4' #old method
            print("RUNNING THE EXPERIMENTAL")
            !ffmpeg -r 24 -pattern_type glob -i '*.png' -c:v libx264 -r 23.98 -pix_fmt yuv420p '/content/frames_storage/init_frame_storage/frames2video.mp4' #documented example realsrgan-video.md
            #note: detect_fps might be wonky

    class Path:
        #intent(s): detect, create, insert, type_id, remove_extension, append_prefix, append_suffix

        def detect_dir(self, input): #/content/example.mp4 would become /content/
            return os.path.dirname(input)

        def make(self, input):
            !mkdir -p $input

        def type_id(self, input): #determine whether file or directory
            #types: file,dir
            input = self.sanitize(input)
            if os.path.isdir(input): kind = 'dir'
            elif os.path.isfile(input): kind = 'file'
            else: kind = 'error'
            #print(kind)
            return kind
            #else: print("ERROR: Your input file is neither an mp4 nor a frame directory.")

        def sanitize(self, input):
            return os.path.normpath(input)

        def filter_by_extension(self, input_dir, output_dir, file_ext): #sorts a dir's files by extension and copies matching files to new dir
            #print("\n Beginning sortFrames... ")
            if not self.query(output_dir):
                self.make(output_dir)
            #print("\n Copying frames to "+'/content/frames_storage/init_frame_storage'+" for processing...\n")
            %cd $input_dir
            #!find -maxdepth 1 -name '*.png' -print0 | xargs -0 cp -t '/content/frames_storage/init_frame_storage'
            !find -maxdepth 1 -name '*.'$file_ext -print0 | xargs -0 cp -t $output_dir
            %cd $output_dir
            !find . -type f -name "*."$file_ext -execdir bash -c 'mv "$0" "${0##*_}"' {} \; #removes anything not numbered (junk files) from new dir
            self.dir_file_suffix_padding(output_dir)
            print("\n Finished copying frames to "+output_dir+".\n")
            print("Completed sortFrames... \n")

        def dir_file_suffix_padding(self, input_dir): #pads all filenames within a dir
            %cd $input_dir
            !rename 's/\d+/sprintf("%05d",$&)/e' * #adds padding to numbers
            return

        def query(self, input): #True if the path exists as a file or a directory
            return self.type_id(input) in ('file', 'dir')

        def upload(self, input):
            from google.colab import files #only available inside Colab
            # upload images
            upload_folder = 'upload'
            result_folder = 'results'
            if os.path.isdir(upload_folder):
                shutil.rmtree(upload_folder)
            if os.path.isdir(result_folder):
                shutil.rmtree(result_folder)
            os.mkdir(upload_folder)
            os.mkdir(result_folder)
            #
            uploaded = files.upload()
            for filename in uploaded.keys():
                dst_path = os.path.join(upload_folder, filename)
                print(f'move {filename} to {dst_path}')
                shutil.move(filename, dst_path)

######################

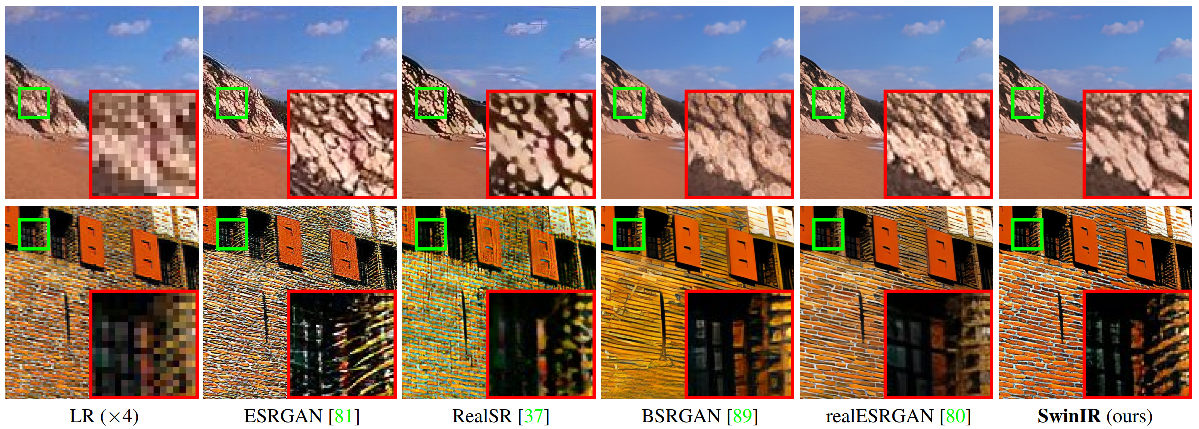

This would help to highlight the differences between different tool models and versions of AnKit.

Currently looking into reusing the technique found in cell 4 of the Real-ESRGAN Colab demo, named "Visualization" (https://colab.research.google.com/drive/1k2Zod6kSHEvraybHl50Lys0LerhyTMCo).

Currently, there's a checkbox to import_mp4_file, which cancels out importing from individual frame paths.

#207 I think we implemented a proper processing method.

Originally posted by @hzwer in hzwer/ECCV2022-RIFE#137 (comment)

OOP was something I was originally aiming for. This will require a rewrite of some of the code but will hopefully ultimately be easier to work with.

Commits will be tracked here.

Macrogoals

Microgoals

When I try running an MP4 input I get this error.

Any ideas?

Hi there, your Notebook is very cool !

A couple of - maybe interesting - suggestions:

Hope that inspires !

It appears all that needs to be done is frames2video, then passing the compiled mp4 through new_method (formerly method_b).

Official example in animevideo docs: ffmpeg -r 23.98 -i out_frames/frame%08d.jpg -c:v libx264 -r 23.98 -pix_fmt yuv420p output.mp4

A2.6 implementation: !ffmpeg -framerate $_target_fps -pattern_type glob -i '*.png' -y '/content/frames2video.mp4'
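The two commands above could be merged along these lines: keep the glob input from the A2.6 implementation and add the codec, rate and pixel-format flags from the official example. This is only a sketch; `frames2video_cmd` and `run_frames2video` are hypothetical helpers, and every flag is taken from the two lines above.

```python
import subprocess


def frames2video_cmd(fps, output, pattern="*.png"):
    """Build the ffmpeg argument list for compiling frames into an mp4."""
    fps = str(fps)
    return [
        "ffmpeg", "-y",                            # overwrite output, as in A2.6
        "-r", fps,                                 # input frame rate
        "-pattern_type", "glob", "-i", pattern,    # glob input, as in A2.6
        "-c:v", "libx264",                         # from the official example
        "-r", fps,                                 # output frame rate
        "-pix_fmt", "yuv420p",
        output,
    ]


def run_frames2video(frames_dir, fps, output, pattern="*.png"):
    """Run ffmpeg from inside frames_dir so the glob pattern resolves there."""
    subprocess.run(frames2video_cmd(fps, output, pattern), check=True, cwd=frames_dir)
```

Building the command as a list avoids the shell quoting that the `!ffmpeg` form depends on, and passing `fps` once keeps the input and output rates from drifting apart.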

a16434f offers options for different upscaling models (Real-ESRGAN), but Practical-RIFE also has multiple models with varying pros and cons.

The following snippet is for archival purposes:

Thanks @hzwer for the information. Could you give me the link to the best model that is best for anime?

quality (both 2D and 3D):

2.3 > 2.4 > 4.0 > 3.1 > 3.9 > 3.8

speed:

4.0 > 3.9 > 3.8 > 3.1 > 2.3/2.4

I don't recommend using other versions as they are either redundant or broken.

Originally posted by @PweSol in hzwer/Practical-RIFE#10 (comment)
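If this ends up as a toggle in the UI, the rankings quoted above could be kept as plain lists and looked up like this. A sketch only: `pick_rife_model` and its parameters are hypothetical, and the orderings are copied from the quoted comment, not measured.

```python
# Rankings as quoted above, best first.
QUALITY_ORDER = ["2.3", "2.4", "4.0", "3.1", "3.9", "3.8"]
SPEED_ORDER = ["4.0", "3.9", "3.8", "3.1", "2.3", "2.4"]  # 2.3 and 2.4 are tied last


def pick_rife_model(prefer="quality", available=None):
    """Return the best-ranked model version, optionally limited to available ones."""
    order = QUALITY_ORDER if prefer == "quality" else SPEED_ORDER
    for version in order:
        if available is None or version in available:
            return version
    return None
```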

I'm using AnimationKit-AI on Google Colab and CUDA is running out of memory. I want to specify the tile size; please tell me where to insert the code. Which code do I put it in?

↓URL

https://colab.research.google.com/github/sadnow/AnimationKit-AI_Upscaling-Interpolation_RIFE-RealESRGAN/blob/main/AnimationKit_Rife_RealESRGAN_Upscaling_Interpolation.ipynb#scrollTo=MhMORNgduDyt

Error message "

Error CUDA out of memory.Tried to allocate 7.91 GiB (GPU 0; 14.76 GiB total capacity; 2.51 GiB already allocated; 2.81 GiB free; 10.90 GiB reserved in total by PyTorch) If reserved memory is more than allocated memory, try setting max_split_size_mb to prevent fragmentation. See the documentation on memory management and PYTORCH_CUDA_ALLOC_CONF.

If CUDA runs out of memory, try setting -tile to a smaller number.

Test 428 A00429

"
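Following the hint in the error text, one approach is to retry with progressively smaller tiles until the upscale fits in memory. The sketch below assumes a callable `run_upscale(tile)` that performs one upscaling pass and raises a `RuntimeError` mentioning "out of memory" on failure; that callable, and the names `tile_sizes` and `upscale_with_retry`, are hypothetical and not part of AnKit or Real-ESRGAN.

```python
def tile_sizes(start=512, minimum=64):
    """Yield progressively smaller tile sizes: start, start // 2, ... down to minimum."""
    size = start
    while size >= minimum:
        yield size
        size //= 2


def upscale_with_retry(run_upscale, start=512, minimum=64):
    """Call run_upscale(tile) with shrinking tiles until one fits in GPU memory.

    Returns the tile size that succeeded.
    """
    for tile in tile_sizes(start, minimum):
        try:
            run_upscale(tile)
            return tile
        except RuntimeError as err:
            if "out of memory" not in str(err):
                raise  # unrelated failure: do not hide it
    raise RuntimeError("CUDA out of memory even at the smallest tile size tried")
```

In the notebook itself the upscaler is launched as a shell command, so in practice the tile value would be appended to that command using the tile option the message refers to, and a failed run would be detected from the exit status rather than from an exception.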
