Comments (19)

For future reference, this Stack Overflow thread provides insight into some options that give us better mesh-oriented folds:

https://stackoverflow.com/a/53908416/764307

from augraphy.

This feature has also been on my mind, and I would like to work on it in my next PR.

@shaheryar1 Have you had a chance to work out some ideas for generating random 2D maps that simulate these 3D effects? If so, can you share a code branch where you are working on this so that others can provide feedback and ideas?

DocCreator's 3D transforms use a library of 3D meshes that warp the document and apply a ray casting type shader. However, that method is substantially more complicated than what is needed to emulate the intended effects. If we can use simpler transforms, then we can maintain higher performance and more maintainable code.

For example, we can start with just the affine warps and worry about the variable brightness as a secondary concern. For the warp, we have to start with just the question of how we create the 2D transformation map (without actually adding a 3rd dimension for the depth/altitude of the positional shift, which would be used for relative darkness/brightness based on direction of light source).

As parameters for generating the 2D mesh, I figure we might randomize things like the gradient (angle of ascent/descent), the intensity (max peak/valley), and the angle of the fold's central line relative to the page. There are probably better params and names than these.

But I'm not sure how one would create 2D meshes using math functions or these params. Do you or others have some ideas?
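One possible starting point, sketched under stated assumptions: treat a fold as a 2D displacement map, where each output pixel records how far to shift before sampling the original page. The snippet assumes a vertical fold, a linear "tent" profile (the shift is largest on the fold's central line and falls to zero `half_width` pixels away), and nearest-neighbour sampling. `fold_displacement_map`, `remap_vertical`, `half_width` and `intensity` are hypothetical names, not part of augraphy.

```python
import numpy as np


def fold_displacement_map(height, width, fold_x, half_width, intensity):
    """Hypothetical helper: vertical displacement map for one vertical fold.

    The shift is `intensity` pixels on the fold line and falls off linearly
    to 0 at `half_width` pixels on either side (a "tent" profile).
    """
    xs = np.arange(width, dtype=np.float64)
    profile = np.clip(1.0 - np.abs(xs - fold_x) / half_width, 0.0, 1.0)
    # Same shift for every row: the fold runs from top to bottom.
    return np.tile(profile * intensity, (height, 1))


def remap_vertical(image, dy):
    """Backward mapping with nearest-neighbour sampling:
    output[y, x] = image[y + dy[y, x], x], clamped to the image border."""
    h, w = image.shape[:2]
    ys, xs = np.indices((h, w))
    src_y = np.clip(np.rint(ys + dy), 0, h - 1).astype(np.intp)
    return image[src_y, xs]


# A horizontal line across a 10x21 image bends upward at the fold.
page = np.zeros((10, 21), dtype=np.uint8)
page[5, :] = 255
dy = fold_displacement_map(10, 21, fold_x=10, half_width=5, intensity=3)
folded = remap_vertical(page, dy)
```

The same profile values could later serve as the missing third dimension (altitude) for brightness. That would keep the warp and the lighting as separate steps.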

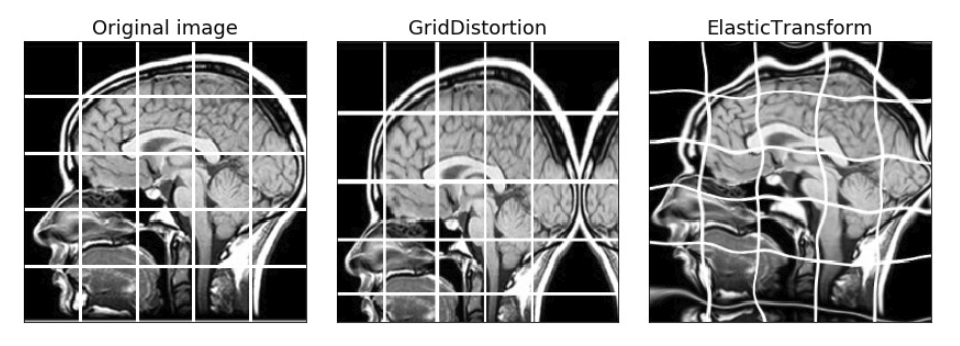

In addition to the scikit-image affine warp implementation mentioned in the issue, we might borrow ideas from the Albumentations implementation of the elastic warp transform.

@shaheryar1 are you still working on this? Otherwise I will look further into how to implement it. Actually, I think it would be better to avoid those 3D meshes, since they would make this much more complicated. An affine warp should be able to do something like this, but it will definitely need more work if we want it to look natural.

The affine warp requires a 2D "mesh" of sorts. Basically, the mesh represents the "before" and "after" positions of key points fed into the warp processing.
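To make the "before"/"after" idea concrete: a single affine transform is a 2x3 matrix, and it can be fitted from key-point pairs with least squares. Below is a numpy-only sketch with hypothetical helper names. For exactly three non-collinear points it should agree with OpenCV's `cv2.getAffineTransform`. One affine matrix cannot bend a page, so a mesh of key points would be split into triangles with one matrix each. That is roughly what scikit-image's `PiecewiseAffineTransform` does.

```python
import numpy as np


def affine_from_points(src, dst):
    """Least-squares 2x3 affine matrix M such that dst ~= M @ [x, y, 1].

    Needs at least 3 non-collinear point pairs; with exactly 3 the fit is exact.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    # Homogeneous source coordinates: one row [x, y, 1] per key point.
    a = np.hstack([src, np.ones((len(src), 1))])
    m_t, *_ = np.linalg.lstsq(a, dst, rcond=None)  # shape (3, 2)
    return m_t.T  # shape (2, 3)


def apply_affine(m, points):
    """Map an (N, 2) array of points through the 2x3 matrix m."""
    points = np.asarray(points, dtype=np.float64)
    return points @ m[:, :2].T + m[:, 2]


# "Before" and "after" positions of three key points: a shift by (2, 3).
before = [[0, 0], [1, 0], [0, 1]]
after = [[2, 3], [3, 3], [2, 4]]
m = affine_from_points(before, after)
```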

If we get to adding lighting or differing brightness/darkness levels, then a 3rd dimension of the mesh will be required to represent a "z index". But we will want to get the warp functional before worrying about brightness or darkness.

@shaheryar1 is going to work on #13 first. So @kwcckw, feel free to work on an implementation of this feature and we can all collaborate on a solution based on the results of your initial experiments.

I researched this but didn't find much literature on it. For initial experiments, I thought of applying a 2D affine transformation to a sub-part of the image, i.e. a straight vertical/horizontal strip 5-10 pixels wide. But I did not get a chance to try this approach. @kwcckw you can try this idea to kick-start this.

> I researched this but didn't find much literature on it. For initial experiments, I thought of applying a 2D affine transformation to a sub-part of the image, i.e. a straight vertical/horizontal strip 5-10 pixels wide. But I did not get a chance to try this approach. @kwcckw you can try this idea to kick-start this.

Thanks. Following the suggestion by @jboarman, I'm thinking of trying the grid distortion and elastic transform from here:

https://github.com/albumentations-team/albumentations

Possibly a combination of the two as well.
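For reference, the elastic transform in Albumentations follows the classic recipe from Simard et al. (2003). It draws a random displacement per pixel, blurs it with a Gaussian of width `sigma`, and scales it by `alpha`. Below is a numpy-only sketch of that recipe, not Albumentations' code. The helper names are hypothetical, sampling is nearest-neighbour, and the parameter values are only illustrative. `alpha` has to be fairly large because blurring shrinks the random field. Grid distortion works differently: roughly, it stretches or squeezes the cells of a coarse grid instead of moving each pixel independently.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel covering about +/- 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def blur_1d(values, kernel):
    """Convolve one row/column, padding with edge values so length is kept."""
    r = len(kernel) // 2
    return np.convolve(np.pad(values, r, mode="edge"), kernel, mode="valid")


def smooth(field, sigma):
    """Separable Gaussian blur: rows first, then columns."""
    k = gaussian_kernel(sigma)
    field = np.apply_along_axis(blur_1d, 1, field, k)
    return np.apply_along_axis(blur_1d, 0, field, k)


def elastic_displacement(height, width, alpha, sigma, rng):
    """Random per-pixel shifts in [-1, 1], smoothed by sigma, scaled by alpha."""
    dx = smooth(rng.uniform(-1.0, 1.0, (height, width)), sigma) * alpha
    dy = smooth(rng.uniform(-1.0, 1.0, (height, width)), sigma) * alpha
    return dx, dy


def remap(image, dx, dy):
    """Nearest-neighbour backward mapping: output[y, x] = image[y + dy, x + dx]."""
    h, w = image.shape[:2]
    ys, xs = np.indices((h, w))
    src_y = np.clip(np.rint(ys + dy), 0, h - 1).astype(np.intp)
    src_x = np.clip(np.rint(xs + dx), 0, w - 1).astype(np.intp)
    return image[src_y, src_x]


rng = np.random.default_rng(0)
page = np.zeros((64, 64), dtype=np.uint8)
page[::8, :] = 255  # horizontal "text lines"
dx, dy = elastic_displacement(64, 64, alpha=30.0, sigma=4.0, rng=rng)
warped = remap(page, dx, dy)
```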

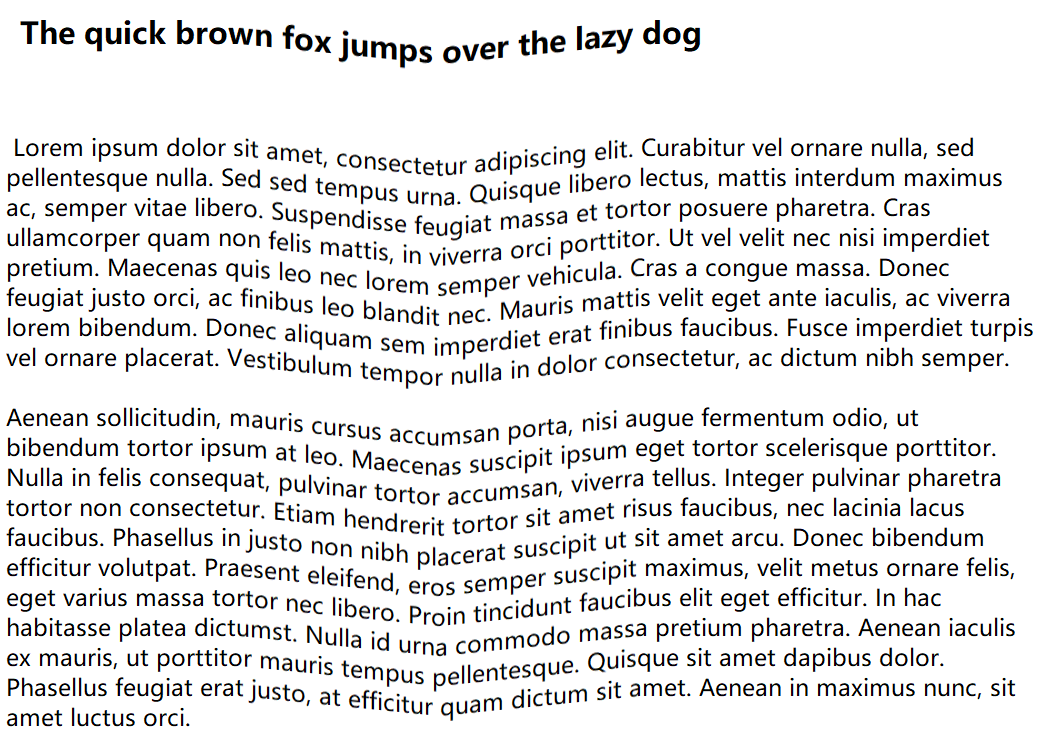

This is the result from 2 affine transform:

Looks like we need further lighting related processing to make it looks more natural, specifically on the folding area.

That’s a great first start. Can you share your code in some way? As a Colab notebook would be ideal, but even as a gist would be OK.

To get the lighting, we have to translate the implied mesh into a mask that we add to the warped image. For each position, we are either descending or ascending, so the value is a positive or negative number. Adding that layer to the base, while ensuring no pixel goes below 0 or above 255, will then darken or lighten the image.

For a simple BW image like that example, we’ll only see darkening. Direction of lighting can be dealt with later.
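Here is a minimal sketch of that masking step. It assumes the implied mesh is available as a 2D altitude array (for example a tent-shaped fold profile). It also assumes light comes from the left, as a placeholder until lighting direction is handled. The per-pixel offset is the horizontal slope of the altitude times a `strength` factor; that offset is added to the warped image and the result is clipped to 0..255. `shade_from_altitude` and `strength` are hypothetical names.

```python
import numpy as np


def shade_from_altitude(image, altitude, strength):
    """Hypothetical helper: lighten or darken pixels by the local fold slope.

    altitude: 2D array with the image's height and width (the implied "z").
    strength: gray levels added per unit of slope (rise per pixel).
    Assumes light from the left: ascending slopes (facing left) get a
    positive offset and descending slopes a negative one.
    """
    slope = np.gradient(altitude.astype(np.float64), axis=1)
    mask = slope * strength
    if image.ndim == 3:
        mask = mask[..., np.newaxis]  # same offset for every color channel
    shaded = image.astype(np.float64) + mask
    # Keep every pixel inside the valid 0..255 range.
    return np.clip(shaded, 0, 255).astype(np.uint8)


# A white page with a tent-shaped fold.
altitude = np.tile(np.array([0, 1, 2, 3, 4, 3, 2, 1, 0]), (4, 1))
page = np.full((4, 9), 255, dtype=np.uint8)
shaded = shade_from_altitude(page, altitude, strength=50)
```

On this white page, the positive offsets are clipped away at 255 and only the descending side changes (to 205). This matches the point that a simple BW image will only show darkening.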

> That’s a great first start. Can you share your code in some way? As a Colab notebook would be ideal, but even as a gist would be OK.
>
> To get the lighting, we have to translate the implied mesh into a mask that we add to the warped image. For each position, we are either descending or ascending, so the value is a positive or negative number. Adding that layer to the base, while ensuring no pixel goes below 0 or above 255, will then darken or lighten the image.
>
> For a simple BW image like that example, we’ll only see darkening. Direction of lighting can be dealt with later.

Okay, but first I need to figure out the general function for shifting the image after the transformation. Google Colab should be good enough for testing the code.

Here is the draft of the code in Google Colab:

https://drive.google.com/drive/folders/1t4UBurLbD-asR9NvERhoOVQSnkgKyrL0?usp=sharing

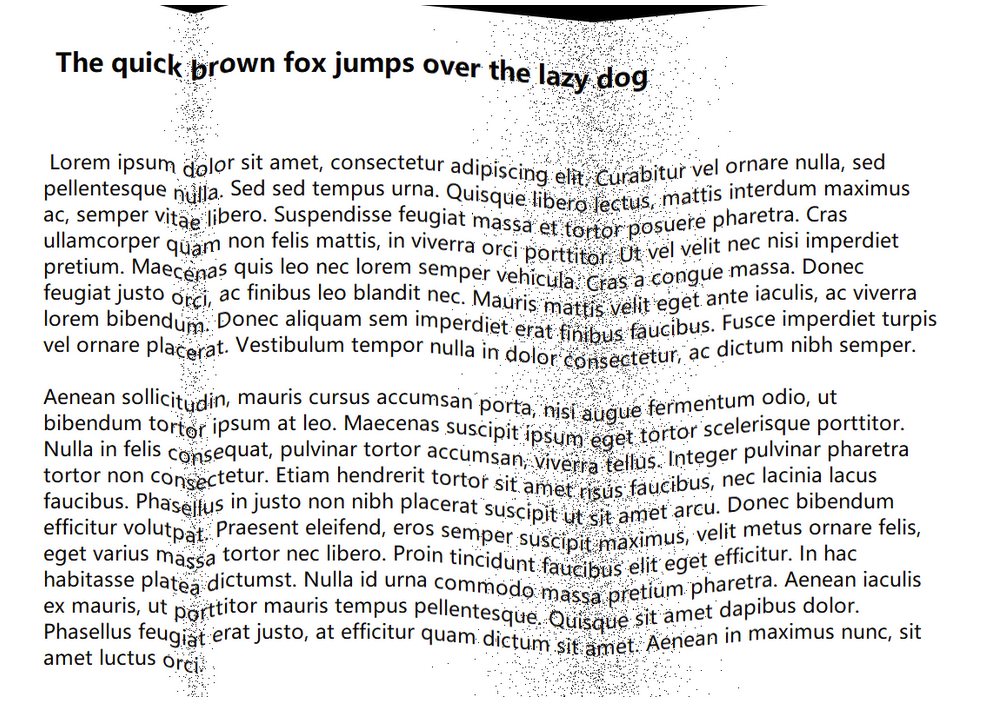

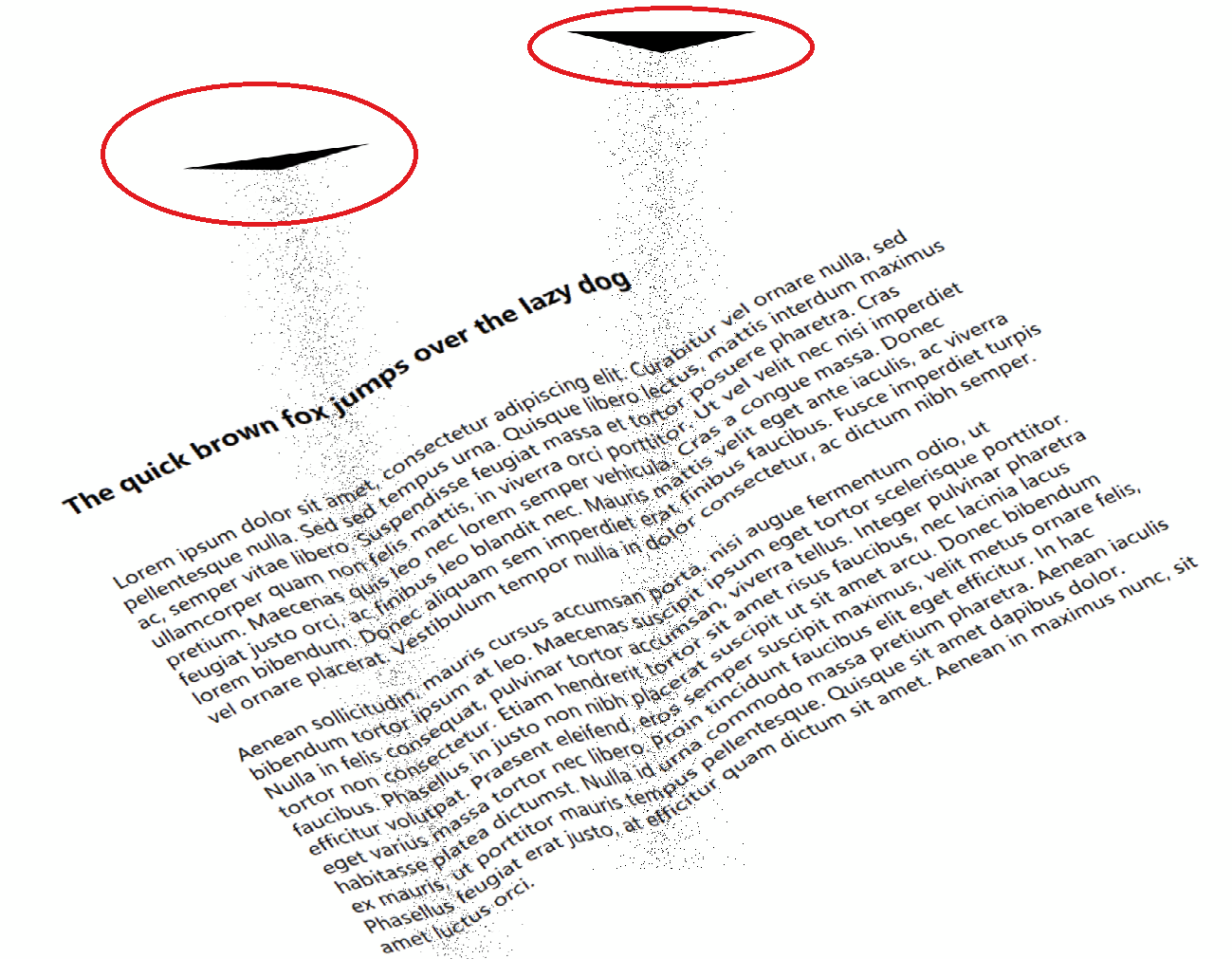

I added some noises and we are able to fold 2 locations too:

I think it looks much more better now with the noises.

This is a great starting point. We should go ahead and proceed with creating a PR based on this implementation since it is viable as it is now. We should continue to track this as an open issue, or split out a V2 for a more advanced semi-3D implementation down the road, but this is great as it is now.

What parameters do you see that we should randomize for this version of the implementation?

Some ideas for options and potential default values:

* `fold_count = [0,1,2,3]` - randomly selected # of folds on page
* `orientation = [90, 45, 0, (35, 55)]` - angle of fold's central line relative to page; where tuple represents a fluid range of angle values
* `orientation_jitter = 10` - degrees of fold angle variation
* `fold_noise = 0.5` - intensity of noise from 0..1; should just embed some level of jitter in this as I don't think it's necessary to parameterize noise jitter
* `gradient_width = 0.01` - simplistic measure of the space affected by fold prior to being warped (in units of percentage of width of page)
* `gradient_height = 0.01` - simplistic measure of depth of fold (unit measured as percentage page width)

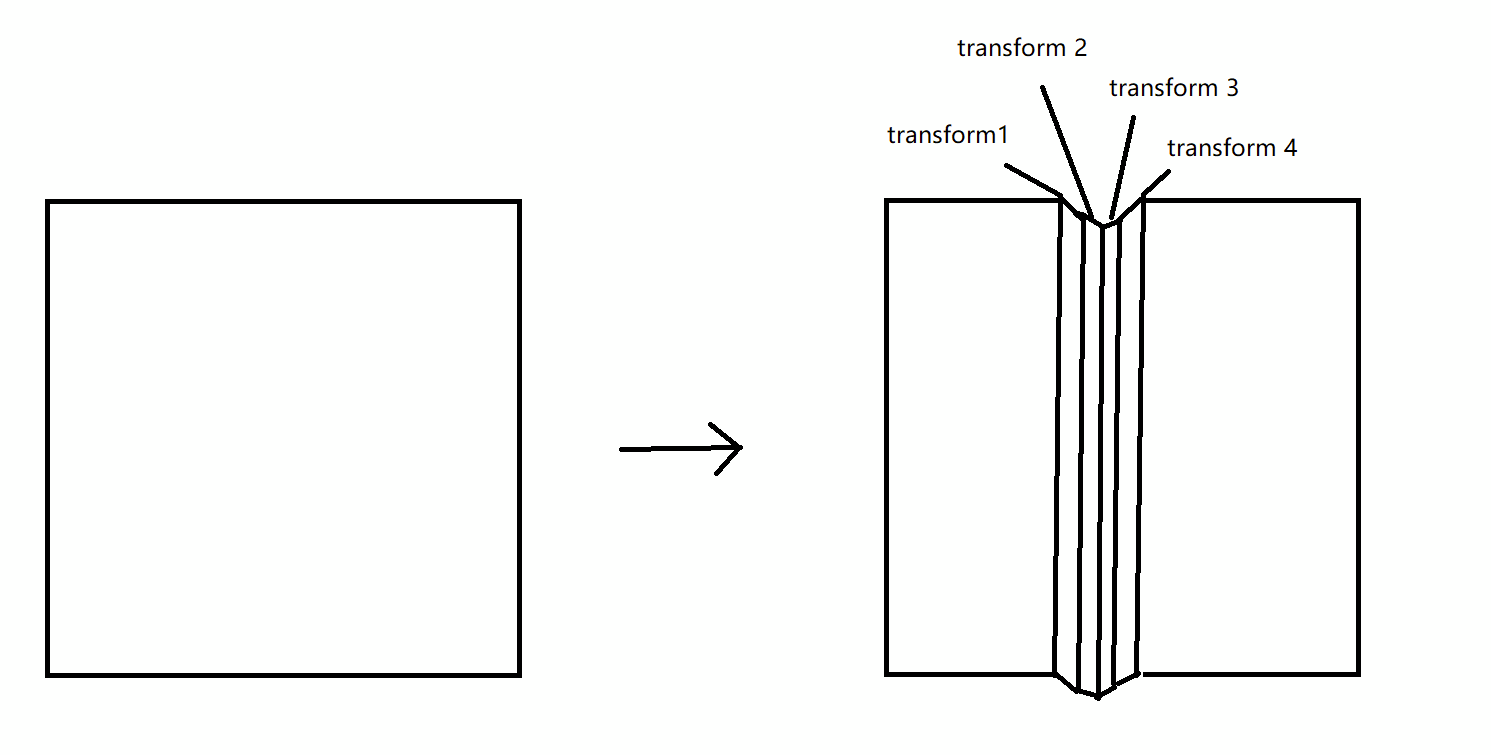

This sample sketch below provides a half-baked concept for a gradient intensity measure. This could very easily change in the future as we advance this feature, and we should not be afraid of breaking changes later with a feature that is so new. I'm pretty open to how we parameterize that fold intensity measure as there are clearly lots of ways to handle that.
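Here is a rough sketch of how these options might be randomized per image, using the defaults listed above. The function and the returned field names (`fold_x`, `gradient_width_px` and similar) are hypothetical. The +/-20% noise jitter is an assumption, and `fold_x` assumes random placement along the page width.

```python
import random


def sample_fold_params(page_width, rng,
                       fold_count=(0, 1, 2, 3),
                       orientation=(90, 45, 0, (35, 55)),
                       orientation_jitter=10,
                       fold_noise=0.5,
                       gradient_width=0.01,
                       gradient_height=0.01):
    """Hypothetical sampler for the proposed options (not augraphy's API).

    Returns one dict per fold. Gradients are given as fractions of the page
    width and converted to whole pixels (at least 1).
    """
    folds = []
    for _ in range(rng.choice(fold_count)):
        angle = rng.choice(orientation)
        if isinstance(angle, tuple):
            # A tuple is a fluid range: pick any angle inside it.
            angle = rng.uniform(*angle)
        angle += rng.uniform(-orientation_jitter, orientation_jitter)
        # Noise jitter is built in rather than exposed (+/-20% is an assumption).
        noise = min(1.0, max(0.0, fold_noise * rng.uniform(0.8, 1.2)))
        folds.append({
            "fold_x": rng.uniform(0, page_width),  # random placement on the page
            "angle": angle,
            "noise": noise,
            "gradient_width_px": max(1, round(gradient_width * page_width)),
            "gradient_height_px": max(1, round(gradient_height * page_width)),
        })
    return folds


folds = sample_fold_params(1000, random.Random(0))
```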

> This is a great starting point. We should go ahead and proceed with creating a PR based on this implementation since it is viable as it is now. We should continue to track this as an open issue, or split out a V2 for a more advanced semi-3D implementation down the road, but this is great as it is now.

Okay, I will put it up as a draft and create a pull request later, once we finalize the parameters below.

> What parameters do you see that we should randomize for this version of the implementation?
>
> Some ideas for options and potential default values:
>
> * `fold_count = [0,1,2,3]` - randomly selected # of folds on page

Yes, I think random placement would be better than letting the user specify the fold's x location. We can also create the folding effect at the edges of the image, so that it looks like the page is curling up or down:

> * `orientation = [90, 45, 0, (35, 55)]` - angle of fold's central line relative to page; where tuple represents a fluid range of angle values
> * `orientation_jitter = 10` - degrees of fold angle variation

I need to think about this and test a bit first, since it might not be straightforward to create diagonal lines. One workaround is to rotate the image and fold it multiple times, for example:

But note that right now there are artifacts (in the red circle) from the perspective transformation, and I haven't looked into a possible workaround yet.

> * `fold_noise = 0.5` - intensity of noise from 0..1; should just embed some level of jitter in this as I don't think it's necessary to parameterize noise jitter

Yeah, just noise should be sufficient. I think the level of noise should depend on the distance from the center of the fold. That is what the code does right now: the noise is heaviest at the center of the fold.
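Here is a minimal sketch of that falloff. It assumes a vertical fold and a linear decrease: each pixel becomes a dark speck with probability `fold_noise` on the fold line, and that probability drops to zero `half_width` pixels away. `fold_noise_mask` and its arguments are hypothetical, and the Colab code linked above may compute this differently.

```python
import numpy as np


def fold_noise_mask(height, width, fold_x, half_width, fold_noise, rng):
    """Hypothetical sketch: boolean mask of noise pixels around a vertical fold.

    The chance that a pixel becomes noise is `fold_noise` on the fold line and
    falls off linearly to 0 at `half_width` pixels away.
    """
    xs = np.arange(width, dtype=np.float64)
    prob = fold_noise * np.clip(1.0 - np.abs(xs - fold_x) / half_width, 0.0, 1.0)
    return rng.random((height, width)) < prob  # prob broadcasts across rows


rng = np.random.default_rng(0)
mask = fold_noise_mask(200, 101, fold_x=50, half_width=20, fold_noise=0.5, rng=rng)
page = np.full((200, 101), 255, dtype=np.uint8)
page[mask] = 0  # dark specks, densest on the fold line
```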

> * `gradient_width = 0.01` - simplistic measure of the space affected by fold prior to being warped (in units of percentage of width of page)
> * `gradient_height = 0.01` - simplistic measure of depth of fold (unit measured as percentage page width)
>
> This sample sketch below provides a half-baked concept for a gradient intensity measure. This could very easily change in the future as we advance this feature, and we should not be afraid of breaking changes later with a feature that is so new. I'm pretty open to how we parameterize that fold intensity measure as there are clearly lots of ways to handle that.

Yes, I think this should be feasible. Gradient width would be the fold's x length, while gradient height would be the distortion in depth. Right now the algorithm uses 2 transforms, at the left and right sides of the fold:

It could be smoother with more than 2 transforms:

So at this point, do you think we need this kind of complexity?
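For reference, each of the two transforms (one per side of the fold) can be a perspective transform fitted from four corner correspondences. This numpy-only sketch solves for the 3x3 matrix directly. For the same four point pairs it should give the same matrix as OpenCV's `cv2.getPerspectiveTransform`, which together with `cv2.warpPerspective` would handle the fit and the pixel warp in practice. The helper names and the corner offsets in the usage lines are illustrative assumptions.

```python
import numpy as np


def perspective_from_points(src, dst):
    """3x3 homography H mapping 4 source points onto 4 destination points.

    Solves the usual 8-unknown linear system with H[2, 2] fixed to 1;
    no three of the points may be collinear.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows, dtype=np.float64),
                        np.array(rhs, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)


def apply_perspective(h, points):
    """Map an (N, 2) array of points through H, dividing by the w term."""
    pts = np.asarray(points, dtype=np.float64)
    pts = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = pts @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Left half of a page with the fold at x = 100: the fold-side edge is moved
# inward and shortened (made-up offsets); the right half would be mirrored.
left_src = [[0, 0], [100, 0], [100, 100], [0, 100]]
left_dst = [[0, 0], [95, 5], [95, 95], [0, 100]]
h_left = perspective_from_points(left_src, left_dst)
```

More strips with intermediate corner offsets would give a smoother fold, at the cost of more transforms per fold.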

> It could be smoother with more than 2 transforms .... So at this point, do you think we need this kind of complexity?

Regarding your last question, I think 2 transforms is good enough for V1 of this augmentation. Taking it further would likely involve semi-3D aspects, the direction of the light source, and so on. That will require more thought and isn't worth it for where we are with the library.

Also, regarding the folds at arbitrary angles, that too can be deferred to a future date since it's not truly necessary for a V1 implementation. It's something we can improve later as needed.

> > It could be smoother with more than 2 transforms .... So at this point, do you think we need this kind of complexity?
>
> Regarding your last question, I think 2 transforms is good enough for V1 of this augmentation. Taking it further would likely involve semi-3D aspects, the direction of the light source, and so on. That will require more thought and isn't worth it for where we are with the library.
>
> Also, regarding the folds at arbitrary angles, that too can be deferred to a future date since it's not truly necessary for a V1 implementation. It's something we can improve later as needed.

Alright got it, thanks for the input.

This looks really good already, great work @kwcckw! Please submit a PR adding this augmentation.

I think the artifacts you circled above look pretty natural; the edge of the "page" looks clean except for the corner where it was folded, and the dark spots would appear when copied by a real scanner (see images in #13).

In the future, we can probably also approximate part of the "crumpled paper" effect I was thinking of in #17 by adding lots of these folds with small gradient widths and varying lengths (so the fold doesn't extend across the whole image).
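Here is one way the "many short folds" idea might look, as a sketch under stated assumptions. It sums many short vertical folds, each with a random position, a small width, a random ridge/valley sign and a random extent. Each fold is tapered along its length with a half-sine window so it fades out instead of stopping abruptly. `crumple_map` and its parameters are hypothetical. The result is a vertical displacement (or altitude) map in pixels, and the half-sine taper is only a guess at how a fold that doesn't span the page should fade.

```python
import numpy as np


def crumple_map(height, width, n_folds, max_half_width, max_intensity, rng):
    """Hypothetical sketch: sum many short, tapered vertical folds."""
    ys = np.arange(height, dtype=np.float64)[:, np.newaxis]  # column vector
    xs = np.arange(width, dtype=np.float64)[np.newaxis, :]   # row vector
    dy = np.zeros((height, width))
    for _ in range(n_folds):
        fold_x = rng.uniform(0, width)
        half_width = rng.uniform(1.0, max_half_width)
        # Negative intensity gives a valley, positive a ridge.
        intensity = rng.uniform(-max_intensity, max_intensity)
        y0, y1 = np.sort(rng.uniform(0, height, size=2))  # fold's vertical extent
        across = np.clip(1.0 - np.abs(xs - fold_x) / half_width, 0.0, 1.0)
        # Half-sine taper: ~0 at both ends of the fold, 1 in the middle.
        t = np.clip((ys - y0) / max(y1 - y0, 1.0), 0.0, 1.0)
        along = np.sin(np.pi * t)
        dy += intensity * across * along  # broadcasts to (height, width)
    return dy


rng = np.random.default_rng(0)
dy = crumple_map(128, 128, n_folds=40, max_half_width=4.0,
                 max_intensity=2.0, rng=rng)
```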

> This looks really good already, great work @kwcckw! Please submit a PR adding this augmentation.

Thanks, I just submitted the pull request, but it might still be buggy in some conditions; I need to test it further.

> I think the artifacts you circled above look pretty natural; the edge of the "page" looks clean except for the corner where it was folded, and the dark spots would appear when copied by a real scanner (see images in #13).

Yeah, the edges may look more natural if we apply the perspective transform a few more times to smooth them, but that would probably be overkill for now.

> In the future, we can probably also approximate part of the "crumpled paper" effect I was thinking of in #17 by adding lots of these folds with small gradient widths and varying lengths (so the fold doesn't extend across the whole image).

Right, but the challenge I see is how to make it look natural. If the fold doesn't extend across the whole image, a lot of work needs to be done on the starting line of the folding effect. Right now I still don't have a clear idea of how to address this problem.

PR #28 has been merged.
