sparkfish / augraphy

Augmentation pipeline for rendering synthetic paper printing, faxing, scanning and copy machine processes

Home Page: https://github.com/sparkfish/augraphy

License: MIT License

Here are some thoughts about the architecture of the project going forward, from the perspective of composability. In the next 4 paragraphs, I propose some changes to the design of the base classes.

Function-level composability

Few of the existing Augmentation modules use more than the __call__ method to perform their transformations. All of this behavior should be factored out into other functions that can then be called in the __call__ method in sequence, or as the composition of those functions. These functions ideally would all be written in the "pure" style, where they operate only on their inputs and return a result, rather than operating on state defined elsewhere. This will make debugging easier and will also most likely surface some functions as candidates for refactoring into another library. As an example, the create_scanline_mask method in DirtyRollersAugmentation is a prime candidate for splitting up into smaller functions.
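As a rough illustration of this style, here is a minimal sketch. The names `make_mask`, `apply_mask`, and `StripedAugmentation` are hypothetical and are not taken from Augraphy's code; the point is only that `__call__` sequences small pure functions.

```python
import numpy as np


def make_mask(shape, period):
    """Pure function: build a striped mask from its inputs only."""
    mask = np.ones(shape, dtype=np.uint8)
    mask[::period, :] = 0  # zero out every `period`-th row
    return mask


def apply_mask(image, mask):
    """Pure function: return a new image; the input is not modified."""
    return image * mask


class StripedAugmentation:
    """Hypothetical augmentation whose __call__ only sequences pure functions."""

    def __init__(self, period=2):
        self.period = period

    def __call__(self, image):
        mask = make_mask(image.shape, self.period)
        return apply_mask(image, mask)
```

Each helper can be tested on its own, without constructing an augmentation or a pipeline.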

Module-level composability

The current modules are all written in a tightly coupled style, where operations are applied to input images and then stored directly in a pipeline phase, from the __call__ method of the augmentation. This should be changed so the call method instead takes an image as argument, then returns itself and the result of the completed operation as a tuple or list. Then the calling pipeline can sequence these on its own, without the augmentations being able to "see" the pipeline they're a part of. We would then be free to modify the internal behavior of augmentations or pipelines, so long as we maintain their interfaces.

This would facilitate direct composition of Augmentations too; in this case, it would be possible to write an Augmentation definition like lambda img: Augmentation2(Augmentation1(img)[1]), which ignores the first element of the tuple returned by Augmentation1 (namely, Augmentation1 itself) and operates on the output image.
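A minimal sketch of that interface, assuming two hypothetical augmentations (`Invert` and `Halve`, neither of which exists in Augraphy) and a hypothetical `compose` helper:

```python
import numpy as np


class Invert:
    """Hypothetical augmentation: returns (self, result), never touches a pipeline."""

    def __call__(self, image):
        return self, 255 - image


class Halve:
    """Hypothetical augmentation with the same (self, result) interface."""

    def __call__(self, image):
        return self, image // 2


def compose(first, second):
    """Run `first`, discard the augmentation it returns, feed its image to `second`."""
    return lambda image: second(first(image)[1])


invert_then_halve = compose(Invert(), Halve())
```

The composed callable has the same interface as its parts, so it can itself be composed again.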

Pipeline-level composability

Since all Augmentations are fundamentally matrix operations on the numpy.array representation of image data, their compositions are (or can be) given by matrix compositions, which gives us some nice mathematical properties. In particular, the associativity of matrix composition lifts to associativity of Augmentation composition, once module-level composability is ensured. This means that Augmentation3(Augmentation2(Augmentation1(image))) is the same no matter whether we combine Augmentation3 and Augmentation2 first or combine Augmentation2 and Augmentation1 first.

It gets better: AugmentationSequences are really just wrappers around lists, and so they also compose associatively: running Sequence1 and then Sequence2 is the same as running (Sequence1.augmentations + Sequence2.augmentations).

Better still: AugmentationPipelines are dictionaries underneath, and have an associative composition too, but may involve renaming keys in the addition. AugmentationPipelines are actually already a mechanism of Augmentation composition, in the sense that running Pipeline1 and then Pipeline2 in sequence should be the same as running one pipeline that contains all phases of Pipeline1 followed by all phases of Pipeline2.
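The list-based part of this argument can be sketched as follows. `Sequence` is a hypothetical stand-in for AugmentationSequence, and plain functions on numbers stand in for augmentations on images; this assumes module-level composability is already in place.

```python
class Sequence:
    """Hypothetical stand-in for AugmentationSequence: a thin wrapper around a list."""

    def __init__(self, augmentations):
        self.augmentations = list(augmentations)

    def __add__(self, other):
        # Composition of sequences is just list concatenation.
        return Sequence(self.augmentations + other.augmentations)

    def __call__(self, image):
        for augmentation in self.augmentations:
            image = augmentation(image)
        return image


def add_one(x):
    return x + 1


def double(x):
    return x * 2


def square(x):
    return x * x


s1, s2, s3 = Sequence([add_one]), Sequence([double]), Sequence([square])
left = (s1 + s2) + s3   # combine the first two, then the third
right = s1 + (s2 + s3)  # combine the last two, then prepend the first
```

Both groupings hold the same list of augmentations in the same order, so they produce the same output for any input.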

Factor probability out of Augmentation?

Currently the only state & behavior inherited by extenders of Augmentation is the probability. I submit that "probability of being applied" is not a property inherent to Augmentations, but rather to the pipeline running the Augmentations. As such, probability should be factored out of every Augmentation and into the pipeline class, along with the relevant calculations. This would allow, for example, OneOf to just sample its sequence to get an augmentation to run, rather than needing to inspect every member of the AugmentationSequence it is given, and perform an extra calculation with the probabilities it finds.
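One way this could look, as a sketch only: `Phase` and this `OneOf` are hypothetical classes written for illustration, not the existing Augraphy implementations.

```python
import random


class Phase:
    """Hypothetical pipeline phase that owns the probabilities."""

    def __init__(self, steps, rng=None):
        # steps: list of (augmentation, probability) pairs
        self.steps = steps
        self.rng = rng or random.Random()

    def __call__(self, image):
        for augmentation, p in self.steps:
            # random() is in [0, 1), so p=1.0 always runs and p=0.0 never runs.
            if self.rng.random() < p:
                image = augmentation(image)
        return image


class OneOf:
    """Picks exactly one augmentation without inspecting any probabilities."""

    def __init__(self, augmentations, rng=None):
        self.augmentations = augmentations
        self.rng = rng or random.Random()

    def __call__(self, image):
        return self.rng.choice(self.augmentations)(image)
```

With this split, augmentations are plain callables, and the decision of whether to run them lives entirely in the caller.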

Some of my favorite moments as a teacher come when I make mistakes in front of my students. Here's one for my colleagues:

Perhaps embarrassingly, I just learned (while making OverlayBuilder) that numpy arrays are accessed in row-major order, so calculations involving image height happen in the first entry of the array access, meaning that expression1 in array[expression1, expression2] will affect the y-component of the index position. In the process of writing that class, I ran into a bug where, when I tried to draw binder holes on the left side of the image, the output had binder holes along the top, transposed from my intent.

This is undoubtedly old news to everyone else who will ever read this, but I want to raise it here anyway; any code I've ever touched that contains numpy arrays should be checked to make sure any relevant computations are actually occurring in the correct position.
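For anyone checking their own code, here is a small sketch of the indexing convention on a toy array (the sizes are arbitrary):

```python
import numpy as np

height, width = 4, 6
image = np.zeros((height, width), dtype=np.uint8)  # shape is (rows, columns)

# The first index selects the row (y); the second selects the column (x).
image[:, 0] = 255  # left edge: every row, first column
left_edge = image.copy()

image[:] = 0
image[0, :] = 255  # top edge: first row, every column
top_edge = image.copy()
```

Swapping the two index expressions turns a mark on the left edge into a mark on the top edge, which is exactly the transposition described above.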

I miss Fortran.

I see that rotation is currently applied in augmentationpipeline.py before the ink phase:

ink = self.rotate_image(ink, angle)

I think it should instead be added as an individual augmentation in the post phase, for the following reasons:

We should add an effect similar to the output of a bad photocopy machine, for example:

image source: https://www.kaggle.com/aravindram11/funsdform-understanding-noisy-scanned-documents

At this point, I think it can be done by adding small blobs of different sizes, and varying their area and frequency to reproduce a similar effect.

For starters, there must be an interactive way to see how to create and use different Augraphy pipelines. Multiple Jupyter notebooks, each representing a different use case, can serve this purpose.

To verify Augraphy's utility, we need to reproduce the document in this notebook.

There are some notes about the effects present in the document, as well as some suggested augmentations you might need when reproducing it. These lists are not exhaustive; you may need to use more, or less, to achieve a faithful reproduction.

We can discuss the reproduction process for this document in this Issue. Discussion about this project in general should go here.

Images that are being augmented need to be standardized to the resolution for which the augmentation is designed. Otherwise, the scale of the augmentation may not match the target image.

This issue proposes to (1) detect the current resolution and (2) change the incoming image to match the normalized standard resolution.

Pixel-oriented augmentations are most easily implemented when a given input scale is assumed as a precondition. This allows the applied effects to be appropriate and tuned for that scale. For example, artifacts applied to the edges of lettering will naturally need to be applied at a scale relative and relevant to the scale of the lettering itself. It is simpler to assume a given DPI as input to such augmentations than to determine a way to make all augmentations generally scale-invariant. As a path to scale invariance, this issue aims to re-scale the input to the optimal resolution. The original scale can be restored at the end of the pipeline execution if desired.

To detect the current resolution of any image, we could make guesses based on the size of the image. This will likely work for normal documents. However, a lot of our images that are being tested don't fit this profile.

Another approach is to scan vertically and horizontally to capture average stroke widths along with average gaps between strokes to make a probabilistic guess of the image's current resolution.

Both of these approaches can be used in tandem, and both will require testing to determine what values make the most sense.
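The size-based guess could start from something like the sketch below. It assumes the image is a full US Letter page (8.5 x 11 inches), which is an assumption of this example and will be wrong for cropped or unusual inputs; `guess_dpi` and `scale_factor` are hypothetical names, and the 300 DPI default is only a placeholder for whatever target we settle on.

```python
def guess_dpi(height_px, width_px, page_inches=(11.0, 8.5)):
    """Guess DPI assuming the image is a full page of the given physical size."""
    long_px, short_px = max(height_px, width_px), min(height_px, width_px)
    long_in, short_in = max(page_inches), min(page_inches)
    # Average the estimate from each side to soften cropping or margin errors.
    return round((long_px / long_in + short_px / short_in) / 2)


def scale_factor(current_dpi, target_dpi=300):
    """Factor by which to resize the image to reach the target DPI."""
    return target_dpi / current_dpi
```

For example, a 1100 x 850 pixel image would be guessed at 100 DPI and scaled by a factor of 3 to reach 300 DPI. The stroke-width approach would replace or refine `guess_dpi` for images that are not full pages.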

The resolution that we should target by default should match with current OCR guidance, which is:

Some augmentations may benefit from a higher DPI prior to down sampling to the target DPI needed. However, to keep this simple for now, we should avoid that extra processing step, so that everything is uniform once the images have been prepped for processing.

Here are the effects that a faxed document experiences:

The resolution should be assumed to have already been normalized (to 300 DPI) in advance of calling this augmentation, OR the source resolution could be provided as a parameter so that proper scaling can be calculated to convert to 200 DPI. We may need to re-scale the image back to the source resolution (after it has been downsampled) so that other augmentations that rely on a normalized resolution can be applied after this one.

Phaxio has a short and simple article showing the difference in thresholded ("monochrome") vs halftoned faxes.

Thresholding basics are covered in this OpenCV Tutorial.
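For reference, a basic binary threshold can be sketched in plain NumPy as below. The `threshold` helper and its default cutoff of 128 are assumptions for illustration; the OpenCV tutorial covers the library's own functions and the adaptive variants.

```python
import numpy as np


def threshold(gray, cutoff=128):
    """Binary threshold: pixels at or above `cutoff` become white, the rest black."""
    return np.where(gray >= cutoff, 255, 0).astype(np.uint8)
```

This produces the "monochrome" style of fax output; halftoning would need a dithering step instead.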

To improve composability of pipelines and to follow current standard practices exhibited by Albumentations, we should document and update our randomness functionality:

p as probability parameter for consistency with Albumentations

See PyTorch notes on reproducibility for ideas on what we need to document.

I tried to generate consistent output from an image, but it doesn't seem to be straightforward. Wouldn't it be better to make the randomly initialized parameters optional, so that we can get consistent output from the same pipeline?

Right now the problem, as I see it, is that there are so many randomized parameters that it is difficult to get a consistent output style from the same pipeline. If I want to use the same pipeline with multiple papers, I might end up with inconsistently styled output.
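One common way to get repeatable output is to seed the random generators before drawing parameters. The sketch below is illustrative only: `seeded_params` and the parameter names are hypothetical, and it assumes the augmentations draw from Python's `random` module and a NumPy generator.

```python
import random

import numpy as np


def seeded_params(seed):
    """Draw hypothetical augmentation parameters from seeded generators."""
    random.seed(seed)
    rng = np.random.default_rng(seed)
    return {
        "quality": random.randint(10, 95),
        "noise": float(rng.uniform(0.0, 1.0)),
    }
```

Calling `seeded_params` twice with the same seed returns identical parameters, so the same pipeline would produce the same style on every paper.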

The test.py file returns the following error:

Traceback (most recent call last):
  File "test.py", line 41, in <module>
    crappified = pipeline.augment(img)
  File "autography_tests/env/lib/python3.8/site-packages/Augraphy/AugraphyPipeline.py", line 40, in augment
    self.paper_phase(data)
  File "autography_tests/env/lib/python3.8/site-packages/Augraphy/Augmentations.py", line 29, in __call__
    augmentation(data)
  File "autography_tests/env/lib/python3.8/site-packages/Augraphy/Augmentations.py", line 687, in __call__
    texture = self.get_texture(shape)
  File "autography_tests/env/lib/python3.8/site-packages/Augraphy/Augmentations.py", line 755, in get_texture
    texture = random.choice(self.paper_textures)
  File "/usr/lib/python3.8/random.py", line 290, in choice
    raise IndexError('Cannot choose from an empty sequence') from None
IndexError: Cannot choose from an empty sequence

The first iteration of the for loop runs fine.

Some of our augmentations fail when given input images that are too small.

For example, the Letterpress augmentation generates Gaussian blobs for the ink layer, which can be up to 95 pixels in diameter. When this blob is applied to the ink layer, an error is thrown by this line or the next, if the input image is smaller than the generated blob. We try to generate a random range where the end value of the range is smaller than the starting value.

We should determine a reasonable minimum size for images, or adjust the default values of our augmentations to allow for very small images. I think it's reasonable to say that 100x100 pixels is the minimum size, but I can think of valid reasons to work on smaller ones; single-line documents, for example, or a signature.

This is part of a bigger discussion around tuning the default values of every augmentation, and of the default pipeline, to produce even more realistic results.

Hi,

I'm facing an error with:

post_phase = AugmentationSequence([
    DirtyRollersAugmentation(
        line_width_range=(8, 12),
        probability=1),
    LightingGradientAugmentation(),
    BrightnessAugmentation('post'),
    SubtleNoiseAugmentation(),
    JpegAugmentation()
])

Error:

File "C:\augraphy\augraphy-dev\src\Augraphy\Augmentations.py", line 236, in __call__
    if (not self.debug and rotate):
AttributeError: 'DirtyRollersAugmentation' object has no attribute 'debug'

There is no "self.debug" attribute defined, so should the "self.debug" check be removed?

The same applies to "self.transform":

File "C:\augraphy\augraphy-dev\src\Augraphy\Augmentations.py", line 240, in __call__
    mask = self.transform(self.create_scanline_mask, image.shape[1], image.shape[0], line_width)
AttributeError: 'DirtyRollersAugmentation' object has no attribute 'transform'

Or is DirtyRollersAugmentation still under development?

Hi,

I'm facing an inconsistent error with the added brightness augmentation, here's the snippet of my code:

pipeline = default_augraphy_pipeline()
pipeline.ink_phase.augmentations.append(BrightnessAugmentation('ink'))

paper_factory = pipeline.paper_phase.augmentations[0]
paper_factory.probability = 1.0

jpeg_aug = pipeline.post_phase.augmentations[-1]
jpeg_aug.quality_range = (10, 20)

img = np.array(create_pdf417())
crappified = pipeline.augment(img)

for i in range(10):
    crappified = pipeline.augment(img)

Upon checking, it looks like images are not always returned in BGR (3 channels) from the AugmentationResult, even though the input has 3 channels:

Could you check on this?

Thank you.

Currently, the AugmentationSequence class contains a method called add_transparency_line, whose behavior depends on the use_consistent_lines value of an object extending LowInkLineAugmentation (currently a LowInkRandomLinesAugmentation or LowInkPeriodicLinesAugmentation object).

There are two issues:

We should move this behavior out to another place. Probably to AugmentationPipeline for now, but this has a similar effect there.

Motivation

It's not uncommon to scan documents that have undergone some physical deformation, like tearing, folding, or crinkling. The resulting changes in the surface of the paper generally become more apparent after digitization, causing difficulties for humans and machines reading the text.

See, for example, the image at the top of this post

It would be useful to be able to generate images of "damaged" documents, for training models in settings like healthcare (medical records), law (contracts), finance (invoicing), and so on.

There are several forms of damage that could be applied. Off the top of my head:

... to name a few

Implementation

There are several paths forward for something like this. The most naive way would be to take some images of damaged paper and use these as the base image for existing pipelines. One much more sophisticated approach would be generating a 3D model (perhaps using Blender API?) and applying an image of a document as a texture.

Small enough images (less than 30 pixels in width) can trigger a division by zero in LowInkPeriodicLines.

The __call__ method generates a random int in the range (10,30), the default period_range. Then in add_periodic_transparency_lines(), the image width is floor-divided by this int, producing a zero.

This zero is then used in a modulo here, throwing the error.

This isn't the first time testing has uncovered bugs like this; the Folding augmentation can also fail on images smaller than 20x20. I suggest we determine a minimum image size (maybe 50x50?) we support, check input image dimensions in the AugraphyPipeline, and throw an exception to quit the program if the input is too small. We can warn about this in the documentation.
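A minimal sketch of such a check follows. The function name `check_image_size` and the `MIN_SIZE` constant are hypothetical, and 50 is only the tentative value floated above, not a decided limit.

```python
import numpy as np

MIN_SIZE = 50  # hypothetical minimum, taken from the "maybe 50x50?" suggestion


def check_image_size(image, min_size=MIN_SIZE):
    """Raise ValueError if the image is smaller than min_size in either dimension."""
    height, width = image.shape[:2]
    if height < min_size or width < min_size:
        raise ValueError(
            f"Image is {width}x{height}; at least {min_size}x{min_size} is required."
        )
    return image
```

Calling this once at the start of the pipeline's augment step would rule out cases like a 20-pixel-wide image floor-divided by a period of 30, which yields the zero that later breaks the modulo.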

Right now we only have two paper textures, but even just two or three more would dramatically increase the space of generated images.

We already simulate different textures by applying several filters in the paper phase, but even small differences in the texture of the base image should translate to significant differences when gamma is modified, which in turn will mean more robust data for training edge-detection models, etc.

Bleed-through is caused by the seeping of ink from the reverse side of the page, through the sheet of paper, and into the front side of the page. This faint bleed-through effect can be seen from a duplex-printed document, ink pressed document, or simply a document scanned or copied from a thin paper source.

Using these sample images, we can construct the intuition for how we can simulate the bleed-through effect:

To show its capabilities, this repo should add an interactive web demo where the end user can apply any type of augmentation, adjust its parameters, and see the results of any augmentation sequence in real time.

@kwcckw I found some bugs in folding.py, can you take a look?

fold_y_shift_min and fold_y_shift_max in folding.py will become 0 after multiplication by a number in (0.1,0.2) due to the int cast, which makes fold_y_shift on the following line fail trying to generate a random number from an empty range. This can be resolved by restricting images to be a minimum of 10x10.

fold_y_shift is sometimes 0 for similar reasons, which makes the call to img_warped[:-fold_y_shift ,:,:] on line 158 fail. It tries to take an empty slice of the array using :-fold_y_shift, which makes the img_fuse[cys:cye,cxs:cxe,:] assignment on that same line fail with a ValueError: could not broadcast input array from shape (0,14,3) into shape (95,14,3) (for example; the real numbers are different every time, but the first value of the triple is always 0 because no elements were selected by the array slice).

There is also a failure in four_point_transform(), but I haven't been able to track down why yet. Here's the stack trace:

============================== FAILURES ===============================

________________________ test_folding_pipeline ________________________

random_image = array([[[168, 217, 242],

[144, 158, 82],

[194, 42, 206],

[ 89, 208, 93],

[ 23, 197,...57],

[255, 235, 18],

[161, 34, 28],

[231, 253, 229],

[ 78, 119, 244]]], dtype=uint8)

folding_pipeline = ink_phase = AugmentationSequence([

])

paper_phase = AugmentationSequence([

])

post_phase = AugmentationSequence([

])...Pipeline(ink_phase, paper_phase, post_phase, ink_color_range=(0, 0), paper_color_range=(255, 255), rotate_range=(0, 0))

def test_folding_pipeline(random_image, folding_pipeline):

> crappified = folding_pipeline.augment(random_image)

test_folding_pipeline.py:22:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

augraphy/base/augmentationpipeline.py:144: in augment

self.post_phase(data)

augraphy/base/augmentationsequence.py:28: in __call__

augmentation(data)

augraphy/augmentations/folding.py:224: in __call__

image_fold = self.apply_folding(image_fold, ysize, xsize, self.gradient_width, self.gradient_height, self.fold_noise)

augraphy/augmentations/folding.py:205: in apply_folding

img_fold_l = self.warp_fold_left_side(img, ysize, fold_noise, fold_x, fold_width_one_side, fold_y_shift)

augraphy/augmentations/folding.py:99: in warp_fold_left_side

img_warped = self.four_point_transform(img_crop,source_pts,destination_pts, cxsize, cysize+fold_y_shift)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = Folding(fold_count=2, fold_noise=0.1, gradient_width=(0.1, 0.2), gradient_height=(0.01, 0.02),p=0.5)

image = array([], shape=(12, 0, 3), dtype=uint8)

pts = array([[ 0., 0.],

[ 0., 12.],

[ 0., 12.],

[ 0., 0.]], dtype=float32)

dst = array([[ 0., 0.],

[ 0., 12.],

[ 0., 12.],

[ 0., 0.]], dtype=float32)

xs = 0, ys = 12

def four_point_transform(self, image,pts,dst, xs,ys):

M = cv2.getPerspectiveTransform(pts, dst)

> img_warped = cv2.warpPerspective(image, M, (xs, ys))

E cv2.error: OpenCV(4.5.3) /tmp/pip-req-build-3umofm98/opencv/modules/imgproc/src/imgwarp.cpp:3144: error: (-215:Assertion failed) _src.total() > 0 in function 'warpPerspective'

augraphy/augmentations/folding.py:49: error

======================= short test summary info =======================

FAILED test_folding_pipeline.py::test_folding_pipeline - cv2.error: ...

========================== 1 failed in 0.32s ==========================

You can test this yourself by making test_folding_augmentation.py in the project directory with the following contents:

import random

import numpy as np
import pytest

from augraphy import *


@pytest.fixture
def folding_pipeline():
    return AugraphyPipeline([], [], [Folding()])


@pytest.fixture
def random_image():
    xdim = random.randint(1,500)
    ydim = random.randint(1,500)
    return np.random.randint(
        low=0,
        high=255,
        size=(xdim,ydim,3),
        dtype=np.uint8)


def test_folding_augmentation(random_image, folding_pipeline):
    crappified = folding_pipeline.augment(random_image)

and running pytest test_folding_augmentation.py a bunch of times (it needs to be run several times because this bug doesn't always appear). You can make the first two bugs appear very reliably by setting xdim and ydim to numbers less than 10.

For easy testing you can run for i in {1..20}; do pytest test_folding_augmentation.py; done;

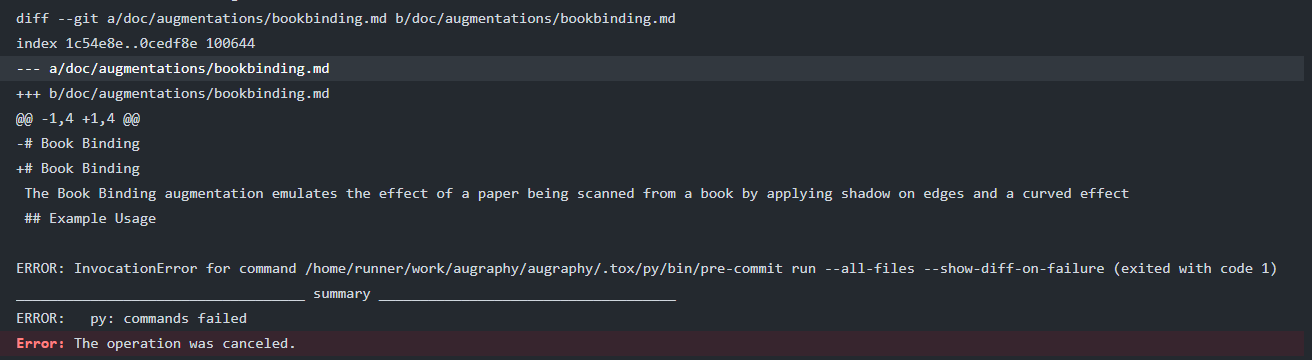

I am trying to create a PR for #63, but facing this error in the test cases. Can someone guide me on how to resolve this?

Looking at Albumentations as a guide, compositions should be streamlined to remove duplicate "Augmentation" naming from the end of each augmentation class added to a composition, for example:

A.Compose([
    A.HorizontalFlip(p=0.5),
    A.ShiftScaleRotate(p=0.5),
    A.RandomBrightnessContrast(p=0.3),
    A.RGBShift(r_shift_limit=30, g_shift_limit=30, b_shift_limit=30, p=0.3),
])

Also, it would be nice if a list was automatically mapped to an AugmentationSequence.

Hi,

It looks like I'm getting the error below when running "from Augraphy import default_augraphy_pipeline":

File "C:/augraphy/test1.py", line 12, in <module>
    from Augraphy import default_augraphy_pipeline
ImportError: cannot import name 'default_augraphy_pipeline' from 'Augraphy'

Perhaps the corresponding line in the init file can be replaced with the following?

__all__ = ["AugraphyPipeline","default_augraphy_pipeline"]

A separate feature request has been made for damaging the paper with a crumpled / wrinkled effect (see #17). This request, however, starts with a simpler problem: a single fold.

The effect is characterized by the following:

The complexity here is creating a nice fold (either inward or outward) with any kind of ridge definition (most obvious in the last image in the set of examples). I propose that the scope of this first issue relating to 3D transformations be kept simple: we should not attempt to create hard-creased edges, but instead focus on smoother folds like the first couple of example images.

In general, Augraphy is trying to simplify the process of creating synthetic realistic datasets using only ground truth documents.

Often, training data is not accompanied by clean ground truth sources, which leads to inaccurate training and severely limited volumes of available training data. By starting with clean ground truth data, training sets can be created that represent printed, scanned, copied and faxed documents encountered in the real world AND have 100% accurate training data.

In order to recreate data from these real-world scenarios, we need to create a validation set that is inspired by examples from the real world. Below are sources that may serve as useful source material for attempting to use Augraphy to reproduce the styles and detail seen in these data sets.

RVL-CDIP dataset consists of 400,000 B/W low-resolution (~100 DPI) images in 16 classes, with 25,000 images per class

https://www.cs.cmu.edu/~aharley/rvl-cdip/

NIST-SFRS (Structured Forms Reference Set) consists of 5,590 pages of binary, black-and-white images of synthesized documents from 12 different tax forms from the IRS 1040 Package X for the year 1988. These include Forms 1040, 2106, 2441, 4562, and 6251 together with Schedules A, B, C, D, E, F, and SE.

https://www.nist.gov/srd/nist-special-database-2

Tobacco3482 dataset from Kaggle offers 10 different classes of forms, letters, reports, etc.

https://www.kaggle.com/patrickaudriaz/tobacco3482jpg

FUNSD (Form Understanding Noisy Scanned Documents) dataset on Kaggle comprises 199 real, fully annotated, scanned forms that are noisy and vary widely in appearance.

https://www.kaggle.com/sharmaharsh/form-understanding-noisy-scanned-documentsfunsd

Randomly Collected Documents is a Google Drive share that contains randomly selected public domain documents.

https://drive.google.com/drive/folders/1JMwmRko1gZ_VYtwXkP7CXPPztsNa_3nv?usp=sharing

NoisyOffice data set from University of California, Irvine contains noisy grayscale printed text images and their corresponding ground truth for both real and simulated documents with 4 types of noise: folded sheets, wrinkled sheets, coffee stains, and footprints. For each type of font, one type of Noise: 17 files * 4 types of noise = 72 images.

https://archive.ics.uci.edu/ml/datasets/NoisyOffice

DDI-100 (Distorted Document Images) is a synthetic dataset by Ilia Zharikov et al., based on 7000 real unique document pages, and consists of more than 100000 augmented images. Ground truth comprises text and stamp masks, text and character bounding boxes with relevant annotations.

https://arxiv.org/abs/1912.11658

https://github.com/machine-intelligence-laboratory/DDI-100/tree/master/dataset

https://paperswithcode.com/paper/ddi-100-dataset-for-text-detection-and

Scanners change the gamma of the whole image most of the time. Adding this augmentation with a gamma range of 0.5 to 1.5 in the post phase can give a variety of different augmentations. Moreover, we could also offer a "Gamma Adjustment" mode that automatically detects the appropriate gamma value for each image.
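A minimal sketch of the random-gamma part, assuming uint8 input and the convention out = 255 * (in / 255) ** gamma (some libraries use 1 / gamma instead). The function names are hypothetical, and the automatic detection idea is not covered here.

```python
import numpy as np


def adjust_gamma(image, gamma):
    """Apply out = 255 * (in / 255) ** gamma to a uint8 image via a lookup table."""
    table = (255.0 * (np.arange(256) / 255.0) ** gamma).round().astype(np.uint8)
    return table[image]


def random_gamma(image, gamma_range=(0.5, 1.5), rng=None):
    """Pick a gamma uniformly from gamma_range and apply it."""
    rng = rng or np.random.default_rng()
    return adjust_gamma(image, rng.uniform(*gamma_range))
```

Under this convention, gamma below 1 brightens midtones and gamma above 1 darkens them, while pure black and pure white are unchanged.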

In the course of the past two weeks, I’ve already pushed a few commits that introduced bugs, or outright broke things. As the project grows and changes, debugging will increase in difficulty, and accordingly increase the potential for these errors.

To help mitigate this, I’d like to introduce proper unit and property tests (right now, we only have a script called “test”, which isn’t actually a test), and add these to the pre-commit git hooks to help ensure quality of commits.

Albumentations has a few here using the pre-commit-hooks project that we could take inspiration from, but mostly these are code style checks. I’d really like to have some testing at the level of - for example - “make sure these two numpy arrays have dimensions that allow them to be multiplied”, which would’ve saved me time and the need to make this correction in PR #31
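As an example of the kind of test I have in mind, here is a small sketch. The helper name `shapes_broadcast` is hypothetical, and it relies on `np.broadcast_shapes`, which requires NumPy 1.20 or newer.

```python
import numpy as np


def shapes_broadcast(a, b):
    """Return True if a * b is a valid elementwise product under NumPy broadcasting."""
    try:
        np.broadcast_shapes(a.shape, b.shape)
    except ValueError:
        return False
    return True


def test_mask_matches_image():
    image = np.zeros((10, 20, 3), dtype=np.uint8)
    mask = np.ones((10, 20, 1), dtype=np.uint8)
    # A single-channel mask broadcasts across the three color channels.
    assert shapes_broadcast(image, mask)
    # A transposed mask does not.
    assert not shapes_broadcast(image, np.ones((20, 10, 3), dtype=np.uint8))
```

A test like this could run under pytest from a pre-commit hook alongside the style checks.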

@kwcckw a question about the Folding augmentation:

Currently the Folding augmentation applies to the ink layer, but the fold effect happens to already-printed paper, so shouldn't it go in the post layer?

A new halftone or dithering effect should affect colors and greys more than blacks, which means that text should not get affected by this effect to the same degree as images.

Great reference on dithering (also has some strange VB code at the end that might be informative, maybe).

https://tannerhelland.com/2012/12/28/dithering-eleven-algorithms-source-code.html

Just as we provide adapters for imgaug and albumentations, imagecorruptions is another library we could add support for:

https://github.com/bethgelab/imagecorruptions

For some reason, they claim this library is not built for augmentations, but this library is being used by imgaug. Further, this library seems to be maintained while imgaug has not been touched in over a year.

As part of the effort to make a set of core augmentations that others can be built from, we’ll want augmentations to handle some basic geometric operations:

It would probably also be useful if these operations could happen to a region in a layer, instead of only the entire layer.

Together, these could be composed to form augmentations that simulate common deformations occurring to real documents:

Old printing methods transfer ink from a press onto paper, essentially like a rubber stamp. The intent of 2 of our existing augmentations ("Dusty Ink" and "Ink Blobs") is to collectively create that "Letterpress" ink effect as seen in this sample image:

There are tons of potential implementations possible, so I think we should reduce the number of augmentations where it's possible to combine them around a particular intent. It also helps to name them after some well-known effect, as is the case for a "Letterpress" type effect.

The number and significance of the parameters passed to this combined augmentation should also be improved. There are currently more params than is rational for the typical user. Instead, we should aim to make parameters that abstract away these underlying parameters into something more user friendly.

With time, it would be nice to see other competing implementations that are more advanced and improve upon this implementation. For example, with more realistic implementations, the texturization tends to appear more in the center of letters than the edges. Instead of having a separate augmentation, however, this could be managed via a parameter that could be varied within the same augmentation at a later point in time.

TODO

Instead of reinventing the wheel, we should allow transforms from Albumentations and Imgaug libraries to be callable within an augraphy pipeline. In general, our pipeline process should be familiar to a user of Albumentations, so in theory this should be an easy adaptation to support their transforms in an Augraphy pipeline.

In general, we should provide basic transforms which may be defined in these libraries which are needed for the common document-oriented augmentations. So, we'll have some minimal overlap; however, we should not require these other libraries for the default and most common augmentation needs.

Note that Albumentations currently seems to be well maintained while Imgaug has not been updated in a little over a year as of this moment. So, we should prioritize Albumentations over Imgaug. It appears that Imgaug has additional blur transforms, which can be useful to vary since deblurring of basic gaussian blur is fairly easily learnable using deep learning methods; but we should not invest too much effort in supporting a library that is not being maintained.

I spent an uncomfortable amount of time staring at the selected archetypes (in the Google Drive folder everyone reading this should have access to), and wrote some notes about each of them.

These notes are at the top of Colab notebooks in that same Google Drive folder, where we can work on the associated images.

As @kwcckw mentioned in PR #35, the self.transform method is missing from DirtyRollersAugmentation.

This is old functionality that was removed in commit ea09709, but the DirtyRollersAugmentation is disabled (p=0.0) in the default pipeline, so this didn't come up.

We should refactor this augmentation so we can use it again.

Here's a template notebook (currently using PencilScribbles) that we can modify slightly and include in the documentation for each augmentation.

Right now it's pulling the NoisyOffice dataset and uses an image from there as an example.

Putting this Issue here so we can all discuss changes, etc.

We need a project logo.

Creating logos is usually pretty hard. But the process usually starts with identifying words or phrases that relate to the identity being represented.

What ideas do you have for how to represent what Augraphy is doing with the fewest number of impactful words as possible?

Starter Phrases / Thoughts / Words

Questions for Discussion

What other thoughts and ideas go into your concept of what Augraphy is about? How would you reduce these thoughts into more succinct descriptions? How would you visualize some of these ideas?

Once everyone has a chance to provide input, I'll share a first round of logos for reaction.

I saw that the code is upgraded and version is changed to 2.0.5. However, I can't find the installation instructions for developers from source.

Can you please provide an update on this? @proofconstruction
