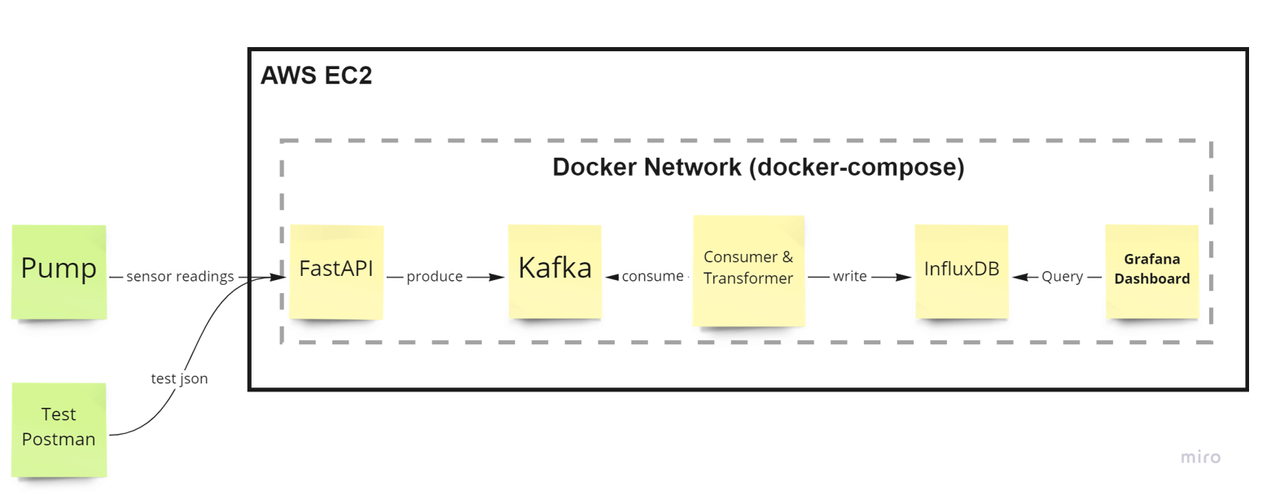

Pumps are often described as the nerves of a city's infrastructure: a pump failure can cut off access to water and, in the worst case, lead to catastrophic events. The dataset used in this project was posted on Kaggle by a user who was experiencing failures in a water pump. The pump operates in a rural city and supplies its citizens with the water they need for daily life, so any failure has direct consequences for the city. In this project I built a pump sensor monitoring and analysis platform using FastAPI, Kafka, InfluxDB, Grafana, Docker and AWS EC2. The platform monitors the pump's sensors in real time and stores the readings in a time-series database (InfluxDB) so that past failures can be analyzed.

Tech Stack: FastAPI, Apache Kafka, InfluxDB, Grafana, Docker and AWS EC2.

The data platform architecture is as follows:

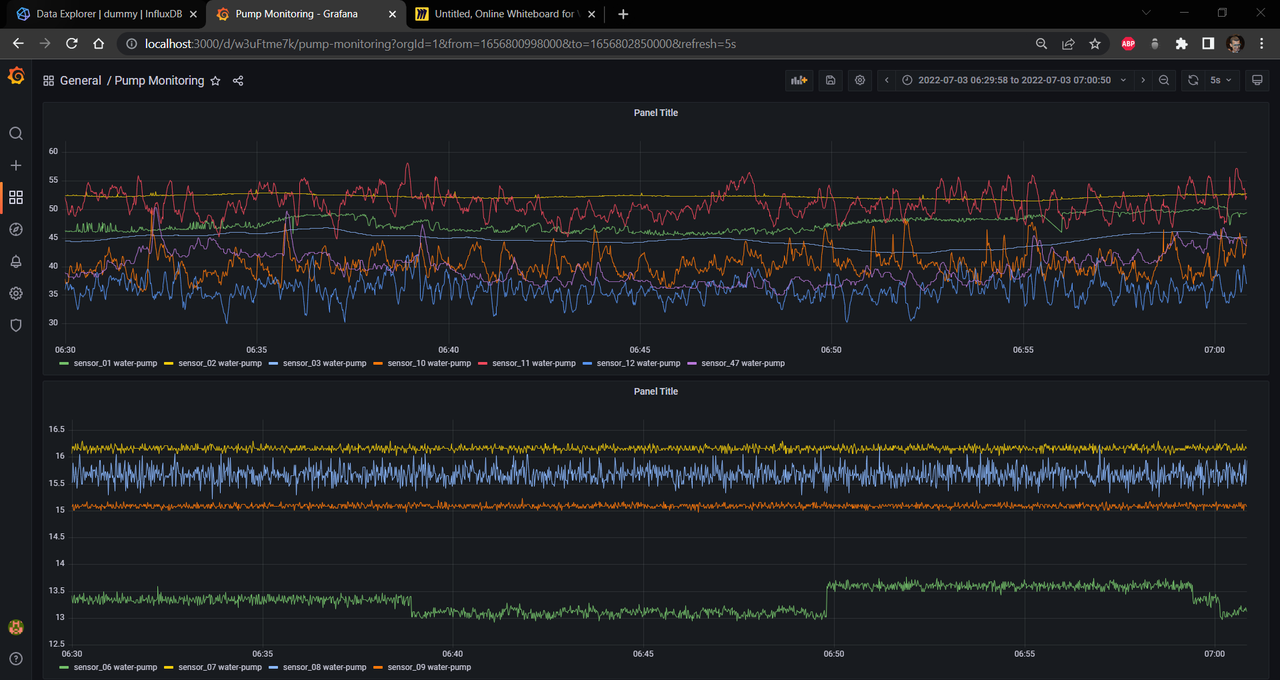

Real-time pump monitoring dashboard

The dataset is a real-world dataset generated by a water pump operating in a rural area. It was obtained from Kaggle: https://www.kaggle.com/datasets/nphantawee/pump-sensor-data

Note: The data is not included in the GitHub repo due to its large size. If you would like to use the code, make sure to download the dataset first and place it at data/sensor.csv, the path the simulator reads.

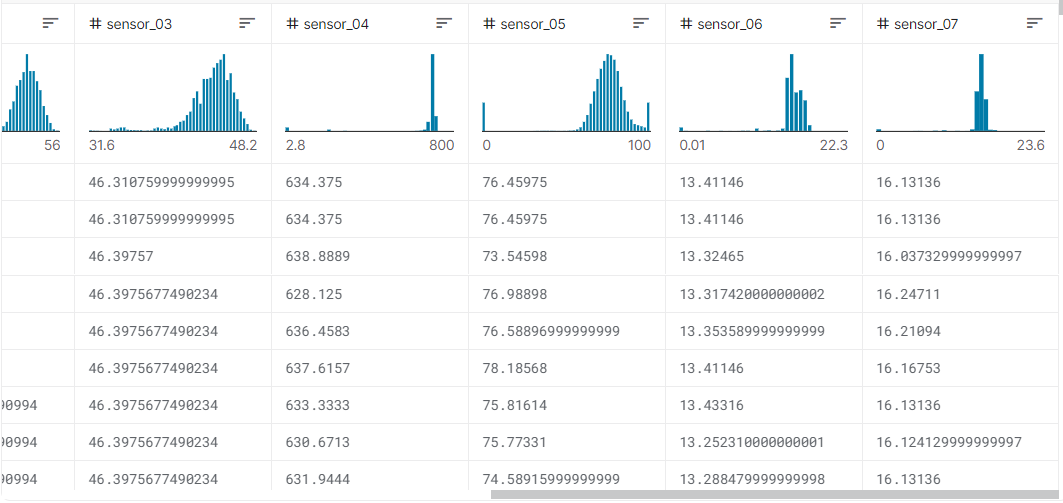

The dataset is relatively large, consisting of 1 timestamp column, 1 machine status column, 52 sensor columns and more than 200k observations.

The attributes of the dataset are as follows:
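The column layout can be written down as a short Python sketch. The sensor names follow the `sensor_00` to `sensor_51` fields used by the API model in this project. The helper `missing_columns` is a hypothetical convenience for checking a CSV header, not part of the repository.

```python
# Column names assumed from the fields used elsewhere in this project:
# one timestamp, 52 sensors (sensor_00 .. sensor_51) and one machine status.
SENSOR_COLUMNS = [f"sensor_{i:02d}" for i in range(52)]
EXPECTED_COLUMNS = ["timestamp", *SENSOR_COLUMNS, "machine_status"]


def missing_columns(header):
    """Return the expected columns that are absent from a CSV header."""
    present = set(header)
    return [name for name in EXPECTED_COLUMNS if name not in present]


print(len(EXPECTED_COLUMNS))  # 54
print(missing_columns(EXPECTED_COLUMNS))  # []
```

A check like this could be run against `pd.read_csv('data/sensor.csv').columns` before starting the simulator, to catch a wrong or partial download early.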

A pump simulator script, pump-simulator.py, was created to simulate the real-world pump. The script reads the dataset and POSTs each row (one pump sensor measurement) to an API at a 1-second interval. The pump-simulator code is as follows:

```python
import time
from datetime import datetime, timezone

import pandas as pd
import requests

# API endpoint
BASE_URL = 'http://54.197.93.27:80/pump1'

# read the data and drop the unnecessary column
data = pd.read_csv('data/sensor.csv')
data.drop('reading', axis=1, inplace=True)

# POST one row of the dataset to the API endpoint every second
for i in data.index:
    # stamp the row with the current time in UTC, matching the trailing 'Z'
    data.loc[i, 'timestamp'] = datetime.now(timezone.utc).strftime('%Y-%m-%dT%H:%M:%SZ')
    print(data.loc[i, 'timestamp'])

    # serialize the row to JSON (missing readings become null)
    measurement = data.loc[i].to_json()
    print(measurement)

    # send the JSON string as the request body and declare its content type
    response = requests.post(BASE_URL, data=measurement, headers={'Content-Type': 'application/json'})
    print(response)

    time.sleep(1)
```

An API receives the sensor readings posted by pump-simulator.py and produces each reading to a Kafka topic. The API is first built locally from the Python script api/main.py. A Dockerfile is then used to build an image of the API, which is pushed to Docker Hub. This is crucial for deployment, because the API image is used in the docker-compose.yml that runs on the EC2 instance.
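To make the request body concrete, here is a minimal sketch of how one reading could be turned into the JSON payload the API receives. It uses only the standard library. The helper name `build_payload` and the sample values are hypothetical, and stamping the reading in UTC is an assumption made so that the trailing `Z` is accurate.

```python
import json
import math
from datetime import datetime, timezone


def build_payload(row: dict) -> str:
    """Serialize one sensor reading to a JSON request body."""
    payload = {}
    for key, value in row.items():
        # JSON has no NaN literal, so a missing reading is sent as null.
        if isinstance(value, float) and math.isnan(value):
            payload[key] = None
        else:
            payload[key] = value
    # Stamp the reading with the current UTC time in ISO 8601 form.
    payload["timestamp"] = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return json.dumps(payload)


# Illustrative values only; a real row carries all 52 sensor fields.
row = {"sensor_00": 2.465, "sensor_15": float("nan"), "machine_status": "NORMAL"}
body = build_payload(row)
print(body)
```

Sending missing readings as `null` is what lets the API model declare each sensor as `Union[float, None]`.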

The code for api/main.py is as follows:

```python
import json
from typing import Union

from fastapi import FastAPI
from fastapi.encoders import jsonable_encoder
from fastapi.responses import JSONResponse
from kafka import KafkaProducer
from pydantic import BaseModel

# instantiating the producer
producer = KafkaProducer(
    bootstrap_servers='kafka:9092',
    value_serializer=lambda m: json.dumps(m).encode('ascii'),
)


# request body: one timestamped reading from all 52 sensors
class Pump(BaseModel):
    timestamp: str
    sensor_00: Union[float, None]
    sensor_01: Union[float, None]
    sensor_02: Union[float, None]
    sensor_03: Union[float, None]
    sensor_04: Union[float, None]
    sensor_05: Union[float, None]
    sensor_06: Union[float, None]
    sensor_07: Union[float, None]
    sensor_08: Union[float, None]
    sensor_09: Union[float, None]
    sensor_10: Union[float, None]
    sensor_11: Union[float, None]
    sensor_12: Union[float, None]
    sensor_13: Union[float, None]
    sensor_14: Union[float, None]
    sensor_15: Union[float, None]
    sensor_16: Union[float, None]
    sensor_17: Union[float, None]
    sensor_18: Union[float, None]
    sensor_19: Union[float, None]
    sensor_20: Union[float, None]
    sensor_21: Union[float, None]
    sensor_22: Union[float, None]
    sensor_23: Union[float, None]
    sensor_24: Union[float, None]
    sensor_25: Union[float, None]
    sensor_26: Union[float, None]
    sensor_27: Union[float, None]
    sensor_28: Union[float, None]
    sensor_29: Union[float, None]
    sensor_30: Union[float, None]
    sensor_31: Union[float, None]
    sensor_32: Union[float, None]
    sensor_33: Union[float, None]
    sensor_34: Union[float, None]
    sensor_35: Union[float, None]
    sensor_36: Union[float, None]
    sensor_37: Union[float, None]
    sensor_38: Union[float, None]
    sensor_39: Union[float, None]
    sensor_40: Union[float, None]
    sensor_41: Union[float, None]
    sensor_42: Union[float, None]
    sensor_43: Union[float, None]
    sensor_44: Union[float, None]
    sensor_45: Union[float, None]
    sensor_46: Union[float, None]
    sensor_47: Union[float, None]
    sensor_48: Union[float, None]
    sensor_49: Union[float, None]
    sensor_50: Union[float, None]
    sensor_51: Union[float, None]
    machine_status: str


app = FastAPI()


@app.get('/')
async def root():
    return {"message": "Hello World!"}


# validate the posted reading and produce it to the 'pump1' Kafka topic
@app.post('/pump1')
async def pump1(pump_data: Pump):
    json_fmt = jsonable_encoder(pump_data)
    producer.send('pump1', json_fmt)
    return JSONResponse(json_fmt, status_code=201)
```

The Dockerfile used to build the image of the API is as follows:

```dockerfile
FROM tiangolo/uvicorn-gunicorn-fastapi:python3.9

COPY ./requirements.txt /app/requirements.txt

RUN pip install --no-cache-dir --upgrade -r /app/requirements.txt

COPY ./ /app
```

For data processing, a Kafka topic ('pump1') is created and a consumer script, docker-kafka-consumer/docker-consumer.py, consumes the events from Kafka, transforms them and loads them into InfluxDB. The code is as follows:

```python
import json

from influxdb_client import InfluxDBClient
from influxdb_client.client.write_api import SYNCHRONOUS
from kafka import KafkaConsumer
from kafka.admin import KafkaAdminClient, NewTopic

# KafkaAdminClient instance
ADMIN_CLIENT = KafkaAdminClient(
    bootstrap_servers='kafka:9092',
    client_id='CLIENT',
    api_version=(0, 10, 1)
)

# kafka consumer object
CONSUMER = KafkaConsumer(
    'pump1',
    bootstrap_servers='kafka:9092',
    value_deserializer=lambda m: json.loads(m.decode('ascii'))
)

# influx client
INFLUX_CLIENT = InfluxDBClient(
    url='http://influxdb:8086',
    token='V7bAH-_Z_bcOTE_POvqJYZ3JBb9tRPdVVKd-mR_sODaV0nFxjWth5J62MkbRp4GOKztUDRnWmn3FI0MqYzD1PA==',
    org='my-org'
)


# creating the kafka topic
def create_kafka_topic(topic_name):
    try:
        topic_list = [NewTopic(name=topic_name, num_partitions=1, replication_factor=1)]
        ADMIN_CLIENT.create_topics(new_topics=topic_list, validate_only=False)
    except Exception as exc:
        # e.g. the topic already exists after a container restart
        print(f'error in creating kafka topic: {exc}')


# synchronous write API: each write is sent to InfluxDB immediately
write_api = INFLUX_CLIENT.write_api(write_options=SYNCHRONOUS)


def kafka_python_consumer():
    for msg in CONSUMER:
        # configuring the data-load to be written to influxdb:
        # every key except the timestamp becomes a field of the point
        load = {
            "measurement": "latest-pump",
            "tags": {"type": "water-pump"},
            "fields": {k: v for k, v in msg.value.items() if k != 'timestamp'},
            "time": msg.value['timestamp']
        }

        # writing to influx-db
        message = write_api.write(
            bucket='pump',
            org='my-org',
            record=load)
        print(message)
        write_api.flush()


if __name__ == '__main__':
    create_kafka_topic('pump1')
    kafka_python_consumer()
```

For data visualization, Grafana is connected to InfluxDB and a dashboard is created to monitor the sensor measurements in real time, as shown in the figure below.

Real-time pump monitoring dashboard
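The queries behind the dashboard panels are not shown here. As a rough sketch, a panel for a single sensor could use a Flux query like the one built below. The bucket (`pump`) and measurement (`latest-pump`) names are taken from the consumer script. The helper `build_flux_query` and the 15-minute window are assumptions for illustration.

```python
def build_flux_query(field: str, bucket: str = "pump",
                     measurement: str = "latest-pump", window: str = "-15m") -> str:
    """Build a Flux query returning one sensor field over a recent time window."""
    return "\n".join([
        f'from(bucket: "{bucket}")',
        f"  |> range(start: {window})",
        f'  |> filter(fn: (r) => r._measurement == "{measurement}")',
        f'  |> filter(fn: (r) => r._field == "{field}")',
    ])


query = build_flux_query("sensor_00")
print(query)
```

The printed text can be pasted into the query editor of a Grafana panel that uses an InfluxDB (Flux) data source, with one query per sensor to plot.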

For containerization, a docker-compose.yml file is created to containerize all the services, and an AWS EC2 instance is used to host and run the Docker containers.

The docker-compose.yml content is as follows:

```yaml
version: '3'

services:
  influxdb:
    image: influxdb:2.0
    ports:
      - '8086:8086'
    volumes:
      - influxdb-volume:/var/lib/influxdb2
      - influxdb-config:/etc/influxdb2
    environment:
      - DOCKER_INFLUXDB_INIT_MODE=setup
      - DOCKER_INFLUXDB_INIT_USERNAME=my-user
      - DOCKER_INFLUXDB_INIT_PASSWORD=my-password
      - DOCKER_INFLUXDB_INIT_ORG=my-org
      - DOCKER_INFLUXDB_INIT_BUCKET=air-quality
    networks:
      - pump-monitoring

  grafana:
    image: grafana/grafana:latest
    ports:
      - '3000:3000'
    environment:
      - GF_SECURITY_ADMIN_USER=admin
      - GF_SECURITY_ADMIN_PASSWORD=admin
      - INFLUXDB_DB=db0
      - INFLUXDB_ADMIN_USER=admin
      - INFLUXDB_ADMIN_PASSWORD=pw12345
      - INFLUXDB_ADMIN_USER_PASSWORD=admin
      - INFLUXDB_USERNAME=user
      - INFLUXDB_PASSWORD=user12345
    volumes:
      - grafana-volume:/var/lib/grafana
    networks:
      - pump-monitoring

  zookeeper:
    image: 'bitnami/zookeeper:latest'
    ports:
      - '2181:2181'
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes
    networks:
      - pump-monitoring

  kafka:
    image: 'bitnami/kafka:latest'
    ports:
      - '9093:9093' #change to 9093 to access external from your windows host
    environment:
      - KAFKA_BROKER_ID=1
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CLIENT:PLAINTEXT,EXTERNAL:PLAINTEXT #add additional listener for external
      - KAFKA_CFG_LISTENERS=CLIENT://:9092,EXTERNAL://:9093 #9092 will be for other containers, 9093 for your windows client
      - KAFKA_CFG_ADVERTISED_LISTENERS=CLIENT://kafka:9092,EXTERNAL://localhost:9093 #9092 will be for other containers, 9093 for your windows client
      - KAFKA_INTER_BROKER_LISTENER_NAME=CLIENT
    depends_on:
      - zookeeper
    networks:
      - pump-monitoring

  pump-consumer:
    image: 'yousefhosny1/pump-consumer:latest'
    ports:
      - '5000:5000'
    depends_on:
      - kafka
    networks:
      - pump-monitoring
    restart: on-failure

  pump:
    image: 'yousefhosny1/pump:latest'
    ports:
      - '80:80'
    depends_on:
      - kafka
      - pump-consumer
    networks:
      - pump-monitoring
    restart: always

networks:
  pump-monitoring:
    driver: bridge

volumes:
  grafana-volume:
    external: true
  influxdb-config:
    external: true
  influxdb-volume:
    external: true
```

LinkedIn: https://www.linkedin.com/in/yousef-elsayed-682864142/