| 🙋♂️ Updating to v1.0.0? Please read here. |
|---|

FusionCache is an easy to use, fast and robust cache with advanced resiliency features and an optional distributed 2nd level.

It was born after years of dealing with all sorts of different types of caches: memory caching, distributed caching, http caching, CDNs, browser cache, offline cache, you name it. So I've tried to put together these experiences and came up with FusionCache.

It uses a memory cache (any impl of the standard IMemoryCache interface) as the primary backing store and optionally a distributed, 2nd level cache (any impl of the standard IDistributedCache interface) as a secondary backing store for better resilience and higher performance, for example in a multi-node scenario or to avoid the typical effects of a cold start (initial empty cache, maybe after a restart).

Optionally, it can also use a backplane: in a multi-node scenario this will send notifications to the other nodes to keep all the memory caches involved perfectly synchronized, without any additional work.

FusionCache also includes some advanced resiliency features like cache stampede prevention, a fail-safe mechanism, fine grained soft/hard timeouts with background factory completion, customizable extensive logging and more (see below).

In August 2021, FusionCache received the Google Open Source Peer Bonus Award: here is the official blogpost.

With 🦄 A Gentle Introduction you'll get yourself comfortable with the overall concepts.

Want to start using it immediately? There's a ⭐ Quick Start for you.

Curious about what you can achieve from start to finish? There's a 👩🏫 Step By Step guide.

More into videos? The fine folks at On .NET have been kind enough to invite me on the show and listen to me mumbling random caching stuff.

These are the key features of FusionCache:

- 🛡️ Cache Stampede: automatic protection from the Cache Stampede problem

- 🔀 2nd level: an optional 2nd level handled transparently, with any implementation of IDistributedCache

- 💣 Fail-Safe: a mechanism to avoid transient failures, by reusing an expired entry as a temporary fallback

- ⏱ Soft/Hard Timeouts: a slow factory (or distributed cache) will not slow down your application, and no data will be wasted

- 📢 Backplane: in a multi-node scenario, it can notify the other nodes about changes in the cache, so all will be in-sync

- ↩️ Auto-Recovery: automatic handling of transient issues with retries and sync logic

- 🧙♂️ Adaptive Caching: for when you don't know upfront the cache duration, as it depends on the value being cached itself

- 🔂 Conditional Refresh: like HTTP Conditional Requests, but for caching

- 🦅 Eager Refresh: start a non-blocking background refresh before the expiration occurs

- 🔃 Dependency Injection + Builder: native support for Dependency Injection, with a nice fluent interface including Builder support

- 📛 Named Caches: easily work with multiple named caches, even if differently configured

- 🔭 OpenTelemetry: native observability support via OpenTelemetry

- 📜 Logging: comprehensive, structured and customizable, via the standard ILogger interface

- 💫 Fully sync/async: native support for both the synchronous and asynchronous programming model

- 📞 Events: a comprehensive set of events, both at a high level and at lower levels (memory/distributed)

- 🧩 Plugins: extend FusionCache with additional behavior like adding support for metrics, statistics, etc...
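To make two of the features above a bit more concrete, here is a minimal sketch of Adaptive Caching combined with Eager Refresh. The `Product` type, its `IsDiscontinued` flag, the `GetProductFromDb` method and all the durations are hypothetical and only serve the example; the 0.9 threshold is an arbitrary choice.

```csharp
using System;
using ZiggyCreatures.Caching.Fusion;

var cache = new FusionCache(new FusionCacheOptions());
var id = 42;

var cached = cache.GetOrSet<Product>(
    $"product:{id}",
    (ctx, _) =>
    {
        var fresh = GetProductFromDb(id);

        // Adaptive Caching: decide the duration based on the value just loaded
        ctx.Options.Duration = fresh.IsDiscontinued
            ? TimeSpan.FromHours(1)
            : TimeSpan.FromMinutes(1);

        return fresh;
    },
    options => options
        // Starting duration, possibly overridden by the factory above
        .SetDuration(TimeSpan.FromMinutes(5))
        // Eager Refresh: start a background refresh after 90% of the duration
        .SetEagerRefresh(0.9f)
);

Console.WriteLine(cached?.Name);

// Hypothetical data access method, standing in for a real database call
static Product GetProductFromDb(int id) => new Product(id, $"Product {id}", false);

// Hypothetical model used only for this example
record Product(int Id, string Name, bool IsDiscontinued);
```

The idea is that the factory knows more about the value than the caller does, so it is a natural place to pick the duration.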

Something more 😏 ?

Also, FusionCache has some nice additional features:

- ✅ Portable: targets .NET Standard 2.0, so it can run almost everywhere

- ✅ High Performance: FusionCache is optimized to minimize CPU usage and memory allocations to get better performance and lower the cost of your infrastructure all while obtaining a more stable, error resilient application

- ✅ Null caching: explicitly supports caching of null values differently than "no value". This creates a less ambiguous usage, and typically leads to better performance because it avoids the classic problem of not being able to differentiate between "the value was not in the cache, go check the database" and "the value was in the cache, and it was null"

- ✅ Circuit-breaker: it is possible to enable a simple circuit-breaker for when the distributed cache or the backplane becomes temporarily unavailable. This will prevent those components from being hit with an excessive load of requests (that would probably fail anyway) in a problematic moment, so they can gracefully get back on their feet. More advanced scenarios can be covered using a dedicated solution, like Polly

- ✅ Dynamic Jittering: setting JitterMaxDuration will add a small randomized extra duration to a cache entry's normal duration. This is useful to prevent variations of the Cache Stampede problem in a multi-node scenario

- ✅ Cancellation: every method supports cancellation via the standard CancellationToken, so it is easy to cancel an entire pipeline of operations gracefully

- ✅ Code comments: every property and method is fully documented in code, with useful information provided via IntelliSense or similar technologies

- ✅ Fully annotated for nullability: every usage of nullable references has been annotated for a better flow analysis by the compiler
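As a small illustration of Null caching and Dynamic Jittering from the list above, here is a sketch under a few assumptions: the `Product` record, the cache keys and the durations are made up for the example.

```csharp
#nullable enable
using System;
using ZiggyCreatures.Caching.Fusion;

var cache = new FusionCache(new FusionCacheOptions());

// Null caching: store an explicit null, meaning "we checked, there is no such product"
cache.Set<Product?>("product:404", null, TimeSpan.FromMinutes(1));

// TryGet returns a MaybeValue<T>, which keeps "no entry" and "entry is null" apart
var known = cache.TryGet<Product?>("product:404");
var unknown = cache.TryGet<Product?>("product:999");

Console.WriteLine($"product:404 is in the cache: {known.HasValue}");
Console.WriteLine($"product:999 is in the cache: {unknown.HasValue}");

// Dynamic Jittering: add up to 10 extra random seconds to the 2 min duration,
// so entries created at the same time on different nodes do not all expire together
var jittered = new FusionCacheEntryOptions
{
    Duration = TimeSpan.FromMinutes(2),
    JitterMaxDuration = TimeSpan.FromSeconds(10)
};
cache.Set("product:1", new Product(1, "Sample"), jittered);

// Hypothetical model used only for this example
record Product(int Id, string Name);
```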

Main packages:

| Package Name | Description |
|---|---|
| ZiggyCreatures.FusionCache | The core package |
| ZiggyCreatures.FusionCache.OpenTelemetry | Adds native support for OpenTelemetry setup |
| ZiggyCreatures.FusionCache.Chaos | A package to add some controlled chaos, for testing |

Serializers:

| Package Name | Description |
|---|---|
| ZiggyCreatures.FusionCache.Serialization.NewtonsoftJson | A serializer, based on Newtonsoft Json.NET |
| ZiggyCreatures.FusionCache.Serialization.SystemTextJson | A serializer, based on the new System.Text.Json |
| ZiggyCreatures.FusionCache.Serialization.NeueccMessagePack | A MessagePack serializer, based on the most used MessagePack serializer on .NET |
| ZiggyCreatures.FusionCache.Serialization.ProtoBufNet | A Protobuf serializer, based on protobuf-net, one of the most used Protobuf serializers on .NET |
| ZiggyCreatures.FusionCache.Serialization.CysharpMemoryPack | A serializer based on the uber fast new serializer by Neuecc, MemoryPack |
| ZiggyCreatures.FusionCache.Serialization.ServiceStackJson | A serializer based on the ServiceStack JSON serializer |

Backplanes:

| Package Name | Description |
|---|---|
| ZiggyCreatures.FusionCache.Backplane.Memory | An in-memory backplane (mainly for testing) |
| ZiggyCreatures.FusionCache.Backplane.StackExchangeRedis | A Redis backplane, based on StackExchange.Redis |

Third-party packages:

| Package Name |
|---|
| JoeShook.ZiggyCreatures.FusionCache.Metrics.Core |
| JoeShook.ZiggyCreatures.FusionCache.Metrics.EventCounters |
| JoeShook.ZiggyCreatures.FusionCache.Metrics.AppMetrics |

FusionCache can be installed via the NuGet UI (search for the ZiggyCreatures.FusionCache package) or via the NuGet package manager console:

```PowerShell
PM> Install-Package ZiggyCreatures.FusionCache
```

As an example, imagine having a method that retrieves a product from your database:

```csharp
Product GetProductFromDb(int id) {
    // YOUR DATABASE CALL HERE
}
```

💡 This is using the sync programming model, but it would be equally valid with the newer async one for even better performance.

To start using FusionCache, the first thing to do is create a cache instance:

```csharp
var cache = new FusionCache(new FusionCacheOptions());
```

If instead you are using DI (Dependency Injection) use this:

```csharp
services.AddFusionCache();
```

We can also specify some global options, like a default FusionCacheEntryOptions object to serve as a default for each call we'll make, with a duration of 2 minutes:

```csharp
var cache = new FusionCache(new FusionCacheOptions() {
    DefaultEntryOptions = new FusionCacheEntryOptions {
        Duration = TimeSpan.FromMinutes(2)
    }
});
```

Or, using DI, like this:

```csharp
services.AddFusionCache()
    .WithDefaultEntryOptions(new FusionCacheEntryOptions {
        Duration = TimeSpan.FromMinutes(2)
    })
;
```

Now, to get the product from the cache and, if not there, get it from the database in an optimized way and cache it for 30 sec (overriding the default 2 min we set above) simply do this:

```csharp
var id = 42;

cache.GetOrSet<Product>(
    $"product:{id}",
    _ => GetProductFromDb(id),
    TimeSpan.FromSeconds(30)
);
```

That's it 🎉
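For the async programming model mentioned earlier, the same call might look roughly like the sketch below. `GetProductFromDbAsync` and the `Product` record are hypothetical stand-ins for your own data access code, and the short delay only simulates a database round trip.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using ZiggyCreatures.Caching.Fusion;

var cache = new FusionCache(new FusionCacheOptions());
var id = 42;

// Same key, same 30 sec duration, but with an async factory
var product = await cache.GetOrSetAsync<Product>(
    $"product:{id}",
    ct => GetProductFromDbAsync(id, ct),
    TimeSpan.FromSeconds(30)
);

Console.WriteLine(product?.Name);

// Hypothetical async data access method, standing in for a real database call
static async Task<Product> GetProductFromDbAsync(int id, CancellationToken ct)
{
    await Task.Delay(50, ct);
    return new Product(id, $"Product {id}");
}

// Hypothetical model used only for this example
record Product(int Id, string Name);
```

Passing the factory's CancellationToken down to the database call lets a cancelled or timed out operation stop the underlying work too.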

Want a little bit more 😏 ?

Now, imagine we want to do the same, but also:

- set the priority of the cache item to High (mainly used in the underlying memory cache)

- enable fail-safe for 2 hours, to allow an expired value to be used again in case of problems with the database (read more)

- set a factory soft timeout of 100 ms, to prevent overly slow factories from crippling your application when there's a fallback value readily available (read more)

- set a factory hard timeout of 2 sec, so that, even if there is no fallback value to use, you will not wait indefinitely but instead an exception will be thrown to let you handle it however you want (read more)

To do all of that we simply have to change the last line (reformatted for better readability):

```csharp
cache.GetOrSet<Product>(
    $"product:{id}",
    _ => GetProductFromDb(id),
    // THIS IS WHERE THE MAGIC HAPPENS
    options => options
        .SetDuration(TimeSpan.FromSeconds(30))
        .SetPriority(CacheItemPriority.High)
        .SetFailSafe(true, TimeSpan.FromHours(2))
        .SetFactoryTimeouts(TimeSpan.FromMilliseconds(100), TimeSpan.FromSeconds(2))
);
```

Basically, on top of specifying the cache key and the factory, instead of specifying just a duration as a TimeSpan we specify a FusionCacheEntryOptions object, which contains all the options needed to control the behavior of FusionCache during each operation. We do so in the form of a lambda that automatically duplicates the default entry options defined before (to copy all our defaults) while giving us a chance to modify them as we like for this specific call.

Now let's say we really like this set of options (priority, fail-safe and factory timeouts) and we want them to be the overall defaults, while keeping the ability to change something on a per-call basis (like the duration).

To do that we simply move the customization of the entry options to where we created the DefaultEntryOptions, by changing it to something like this (the same is true for the DI way):

```csharp
var cache = new FusionCache(new FusionCacheOptions() {
    DefaultEntryOptions = new FusionCacheEntryOptions()
        .SetDuration(TimeSpan.FromMinutes(2))
        .SetPriority(CacheItemPriority.High)
        .SetFailSafe(true, TimeSpan.FromHours(2))
        .SetFactoryTimeouts(TimeSpan.FromMilliseconds(100), TimeSpan.FromSeconds(2))
});
```

Now these options will serve as the cache-wide default, usable in every method call as a "starting point".

Then, we just change our method call to simply this:

```csharp
var id = 42;

cache.GetOrSet<Product>(
    $"product:{id}",
    _ => GetProductFromDb(id),
    options => options.SetDuration(TimeSpan.FromSeconds(30))
);
```

The DefaultEntryOptions we set before will be duplicated and only the duration will be changed for this call.
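Going one step further, the optional 2nd level and the backplane described at the top can be added through the same DI builder. The following is only a sketch: it assumes a Redis instance reachable at `localhost:6379` (a placeholder connection string), and it assumes the Microsoft.Extensions.Caching.StackExchangeRedis package plus the SystemTextJson serializer and StackExchangeRedis backplane packages listed above are installed.

```csharp
using System;
using Microsoft.Extensions.Caching.StackExchangeRedis;
using Microsoft.Extensions.DependencyInjection;
using ZiggyCreatures.Caching.Fusion;
using ZiggyCreatures.Caching.Fusion.Backplane.StackExchangeRedis;
using ZiggyCreatures.Caching.Fusion.Serialization.SystemTextJson;

var services = new ServiceCollection();

// Placeholder connection string: replace with your own
var redis = "localhost:6379";

services.AddFusionCache()
    .WithDefaultEntryOptions(new FusionCacheEntryOptions {
        Duration = TimeSpan.FromMinutes(2)
    })
    // A serializer is needed to store values in the distributed cache
    .WithSerializer(new FusionCacheSystemTextJsonSerializer())
    // 2nd level: any IDistributedCache implementation, here Redis
    .WithDistributedCache(new RedisCache(new RedisCacheOptions { Configuration = redis }))
    // Backplane: notifies the other nodes about changes
    .WithBackplane(new RedisBackplane(new RedisBackplaneOptions { Configuration = redis }))
;

using var provider = services.BuildServiceProvider();
var cache = provider.GetRequiredService<IFusionCache>();
```

The calls to GetOrSet shown above stay exactly the same: the 2nd level and the backplane are handled transparently.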

If you are in for a ride you can read a complete step by step example of why a cache is useful, why FusionCache could be even more so, how to apply most of the options available and what results you can expect to obtain.

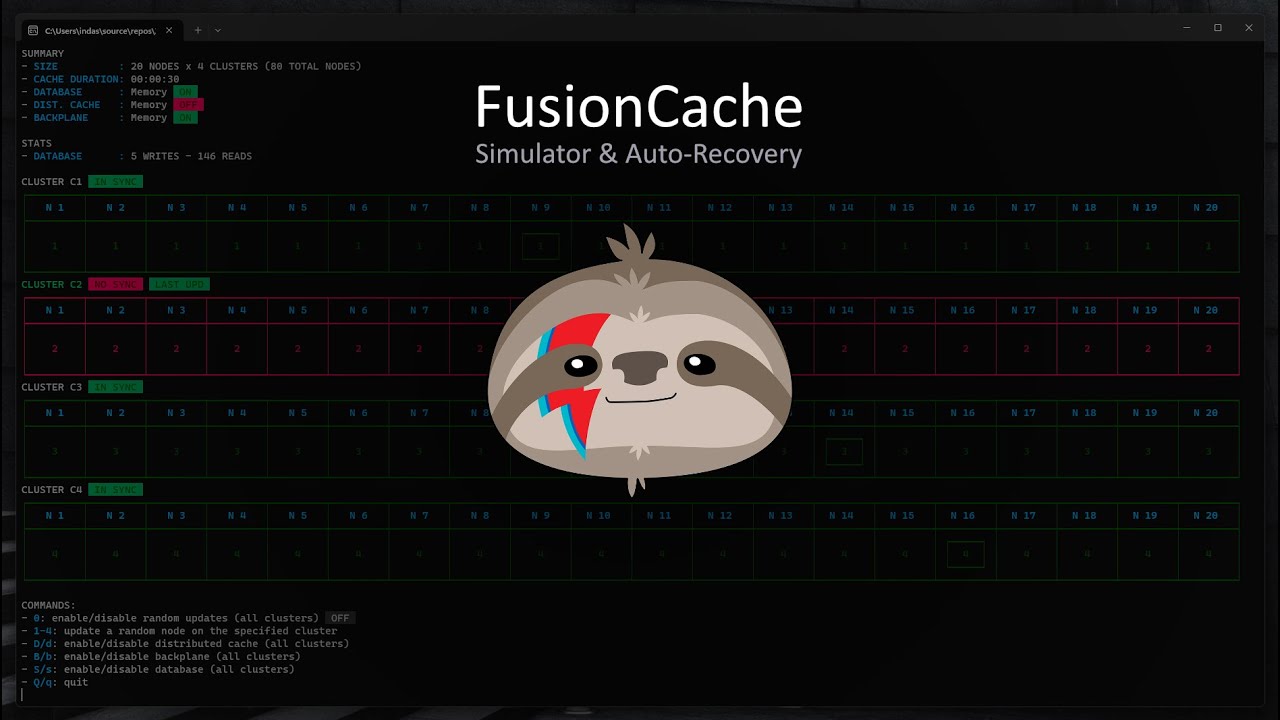

Distributed systems are, in general, quite complex to understand.

When using FusionCache with the distributed cache, the backplane and auto-recovery, the Simulator can help us see the whole picture.

FusionCache targets .NET Standard 2.0 so any compatible .NET implementation is fine: this means .NET Framework (the old one), .NET Core 2+ and .NET 5/6/7/8+ (the new ones), Mono 5.4+ and more (see here for a complete rundown).

NOTE: if you are running on .NET Framework 4.6.1 and want to use .NET Standard packages, Microsoft suggests upgrading to .NET Framework 4.7.2 or higher (see the .NET Standard Documentation) to avoid some known dependency issues.

There are various alternatives out there with different features, different performance characteristics (cpu/memory) and in general a different set of pros/cons.

A feature comparison between existing .NET caching solutions may help you choose which one to use.

Want to support the project? Nothing to do here.

After years of using a lot of open source stuff for free, this is just me trying to give something back to the community.

If you find FusionCache useful just ✉ drop me a line, I would be interested in knowing how you're using it.

And if you really want to talk about money, please consider making ❤ a donation to a good cause of your choosing, and let me know about that.

Is it production ready? Yes!

FusionCache has been used in production on real-world projects for years, happily handling millions of requests.

Considering that the FusionCache packages have been downloaded more than 4 million times (thanks everybody!) it may very well be used even more.

And again, if you are using it please ✉ drop me a line, I'd like to know!