Important

PipeRider has been superseded by Recce. We recommend that users requiring pre-merge data validation checks migrate to Recce. PipeRider will not longer be updated on a regular basis. You are still welcome to open a PR with bug fixes or feature requests. For questions and help regarding this update, please contact [email protected] or leave a message in the Recce Discord.

PipeRider automatically compares your data to highlight the difference in impacted downstream dbt models so you can merge your Pull Requests with confidence.

- Easy to connect your datasource -> PipeRider leverages the connection profiles in your dbt project to connect to the data warehouse

- Generate profiling statistics of your models to get a high-level overview of your data

- Compare target branch changes with the main branch in a HTML report

- Post a quick summary of the data changes to your PR, so others can be confident too

- Easy to install: Leveraging dbt's configuration settings, PipeRider can be installed within 2 minutes

- Fast comparison: by collecting profiling statistics (e.g. uniqueness, averages, quantiles, histogram) and metric queries, comparing downstream data impact takes little time, speeding up your team's review time

- Valuable insights: various profiling statistics displayed in the HTML report give fast insights into your data

-

Install PipeRider

pip install piperider[<connector>]

You can find all supported data source connectors here.

-

Add PipeRider tag on your model: Go to your dbt project, and add the PipeRider tag on the model you want to profile.

--models/staging/stg_customers.sql {{ config( tags=["piperider"] ) }} select ...

and show the models would be run by piperider

dbt list -s tag:piperider --resource-type model -

Run PipeRider

piperider run

To see the full quick start guide, please refer to PipeRider documentation

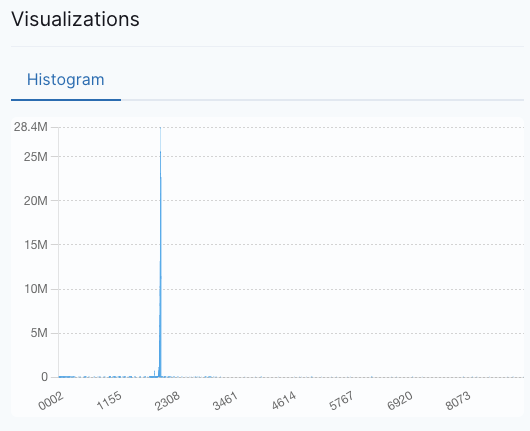

- Model profiling: PipeRider can profile your dbt models and obtain information such as basic data composition, quantiles, histograms, text length, top categories, and more.

- Metric queries: PipeRider can integrate with dbt metrics and present the time-series data of metrics in the report.

- HTML report: PipeRider generates a static HTML report each time it runs, which can be viewed locally or shared.

- Report comparison: You can compare two previously generated reports or use a single command to compare the differences between the current branch and the main branch. The latter is designed specifically for code review scenarios. In our pull requests on GitHub, we not only want to know which files have been changed, but also the impact of these changes on the data. PipeRider can easily generate comparison reports with a single command to provide this information.

- CI integration: The key to CI is automation, and in the code review process, automating this workflow is even more meaningful. PipeRider can easily integrate into your CI process. When new commits are pushed to your PR branch, reports can be automatically generated to provide reviewers with more confidence in the changes made when reviewing.

We use the example project git-repo-analytics to demonstrate how to use piperider+dbt+duckdb to analyze dbt-core repository. Here is the generated result (daily update)

PipeRider Cloud allows you to upload reports and share them with your team members. For information on pricing plans, please refer to the pricing page.

PipeRider provides the PipeRider Compare Action to quickly integrate into your Github Actions workflow. It has the following features:

- Automatically generates a report comparing the PR branch to the main branch

- Uploads the report to GitHub artifacts or PipeRider cloud

- Adds a comment to the pull request with a comparison summary and a link to the report.

You can refer to example workflow yaml and the example pull request.

See setup dev environment and the contributing guildlines to get started.

We love chatting with our users! Let us know if you have any questions, feedback, or need help trying out PipeRider! ❤️