Get public TCP LoadBalancers for local Kubernetes clusters

When using a managed Kubernetes engine, you can expose a Service as a "LoadBalancer" and your cloud provider will provision a TCP cloud load balancer for you, and start routing traffic to the selected service inside your cluster. In other words, you get ingress to an otherwise internal service.

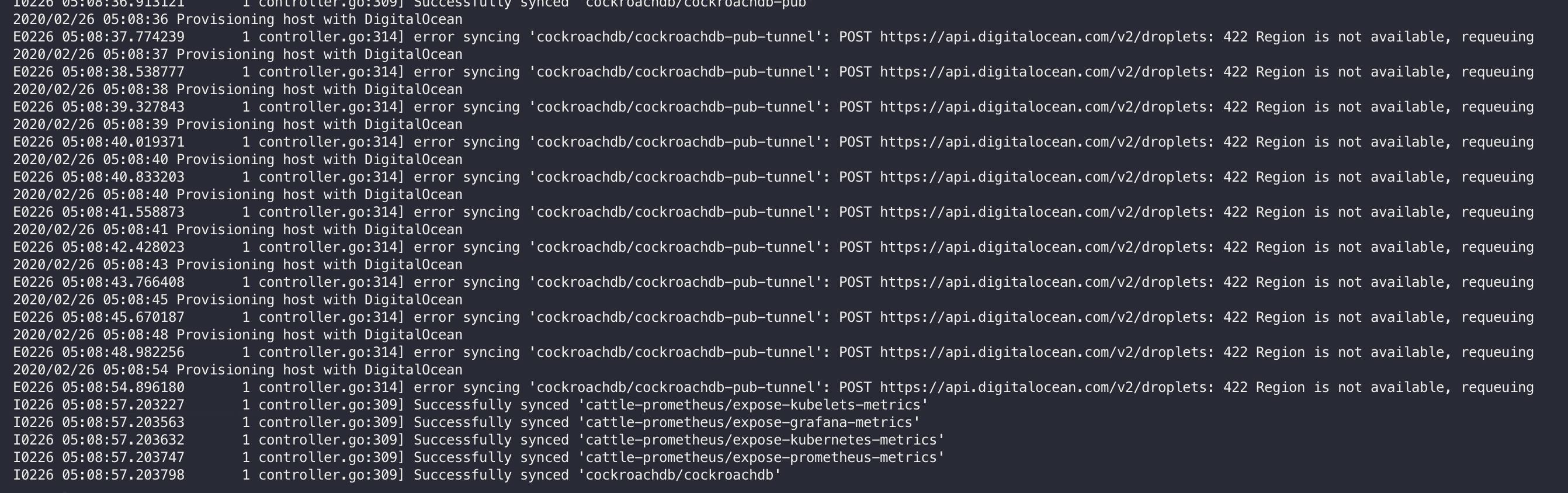

The inlets-operator brings that same experience to your local Kubernetes cluster by provisioning a VM on the public cloud and running an inlets server process there.

Within the cluster, it runs the inlets client as a Deployment, and once the two are connected, it updates the original service with the IP, just like a managed Kubernetes engine.

Deleting the Service, or annotating it so that the operator ignores it, will cause the cloud VM to be deleted.


Once the inlets-operator is installed, any Service of type LoadBalancer will get an IP address, unless you exclude it with an annotation.
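
For readers who keep Services in manifests, here is a minimal sketch of a Service of type LoadBalancer that the operator would pick up. It assumes the nginx-1 Pod used in this section, which kubectl run labels with run=nginx-1. The commented-out annotation is the opt-out described later in this document.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-1
  namespace: default
  # Uncomment to exclude this Service from the inlets-operator:
  # annotations:
  #   operator.inlets.dev/manage: "0"
spec:
  type: LoadBalancer
  selector:
    run: nginx-1        # assumption: label applied by `kubectl run nginx-1`
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
```

Annotation values are strings in Kubernetes, so the 0 is quoted in YAML.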

    kubectl run nginx-1 --image=nginx --port=80 --restart=Always
    kubectl expose pod/nginx-1 --port=80 --type=LoadBalancer

    $ kubectl get services -w
    NAME              TYPE        CLUSTER-IP        EXTERNAL-IP       PORT(S)   AGE
    service/nginx-1   ClusterIP   192.168.226.216   <pending>         80/TCP    78s
    service/nginx-1   ClusterIP   192.168.226.216   104.248.163.242   80/TCP    78s

You'll also find a Tunnel Custom Resource created for you:

    $ kubectl get tunnels
    NAMESPACE   NAME             SERVICE   HOSTSTATUS     HOSTIP         HOSTID
    default     nginx-1-tunnel   nginx-1   provisioning                  342453649
    default     nginx-1-tunnel   nginx-1   active         178.62.64.13   342453649

We recommend exposing an Ingress Controller or Istio Ingress Gateway, see also: Expose an Ingress Controller
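
To illustrate that recommendation: once an Ingress Controller is exposed through a single tunnel, individual applications can usually stay internal and be routed by Ingress rules. The manifest below is only a sketch using the standard networking.k8s.io/v1 API. The host name nginx.example.com and the ingress class nginx are hypothetical and depend on your own DNS and controller.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-1
  namespace: default
spec:
  ingressClassName: nginx        # assumption: ingress-nginx is installed
  rules:
    - host: nginx.example.com    # hypothetical domain pointing at the tunnel's public IP
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-1    # the Service created earlier in this document
                port:
                  number: 80
```

With this layout only the Ingress Controller's Service needs to be of type LoadBalancer, so a single exit-server VM can serve several applications.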

Want to create tunnels for all LoadBalancer services, but ignore one or two?

Want to disable the inlets-operator for a particular Service? Add the annotation operator.inlets.dev/manage with a value of 0.

    kubectl annotate service nginx-1 operator.inlets.dev/manage=0

Want to ignore all services, then only create Tunnels for annotated ones?

Install the chart with annotatedOnly: true, then run:

    kubectl annotate service nginx-1 operator.inlets.dev/manage=1

For IPVS, you need to declare a Tunnel Custom Resource instead of using the LoadBalancer field.

    apiVersion: operator.inlets.dev/v1alpha1
    kind: Tunnel
    metadata:
      name: nginx-1-tunnel
      namespace: default
    spec:
      serviceRef:
        name: nginx-1
        namespace: default
    status: {}

You can pre-define the auth token for the tunnel if you need to:

    spec:
      authTokenRef:
        name: nginx-1-tunnel-token
        namespace: default

Your cluster could be running anywhere: on your laptop, in an on-premises datacenter, within a VM, or on your Raspberry Pi. Ingress and LoadBalancers are a core building block of Kubernetes clusters, so Ingress is especially important if you:

- run a private-cloud or a homelab

- self-host applications and APIs

- test and share work with colleagues or clients

- want to build a realistic environment

- integrate with webhooks and third-party APIs

There is no need to open a firewall port, set up port-forwarding rules, configure dynamic DNS, or use any of the usual hacks. You will get a public IP and it will "just work" for any TCP traffic you may have.

- There are no rate limits on connections or bandwidth limits

- You can use your own DNS

- You can use any IngressController or an Istio Ingress Gateway

- You can take your IP address with you - wherever you go

Any Service of type LoadBalancer can be exposed within a few seconds.

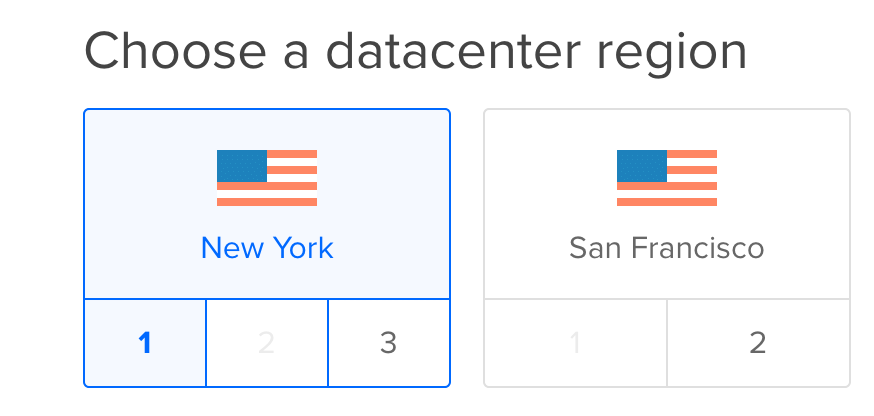

Since exit-servers are created in your preferred cloud (around a dozen are supported already), you'll only have to pay for the cost of the VM, and where possible, the cheapest plan has already been selected for you. For example with Hetzner (coming soon) that's about 3 EUR / mo, and with DigitalOcean it comes in at around 5 USD - both of these VPSes come with generous bandwidth allowances, global regions and fast network access.

In this animation by Ivan Velichko, you see the operator in action.

It detects a new Service of type LoadBalancer, provisions a VM in the cloud, and then updates the Service with the IP address of the VM.

There's also a video walk-through of exposing an Ingress Controller

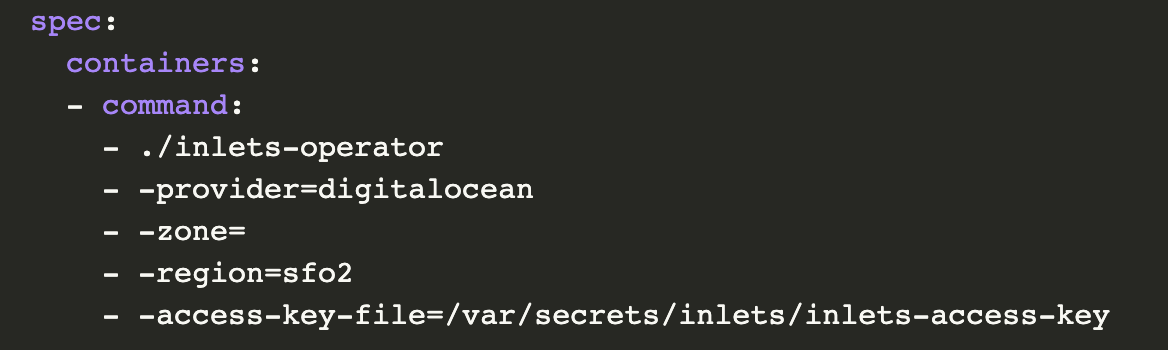

Check out the reference documentation for inlets-operator to get exit-nodes provisioned on different cloud providers.

See also: Helm chart

Unlike other solutions, this:

- Integrates directly into Kubernetes

- Gives you a TCP LoadBalancer, and updates its IP in kubectl get svc

- Allows you to use any custom DNS you want

- Works with Let's Encrypt

Configuring ingress:

- Expose Ingress Nginx or another IngressController

- Expose an Istio Ingress Gateway

- Expose Traefik with K3s to the Internet

- Node.js microservice with Let's Encrypt

- OpenFaaS with Let's Encrypt

- Docker Registry with Let's Encrypt

The host provisioning code used by the inlets-operator is shared with inletsctl; both tools use the configuration in the table below.

Treat these costs as estimates; they depend on your bandwidth usage and how many hosts you decide to create. You can check your cloud provider's dashboard, API, or CLI at any time to view your exit-nodes. The hosts listed were chosen because they are the lowest-cost options that the maintainers could find.

| Provider | Price per month | Price per hour | OS image | CPU | Memory | Boot time |
|---|---|---|---|---|---|---|
| Google Compute Engine | ~$4.28* | ~$0.006 | Ubuntu 20.04 | 1 | 614MB | ~3-15s |
| Equinix Metal | ~$360 | $0.50 | Ubuntu 20.04 | 1 | 32GB | ~45-60s |
| DigitalOcean | $5 | ~$0.0068 | Ubuntu 18.04 | 1 | 1GB | ~20-30s |
| Scaleway | 5.84€ | 0.01€ | Ubuntu 20.04 | 2 | 2GB | 3-5min |
| Amazon EC2 | $3.796 | $0.0052 | Ubuntu 20.04 | 1 | 1GB | 3-5min |
| Linode | $5 | $0.0075 | Ubuntu 20.04 | 1 | 1GB | ~10-30s |
| Azure | $4.53 | $0.0062 | Ubuntu 20.04 | 1 | 0.5GB | 2-4min |
| Hetzner | 4.15€ | 0.007€ | Ubuntu 20.04 | 1 | 2GB | ~5-10s |

*The first f1-micro instance in a GCP Project (the default instance type for inlets-operator) is free for 720 hours (30 days) a month.

In this video walk-through, Alex guides you through creating a Kubernetes cluster on your laptop with KinD, then installing ingress-nginx (an IngressController) and cert-manager. Once the inlets-operator has created a LoadBalancer on the cloud, you'll see a TLS certificate obtained from Let's Encrypt.

Tutorial: Expose a local IngressController with the inlets-operator

Contributions are welcome; see the CONTRIBUTING.md guide.

- inlets - L7 HTTP / L4 TCP tunnel which can tunnel any TCP traffic. Secure by default with built-in TLS encryption. Kubernetes-ready with Operator, helm chart, container images and YAML manifests

- MetalLB - a LoadBalancer for private Kubernetes clusters, cannot expose services publicly

- kube-vip - a more modern Kubernetes LoadBalancer than MetalLB, cannot expose services publicly

- Cloudflare Argo - product from Cloudflare for Cloudflare customers and domains - K8s integration available through Cloudflare DNS and ingress controller. Not for use with custom Ingress Controllers

- ngrok - a SaaS-only tunnel service, restarts every 7 hours, limits connections per minute, no K8s integration available, TCP tunnels can only use high/unconventional ports, can't be used with Ingress Controllers

- WireGuard - a modern VPN, not for exposing services publicly

- Tailscale - a mesh VPN that automates WireGuard, not for exposing services publicly

inlets and the inlets-operator are brought to you by OpenFaaS Ltd.