This is an incremental Markdown (CommonMark, with support for extensions) parser that integrates well with the Lezer parser system. It does not in fact use the Lezer runtime (that runs LR parsers, and Markdown can't really be parsed that way), but it produces Lezer-style compact syntax trees and consumes fragments of such trees for its incremental parsing.
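A minimal sketch of the incremental workflow, assuming `@lezer/markdown` and `@lezer/common` are installed. It parses a document, wraps the old tree in `TreeFragment`s (the general Lezer mechanism for reuse), maps them through a made-up edit, and reparses. The document text and the edit are illustrative only.

```typescript
import {parser} from "@lezer/markdown"
import {TreeFragment} from "@lezer/common"

// Initial parse of a small example document.
const doc1 = "# Title\n\nSome *text* here.\n"
const tree1 = parser.parse(doc1)

// A hypothetical edit: insert "more " just before "*text*".
const at = doc1.indexOf("*text*")
const inserted = "more "
const doc2 = doc1.slice(0, at) + inserted + doc1.slice(at)

// Wrap the old tree in fragments, then map them through the edit.
// fromA/toA are positions in the old text, fromB/toB in the new text.
const fragments = TreeFragment.applyChanges(TreeFragment.addTree(tree1), [
  {fromA: at, toA: at, fromB: at, toB: at + inserted.length}
])

// Reparse; regions covered by surviving fragments may be reused.
const tree2 = parser.parse(doc2, fragments)
console.log(tree2.toString())
```

The reparsed tree should match a fresh parse of the new text; the fragments only allow the parser to skip work.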

Note that this only parses the document, producing a data structure that represents its syntactic form, and doesn't help with outputting HTML. Also, in order to be single-pass and incremental, it doesn't do some things that a conforming CommonMark parser is expected to do: specifically, it doesn't validate link references, so it'll parse `[a][b]` and similar as a link, even if no `[b]` reference is declared.
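To see the link-reference behavior described above, the sketch below (assuming the default `parser` export) collects node names from two parses. `nodeNames` is a hypothetical helper written for this example.

```typescript
import {parser} from "@lezer/markdown"

// Hypothetical helper: node names of a parse, in document order.
function nodeNames(doc: string): string[] {
  const names: string[] = []
  parser.parse(doc).iterate({
    enter(node) { names.push(node.name) }
  })
  return names
}

// No definition for [b] exists, yet the parser still emits a Link node.
const undefinedRef = nodeNames("See [a][b].")
// With a definition present, the definition itself becomes a LinkReference.
const definedRef = nodeNames("See [a][b].\n\n[b]: https://example.com\n")

console.log(undefinedRef)
console.log(definedRef)
```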

The @codemirror/lang-markdown package integrates this parser with CodeMirror to provide Markdown editor support.

The code is licensed under an MIT license.

-

parser: MarkdownParser The default CommonMark parser.

-

class MarkdownParser extends Parser A Markdown parser configuration.

-

nodeSet: NodeSet The parser's syntax node types.

-

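configure(spec: MarkdownExtension) → MarkdownParser Reconfigure the parser. This returns a parser with the given extension(s) applied; the parser it is called on is not modified.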

-

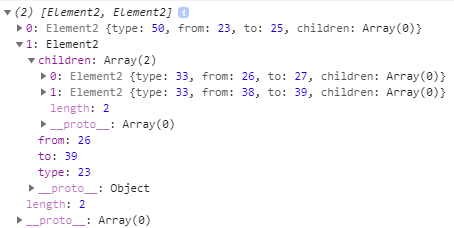

parseInline(text: string, offset: number) → Element[] Parse the given piece of inline text at the given offset, returning an array of `Element` objects representing the inline content.
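A short sketch of calling `parseInline` directly on the default `parser`. It assumes each returned `Element` exposes document-relative `from`/`to` positions and a numeric node type id in `type`, which is mapped back to a name through the parser's `nodeSet`.

```typescript
import {parser} from "@lezer/markdown"

// Parse inline text as though it started at document position 10.
const elements = parser.parseInline("*hi* there", 10)

for (const el of elements) {
  // `type` is a node type id; nodeSet.types maps ids to node types.
  console.log(parser.nodeSet.types[el.type].name, el.from, el.to)
}
```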

-

-

interface MarkdownConfig Objects of this type are used to configure the Markdown parser.

-

props?: readonly NodePropSource[] Node props to add to the parser's node set.

-

defineNodes?: readonly (string | NodeSpec)[] Define new node types for use in parser extensions.

-

parseBlock?: readonly BlockParser[] Define additional block parsing logic.

-

parseInline?: readonly InlineParser[] Define new inline parsing logic.

-

remove?: readonly string[] Remove the named parsers from the configuration.

-

wrap?: ParseWrapper Add a parse wrapper (such as a mixed-language parser) to this parser.

-

-

type MarkdownExtension = MarkdownConfig | readonly MarkdownExtension[] To make it possible to group extensions together into bigger extensions (such as the GitHub-flavored Markdown extension), reconfiguration accepts nested arrays of config objects.
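A sketch of passing a nested extension array to `configure`, assuming the package's exported `GFM` and `Subscript` extensions. The inline config object uses `remove` with `HTMLBlock`, one of the default block parser names, so an HTML block is then read as an ordinary paragraph.

```typescript
import {parser, GFM, Subscript} from "@lezer/markdown"

// Nested arrays are accepted: the GFM bundle (itself an array), a single
// extension, and an inline config object that removes a default parser by name.
const custom = parser.configure([GFM, Subscript, {remove: ["HTMLBlock"]}])

const html = "<div>\nhi\n</div>\n"
const defaultTop = parser.parse(html).topNode.firstChild?.name
const customTop = custom.parse(html).topNode.firstChild?.name
console.log(defaultTop, customTop)
```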

-

parseCode(config: Object) → MarkdownExtension Create a Markdown extension to enable nested parsing on code blocks and/or embedded HTML.

-

config -

codeParser?: fn(info: string) → Parser | null When provided, this will be used to parse the content of code blocks. `info` is the string after the opening `` ``` `` marker, or the empty string if there is no such info or this is an indented code block. If there is a parser available for the code, it should return that parser; otherwise it should return null.

-

htmlParser?: Parser The parser used to parse HTML tags (both block and inline).
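A minimal sketch showing which `info` strings `codeParser` receives. It returns null for everything, so no nested parsing happens; in practice you would return a Lezer parser for the languages you support.

```typescript
import {parser, parseCode} from "@lezer/markdown"

// Record every info string handed to codeParser. Returning null means no
// nested parser is used, so code content stays unparsed.
const infos: string[] = []
const withCode = parser.configure(parseCode({
  codeParser(info) {
    infos.push(info)
    return null
  }
}))

// One fenced block with info "js", then an indented code block (no info).
withCode.parse("```js\nlet x = 1\n```\n\n    indented code\n")
console.log(infos)
```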

-

-

-

GFM: MarkdownConfig[] Extension bundle containing `Table`, `TaskList`, `Strikethrough`, and `Autolink`.

-

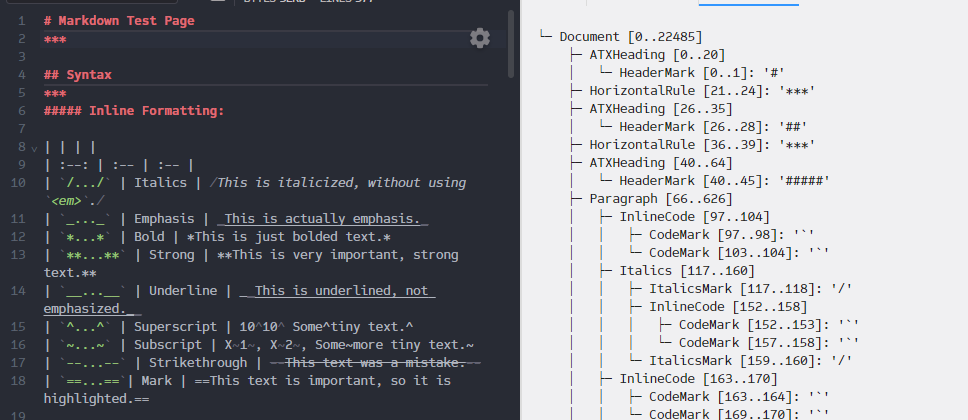

Table: MarkdownConfig This extension provides GFM-style tables, using syntax like this:

    | head 1 | head 2 |
    | ---    | ---    |
    | cell 1 | cell 2 |

-

TaskList: MarkdownConfig Extension providing GFM-style task list items, where list items can be prefixed with `[ ]` or `[x]` to add a checkbox.

-

Strikethrough: MarkdownConfig An extension that implements GFM-style Strikethrough syntax using `~~` delimiters.

-

Autolink: MarkdownConfig Extension that implements autolinking for `www.`/`http://`/`https://`/`mailto:`/`xmpp:` URLs and email addresses.
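A sketch that enables the `GFM` bundle and checks that each of its extensions shows up in the tree. `namesIn` is a hypothetical helper, and the node names checked (`Table`, `TableCell`, `Task`, `TaskMarker`, `Strikethrough`, `URL`) are the ones these extensions are assumed to produce.

```typescript
import {parser, GFM} from "@lezer/markdown"

const gfm = parser.configure(GFM)

// Hypothetical helper: the set of node names that appear in a parse.
function namesIn(doc: string): Set<string> {
  const names = new Set<string>()
  gfm.parse(doc).iterate({enter(node) { names.add(node.name) }})
  return names
}

const sample = [
  "| head 1 | head 2 |",
  "| --- | --- |",
  "| cell 1 | cell 2 |",
  "",
  "- [x] done",
  "",
  "~~old~~ and https://example.com"
].join("\n")

const gfmNames = namesIn(sample)
for (const n of ["Table", "TableCell", "Task", "TaskMarker", "Strikethrough", "URL"])
  console.log(n, gfmNames.has(n))
```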

-

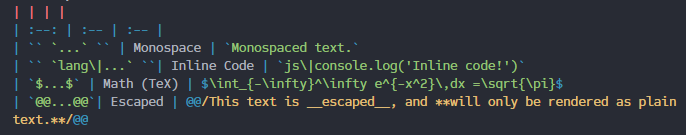

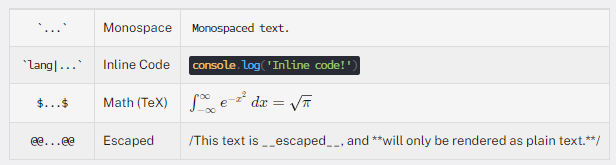

Subscript: MarkdownConfig Extension providing Pandoc-style subscript using `~` markers.

-

Superscript: MarkdownConfig Extension providing Pandoc-style superscript using `^` markers.

-

Emoji: MarkdownConfig Extension that parses two colons with only letters, underscores, and numbers between them as `Emoji` nodes.
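A sketch combining the `Subscript`, `Superscript`, and `Emoji` extensions, which are not part of the `GFM` bundle. The node names checked (`Subscript`, `Superscript`, `Emoji`) are assumed to match the extension names.

```typescript
import {parser, Subscript, Superscript, Emoji} from "@lezer/markdown"

const extended = parser.configure([Subscript, Superscript, Emoji])

// Collect node names for a line that uses all three syntaxes.
const extNames: string[] = []
extended.parse("H~2~O, x^2^, and :smile:").iterate({
  enter(node) { extNames.push(node.name) }
})
console.log(extNames)
```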

The parser can, to a certain extent, be extended to handle additional syntax.

-

interface NodeSpec Used in the configuration to define new syntax node types.

-

name: string The node's name.

-

block?: boolean Should be set to true if this type represents a block node.

-

composite?: fn(cx: BlockContext, line: Line, value: number) → boolean If this is a composite block, this should hold a function that, at the start of a new line where that block is active, checks whether the composite block should continue (return value) and optionally adjusts the line's base position and registers nodes for any markers involved in the block's syntax.

-

style?: Tag | readonly Tag[] | Object<Tag | readonly Tag[]> Add highlighting tag information for this node. The value of this property may either be a tag or array of tags to assign directly to this node, or an object in the style of `styleTags`'s argument to assign more complicated rules.

-

-

class BlockContext implements PartialParse Block-level parsing functions get access to this context object.

-

lineStart: number The start of the current line.

-

parser: MarkdownParser The parser configuration used.

-

depth: number The number of parent blocks surrounding the current block.

-

parentType(depth?: number = this.depth - 1) → NodeType Get the type of the parent block at the given depth. When no depth is passed, return the type of the innermost parent.

-

nextLine() → boolean Move to the next input line. This should only be called by (non-composite) block parsers that consume the line directly, or leaf block parser `nextLine` methods when they consume the current line (and return true).

-

prevLineEnd() → number The end position of the previous line.

-

startComposite(type: string, start: number, value?: number = 0) Start a composite block. Should only be called from block parser functions that return null.

-

addElement(elt: Element) Add a block element. Can be called by block parsers.

-

addLeafElement(leaf: LeafBlock, elt: Element) Add a block element from a leaf parser. This makes sure any extra composite block markup (such as blockquote markers) inside the block is also added to the syntax tree.

-

elt(type: string, from: number, to: number, children?: readonly Element[]) → Element Create an `Element` object to represent some syntax node.

-

-

interface BlockParser Block parsers handle block-level structure. There are three general types of block parsers:

- Composite block parsers, which handle things like lists and blockquotes. These define a `parse` method that starts a composite block and returns null when it recognizes its syntax.

- Eager leaf block parsers, used for things like code or HTML blocks. These can unambiguously recognize their content from its first line. They define a `parse` method that, if it recognizes the construct, moves the current line forward to the line beyond the end of the block, adds a syntax node for the block, and returns true.

- Leaf block parsers that observe a paragraph-like construct as it comes in, and optionally decide to handle it at some point. This is used for "setext" (underlined) headings and link references. These define a `leaf` method that checks the first line of the block and returns a `LeafBlockParser` object if it wants to observe that block.

-

name: string The name of the parser. Can be used by other block parsers to specify precedence.

-

parse?: fn(cx: BlockContext, line: Line) → boolean | null The eager parse function, which can look at the block's first line and return false to do nothing, true if it has parsed (and moved past a block), or null if it has started a composite block.

-

leaf?: fn(cx: BlockContext, leaf: LeafBlock) → LeafBlockParser | null A leaf parse function. If no regular parse functions match for a given line, its content will be accumulated for a paragraph-style block. This method can return an object that overrides that style of parsing in some situations.

-

endLeaf?: fn(cx: BlockContext, line: Line, leaf: LeafBlock) → boolean Some constructs, such as code blocks or newly started blockquotes, can interrupt paragraphs even without a blank line. If your construct can do this, provide a predicate here that recognizes lines that should end a paragraph (or other non-eager leaf block).

-

before?: string When given, this parser will be installed directly before the block parser with the given name. The default configuration defines block parsers with names LinkReference, IndentedCode, FencedCode, Blockquote, HorizontalRule, BulletList, OrderedList, ATXHeading, HTMLBlock, and SetextHeading.

-

after?: string When given, the parser will be installed directly after the parser with the given name.
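A sketch of an eager leaf block parser with an `endLeaf` predicate. The `%%` comment syntax, the `PercentComment` node, and the `isCommentLine` helper are hypothetical, made up for this illustration; the calls used (`lineStart`, `nextLine`, `addElement`, `elt`) are the `BlockContext` members documented here.

```typescript
import {parser, BlockContext, Line, MarkdownConfig} from "@lezer/markdown"

// Hypothetical syntax: a line starting with "%%" is a one-line comment block.
function isCommentLine(line: Line): boolean {
  // line.next is the char code at line.pos; 37 is "%".
  return line.next == 37 && line.text.charCodeAt(line.pos + 1) == 37
}

const PercentComment: MarkdownConfig = {
  defineNodes: [{name: "PercentComment", block: true}],
  parseBlock: [{
    name: "PercentComment",
    // Eager leaf parser: recognize the block on its first line, move past
    // it, add a node, and return true.
    parse(cx: BlockContext, line: Line) {
      if (!isCommentLine(line)) return false
      const from = cx.lineStart + line.pos
      const to = cx.lineStart + line.text.length
      cx.nextLine()
      cx.addElement(cx.elt("PercentComment", from, to))
      return true
    },
    // Allow a comment line to interrupt a paragraph without a blank line.
    endLeaf(_cx: BlockContext, line: Line) {
      return isCommentLine(line)
    }
  }]
}

const tree = parser.configure(PercentComment).parse("Some text\n%% a comment\nMore text\n")
const topLevel: string[] = []
for (let node = tree.topNode.firstChild; node; node = node.nextSibling) topLevel.push(node.name)
console.log(topLevel)
```

Without the `endLeaf` predicate, the `%%` line would simply be appended to the preceding paragraph.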

-

-

interface LeafBlockParser Objects that are used to override paragraph-style blocks should conform to this interface.

-

nextLine(cx: BlockContext, line: Line, leaf: LeafBlock) → boolean Update the parser's state for the next line, and optionally finish the block. This is not called for the first line (the object is constructed at that line), but for any further lines. When it returns true, the block is finished. It is okay for the function to consume the current line or any subsequent lines when returning true.

-

finish(cx: BlockContext, leaf: LeafBlock) → boolean Called when the block is finished by external circumstances (such as a blank line or the start of another construct). If this parser can handle the block up to its current position, it should finish the block and return true.
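A sketch of a leaf parser that observes paragraphs and takes over those starting with a marker. The `NOTE:` syntax and the `Note`/`NoteMarker` node names are hypothetical. It assumes `LeafBlock` exposes `start` (document position) and `content` (accumulated text), which this section does not list explicitly.

```typescript
import {parser, BlockContext, LeafBlock, LeafBlockParser, MarkdownConfig} from "@lezer/markdown"

// Hypothetical syntax: a paragraph starting with "NOTE: " becomes a Note block.
const MARKER = "NOTE:"

class NoteParser implements LeafBlockParser {
  // Never end the block early; keep accumulating lines like a paragraph.
  nextLine() { return false }

  // Called when the paragraph is finished by a blank line, another
  // construct, or the end of the input. Returning true claims the block.
  finish(cx: BlockContext, leaf: LeafBlock) {
    const markerEnd = leaf.start + MARKER.length
    cx.addLeafElement(leaf, cx.elt("Note", leaf.start, leaf.start + leaf.content.length, [
      cx.elt("NoteMarker", leaf.start, markerEnd),
      // The rest of the text is ordinary inline content at its document position.
      ...cx.parser.parseInline(leaf.content.slice(MARKER.length), markerEnd)
    ]))
    return true
  }
}

const Note: MarkdownConfig = {
  defineNodes: [{name: "Note", block: true}, "NoteMarker"],
  parseBlock: [{
    name: "Note",
    // Observe paragraph-like blocks whose first line starts with the marker.
    leaf(_cx: BlockContext, leaf: LeafBlock) {
      return leaf.content.startsWith(MARKER + " ") ? new NoteParser : null
    },
    after: "SetextHeading"
  }]
}

const noteNames: string[] = []
parser.configure(Note).parse("NOTE: be *careful*\n").iterate({
  enter(node) { noteNames.push(node.name) }
})
console.log(noteNames)
```

This simplification maps `leaf.content` positions directly onto the document. That holds for top-level paragraphs, but inside blockquotes the accumulated content omits the quote markers, so a production version would need more care.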

-

-

class Line Data structure used during block-level per-line parsing.

-

text: string The line's full text.

-

baseIndent: number The base indent provided by the composite contexts (that have been handled so far).

-

basePos: number The string position corresponding to the base indent.

-

pos: number The position of the next non-whitespace character beyond any list, blockquote, or other composite block markers.

-

indent: number The column of the next non-whitespace character.

-

next: number The character code of the character after `pos`.

-

skipSpace(from: number) → number Skip whitespace after the given position, and return the position of the next non-space character, or the end of the line if there's only space after `from`.

-

moveBase(to: number) Move the line's base position forward to the given position. This should only be called by composite block parsers or markup skipping functions.

-

moveBaseColumn(indent: number) Move the line's base position forward to the given column.

-

addMarker(elt: Element) Store a composite-block-level marker. Should be called from markup skipping functions when they consume any non-whitespace characters.

-

countIndent(to: number, from?: number = 0, indent?: number = 0) → number Find the column position at `to`, optionally starting at a given position and column.

-

findColumn(goal: number) → number Find the position corresponding to the given column.
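Columns and string positions differ once tabs are involved. The standalone functions below are an illustrative sketch of that arithmetic, assuming CommonMark's rule of tab stops every 4 columns; they take the text explicitly and are not the library's own `Line` methods.

```typescript
// Illustrative free-function versions of column/position conversion,
// assuming tab stops every 4 columns (as in CommonMark).
function countIndent(text: string, to: number, from = 0, indent = 0): number {
  for (let i = from; i < to; i++)
    indent += text.charCodeAt(i) == 9 ? 4 - (indent % 4) : 1
  return indent
}

function findColumn(text: string, goal: number): number {
  let i = 0
  for (let indent = 0; i < text.length && indent < goal; i++)
    indent += text.charCodeAt(i) == 9 ? 4 - (indent % 4) : 1
  return i
}

const text = " \tfoo"  // one space, then a tab
console.log(countIndent(text, 2))  // 4: the tab advances from column 1 to 4
console.log(findColumn(text, 4))   // 2: column 4 starts at string position 2
```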

-

-

class LeafBlock Data structure used to accumulate a block's content during leaf block parsing.

-

class InlineContext Inline parsing functions get access to this context, and use it to read the content and emit syntax nodes.

-

parser: MarkdownParser The parser that is being used.

-

text: string The text of this inline section.

-

offset: number The starting offset of the section in the document.

-

char(pos: number) → number Get the character code at the given (document-relative) position.

-

end: number The position of the end of this inline section.

-

slice(from: number, to: number) → string Get a substring of this inline section. Again uses document-relative positions.

-

addDelimiter(type: DelimiterType, from: number, to: number, open: boolean, close: boolean) → number Add a delimiter at the given position. `open` and `close` indicate whether this delimiter is opening, closing, or both. Returns the end of the delimiter, for convenient returning from parse functions.

-

addElement(elt: Element) → number Add an inline element. Returns the end of the element.

-

findOpeningDelimiter(type: DelimiterType) → number | null Find an opening delimiter of the given type. Returns `null` if no delimiter is found, or an index that can be passed to `takeContent` otherwise.

-

takeContent(startIndex: number) → Element[] Remove all inline elements and delimiters starting from the given index (which you should get from `findOpeningDelimiter`), resolve delimiters inside of them, and return them as an array of elements.

-

skipSpace(from: number) → number Skip space after the given (document) position, returning either the position of the next non-space character or the end of the section.

-

elt(type: string, from: number, to: number, children?: readonly Element[]) → Element Create an `Element` for a syntax node.

-

-

interface InlineParser Inline parsers are called for every character of parts of the document that are parsed as inline content.

-

name: string This parser's name, which can be used by other parsers to indicate a relative precedence.

-

parse(cx: InlineContext, next: number, pos: number) → number The parse function. Gets the next character and its position as arguments. Should return -1 if it doesn't handle the character, or add some element or delimiter and return the end position of the content it parsed if it can.

-

before?: string When given, this parser will be installed directly before the parser with the given name. The default configuration defines inline parsers with names Escape, Entity, InlineCode, HTMLTag, Emphasis, HardBreak, Link, and Image. When no `before` or `after` property is given, the parser is added to the end of the list.

-

after?: string When given, the parser will be installed directly after the parser with the given name.

-

-

interface DelimiterType Delimiters are used during inline parsing to store the positions of things that might be delimiters, if another matching delimiter is found. They are identified by objects with these properties.

-

resolve?: string If this is given, the delimiter should be matched automatically when a piece of inline content is finished. Such delimiters will be matched with delimiters of the same type according to their open and close properties. When a match is found, the content between the delimiters is wrapped in a node whose name is given by the value of this property.

When this isn't given, you need to match the delimiter eagerly using the `findOpeningDelimiter` and `takeContent` methods.

-

mark?: string If the delimiter itself should, when matched, create a syntax node, set this to the name of the syntax node.
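A sketch of an inline parser that relies on `resolve` and `mark` for automatic delimiter matching. The `==highlight==` syntax, the `Highlight`/`HighlightMark` node names, and the whitespace-based flanking rule are hypothetical simplifications, not CommonMark's full emphasis rules.

```typescript
import {parser, DelimiterType, InlineContext, MarkdownConfig} from "@lezer/markdown"

// Hypothetical ==highlight== syntax. `resolve` makes matching delimiters pair
// up automatically at the end of the inline section; `mark` gives each
// matched delimiter its own node.
const HighlightDelim: DelimiterType = {resolve: "Highlight", mark: "HighlightMark"}

// True for the empty string (start/end of section) or a single whitespace char.
const isSpaceOrEdge = (s: string) => /^\s?$/.test(s)

const Highlight: MarkdownConfig = {
  defineNodes: ["Highlight", "HighlightMark"],
  parseInline: [{
    name: "Highlight",
    parse(cx: InlineContext, next: number, pos: number) {
      // 61 is "="; only react to a pair of equals signs.
      if (next != 61 || cx.char(pos + 1) != 61) return -1
      const before = cx.slice(pos - 1, pos), after = cx.slice(pos + 2, pos + 3)
      // Simplified flanking rule: can open unless followed by space, can
      // close unless preceded by space.
      return cx.addDelimiter(HighlightDelim, pos, pos + 2, !isSpaceOrEdge(after), !isSpaceOrEdge(before))
    },
    after: "Emphasis"
  }]
}

const hlNames: string[] = []
parser.configure(Highlight).parse("Some ==marked== text").iterate({
  enter(node) { hlNames.push(node.name) }
})
console.log(hlNames)
```

Unmatched `==` delimiters are left as plain text, since only resolved pairs produce nodes.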

-