ma-dan / keras-yolo4

A Keras implementation of YOLOv4 (TensorFlow backend)

License: MIT License

from tensorflow import keras

new_model = keras.models.load_model('yolo4_weight.h5')

Traceback (most recent call last):

File "/home/source/keras-yolo4/mytest.py", line 13, in <module>

new_model = keras.models.load_model(h5)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/saving/save.py", line 146, in load_model

return hdf5_format.load_model_from_hdf5(filepath, custom_objects, compile)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/saving/hdf5_format.py", line 212, in load_model_from_hdf5

custom_objects=custom_objects)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/saving/model_config.py", line 55, in model_from_config

return deserialize(config, custom_objects=custom_objects)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/layers/serialization.py", line 89, in deserialize

printable_module_name='layer')

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/utils/generic_utils.py", line 192, in deserialize_keras_object

list(custom_objects.items())))

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/engine/network.py", line 1121, in from_config

process_layer(layer_data)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/engine/network.py", line 1105, in process_layer

layer = deserialize_layer(layer_data, custom_objects=custom_objects)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/layers/serialization.py", line 89, in deserialize

printable_module_name='layer')

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/utils/generic_utils.py", line 181, in deserialize_keras_object

config, module_objects, custom_objects, printable_module_name)

File "/opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/utils/generic_utils.py", line 166, in class_and_config_for_serialized_keras_object

raise ValueError('Unknown ' + printable_module_name + ': ' + class_name)

ValueError: Unknown layer: Mish
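"Unknown layer: Mish" means the HDF5 file references a custom layer class that Keras cannot find at load time. The usual Keras remedy is to pass the class explicitly, e.g. keras.models.load_model(path, custom_objects={'Mish': Mish}), where Mish is the layer class defined in the repository's model code (which module exports it is an assumption here). Alternatively, as test.py does, build the model in code and call load_weights on it. If you re-create the layer yourself, a NumPy reference of the activation, mish(x) = x * tanh(softplus(x)), is handy for checking its outputs:

```python
import numpy as np

def softplus(x):
    # log(1 + exp(x)), computed stably via logaddexp(0, x)
    return np.logaddexp(0.0, x)

def mish(x):
    """Reference Mish activation: x * tanh(softplus(x))."""
    x = np.asarray(x, dtype=np.float64)
    return x * np.tanh(softplus(x))

if __name__ == "__main__":
    print(mish(np.array([-5.0, -1.0, 0.0, 1.0, 5.0])))
```

Comparing a custom layer's output against this on a few inputs is a quick way to confirm the re-implementation matches.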

Hello, I got this kind of message during training. Will it affect the training results, and what causes it?

I trained a new set of weights from scratch, using a dataset that I had previously used to train YOLOv3 successfully. However, the weights produced by train.py give a very low detection rate, which does not seem to make sense.

I am trying to generate animations in img2img with Stable Diffusion and have started getting this error, which is odd because I have not had it before. If anyone can point me to what I need to fix, that would be greatly appreciated!

Traceback (most recent call last):

File "C:\Users\James\Documents\stable-diffusion-webui-master\modules\ui.py", line 185, in f

res = list(func(*args, **kwargs))

File "C:\Users\James\Documents\stable-diffusion-webui-master\webui.py", line 54, in f

res = func(*args, **kwargs)

File "C:\Users\James\Documents\stable-diffusion-webui-master\modules\img2img.py", line 137, in img2img

processed = modules.scripts.scripts_img2img.run(p, *args)

File "C:\Users\James\Documents\stable-diffusion-webui-master\modules\scripts.py", line 290, in run

processed = script.run(p, *script_args)

File "C:\Users\James\Documents\stable-diffusion-webui-master\scripts\animation.py", line 749, in run

init_img = zoom_at2(init_img, rot_per_frame, int(x_shift_cumulative), int(y_shift_cumulative),

File "C:\Users\James\Documents\stable-diffusion-webui-master\scripts\animation.py", line 34, in zoom_at2

img2 = img.resize((int(w * zoom), int(h * zoom)), Image.Resampling.LANCZOS)

File "C:\Users\James\Documents\stable-diffusion-webui-master\venv\lib\site-packages\PIL\Image.py", line 2082, in resize

return self._new(self.im.resize(size, resample, box))

ValueError: height and width must be > 0
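Pillow's Image.resize raises "height and width must be > 0" when a target dimension is zero or negative. In the traceback above the size is (int(w * zoom), int(h * zoom)), so a zoom factor that is zero, negative, or small enough that int() truncates a dimension to 0 would trigger it. A minimal sketch of validating the size before calling resize (zoomed_size is a hypothetical helper, not part of the animation script):

```python
def zoomed_size(w, h, zoom):
    """Return the (width, height) of a w x h image after zooming by `zoom`.

    Raises ValueError with a descriptive message instead of letting
    PIL fail later with 'height and width must be > 0'.
    """
    if zoom <= 0:
        raise ValueError(f"zoom must be positive, got {zoom!r}")
    new_w, new_h = int(w * zoom), int(h * zoom)
    if new_w < 1 or new_h < 1:
        raise ValueError(
            f"zoom {zoom!r} shrinks a {w}x{h} image to {new_w}x{new_h}; "
            "check the zoom schedule for this frame"
        )
    return new_w, new_h

print(zoomed_size(512, 512, 1.02))  # (522, 522)
```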

I took a quick look, and it seems the get_random_data() method does not implement mosaic data augmentation? Most of the rest is similar to keras-yolov3.
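Mosaic augmentation, as described for YOLOv4, combines four training images into one by placing them around a random center point and adjusting the box coordinates accordingly. The sketch below is a simplified NumPy variant, not the reference implementation: each image is stretched (nearest-neighbour) to fill its quadrant instead of being randomly scaled and cropped, so no box clipping is needed. The box format (x_min, y_min, x_max, y_max, class_id) follows the keras-yolo3 convention and is an assumption for this repo.

```python
import numpy as np

def _resize_nearest(img, out_h, out_w):
    # Nearest-neighbour resize using integer index sampling (NumPy only).
    in_h, in_w = img.shape[:2]
    rows = (np.arange(out_h) * in_h / out_h).astype(int)
    cols = (np.arange(out_w) * in_w / out_w).astype(int)
    return img[rows][:, cols]

def mosaic(images, boxes_list, out_size=(416, 416), rng=None):
    """Simplified mosaic: combine 4 images into one training image.

    images: list of 4 HxWx3 uint8 arrays.
    boxes_list: list of 4 arrays of shape (N, 5): x_min, y_min, x_max, y_max, class_id.
    """
    rng = np.random.default_rng() if rng is None else rng
    out_h, out_w = out_size
    # Random center point, kept away from the borders.
    cy = int(rng.uniform(0.3, 0.7) * out_h)
    cx = int(rng.uniform(0.3, 0.7) * out_w)
    canvas = np.zeros((out_h, out_w, 3), dtype=np.uint8)
    # Quadrants as (y0, y1, x0, x1): top-left, top-right, bottom-left, bottom-right.
    regions = [(0, cy, 0, cx), (0, cy, cx, out_w), (cy, out_h, 0, cx), (cy, out_h, cx, out_w)]
    merged = []
    for img, boxes, (y0, y1, x0, x1) in zip(images, boxes_list, regions):
        h, w = img.shape[:2]
        rh, rw = y1 - y0, x1 - x0
        canvas[y0:y1, x0:x1] = _resize_nearest(img, rh, rw)
        if len(boxes) == 0:
            continue
        b = np.asarray(boxes, dtype=np.float64).copy()
        # Scale box coordinates into the quadrant and shift by its origin.
        b[:, [0, 2]] = b[:, [0, 2]] * rw / w + x0
        b[:, [1, 3]] = b[:, [1, 3]] * rh / h + y0
        merged.append(b)
    boxes = np.concatenate(merged) if merged else np.zeros((0, 5))
    # Drop boxes that became degenerate (narrower or shorter than 1 pixel).
    keep = (boxes[:, 2] - boxes[:, 0] >= 1) & (boxes[:, 3] - boxes[:, 1] >= 1)
    return canvas, boxes[keep]
```

Stretching keeps the sketch short; the crop-based variant additionally has to clip boxes at the quadrant edges and drop those that end up mostly outside.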

Loading weights.

Weights Header: 1329865020 1348031555 1952981061 [1010723949]

Converting 0

Traceback (most recent call last):

File "convert.py", line 173, in <module>

yolo4_model = Yolo4(score, iou, anchors_path, classes_path, model_path, weights_path)

File "convert.py", line 157, in __init__

self.load_yolo()

File "convert.py", line 130, in load_yolo

buffer=weights_file.read(weights_size * 4))

TypeError: buffer is too small for requested array
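The "Weights Header" values in this log are a useful clue. Re-packed as little-endian 32-bit integers, they spell out ASCII text, "<!DOCTYPE html><", which suggests the file being read as Darknet weights is actually an HTML page (for example, a saved download page), so the converter runs out of bytes. A genuine Darknet file starts with small version numbers (major, minor, revision). A small check, assuming the standard little-endian Darknet header layout:

```python
import struct

def inspect_weights_header(head):
    """Sanity-check the first bytes of a Darknet .weights file.

    head: at least 12 bytes from the start of the file,
          e.g. open(path, "rb").read(16).
    Returns ((major, minor, revision), looks_like_html).
    """
    # A Darknet file starts with three little-endian int32 version numbers.
    major, minor, revision = struct.unpack("<3i", head[:12])
    # An HTML page (e.g. a saved download page) starts with '<' instead.
    looks_like_html = head.lstrip().startswith(b"<")
    return (major, minor, revision), looks_like_html

# The four numbers printed as "Weights Header" above, re-packed as int32:
raw = struct.pack("<4i", 1329865020, 1348031555, 1952981061, 1010723949)
print(raw)  # b'<!DOCTYPE html><'
```

If the check flags HTML, re-download the weights file and compare its size with the expected one before running convert.py again.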

@Ma-Dan Hi,

Did you test the accuracy of the converted YOLOv4 model on MS COCO?

Is it the same as in Darknet: 43.5% AP (65.7% AP50) for YOLOv4-608x608?

In model.py, preprocess_true_boxes():

    for i in range(3):
        anchors_xywh = np.zeros((3, 4))
        anchors_xywh[:, 0:2] = np.floor(bbox_xywh_scaled[i, 0:2]).astype(np.int32) + 0.5
        anchors_xywh[:, 2:4] = anchors[i]
        iou_scale = bbox_iou_data(bbox_xywh_scaled[i][np.newaxis, :], anchors_xywh)
        iou.append(iou_scale)

Since anchors_xywh is in the original (input-image) scale, shouldn't we use bbox_xywh instead of bbox_xywh_scaled to compute the IoU?
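Whichever answer applies to this repo, the key requirement is that both arguments of the IoU be expressed in the same units: IoU is unchanged when both boxes are scaled by the same factor (e.g. the stride), but not when only one of them is. A NumPy sketch illustrating this; bbox_iou_xywh is an illustrative stand-in, not the repository's bbox_iou_data:

```python
import numpy as np

def bbox_iou_xywh(boxes1, boxes2):
    """IoU between boxes given as (center_x, center_y, width, height); broadcasts."""
    b1 = np.asarray(boxes1, dtype=np.float64)
    b2 = np.asarray(boxes2, dtype=np.float64)
    # Convert to corner form (x_min, y_min, x_max, y_max).
    c1 = np.concatenate([b1[..., :2] - b1[..., 2:] / 2, b1[..., :2] + b1[..., 2:] / 2], axis=-1)
    c2 = np.concatenate([b2[..., :2] - b2[..., 2:] / 2, b2[..., :2] + b2[..., 2:] / 2], axis=-1)
    # Intersection rectangle, clipped to zero when the boxes do not overlap.
    lt = np.maximum(c1[..., :2], c2[..., :2])
    rb = np.minimum(c1[..., 2:], c2[..., 2:])
    wh = np.clip(rb - lt, 0.0, None)
    inter = wh[..., 0] * wh[..., 1]
    union = b1[..., 2] * b1[..., 3] + b2[..., 2] * b2[..., 3] - inter
    return inter / union
```

Dividing both the ground-truth box and the anchors by the stride gives the same IoU as using pixels for both; dividing only one of them generally does not.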

Traceback (most recent call last):

File "convert.py", line 173, in <module>

yolo4_model = Yolo4(score, iou, anchors_path, classes_path, model_path, weights_path)

File "convert.py", line 157, in __init__

self.load_yolo()

File "convert.py", line 130, in load_yolo

buffer=weights_file.read(weights_size * 4))

Traceback (most recent call last):

File "test.py", line 69, in <module>

image, boxes, scores, classes = _decode.detect_image(image, True)

File "/home/user/santosh/keras-yolo4/decode_np.py", line 23, in detect_image

boxes, scores, classes = self.predict(pimage, image.shape)

File "/home/user/santosh/keras-yolo4/decode_np.py", line 135, in predict

outs = self._yolo.predict(image)

File "/home/user/anaconda3/lib/python3.7/site-packages/keras/engine/training.py", line 1462, in predict

callbacks=callbacks)

File "/home/user/anaconda3/lib/python3.7/site-packages/keras/engine/training_arrays.py", line 324, in predict_loop

batch_outs = f(ins_batch)

File "/home/user/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/keras/backend.py", line 3476, in __call__

run_metadata=self.run_metadata)

File "/home/user/anaconda3/lib/python3.7/site-packages/tensorflow_core/python/client/session.py", line 1472, in __call__

run_metadata_ptr)

tensorflow.python.framework.errors_impl.UnimplementedError: Fused conv implementation does not support grouped convolutions for now.

[[{{node conv2d_94/BiasAdd}}]]

Could you describe in detail the steps for training on our own data?
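Repositories derived from keras-yolo3 typically read a plain-text annotation file with one image per line: the image path followed by space-separated boxes x_min,y_min,x_max,y_max,class_id in pixels. Assuming this repository follows the same convention (check train.py and the annotation script before relying on it), a minimal parser for validating your own annotation file could look like this:

```python
import numpy as np

def parse_annotation_line(line):
    """Split 'path x1,y1,x2,y2,cls x1,y1,x2,y2,cls ...' into (path, boxes).

    boxes is an int64 array of shape (N, 5). Assumes image paths contain no spaces.
    """
    parts = line.strip().split()
    if not parts:
        raise ValueError("empty annotation line")
    path, box_fields = parts[0], parts[1:]
    rows = [field.split(",") for field in box_fields]
    if any(len(r) != 5 for r in rows):
        raise ValueError(f"each box needs 5 comma-separated values: {line.strip()!r}")
    boxes = np.array([[int(v) for v in r] for r in rows], dtype=np.int64).reshape(-1, 5)
    # Reject boxes with non-positive width or height.
    if (boxes[:, 2] <= boxes[:, 0]).any() or (boxes[:, 3] <= boxes[:, 1]).any():
        raise ValueError(f"degenerate box in line: {line.strip()!r}")
    return path, boxes
```

Running every line of the annotation file through such a check before training catches malformed boxes early, which are a common cause of poor or unstable training.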

When I run python test.py and then enter

Input image filename:'yolo4.png'

I get this error:

Traceback (most recent call last):

File "test.py", line 158, in <module>

result = yolo4_model.detect_image(image, model_image_size=model_image_size)

File "test.py", line 80, in detect_image

boxed_image = letterbox_image(image, tuple(reversed(model_image_size)))

File "/home/muhammadmubeen/keras-yolo4/yolo4/utils.py", line 28, in letterbox_image

image = image.resize((nw,nh), Image.BICUBIC)

File "/usr/local/lib/python2.7/dist-packages/PIL/Image.py", line 1883, in resize

im = im.resize(size, resample, box)

File "/usr/local/lib/python2.7/dist-packages/PIL/Image.py", line 1888, in resize

return self._new(self.im.resize(size, resample, box))

ValueError: height and width must be > 0
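The traceback shows the script running under Python 2.7 (/usr/local/lib/python2.7/...). In Python 2, / between two ints is floor division, so if letterbox_image computes its scale as min(w/iw, h/ih), as keras-yolo3's version does (an assumption for this repo), the scale becomes 0 whenever the image is larger than the model input, and the resize target collapses to 0x0. Running with Python 3, or forcing float division, avoids this. A sketch of the geometry with explicit float division and a size check:

```python
def letterbox_geometry(image_size, target_size):
    """Compute resize and padding for letterboxing.

    image_size, target_size: (width, height) tuples, as PIL's Image.size uses.
    Returns (new_w, new_h, pad_left, pad_top).
    """
    iw, ih = image_size
    w, h = target_size
    # float() makes this correct on Python 2 as well as Python 3.
    scale = min(float(w) / iw, float(h) / ih)
    nw, nh = int(iw * scale), int(ih * scale)
    if nw < 1 or nh < 1:
        raise ValueError("letterbox target %r too small for image %r" % (target_size, image_size))
    return nw, nh, (w - nw) // 2, (h - nh) // 2
```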

It works for standard YOLOv4, but model.py has no network for tiny-YOLOv4. What kind of network has to be specified? Any help would be appreciated. Thanks in advance.

I trained on my own dataset, and the loss seemed high and converged slowly.

How can I capture frames from a real camera and run detection on them?

Hi,

Does anyone know of a way to get the detection information from the model frame by frame and then save that information to a file? I have been trying to figure this out but have not found a good solution yet. Would love to hear your ideas.

Warm Regards
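For both running detection on a camera and saving the detections frame by frame, one simple pattern is to iterate over frames, call the detector, and write one JSON line per frame. In the sketch below, detect is a placeholder for whatever wraps the repo's detection call and returns (boxes, scores, classes) for one frame; that interface is an assumption. The camera reader uses OpenCV's cv2.VideoCapture, which needs opencv-python installed.

```python
import json

def log_detections(frames, detect, out_file):
    """Run `detect` on each frame and write one JSON line per frame.

    frames: any iterable of images (e.g. camera_frames() below).
    detect: callable returning (boxes, scores, classes) for one frame
            (hypothetical wrapper around the model's detection call).
    out_file: an open text file. Returns the number of frames processed.
    """
    count = 0
    for idx, frame in enumerate(frames):
        boxes, scores, classes = detect(frame)
        record = {
            "frame": idx,
            "detections": [
                {"box": [float(v) for v in box], "score": float(score), "class": int(cls)}
                for box, score, cls in zip(boxes, scores, classes)
            ],
        }
        out_file.write(json.dumps(record) + "\n")
        count += 1
    return count

def camera_frames(index=0):
    """Yield frames from a camera with OpenCV."""
    import cv2  # imported lazily so the rest of the sketch runs without OpenCV
    cap = cv2.VideoCapture(index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame
    finally:
        cap.release()
```

Usage might look like: with open("detections.jsonl", "w") as f: log_detections(camera_frames(0), my_detect, f). For a live camera this runs until read() fails or the loop is interrupted. OpenCV returns frames in BGR order, so convert with cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) if the detector expects RGB.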

I want to change the backbone network! I heard that ResNeXt is not good for object detection.

I want to replace CSPDarknet53. How can I change the backbone?

Traceback (most recent call last):

File "test.py", line 58, in <module>

yolo4_model.load_weights(model_path)

File "/usr/local/lib/python3.6/dist-packages/keras/engine/saving.py", line 492, in load_wrapper

return load_function(*args, **kwargs)

File "/usr/local/lib/python3.6/dist-packages/keras/engine/network.py", line 1230, in load_weights

f, self.layers, reshape=reshape)

File "/usr/local/lib/python3.6/dist-packages/keras/engine/saving.py", line 1237, in load_weights_from_hdf5_group

K.batch_set_value(weight_value_tuples)

File "/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py", line 2960, in batch_set_value

tf_keras_backend.batch_set_value(tuples)

File "/home/mayur/.local/lib/python3.6/site-packages/tensorflow_core/python/keras/backend.py", line 3323, in batch_set_value

x.assign(np.asarray(value, dtype=dtype(x)))

File "/home/mayur/.local/lib/python3.6/site-packages/tensorflow_core/python/ops/resource_variable_ops.py", line 819, in assign

self._shape.assert_is_compatible_with(value_tensor.shape)

File "/home/mayur/.local/lib/python3.6/site-packages/tensorflow_core/python/framework/tensor_shape.py", line 1110, in assert_is_compatible_with

raise ValueError("Shapes %s and %s are incompatible" % (self, other))

ValueError: Shapes (1, 1, 1024, 75) and (255, 1024, 1, 1) are incompatible

What GPU model was used to train this model, and how much video memory does it have?

With an 8 GB GPU, can training run with batch_size = 4?

Which versions of Keras and TensorFlow are used?

In the new version of the code at https://github.com/miemie2013/Keras-YOLOv4, running eval.py produces:

2020-06-21 20:08:35,249-INFO: Test iter 5800

loading annotations into memory...

Done (t=0.06s)

creating index...

index created!

2020-06-21 20:09:15,991-INFO: Generating json file...

Traceback (most recent call last):

File "eval.py", line 72, in <module>

box_ap = eval(_decode, images, eval_pre_path, anno_file, eval_batch_size, _clsid2catid, draw_image)

File "E:\chongqingchuyuxiangmu\Keras-YOLOv4\tools\cocotools.py", line 167, in eval

box_ap_stats = bbox_eval(anno_file)

File "E:\chongqingchuyuxiangmu\Keras-YOLOv4\tools\cocotools.py", line 86, in bbox_eval

for line in f:

MemoryError

test_dev.py runs fine, but eval.py raises a MemoryError. What could be causing this?

3, 5 + num_classes))

TypeError: only integer scalar arrays can be converted to a scalar index

How can this be fixed?

I trained yolo.weights on my custom dataset with 3 classes, and detections work fine when testing with that model. But when I convert it to a Keras model, I get the error below:

File "convert.py", line 172, in <module>

yolo4_model = Yolo4(score, iou, anchors_path, classes_path, model_path, weights_path)

File "convert.py", line 157, in __init__

self.load_yolo()

File "convert.py", line 98, in load_yolo

buffer=weights_file.read(weights_size * 4))

TypeError: buffer is too small for requested array

Could someone tell me why this is happening and what changes I should make?
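Errors like this one, and the (1, 1, 1024, 75) vs (255, 1024, 1, 1) mismatch reported earlier, are consistent with a mismatch between the number of classes the model is built for and the number the weights were trained with. In YOLOv3/v4 each detection head ends in a 1x1 convolution with num_anchors * (5 + num_classes) filters (4 box coordinates, 1 objectness score, and the class scores per anchor). With 3 anchors per scale, 80 COCO classes give 255 filters, 20 classes give 75, and 3 custom classes give 24, so a converter that assumes 80 classes will try to read far more floats than a 3-class file contains. Darknet also stores convolution kernels as (out, in, h, w), while Keras expects (h, w, in, out); keras-yolo3-style converters transpose with axes (2, 3, 1, 0). A sketch of both checks (the helper names are illustrative):

```python
import numpy as np

def yolo_head_filters(num_classes, anchors_per_scale=3):
    """Output channels of a YOLO head: per anchor, 4 box coords + 1 objectness + class scores."""
    return anchors_per_scale * (5 + num_classes)

def classes_from_filters(filters, anchors_per_scale=3):
    """Inverse of yolo_head_filters; raises if the filter count does not fit."""
    per_anchor, rem = divmod(filters, anchors_per_scale)
    if rem or per_anchor < 6:
        raise ValueError(f"{filters} filters do not match {anchors_per_scale} anchors per scale")
    return per_anchor - 5

def darknet_to_keras_kernel(kernel):
    """Convert a Darknet conv kernel (out, in, h, w) to Keras layout (h, w, in, out)."""
    return np.transpose(kernel, (2, 3, 1, 0))
```

In practice this means the classes file passed to convert.py (and the filters= values before each [yolo] section of the .cfg) should agree with the class count the weights were trained on.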

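In model.py, how is each output layer of yolo4_body defined? The original network seems to have only 161 layers, but the code has 204 layers?

I would also like to know at which layer index the output of the second residual block sits in this code.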

Epoch 89/150

loss: [70.428894][6.8589654][49.6575623][13.912364]

1234/1234 [==============================] - 638s 517ms/step - loss: 48.1298

Epoch end eval mAP on weight logs/222/ep089-loss48.130.h5

Epoch 89 mAP 0.2658832599269524

Epoch 90/150

loss: [59.5338707][5.99079227][46.7998619][6.74321651]

1234/1234 [==============================] - 655s 531ms/step - loss: 47.8850

Epoch end eval mAP on weight logs/222/ep090-loss47.885.h5

Epoch 90 mAP 0.26986168503362695

In @miemie2013's code:

    x = layers.ZeroPadding2D(padding=((1, 0), (1, 0)))(x)
    x = conv2d_unit(x, i512, 3, strides=2)
    s16 = conv2d_unit(x, i256, 1, strides=1)
    x = conv2d_unit(x, i256, 1, strides=1)
    x = stack_residual_block(x, i256, i256, n=8)
    x = conv2d_unit(x, i256, 1, strides=1)
    s16 = layers.Concatenate()([x, s16])
    x = conv2d_unit(s16, i512, 1, strides=1)

where s16 is the output of Concatenate().

    x = layers.UpSampling2D(2)(x)
    s16 = conv2d_unit(s16, i256, 1, strides=1, act='leaky')
    x = layers.Concatenate()([s16, x])

s16 is then passed through conv2d_unit and concatenated with the upsampled output x.

As for your code:

    y38 = DarknetConv2D_BN_Leaky(256, (1,1))(darknet.layers[204].output)
    y38 = Concatenate()([y38, y19_upsample])

where darknet.layers[204].output is the output of DarknetConv2D_BN_Mish, not of the concatenate layer darknet.layers[201].output.

So I am curious which version is right, or whether both are.

Hello! Thank you for amazing work!

Could you help me convert the trained weights back to Darknet or TensorFlow format?

Thank you!
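Going back to Darknet format essentially means reversing the converter: write the header, then for every convolution, in the same order as the .cfg, write the batch-norm parameters (or the bias) followed by the kernel transposed back to (out, in, h, w). The sketch below assumes the layout that keras-yolo3-style converters read: for a convolution with batch norm, beta, gamma, moving mean and moving variance, then the kernel, all float32; Keras' BatchNormalization.get_weights() returns them as [gamma, beta, moving_mean, moving_variance]. Walking the Keras layers in .cfg order is left to the caller, and the result should be verified by re-converting it before use.

```python
import struct
import numpy as np

def write_darknet_header(f, major=0, minor=2, revision=0, seen=0):
    # major/minor/revision as int32; `seen` is int64 when major*10 + minor >= 2.
    f.write(struct.pack("<3i", major, minor, revision))
    f.write(struct.pack("<q", seen))

def write_conv_bn(f, kernel, gamma, beta, mean, var):
    """Write one conv+BN layer; kernel is in Keras layout (h, w, in, out)."""
    for arr in (beta, gamma, mean, var):
        f.write(np.asarray(arr, dtype="<f4").tobytes())
    # (h, w, in, out) -> (out, in, h, w); tobytes() emits C order.
    f.write(np.transpose(kernel, (3, 2, 0, 1)).astype("<f4").tobytes())

def write_conv_bias(f, kernel, bias):
    """Write one conv layer without BN (e.g. a YOLO head): bias, then kernel."""
    f.write(np.asarray(bias, dtype="<f4").tobytes())
    f.write(np.transpose(kernel, (3, 2, 0, 1)).astype("<f4").tobytes())
```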

Thanks for the nice repo.

I am training YOLOv4 on the COCO dataset using only the first 7 classes, with a 70:30 train/validation split.

Does anyone have experience or hints about what training loss indicates an acceptable model?

Below is my training log; training seems to take a long time.

Train on 82801 samples, val on 35486 samples, with batch size 32.

Epoch 1/50

1/2587 [..............................] - ETA: 28:56:58 - loss: 17721.8926

2/2587 [..............................] - ETA: 16:33:45 - loss: 17243.4922

3/2587 [..............................] - ETA: 12:32:28 - loss: 16760.1377

4/2587 [..............................] - ETA: 10:10:04 - loss: 16299.9021

5/2587 [..............................] - ETA: 9:24:35 - loss: 15857.3045

6/2587 [..............................] - ETA: 9:14:57 - loss: 15430.3268

7/2587 [..............................] - ETA: 9:07:24 - loss: 15008.8902

8/2587 [..............................] - ETA: 9:01:51 - loss: 14608.8591

9/2587 [..............................] - ETA: 9:12:25 - loss: 14219.5904

10/2587 [..............................] - ETA: 9:20:37 - loss: 13848.0055

11/2587 [..............................] - ETA: 9:18:39 - loss: 13490.1777

12/2587 [..............................] - ETA: 8:59:26 - loss: 13150.2271

13/2587 [..............................] - ETA: 8:44:20 - loss: 12822.8066

14/2587 [..............................] - ETA: 8:34:12 - loss: 12509.4747

15/2587 [..............................] - ETA: 8:32:09 - loss: 12211.5387

16/2587 [..............................] - ETA: 8:26:59 - loss: 11925.6479

17/2587 [..............................] - ETA: 8:20:37 - loss: 11649.0334

18/2587 [..............................] - ETA: 8:21:20 - loss: 11381.8880

19/2587 [..............................] - ETA: 8:14:07 - loss: 11124.9040

20/2587 [..............................] - ETA: 8:11:29 - loss: 10882.4443

21/2587 [..............................] - ETA: 8:07:56 - loss: 10645.2164

22/2587 [..............................] - ETA: 8:03:53 - loss: 10420.4502

23/2587 [..............................] - ETA: 7:58:37 - loss: 10203.8660

24/2587 [..............................] - ETA: 7:54:08 - loss: 9995.8066

25/2587 [..............................] - ETA: 7:50:39 - loss: 9794.8682

26/2587 [..............................] - ETA: 7:48:39 - loss: 9599.6988

27/2587 [..............................] - ETA: 7:45:55 - loss: 9411.8161

28/2587 [..............................] - ETA: 7:42:36 - loss: 9234.4692

29/2587 [..............................] - ETA: 7:39:51 - loss: 9060.8342

30/2587 [..............................] - ETA: 7:35:15 - loss: 8894.1920

31/2587 [..............................] - ETA: 7:33:45 - loss: 8733.4837

32/2587 [..............................] - ETA: 7:31:51 - loss: 8577.9887

33/2587 [..............................] - ETA: 7:29:49 - loss: 8425.8984

34/2587 [..............................] - ETA: 7:27:43 - loss: 8280.7446

35/2587 [..............................] - ETA: 7:26:30 - loss: 8139.6654

36/2587 [..............................] - ETA: 7:26:01 - loss: 8004.0953

37/2587 [..............................] - ETA: 7:26:13 - loss: 7872.3828

38/2587 [..............................] - ETA: 7:24:52 - loss: 7746.5956

39/2587 [..............................] - ETA: 7:23:28 - loss: 7624.6465

40/2587 [..............................] - ETA: 7:29:19 - loss: 7506.7023

41/2587 [..............................] - ETA: 7:35:16 - loss: 7391.7025

42/2587 [..............................] - ETA: 7:34:34 - loss: 7280.5936

43/2587 [..............................] - ETA: 7:31:58 - loss: 7172.5243

44/2587 [..............................] - ETA: 7:29:56 - loss: 7067.7942

45/2587 [..............................] - ETA: 7:30:26 - loss: 6965.9564

46/2587 [..............................] - ETA: 7:31:08 - loss: 6867.1803

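47/2587 [..............................] - ETA: 7:34:20 - loss: 6771.7925

With the Keras version of YOLOv3, a batch size of 128×8 before unfreezing (3 trainable layers) and 32×8 after unfreezing (252 layers), where 8 is the number of GPUs, both train without problems.

With the Keras version of YOLOv3, before unfreezing (3 layers) I can only use 16×8, and after unfreezing only 1×8; otherwise it throws an error. Is there a way to fix this? If the batch size cannot be set larger, training a model on 500,000 images would take several months, which is completely unacceptable.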

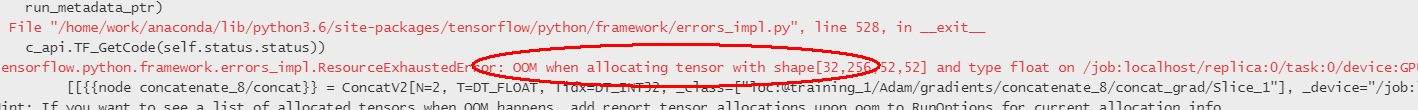

The exact error: (tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[32,256,52,52] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc)
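The OOM message names the tensor that could not be allocated, and a quick estimate of its size shows why the batch size matters: activation memory grows linearly with the batch dimension, and during training the network keeps many such tensors (plus their gradients) alive at once, so the total footprint is far larger than this single allocation.

```python
from math import prod

def tensor_bytes(shape, bytes_per_element=4):
    """Memory used by a dense tensor; float32 uses 4 bytes per element."""
    return prod(shape) * bytes_per_element

size = tensor_bytes((32, 256, 52, 52))
print(size, "bytes =", size / 2**20, "MiB")  # 88604672 bytes = 84.5 MiB
```

Halving the batch dimension halves every activation tensor of this kind, which is why reducing the per-GPU batch size is the usual first fix for this error.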

I have converted a custom YOLOv4 model to the TensorFlow SavedModel format, and I can successfully use it for single-image inference. However, when I feed in a batch of images (client-side batching), I get the results back in one list without any separation by frame, so I can't work out which detections belong to which frame. Is this behavior expected, and how might I remedy it?
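Whether per-frame separation survives depends on how the post-processing was exported. If the exported graph flattens all boxes from the batch into one list with no image index, that information is lost and the model needs to be re-exported with batch-aware post-processing. One layout that preserves it is the one returned by tf.image.combined_non_max_suppression: per-image tensors padded to a fixed number of detections, plus a valid_detections count per image. Assuming outputs in that padded layout, splitting them back into frames is straightforward (NumPy sketch):

```python
import numpy as np

def split_by_frame(boxes, scores, classes, valid_detections):
    """Turn padded batch outputs into one dict of detections per frame.

    boxes: (B, N, 4); scores, classes: (B, N); valid_detections: (B,).
    Only the first valid_detections[i] entries of frame i are real detections.
    """
    per_frame = []
    for i, n in enumerate(np.asarray(valid_detections).astype(int)):
        per_frame.append({
            "boxes": np.asarray(boxes[i][:n]),
            "scores": np.asarray(scores[i][:n]),
            "classes": np.asarray(classes[i][:n]).astype(int),
        })
    return per_frame
```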

loss and val_loss keep becoming NaN.
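One frequent (though not the only) source of NaN losses is taking the logarithm of a probability that has saturated at exactly 0 or 1 in a binary cross-entropy term. Clipping the probabilities, or computing the loss directly from logits, keeps it finite. A NumPy sketch of both variants; whether this is the cause here would need to be confirmed against the repository's loss code:

```python
import numpy as np

def bce_from_probs(y_true, y_prob, eps=1e-7):
    """Binary cross-entropy on probabilities, clipped so log() never sees 0."""
    p = np.clip(y_prob, eps, 1.0 - eps)
    return -(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))

def bce_from_logits(y_true, logits):
    """Numerically stable BCE from raw logits: max(x, 0) - x*y + log(1 + exp(-|x|))."""
    x = np.asarray(logits, dtype=np.float64)
    return np.maximum(x, 0) - x * y_true + np.log1p(np.exp(-np.abs(x)))
```

The logits form avoids computing sigmoid and log separately, so it stays finite even for very large or very small logits.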

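Frankly, qqwweee's YOLOv3 is a useless repository: it can't be trained to good results and the loss frequently becomes NaN; I ran into many pitfalls with it back then. Frankly, it's baffling that such a bad repository has so many stars. The requirement for reproducing an algorithm is to reach the specified accuracy, not to turn the audience into your debuggers and improvers. Advertising is shameless, but you can take a look at my YOLOv4 reproduction: the loss function is carefully designed, it trained for 70,000 steps without ever producing NaN, and the mAP reaches at least 36%. Stars welcome: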

convert.py fails to run, and there is no error message.

In TF 2.x, the Lambda layer can no longer be used. Is there any solution to this issue?

In particular, this error:

ValueError: tf.function-decorated function tried to create variables on non-first call.

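Could you provide a tiny version, like the one for v3?

TensorFlow YOLOv4 training on your own dataset: deep learning practice group 755860371, let's learn together. Thanks.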
