About Unray

Framework for communication between Unreal Engine and Python.

This repository contains all the files needed for usage in Python.

Unreal Engine

Engine Version

We are currently using Unreal Engine 5.1. We recommend using the same version to ensure project stability.

Project Files

In the Maps folder you'll find some examples to run:

Custom Envs

To create a custom env in Unreal Engine, first create your Agent Blueprint.

You can create your agent based on the parent class of your choice. Once you create the blueprint, go to the Class Settings section.

In the details panel, find the Interfaces section:

In the Implemented Interfaces subsection, click the Add button and search for "BI_Agent".

Once you do this, in the blueprint functions, you'll now have these functions:

You have to implement these functions according to your environment.

| Function | Description |
|---|---|
| Get Reward | Agent Reward |
| Is Done | Function to specify the way the agent finishes the environment |
| Reset | Reset the agent. Create Actor -> True if you want to destroy the actor and spawn it again in a new place. |
| Get State | Get agent observations |
| Step | What the agent does in each step |
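The five functions form a small contract that every agent has to satisfy. The real functions are Blueprint functions inside Unreal Engine; the Python sketch below is only an analogy to show how they fit together. The names `AgentInterface`, `CounterAgent` and `run_episode` are hypothetical and are not part of unray-bridge, and the Create Actor flag of Reset is left out for brevity.

```python
from abc import ABC, abstractmethod


class AgentInterface(ABC):
    """Python analogue of the five BI_Agent functions (illustrative only)."""

    @abstractmethod
    def get_reward(self) -> float: ...

    @abstractmethod
    def is_done(self) -> bool: ...

    @abstractmethod
    def reset(self) -> None: ...

    @abstractmethod
    def get_state(self) -> list: ...

    @abstractmethod
    def step(self, action) -> None: ...


class CounterAgent(AgentInterface):
    """Toy agent that walks along a line and finishes at position 3."""

    def __init__(self):
        self.position = 0

    def get_reward(self):
        # Reward is only given on the goal cell.
        return 1.0 if self.position == 3 else 0.0

    def is_done(self):
        return self.position >= 3

    def reset(self):
        self.position = 0

    def get_state(self):
        return [self.position]

    def step(self, action):
        self.position += action


def run_episode(agent, actions):
    """Apply actions until the agent reports it is done; return (total reward, final state)."""
    agent.reset()
    total = 0.0
    for action in actions:
        agent.step(action)
        total += agent.get_reward()
        if agent.is_done():
            break
    return total, agent.get_state()
```

Calling `run_episode(CounterAgent(), [1, 1, 1, 1])` stops after the third action, because Is Done becomes true once the agent reaches position 3.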

When you've implemented all these functions and you want to try your environment, you'll have to add a Connector to your map.

In the Blueprints folder, you'll find the connectors for both single agent envs and multiagent envs:

Single Agent Environments

If your environment is a single agent env, place a Connector_SA instance in your map. Once you do, select it and find the Default section in the details panel. There you'll find an Actor Agent variable; assign your agent to it.

MultiAgent Environments

If your environment is a multiagent env, you'll need to place a Connector_MA instance in your map. Once you do, you can select it and in the details panel you'll find the Default section. There, you'll find an array called Actor Agents.

To ensure the framework can recognise all the agents in your environment, add each agent to the array.

Remember that for each agent in your env, you'll have to implement the Get Reward, Is Done, Reset, Get State and Step functions.

Parallel Training

If you want to train several envs at the same time, we recommend you create your env as a Blueprint.

In the Blueprints folder you'll find a MultiAgent_Env Blueprint.

You can create your env with this Blueprint as a parent Class.

In the Viewport of your env blueprint class, drag the connector you need from the Content Drawer and place it where you want.

In the Event Graph of your env blueprint class, you'll have to do a few things to configure your env.

First, each env you create will have an ID (which defaults to 1). You can either set this parameter in the Details panel of your map or create a function to set it automatically.

Then, you need to add the agents in your env to an Agents array, which belongs to the MultiAgent_Env class. To do so, simply search for the Get Agents function and add each of your agents to this array. For example, in the MultiAgent Arena map it looks like this:

Finally, you'll have to add the following functions to your env class:

This is to set the agents and set the ports in which the communication is going to happen.
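Since each env has its own ID and its own port, the Python side needs some way to know which port belongs to which env. The sketch below shows one hypothetical scheme, in which IDs start at 1 and each env takes the next port after a base port. This is an assumption made for illustration; the mapping used by the unray-bridge blueprints may differ, and `port_for_env` and `ports_for_envs` are not functions of the library.

```python
BASE_PORT = 10110  # port used in this README's examples


def port_for_env(env_id, base_port=BASE_PORT):
    """Hypothetical scheme: env IDs start at 1 and each env gets the next port."""
    if env_id < 1:
        raise ValueError("env IDs are assumed to start at 1")
    return base_port + (env_id - 1)


def ports_for_envs(n_envs, base_port=BASE_PORT):
    """Return a dict mapping every env ID in 1..n_envs to its port."""
    return {env_id: port_for_env(env_id, base_port) for env_id in range(1, n_envs + 1)}
```

With four envs, `ports_for_envs(4)` assigns ports 10110 through 10113 under this assumed scheme.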

UNRAY Bridge

Clone the repo and install the given dependencies. This is just the Python side of the framework. Remember to create or open a UE5 scene with the official unray-bridge blueprints.

git clone https://github.com/Nullspace-Colombia/unray-bridge.git && cd unray-bridge

pip install -r requirements.txt

We recommend conda for creating a virtual environment and installing the dependencies. Currently, Ray is available for Python 3.10 or earlier, so we recommend creating the environment with Python 3.10.
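A quick way to confirm that the active interpreter matches this recommendation is to compare its version against the 3.10 limit stated above. The helper name `python_is_supported` is hypothetical, and the limit is taken from this README, not queried from Ray.

```python
import sys

MAX_SUPPORTED = (3, 10)  # upper bound stated in this README


def python_is_supported(version=None):
    """Return True if the (major, minor) version is at most MAX_SUPPORTED."""
    major, minor = (version or sys.version_info)[:2]
    return (major, minor) <= MAX_SUPPORTED
```

Calling `python_is_supported()` with no argument checks the interpreter that is currently running.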

Running Examples

There are currently two examples ready for you to run.

Cartpole

In Unreal Engine, go to the maps folder and start the Cartpole map. Once it is running, go to your terminal and, inside the unray-bridge folder, run:

python main_cartpole.py

If everything is correct, the cartpole will start to move.

MultiAgent Arena

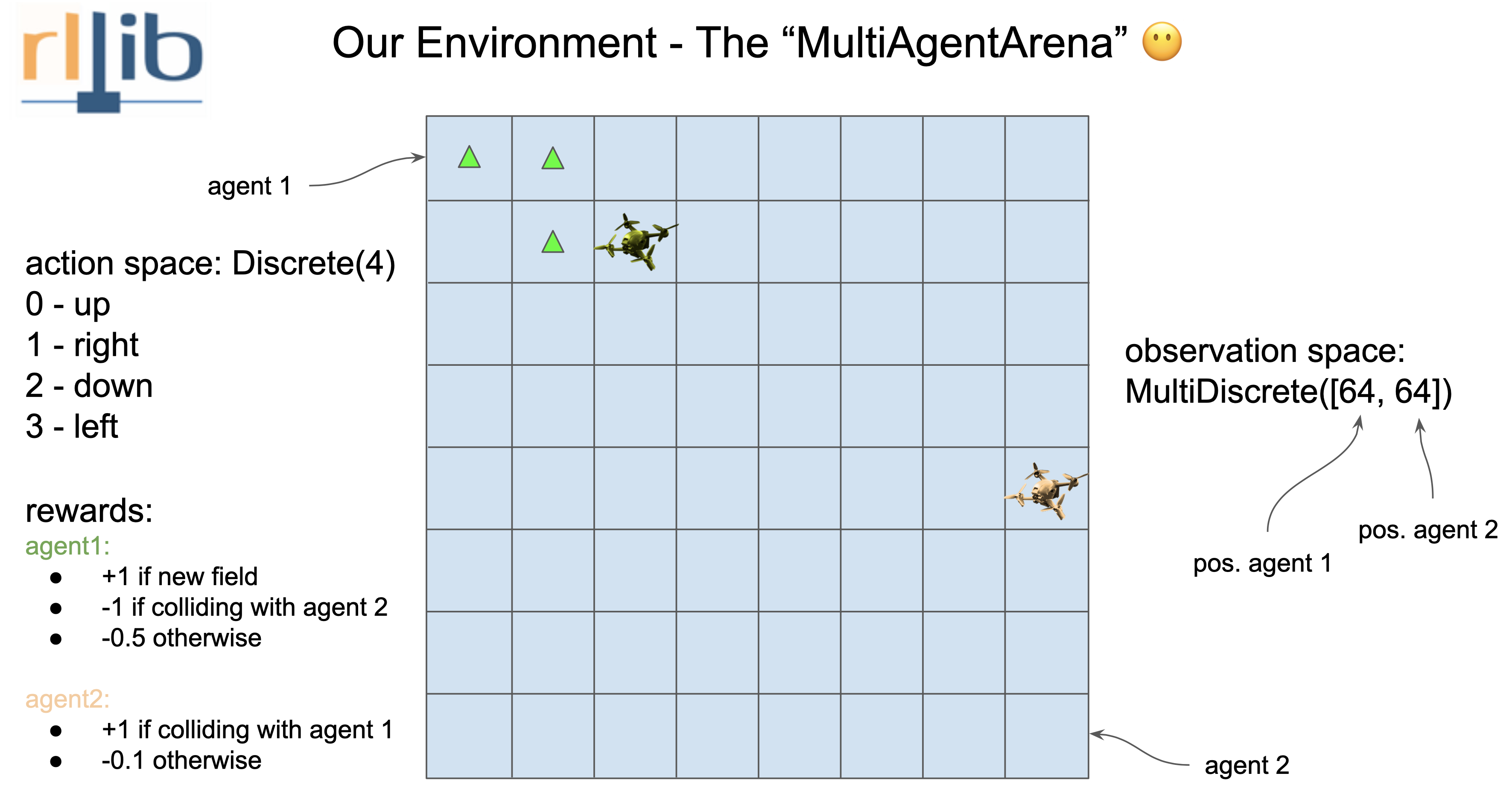

In this env, you have two agents competing in a single env.

In Unreal Engine, go to the maps folder and start the MultiAgentArena map. Once it is running, go to your terminal and, inside the unray-bridge folder, run:

python main_multiagentArena.py

If everything is correct, the agents will start to move.

MultiAgent Arena Parallel Training

In Unreal Engine, go to the maps folder and start the MultiAgentArena_BP map. Once it is running, go to your terminal and, inside the unray-bridge folder, run:

python parallel_multiagentArena.py

If everything is correct, the four envs will start to move.

RL Environment for simple training

Single Agent

[In dev process]

Multiagent

To define a custom environment, you have to create a dictionary with the observation and action spaces of each agent. This is called an env_config dict.

    # Define the env_config dict for each agent.
    env_config = {
        "agent-1": {
            "observation": <Space>,
            "action": <Space>
        },
        "agent-2": {
            "observation": <Space>,
            "action": <Space>
        }
        ...
    }

Each Space is taken from BridgeSpaces:

    from unray_bridge.envs.spaces import BridgeSpaces

This dictionary defines the independent spaces for each of the agents. Then, the environment is instantiated from MultiAgentBridgeEnv, which is imported from unray_bridge:

    from unray_bridge.envs.bridge_env import MultiAgentBridgeEnv

The constructor needs the environment name, IP, port and the config.

    env = MultiAgentBridgeEnv(
        name = "multiagent-arena",
        ip = 'localhost',
        port = 10110,
        config = env_config
    )

Multiagent Workflow

As in the single-agent case, the environment dynamics are defined externally in the UE5 scenario. The BridgeEnv lets RLlib communicate with the environment via a TCP/IP connection, sending the agent actions chosen by the Ray algorithms and receiving the observation vectors from the environment for the trainer to train on. The MultiAgentBridgeEnv creates the connection_handler that maintains the socket communication.
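The exchange described above (send actions, receive observations) can be pictured with plain Python sockets. This is a minimal sketch and not the connection_handler from unray-bridge: the float32 little-endian wire format, the function names, and the `fake_environment` thread that stands in for UE5 are all assumptions made for illustration.

```python
import socket
import struct
import threading


def recv_exact(conn, n_bytes):
    """Read exactly n_bytes from a socket, or raise if the peer closes early."""
    chunks = []
    remaining = n_bytes
    while remaining > 0:
        chunk = conn.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed before the full message arrived")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def fake_environment(server_sock, n_actions, observation):
    """Stand-in for the UE5 side: accept one client, read actions, reply with observations."""
    conn, _ = server_sock.accept()
    with conn:
        recv_exact(conn, 4 * n_actions)  # assumed format: one float32 per action value
        conn.sendall(struct.pack(f"<{len(observation)}f", *observation))


def exchange(host, port, actions, n_observations):
    """Send the action values and return the observation values sent back."""
    with socket.create_connection((host, port)) as client:
        client.sendall(struct.pack(f"<{len(actions)}f", *actions))
        raw = recv_exact(client, 4 * n_observations)
    return list(struct.unpack(f"<{n_observations}f", raw))


def demo():
    """Run one action/observation round trip over the loopback interface."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.settimeout(5.0)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(
        target=fake_environment, args=(server, 2, [1.0, 2.0, 3.0])
    )
    worker.start()
    try:
        return exchange("127.0.0.1", port, [0.0, 1.0], 3)
    finally:
        worker.join()
        server.close()
```

In a real run, the UE5 scene plays the role of `fake_environment`, and the Python side repeats the exchange once per environment step.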

1. How are the multiagent dictionaries structured for sending to UE5?

Suppose we have n agents in the environment, each with a given action vector a_i. The total amount of data is then the sum of the sizes of the action vectors. Stacking these vectors gives the final buffer that is sent to the UE5 socket server.
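The stacking step can be sketched with the standard `struct` module. The agent ordering, the float32 little-endian encoding, and the names `pack_actions` and `unpack_actions` are assumptions for illustration; the actual byte layout used by unray-bridge is not specified here.

```python
import struct


def pack_actions(actions_by_agent, agent_order):
    """Stack every agent's action vector, in a fixed order, into one byte buffer."""
    flat = []
    for agent_id in agent_order:
        flat.extend(float(x) for x in actions_by_agent[agent_id])
    return struct.pack(f"<{len(flat)}f", *flat)


def unpack_actions(buffer, sizes_by_agent, agent_order):
    """Split a stacked buffer back into one action vector per agent."""
    total = sum(sizes_by_agent[agent_id] for agent_id in agent_order)
    flat = struct.unpack(f"<{total}f", buffer)
    result = {}
    offset = 0
    for agent_id in agent_order:
        size = sizes_by_agent[agent_id]
        result[agent_id] = list(flat[offset:offset + size])
        offset += size
    return result
```

Both sides have to agree on the agent order and on the size of each vector, since the buffer itself carries no agent names.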

Multiagent Example: Multiagent-Arena

As a simple example, we will build a Multiagent-Arena environment in UE5 and train it in Ray using the unray-bridge framework.

Img taken from https://github.com/sven1977/rllib_tutorials/blob/main/ray_summit_2021/tutorial_notebook.ipynb

Understanding the environment

Following the Unray-bridge philosophy, we first have to break down what the environment needs. We have two agents that move in the same scenario, an 8x8 square grid. Each agent can only move one square per step, and never diagonally. (The reward system is defined in the image.)

Hence we got:

- Agent 1 and 2 Observation: MultiDiscrete([64])

- Agent 1 and 2 Action: Discrete(4)
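To see where the numbers 64 and 4 come from, the sketch below numbers the cells of the 8x8 grid from 0 to 63 and maps each of the four actions to one non-diagonal move. The row-major numbering, the action-to-direction mapping, and the choice to keep an agent in place when it would leave the grid are assumptions for illustration; the UE5 scene may use different conventions.

```python
GRID_SIZE = 8

# Hypothetical action numbering: 0 = up, 1 = right, 2 = down, 3 = left.
MOVES = {0: (-1, 0), 1: (0, 1), 2: (1, 0), 3: (0, -1)}


def cell_index(row, col):
    """Map a (row, col) position to a single index in 0..63 (row-major)."""
    return row * GRID_SIZE + col


def cell_position(index):
    """Inverse of cell_index: map an index in 0..63 back to (row, col)."""
    return divmod(index, GRID_SIZE)


def apply_action(index, action):
    """Move one square in the chosen direction, staying inside the grid."""
    row, col = cell_position(index)
    d_row, d_col = MOVES[action]
    new_row = min(max(row + d_row, 0), GRID_SIZE - 1)
    new_col = min(max(col + d_col, 0), GRID_SIZE - 1)
    return cell_index(new_row, new_col)
```

Every reachable position has an index below 64, and every move is one of four choices, which matches the sizes of the observation and action spaces listed above.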

Defining the env_config as follows:

    env_config = {
        "agent-1": {
            "observation": BridgeSpaces.MultiDiscrete([64], [64]),
            "action": BridgeSpaces.Discrete(4),
        },
        "agent-2": {
            "observation": BridgeSpaces.MultiDiscrete([64], [64]),
            "action": BridgeSpaces.Discrete(4),
        }
    }

Create the environment

    env = MultiAgentBridgeEnv(
        name = "multiagent-arena",
        ip = 'localhost',
        port = 10110,
        config = env_config
    )
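Before passing an env_config to the constructor, it can help to check that every agent defines both of its spaces. The helper below is a hypothetical convenience, not part of unray-bridge. It only inspects the dictionary keys, so it works with any space objects.

```python
REQUIRED_KEYS = ("observation", "action")


def check_env_config(env_config):
    """Raise ValueError if an agent lacks a space; return the sorted agent IDs."""
    if not env_config:
        raise ValueError("env_config must define at least one agent")
    for agent_id, spaces in env_config.items():
        missing = [key for key in REQUIRED_KEYS if key not in spaces]
        if missing:
            raise ValueError(f"{agent_id} is missing: {', '.join(missing)}")
    return sorted(env_config)
```

For the Multiagent-Arena config above, the check would return `["agent-1", "agent-2"]`.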