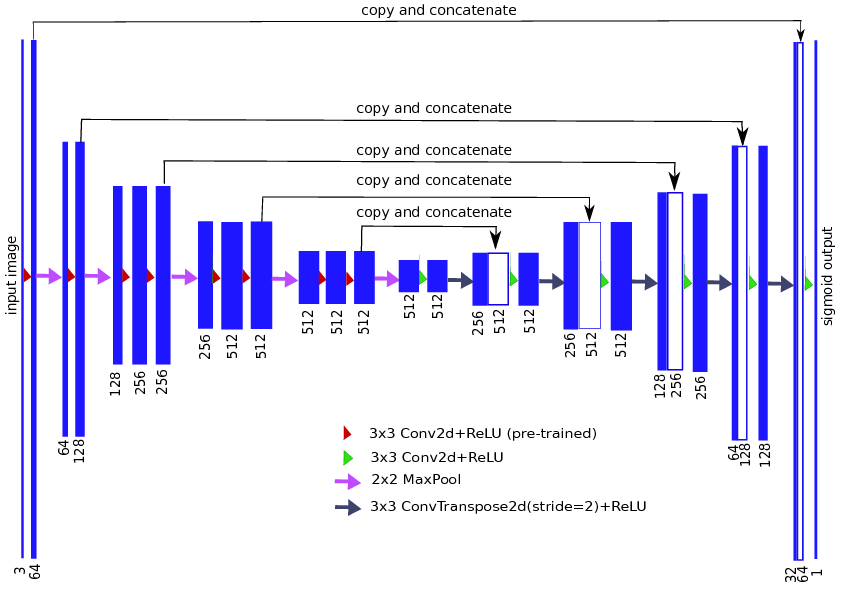

I am using your pretrained VGG11 model for the Kaggle Airbus Ship Detection competition. The output is binary (ship vs. background). My first problem is that during training the loss keeps decreasing, but the Jaccard score does not change at all: it stays at 0.37004 after every epoch.
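A Jaccard score that is identical to five decimal places across nine epochs suggests that the thresholded predictions may not be changing at all, for example if every predicted mask is empty. The plain NumPy sketch below is one way to check this. It computes per-image Jaccard with an explicit convention for empty masks and reports how many predicted masks are empty. The function names, the `empty_score` convention and the 0.5 threshold are illustrative assumptions, not the repository's validation code.

```python
import numpy as np


def jaccard(pred_mask, true_mask, empty_score=1.0):
    """Jaccard (IoU) between two binary masks of the same shape."""
    pred = np.asarray(pred_mask).astype(bool)
    true = np.asarray(true_mask).astype(bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        # Both masks are empty; conventions differ, so make the choice explicit.
        return empty_score
    inter = np.logical_and(pred, true).sum()
    return float(inter) / float(union)


def mean_jaccard(probs, true_masks, threshold=0.5):
    """Average per-image Jaccard after thresholding sigmoid probabilities."""
    scores = [jaccard(p > threshold, t) for p, t in zip(probs, true_masks)]
    return float(np.mean(scores))


def fraction_empty_predictions(probs, threshold=0.5):
    """Share of images whose thresholded prediction has no positive pixel."""
    return float(np.mean([not (p > threshold).any() for p in probs]))
```

If `fraction_empty_predictions` returns 1.0 on the validation set, the mean Jaccard under this convention reduces to the fraction of validation images that contain no ships. That value would stay constant no matter how far the loss drops.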

```
Epoch 1, lr 0.01: 0%| | 0/57424 [00:00<?, ?it/s]
0.01
Epoch 1, lr 0.01: 100%|█████████▉| 57422/57424 [1:49:41<00:00, 8.33it/s, loss=0.00074]
Epoch 2, lr 0.01: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00063, jaccard: 0.37004
0.01
Epoch 2, lr 0.01: 100%|█████████▉| 57422/57424 [1:50:11<00:00, 8.14it/s, loss=0.00350]
Epoch 3, lr 0.01: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.63987, jaccard: 0.37004
0.01
Epoch 3, lr 0.01: 100%|█████████▉| 57422/57424 [1:49:52<00:00, 8.04it/s, loss=0.00102]
Epoch 4, lr 0.01: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00081, jaccard: 0.37004
0.01
Epoch 4, lr 0.01: 100%|█████████▉| 57422/57424 [1:49:59<00:00, 8.02it/s, loss=0.00036]
Epoch 5, lr 0.01: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00043, jaccard: 0.37004
0.01
Epoch 5, lr 0.01: 100%|█████████▉| 57422/57424 [1:49:53<00:00, 8.01it/s, loss=0.00035]
Epoch 6, lr 0.001: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00039, jaccard: 0.37004
0.001
Epoch 6, lr 0.001: 100%|█████████▉| 57422/57424 [1:49:33<00:00, 7.97it/s, loss=0.00038]
Epoch 7, lr 0.001: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00039, jaccard: 0.37004
0.001
Epoch 7, lr 0.001: 100%|█████████▉| 57422/57424 [1:49:32<00:00, 8.26it/s, loss=0.00051]
Epoch 8, lr 0.001: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00039, jaccard: 0.37004
0.001
Epoch 8, lr 0.001: 100%|█████████▉| 57422/57424 [1:49:33<00:00, 8.15it/s, loss=0.00085]
Epoch 9, lr 0.001: 0%| | 0/57424 [00:00<?, ?it/s]
Valid loss: 0.00039, jaccard: 0.37004
0.001
Epoch 9, lr 0.001: 92%|█████████▏| 52952/57424 [1:40:36<08:59, 8.29it/s, loss=0.00052]
```
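The very small loss values in this log (around 0.0004) do not necessarily indicate good segmentation. When ships cover only a tiny fraction of the pixels, predicting background everywhere already gives a low per-pixel binary cross-entropy. The NumPy sketch below illustrates this on a synthetic 256×256 mask with a single 10×10 ship. It adds a soft Jaccard term of the form H − log J, a commonly used way to penalize this failure mode. The mask size, the predicted probabilities and the `jaccard_weight` parameter are illustrative assumptions. This is not the repository's loss implementation, and a real training loss would be written in PyTorch so that it can be backpropagated.

```python
import numpy as np


def bce(probs, target, eps=1e-7):
    """Mean per-pixel binary cross-entropy."""
    p = np.clip(probs, eps, 1 - eps)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))


def soft_jaccard(probs, target, eps=1e-7):
    """Differentiable Jaccard: probabilities used in place of hard 0/1 predictions."""
    inter = np.sum(probs * target)
    union = np.sum(probs) + np.sum(target) - inter
    return float((inter + eps) / (union + eps))


def combined_loss(probs, target, jaccard_weight=1.0):
    """H - w * log(J); jaccard_weight is a hypothetical tuning knob."""
    return bce(probs, target) - jaccard_weight * np.log(soft_jaccard(probs, target))


# Synthetic example: one small "ship" and a model that predicts background everywhere.
target = np.zeros((256, 256))
target[100:110, 100:110] = 1
probs = np.full((256, 256), 0.001)
print(bce(probs, target), -np.log(soft_jaccard(probs, target)))
```

On this example the BCE term is roughly 0.01 while −log J is above 7, so the combined loss makes the all-background prediction expensive even though BCE alone looks excellent.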

My next question is how to make the final prediction. I checked your paper, and it says that after applying a sigmoid to the output you simply use a threshold of 0.3. So for my own problem I can just do the same, correct? I also tried several thresholds on my output. Here is an example output I got with a threshold of 0.509. It is clearly detecting something, but the predicted ship areas are not very continuous, unlike the ones in your paper. Do you know why, or how to deal with it? And how should I choose a better threshold?
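Applying a sigmoid to the network output and then thresholding is the usual way to get a binary mask. However, a threshold tuned on one dataset will not necessarily transfer to another. A common way to choose one for a new dataset is to sweep candidate values on a held-out validation set and keep the value with the best mean Jaccard. The sketch below assumes the model's raw logits and the ground-truth masks are available as NumPy arrays. The function names and the candidate grid are hypothetical.

```python
import numpy as np


def sigmoid(logits):
    """Map raw network outputs (logits) to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-logits))


def iou(pred, true):
    """Jaccard between boolean masks; two empty masks count as a perfect match."""
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0
    return np.logical_and(pred, true).sum() / union


def best_threshold(val_logits, val_masks, candidates=None):
    """Sweep thresholds on validation data; return (threshold, mean IoU) of the best."""
    if candidates is None:
        candidates = np.linspace(0.1, 0.9, 17)  # 0.10, 0.15, ..., 0.90
    best_t, best_score = None, -1.0
    for t in candidates:
        score = np.mean([
            iou(sigmoid(logits) > t, mask.astype(bool))
            for logits, mask in zip(val_logits, val_masks)
        ])
        if score > best_score:  # strict ">" keeps the lowest threshold among ties
            best_t, best_score = float(t), float(score)
    return best_t, best_score


def predict_mask(logits, threshold):
    """Final binary mask for one image."""
    return sigmoid(logits) > threshold
```

Patchy, non-continuous masks may reflect an undertrained model more than a bad threshold. As post-processing, `scipy.ndimage.binary_closing` can fill small gaps, and `scipy.ndimage.label` can be used to find and drop tiny connected components. Both are heuristics, not a substitute for a model that has converged.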