🔥NEWS🔥: We have a new preprint! Check it out at bioRxiv: https://www.biorxiv.org/content/10.1101/2024.02.03.576026v1

BiaPy is an open-source, ready-to-use, all-in-one library that provides deep-learning workflows for a wide variety of bioimage analysis tasks, including 2D and 3D semantic segmentation, instance segmentation, object detection, image denoising, single-image super-resolution, self-supervised learning, and image classification.

BiaPy is a versatile platform designed for both experienced computer scientists and users with little programming experience. It offers several user-friendly entry points to our workflows.

This repository is under active development by the Biomedical Computer Vision group at the University of the Basque Country and the Donostia International Physics Center.

For a comprehensive overview of BiaPy and its functionality, see the following videos:

- BiaPy history and GUI demo at RTmfm, by Ignacio Arganda-Carreras and Daniel Franco-Barranco.
- BiaPy presentation at the Euro-BioImaging Virtual Pub, by Ignacio Arganda-Carreras.

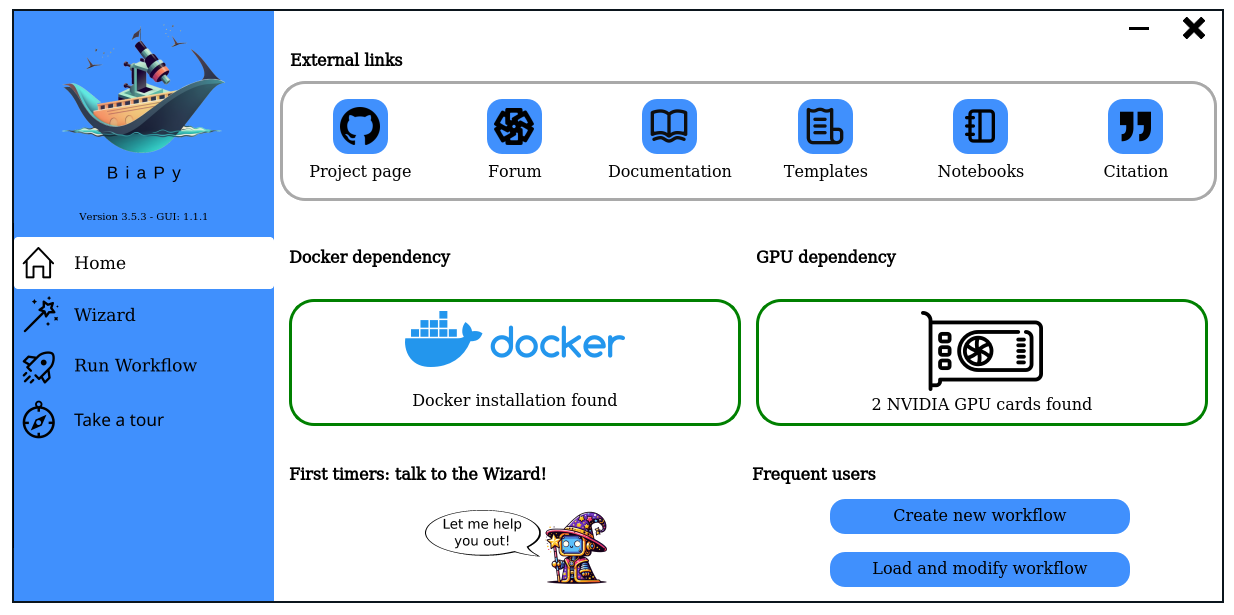

You can also use BiaPy through our graphical user interface (GUI).

Project page: [BiaPy GUI]

López-Cano, Daniel, et al. "Characterizing Structure Formation through Instance Segmentation" (2023). This study presents a machine-learning framework that predicts the formation of dark matter haloes from early-universe density perturbations. Using two neural networks, it identifies the particles that make up haloes and groups them by halo membership. The framework accurately predicts halo masses and shapes and compares favorably with N-body simulations. By analyzing variations in the initial conditions, the open-source model could enhance analytical methods for studying structure formation. BiaPy was used to create the watershed approach. [Documentation (not yet available)] [Paper]

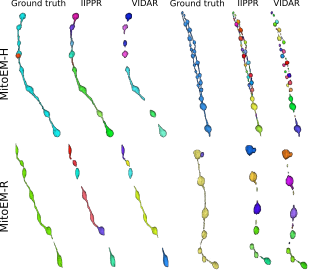

Franco-Barranco, Daniel, et al. "Current Progress and Challenges in Large-scale 3D Mitochondria Instance Segmentation." (2023). This paper reports the results of the MitoEM challenge on 3D instance segmentation of mitochondria in electron microscopy images, held in conjunction with IEEE-ISBI 2021. It discusses the top-performing methods, addresses errors in the ground truth, and proposes a new scoring system to improve segmentation evaluation. Despite this progress, segmenting mitochondria with complex shapes remains challenging, so the competition is kept open for further submissions. BiaPy was used to create the MitoEM challenge baseline (U2D-BC). [Documentation] [Paper] [Toolbox]

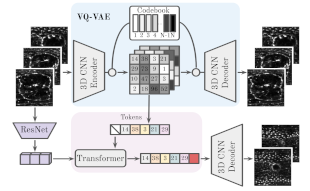

Backová, Lenka, et al. "Modeling Wound Healing Using Vector Quantized Variational Autoencoders and Transformers." 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI). IEEE, 2023. This study focuses on time-lapse sequences of Drosophila embryos healing from laser-incised wounds. The authors use a two-stage approach, combining a vector quantized variational autoencoder with an autoregressive transformer, to model wound healing as a video prediction task. BiaPy was used to create the wound segmentation masks. [Documentation] [Paper]

Andrés-San Román, Jesús A., et al. "CartoCell, a high-content pipeline for 3D image analysis, unveils cell morphology patterns in epithelia." Cell Reports Methods (2023). Combining deep learning and 3D imaging is crucial for high-content analysis. The paper introduces CartoCell, a method that accurately labels 3D epithelial cysts, enabling the quantification of cellular features and the mapping of their distribution. It can be adapted to other epithelial tissues. The CartoCell method was created using BiaPy. [Documentation] [Paper]

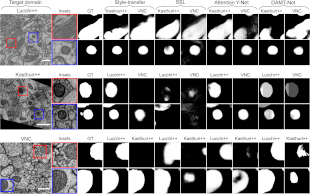

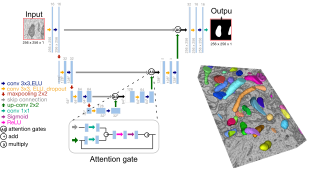

Franco-Barranco, Daniel, et al. "Deep learning based domain adaptation for mitochondria segmentation on EM volumes." Computer Methods and Programs in Biomedicine 222 (2022): 106949. This study addresses mitochondria segmentation across different datasets using three unsupervised domain adaptation approaches: style transfer, self-supervised learning, and multi-task neural networks. To ensure robust generalization, it proposes a new training stopping criterion based on morphological priors from the source domain. BiaPy was used to implement the Attention U-Net. [Documentation] [Paper]

Franco-Barranco, Daniel, et al. "Stable deep neural network architectures for mitochondria segmentation on electron microscopy volumes." Neuroinformatics 20.2 (2022): 437-450. Recent deep learning models have shown impressive performance in mitochondria segmentation, but they are often published without code or training details, which hampers reproducibility. Following best practices, this study comprehensively compares state-of-the-art architectures and U-Net variants for mitochondria segmentation, assessing the impact and stability of each. The resulting models consistently achieve state-of-the-art results on several datasets, including EPFL Hippocampus, Lucchi++, and Kasthuri++. BiaPy was used to implement the methods compared in the study. [Documentation] [Paper]

Wei, Donglai, et al. "MitoEM dataset: Large-scale 3D mitochondria instance segmentation from EM images." International Conference on Medical Image Computing and Computer-Assisted Intervention. Cham: Springer International Publishing, 2020. Existing mitochondria segmentation datasets are small, which raises questions about the robustness of the methods trained on them. The MitoEM dataset introduces larger 3D volumes containing diverse mitochondria, which challenge existing instance segmentation methods and highlight the need for improved techniques. BiaPy was used to create the MitoEM challenge baseline (U2D-BC). [Documentation] [Paper] [Challenge]

| Name | Role |
|---|---|
| Daniel Franco-Barranco | Creator, Implementation, Software design |
| Lenka Backová | Implementation |
| Aitor Gonzalez-Marfil | Implementation |
| Ignacio Arganda-Carreras | Supervision, Implementation |
| Arrate Muñoz-Barrutia | Supervision |

| Name | Role |
|---|---|
| Jesús Ángel Andrés-San Román | Implementation |
| Pedro Javier Gómez Gálvez | Supervision, Implementation |
| Luis M. Escudero | Supervision |
| Iván Hidalgo Cenalmor | Implementation |
| Donglai Wei | Supervision |
| Clément Caporal | Implementation |
| Anatole Chessel | Supervision |

Franco-Barranco, Daniel, et al. "BiaPy: a ready-to-use library for Bioimage Analysis Pipelines." 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI). IEEE, 2023.