Error processing line 1 of /home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/matplotlib-3.5.2-py3.8-nspkg.pth:

Traceback (most recent call last):

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site.py", line 169, in addpackage

exec(line)

File "<string>", line 1, in <module>

File "<frozen importlib._bootstrap>", line 553, in module_from_spec

AttributeError: 'NoneType' object has no attribute 'loader'

Remainder of file ignored
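The `Error processing line 1 of ...matplotlib-3.5.2-py3.8-nspkg.pth` block is printed by `site.py` whenever a Python interpreter in this environment starts up and processes that `.pth` file, which is why it repeats throughout the log. It appears to be unrelated to the compile failure further down. Below is a minimal sketch for locating such `*-nspkg.pth` files. It assumes the standard `site.getsitepackages()` layout, and `find_nspkg_pth` is a hypothetical helper name:

```python
import glob
import os
import site


def find_nspkg_pth(dirs):
    """Return sorted paths of all *-nspkg.pth files found in the given directories."""
    found = []
    for d in dirs:
        # site.getsitepackages() may list directories that do not exist; skip them.
        if os.path.isdir(d):
            found.extend(glob.glob(os.path.join(d, "*-nspkg.pth")))
    return sorted(found)


if __name__ == "__main__":
    for path in find_nspkg_pth(site.getsitepackages()):
        print(path)
```

If the reported file belongs to a stale matplotlib egg, reinstalling matplotlib with `pip` is one possible way to clear the message. This is a suggestion only; the log does not confirm it.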

running install

/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/install.py:34: SetuptoolsDeprecationWarning: setup.py install is deprecated. Use build and pip and other standards-based tools.

warnings.warn(

/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/easy_install.py:144: EasyInstallDeprecationWarning: easy_install command is deprecated. Use build and pip and other standards-based tools.

warnings.warn(

running bdist_egg

running egg_info

writing pointops2.egg-info/PKG-INFO

writing dependency_links to pointops2.egg-info/dependency_links.txt

writing top-level names to pointops2.egg-info/top_level.txt

Error processing line 1 of /home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/matplotlib-3.5.2-py3.8-nspkg.pth:

Traceback (most recent call last):

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site.py", line 169, in addpackage

exec(line)

File "<string>", line 1, in <module>

File "<frozen importlib._bootstrap>", line 553, in module_from_spec

AttributeError: 'NoneType' object has no attribute 'loader'

Remainder of file ignored

reading manifest file 'pointops2.egg-info/SOURCES.txt'

writing manifest file 'pointops2.egg-info/SOURCES.txt'

installing library code to build/bdist.linux-x86_64/egg

running install_lib

running build_ext

building 'pointops2_cuda' extension

Error processing line 1 of /home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/matplotlib-3.5.2-py3.8-nspkg.pth:

Traceback (most recent call last):

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site.py", line 169, in addpackage

exec(line)

File "<string>", line 1, in <module>

File "<frozen importlib._bootstrap>", line 553, in module_from_spec

AttributeError: 'NoneType' object has no attribute 'loader'

Remainder of file ignored

Emitting ninja build file /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/build.ninja...

Compiling objects...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

Error processing line 1 of /home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/matplotlib-3.5.2-py3.8-nspkg.pth:

Traceback (most recent call last):

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site.py", line 169, in addpackage

exec(line)

File "<string>", line 1, in <module>

File "<frozen importlib._bootstrap>", line 553, in module_from_spec

AttributeError: 'NoneType' object has no attribute 'loader'

Remainder of file ignored

[1/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/knnquery/knnquery_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/knnquery/knnquery_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/knnquery/knnquery_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/knnquery/knnquery_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/knnquery/knnquery_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/knnquery/knnquery_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/knnquery/knnquery_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/knnquery/knnquery_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.
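The build fails on `#include <THC/THC.h>` even though `-I.../torch/include/THC` is on the command line. This is consistent with the installed PyTorch no longer shipping the legacy THC headers, which PyTorch dropped starting with the 1.11 release. Below is a minimal check that the header really is absent. It assumes `torch` is importable from the same `pytorch-gpu` environment, and `thc_header_path` is a hypothetical helper name:

```python
import os


def thc_header_path(torch_dir):
    """Path where the legacy THC/THC.h header would live inside a torch install."""
    # Mirrors the -I.../site-packages/torch/include/THC flag in the compiler command.
    return os.path.join(torch_dir, "include", "THC", "THC.h")


if __name__ == "__main__":
    import torch  # assumed to be the same torch that setup.py builds against

    header = thc_header_path(os.path.dirname(torch.__file__))
    print("torch", torch.__version__)
    print(header, "exists" if os.path.isfile(header) else "is missing")
```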

[2/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/grouping/grouping_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/grouping/grouping_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/grouping/grouping_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/grouping/grouping_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/grouping/grouping_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/grouping/grouping_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/grouping/grouping_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/grouping/grouping_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[3/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/aggregation/aggregation_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/aggregation/aggregation_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/aggregation/aggregation_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/aggregation/aggregation_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/aggregation/aggregation_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/aggregation/aggregation_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/aggregation/aggregation_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/aggregation/aggregation_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[4/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention_v2/attention_cuda_v2.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention_v2/attention_cuda_v2.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention_v2/attention_cuda_v2.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention_v2/attention_cuda_v2.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention_v2/attention_cuda_v2.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention_v2/attention_cuda_v2.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention_v2/attention_cuda_v2.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention_v2/attention_cuda_v2.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[5/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention/attention_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention/attention_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention/attention_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention/attention_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention/attention_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention/attention_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/attention/attention_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/attention/attention_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[6/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe/relative_pos_encoding_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe/relative_pos_encoding_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe/relative_pos_encoding_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe/relative_pos_encoding_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe/relative_pos_encoding_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe/relative_pos_encoding_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe/relative_pos_encoding_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe/relative_pos_encoding_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[7/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/interpolation/interpolation_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/interpolation/interpolation_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/interpolation/interpolation_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/interpolation/interpolation_cuda.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/interpolation/interpolation_cuda.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/interpolation/interpolation_cuda.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/interpolation/interpolation_cuda.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/interpolation/interpolation_cuda.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

[8/12] c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe_v2/relative_pos_encoding_cuda_v2.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe_v2/relative_pos_encoding_cuda_v2.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe_v2/relative_pos_encoding_cuda_v2.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe_v2/relative_pos_encoding_cuda_v2.o

c++ -MMD -MF /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe_v2/relative_pos_encoding_cuda_v2.o.d -pthread -B /home/yn/anaconda3/envs/pytorch-gpu/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/TH -I/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/yn/anaconda3/envs/pytorch-gpu/include/python3.8 -c -c /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe_v2/relative_pos_encoding_cuda_v2.cpp -o /home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/build/temp.linux-x86_64-3.8/src/rpe_v2/relative_pos_encoding_cuda_v2.o -g -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=pointops2_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/home/yn/project/Stratified-Transformer-main/Stratified-Transformer-main/lib/pointops2/src/rpe_v2/relative_pos_encoding_cuda_v2.cpp:2:10: fatal error: THC/THC.h: No such file or directory

2 | #include <THC/THC.h>

| ^~~~~~~~~~~

compilation terminated.

ninja: build stopped: subcommand failed.
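All eight failing objects (`knnquery`, `grouping`, `aggregation`, `attention`, `attention_v2`, `rpe`, `rpe_v2`, `interpolation`) stop at line 2 on the same THC include. One commonly used workaround, offered here as an untested sketch rather than a confirmed fix, is to replace that include with the ATen CUDA header `ATen/cuda/CUDAContext.h` throughout `lib/pointops2/src`. The sketch assumes the sources use no other THC symbols, such as `THCState` or `THCudaCheck`. Any that remain would need their own replacements before the build succeeds. `patch_thc_includes` is a hypothetical helper name:

```python
import pathlib

OLD_INCLUDE = "#include <THC/THC.h>"
NEW_INCLUDE = "#include <ATen/cuda/CUDAContext.h>"


def patch_thc_includes(src_root):
    """Replace the legacy THC include in every .cpp/.cu/.h file under src_root.

    Returns the list of files that were modified.
    """
    changed = []
    for path in sorted(pathlib.Path(src_root).rglob("*")):
        # Only touch C++/CUDA sources and headers; skip directories and other files.
        if path.suffix not in {".cpp", ".cu", ".h"} or not path.is_file():
            continue
        text = path.read_text()
        if OLD_INCLUDE in text:
            path.write_text(text.replace(OLD_INCLUDE, NEW_INCLUDE))
            changed.append(path)
    return changed


if __name__ == "__main__":
    # Assumed to run from lib/pointops2 (next to setup.py), per the paths in the log.
    for p in patch_thc_includes("src"):
        print("patched", p)
```

Keep a copy of `src/` before running this, and delete the stale `build/` directory before invoking `setup.py` again.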

Traceback (most recent call last):

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1740, in _run_ninja_build

subprocess.run(

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/subprocess.py", line 516, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "setup.py", line 12, in <module>

setup(

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/__init__.py", line 87, in setup

return distutils.core.setup(**attrs)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/core.py", line 148, in setup

return run_commands(dist)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/core.py", line 163, in run_commands

dist.run_commands()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 967, in run_commands

self.run_command(cmd)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/dist.py", line 1214, in run_command

super().run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 986, in run_command

cmd_obj.run()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/install.py", line 74, in run

self.do_egg_install()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/install.py", line 123, in do_egg_install

self.run_command('bdist_egg')

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/dist.py", line 1214, in run_command

super().run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 986, in run_command

cmd_obj.run()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/bdist_egg.py", line 165, in run

cmd = self.call_command('install_lib', warn_dir=0)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/bdist_egg.py", line 151, in call_command

self.run_command(cmdname)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/dist.py", line 1214, in run_command

super().run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 986, in run_command

cmd_obj.run()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/install_lib.py", line 11, in run

self.build()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/command/install_lib.py", line 107, in build

self.run_command('build_ext')

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/dist.py", line 1214, in run_command

super().run_command(command)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 986, in run_command

cmd_obj.run()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/build_ext.py", line 79, in run

_build_ext.run(self)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/Cython/Distutils/old_build_ext.py", line 186, in run

_build_ext.build_ext.run(self)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/command/build_ext.py", line 339, in run

self.build_extensions()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 741, in build_extensions

build_ext.build_extensions(self)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/Cython/Distutils/old_build_ext.py", line 195, in build_extensions

_build_ext.build_ext.build_extensions(self)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/command/build_ext.py", line 448, in build_extensions

self._build_extensions_serial()

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/command/build_ext.py", line 473, in _build_extensions_serial

self.build_extension(ext)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/command/build_ext.py", line 202, in build_extension

_build_ext.build_extension(self, ext)

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/setuptools/_distutils/command/build_ext.py", line 528, in build_extension

objects = self.compiler.compile(sources,

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 562, in unix_wrap_ninja_compile

_write_ninja_file_and_compile_objects(

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1419, in _write_ninja_file_and_compile_objects

_run_ninja_build(

File "/home/yn/anaconda3/envs/pytorch-gpu/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1756, in _run_ninja_build

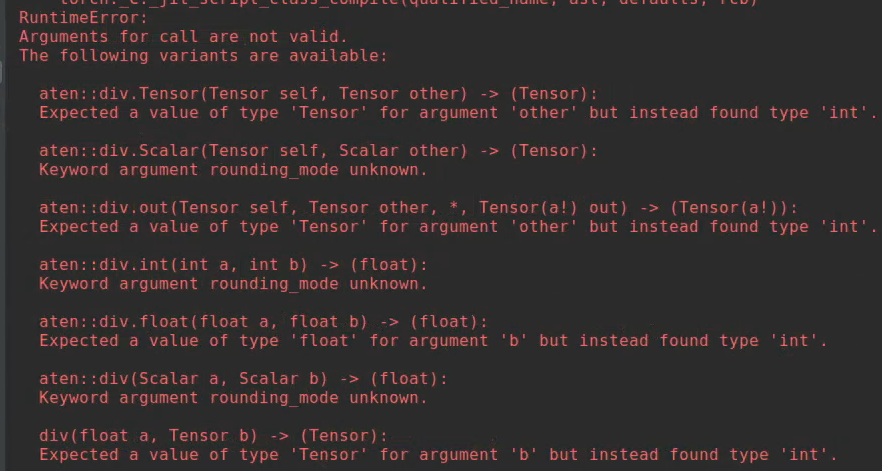

raise RuntimeError(message) from e

RuntimeError: Error compiling objects for extension