vkdt is a workflow toolbox for raw photography and video.

vkdt is designed with high performance in mind. it features a flexible processing node graph at its core, enabling real-time support for animations, timelapses, raw video, and heavy-lifting algorithms such as image alignment and better highlight inpainting. the faster processing is what makes these more complex operations practical.

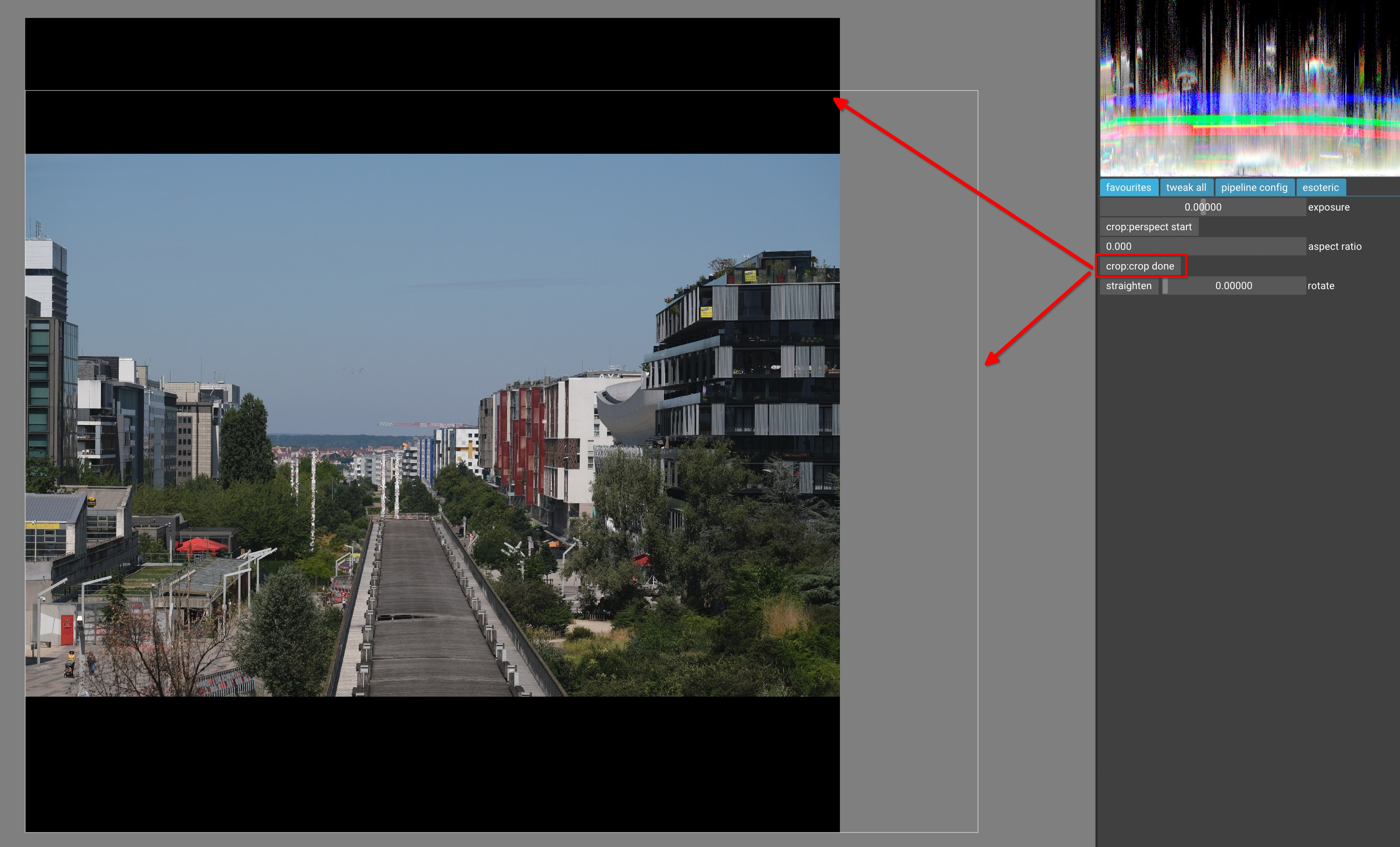

the processing pipeline is a generic node graph (DAG) which supports multiple inputs and multiple outputs. all processing is done in glsl shaders via vulkan. this facilitates potentially heavy-duty computational photography tasks, for instance aligning multiple raw files and merging them before further processing, as well as outputting intermediate results for debugging. the gui profits from this scheme as well: it can display textures while they are still on the GPU and send the data to multiple targets, such as the main view and histograms.
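to make the DAG idea concrete, here is a minimal C sketch of how such a graph could be scheduled: a topological sort (Kahn's algorithm) yields an order in which every node runs only after all of its inputs are ready. the `graph_t` adjacency matrix and the `graph_schedule` function are hypothetical and not vkdt's actual data structures; the example graph used with it (two raw inputs feeding an alignment node whose output goes to both a display and a histogram) is made up for illustration.

```c
#define MAX_NODES 16

/* hypothetical graph, not vkdt's: edges[i][j] != 0 means
 * the output of node i is connected to an input of node j */
typedef struct graph_t
{
  int num_nodes;
  int edges[MAX_NODES][MAX_NODES];
}
graph_t;

/* Kahn's algorithm: writes an execution order into order[] such that every
 * node comes after all nodes feeding it. returns the number of scheduled
 * nodes, or -1 if the graph contains a cycle (i.e. is not a DAG). */
int graph_schedule(const graph_t *g, int order[MAX_NODES])
{
  int indegree[MAX_NODES] = {0};
  for(int i = 0; i < g->num_nodes; i++)
    for(int j = 0; j < g->num_nodes; j++)
      if(g->edges[i][j]) indegree[j]++; // count inputs of node j

  // every node is enqueued at most once, so MAX_NODES slots suffice
  int queue[MAX_NODES], head = 0, tail = 0;
  for(int j = 0; j < g->num_nodes; j++)
    if(indegree[j] == 0) queue[tail++] = j; // source nodes, e.g. raw inputs

  int count = 0;
  while(head < tail)
  {
    const int n = queue[head++];
    order[count++] = n;
    // node n is done: release every consumer whose inputs are now all ready
    for(int j = 0; j < g->num_nodes; j++)
      if(g->edges[n][j] && --indegree[j] == 0) queue[tail++] = j;
  }
  return count == g->num_nodes ? count : -1;
}
```

a plain DAG cannot contain cycles, so the sketch reports one as an error; repeating work across frames needs something else, which is what the feedback connectors listed below are for.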

- very fast GPU only processing

- general DAG of processing operations, featuring multiple inputs and outputs and feedback connectors for animation/iteration

- full window colour management

- noise profiling

- minimally invasive folder-based image database

- command line utility

- real time magic lantern raw video (mlv) processing

- 10-bit display output

- gamepad input inherited from imgui

- automatic parameter optimisation, for instance to fit vignetting

- heavy-handed processing at almost realistic speeds
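as an illustration of what fitting vignetting parameters can look like, the following C sketch fits a simple radial falloff model `v(r) = 1 + a*r^2 + b*r^4` to brightness samples (relative to the image centre) by linear least squares, solving the 2×2 normal equations directly. both the model and the closed-form solver are assumptions chosen for brevity; vkdt's own optimiser and vignetting model may well differ, and `fit_vignette` is a hypothetical name.

```c
/* hypothetical helper: fits v(r) = 1 + a*r^2 + b*r^4 to n samples (r[i], v[i]),
 * where v[i] is the brightness at radius r[i] divided by the centre brightness.
 * minimising sum (y - a r^2 - b r^4)^2 with y = v - 1 gives the normal equations
 *   a*sum(r^4) + b*sum(r^6) = sum(y r^2)
 *   a*sum(r^6) + b*sum(r^8) = sum(y r^4)
 * which are solved by cramer's rule. returns 0 on success, -1 if singular. */
int fit_vignette(const double *r, const double *v, int n, double *a, double *b)
{
  double s4 = 0.0, s6 = 0.0, s8 = 0.0, t2 = 0.0, t4 = 0.0;
  for(int i = 0; i < n; i++)
  {
    const double r2 = r[i] * r[i], r4 = r2 * r2;
    const double y = v[i] - 1.0; // falloff relative to the centre
    s4 += r4;
    s6 += r4 * r2;
    s8 += r4 * r4;
    t2 += y * r2;
    t4 += y * r4;
  }
  const double det = s4 * s8 - s6 * s6;
  if(det < 1e-12 && det > -1e-12) return -1; // e.g. all samples at one radius
  *a = (t2 * s8 - s6 * t4) / det;
  *b = (s4 * t4 - s6 * t2) / det;
  return 0;
}
```

a linear model keeps the fit to a single pass over the samples; a more general optimiser would be needed once the model becomes nonlinear in its parameters.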

there are up-to-date packages (deb/rpm/pkgbuild) in the

also there are nixos packages `vkdt` and `vkdt-wayland` which you can use to try out/run vkdt on any linux distro, for instance:

```
nix-shell -p vkdt
```

you should have checked out this repo recursively. if not, do

```
git submodule init
git submodule update
```

to grab the dependencies in the ext/ folder. you should then be able to simply run `make` from the bin/ folder. for debug builds (which enable the vulkan validation layers, so you need to have them installed), try

```
cd bin/
make debug -j12
```

simply run `make` without `debug` for a release build. note that the debug build has some extra memory overhead and performs expensive checks, so it can be much slower. `make sanitize` switches on the address sanitizer. changes to the compile time configuration as well as the compiler toolchain can be made in `config.mk`. if you don't have that file yet, you can copy it from `config.mk.defaults`.

the binaries are put into the bin/ directory. if you want to run vkdt from anywhere, create a symlink such as /usr/local/bin/vkdt -> ~/vc/vkdt/bin/vkdt. to open a raw file with all log output enabled:

```
cd bin/
./vkdt -d all /path/to/your/rawfile.raw
```

raw files will be assigned the bin/default-darkroom.i-raw processing graph. if you run the command line interface `vkdt-cli`, it will replace all display nodes with export nodes.
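the node replacement done by the command line interface can be pictured with a small C sketch that walks a list of nodes and swaps every display node for an export module. the `node_t` struct and `replace_display_nodes` function are hypothetical, and the module names `display` and `o-jpg` are assumed here for illustration; a real graph also carries connections and parameters, which this sketch ignores.

```c
#include <string.h>

/* hypothetical node record: only the module name matters for this sketch */
typedef struct node_t
{
  char module[32];
}
node_t;

/* replaces the module of every "display" node with export_module
 * (e.g. an assumed "o-jpg") and returns how many nodes were replaced */
int replace_display_nodes(node_t *nodes, int n, const char *export_module)
{
  int replaced = 0;
  for(int i = 0; i < n; i++)
  {
    if(strcmp(nodes[i].module, "display") != 0) continue; // leave other nodes alone
    strncpy(nodes[i].module, export_module, sizeof(nodes[i].module) - 1);
    nodes[i].module[sizeof(nodes[i].module) - 1] = '\0'; // always terminate
    replaced++;
  }
  return replaced;
}
```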

there are also a few example config files in bin/examples/. note that you have to edit the filename in the example cfg to point to a file that actually exists on your system.

our code is licensed under the 2-clause bsd licence (unless clearly marked otherwise in the respective source files). there are parts from other libraries that are licensed differently. in particular:

- rawspeed: LGPLv2

- imgui: MIT

and we may link to some others, too.

required packages:

- vulkan, glslangValidator (libvulkan-dev, glslang-tools, or use the sdk)

- glfw (libglfw3-dev and libglfw3, only use libglfw3-wayland if you're on wayland)

- submodule imgui

- for raw input support:

  - either rawspeed (depends on pugixml, stdc++, zlib, jpeg, libomp, build-depends on cmake, libomp-dev, optionally libexiv2)

  - or rawler (depends on the rust toolchain, which manages its own dependencies)

- libjpeg

- video input: libavformat libavcodec (minimum version 6)

- sound: libasound2

- video output: ffmpeg binary

- build: make, pkg-config, clang, rsync, sed

the full list of packages used to build the nightly appimages can be found in the workflow yaml file.

optional (configure in bin/config.mk):

- freetype (libfreetype-dev libpng16-16) for nicer font rendering

- exiv2 (libexiv2-dev) for raw metadata loading to assign noise profiles, only needed for rawspeed builds

- asound (libasound2) for audio support in mlv raw video

- ffmpeg (ffmpeg, libavformat-dev libavcodec-dev) for the video input module `i-vid` and the output module `o-ffmpeg`

you can also build without rawspeed or rawler if that is useful for you.


can i load canon cr3 files?

yes. by default, the rawspeed submodule in this repository already uses a branch that supports cr3 files, although this may go away in the future. the rawler backend supports cr3 as well.

does it work with wayland?

vkdt has been confirmed to run on wayland, using amd and nvidia hardware.

can i run my super long running kernel without timeout?

if you're using the only gpu in your system, you'll need to run without xorg, straight from a tty console. this means you'll only be able to use the command line interface `vkdt-cli`. we force a timeout, too, but it's something like 16 minutes. let us know if you run into this.

can i build without display server?

there is a `cli` target, i.e. you can try running `make cli` to build only the command line interface tools, which do not depend on xorg, wayland, or glfw.

i have multiple GPUs and vkdt picks the wrong one by default. what do i do?

make sure the GPU you want to use has the HDMI/DP cable attached (otherwise you can only run `vkdt-cli` on it). then run `vkdt -d qvk` and find a line such as

```
[qvk] dev 0: NVIDIA GeForce RTX 2070
```

then place the printed name, exactly as written there, in your `~/.config/vkdt/config.rc` in a line such as (for this example):

```
strqvk/device_name:NVIDIA GeForce RTX 2070
```

if you have several identical models in your system, you can instead use the device number vkdt assigns (an index starting at zero, again see the log output):

```
intqvk/device_id:0
```
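judging from the examples above, each config.rc line appears to be a type prefix (`int` or `str`) immediately followed by the key, a colon, and the value. the following C sketch parses lines under that assumption; `rc_entry_t` and `rc_parse_line` are hypothetical names rather than vkdt's own parser, and only the two prefixes shown in this document are handled.

```c
#include <string.h>

typedef enum { RC_INT, RC_STR, RC_INVALID } rc_type_t;

/* hypothetical parsed entry */
typedef struct rc_entry_t
{
  rc_type_t type;
  char key[64];    // e.g. "qvk/device_name"
  char value[128]; // raw value text, e.g. "NVIDIA GeForce RTX 2070"
}
rc_entry_t;

/* splits a line such as "strqvk/device_name:NVIDIA GeForce RTX 2070" into
 * type prefix, key and value. assumes the first colon ends the key, so the
 * value itself may contain spaces. returns RC_INVALID on malformed input. */
rc_type_t rc_parse_line(const char *line, rc_entry_t *e)
{
  if(!strncmp(line, "int", 3)) e->type = RC_INT;
  else if(!strncmp(line, "str", 3)) e->type = RC_STR;
  else return e->type = RC_INVALID;

  const char *key = line + 3;
  const char *colon = strchr(key, ':');
  if(!colon || colon == key) return e->type = RC_INVALID; // missing key or colon
  size_t klen = (size_t)(colon - key);
  if(klen >= sizeof(e->key)) return e->type = RC_INVALID;
  memcpy(e->key, key, klen);
  e->key[klen] = '\0';

  // copy the value up to (not including) a trailing newline
  const char *val = colon + 1;
  size_t vlen = strcspn(val, "\r\n");
  if(vlen >= sizeof(e->value)) return e->type = RC_INVALID;
  memcpy(e->value, val, vlen);
  e->value[vlen] = '\0';
  return e->type;
}
```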

can i use my pentablet to draw masks in vkdt?

yes, but you need a specific version of glfw to support it. you can, for instance, clone https://github.com/hanatos/glfw to /home/you/vc/glfw and then put the following in your custom bin/config.mk:

```
VKDT_GLFW_CFLAGS=-I/home/you/vc/glfw/include/
VKDT_GLFW_LDFLAGS=/home/you/vc/glfw/build/src/libglfw3.a
VKDT_USE_PENTABLET=1
export VKDT_USE_PENTABLET
```


are there system recommendations?

vkdt needs a vulkan capable GPU. it will work better with floating point atomics, in particular `shaderImageFloat32AtomicAdd`. you can check this property for certain devices on this website. also, vkdt requires 4GB of video ram (it may run with less, but this seems to be a number that is fun to work with). a fast ssd is desirable since disk io is often a limiting factor, especially during thumbnail creation.

can i speed up rendering on my 2012 on-board GPU?

you can set the level of detail (LOD) parameter in your `~/.config/vkdt/config.rc` file: `intgui/lod:1`. set it to `2` to render only at exactly the resolution of your screen (this will slow down when you zoom in), or to `3` and more to brute-force downsample.

can i limit the frame rate to save power?

there is the `frame_limiter` option in `~/.config/vkdt/config.rc` for this. set `intgui/frame_limiter:30` to have at most one redraw every `30` milliseconds. leave it at `0` to redraw as quickly as possible.
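the effect of the limiter boils down to simple arithmetic: with a value of `30`, the gui redraws at most 1000 / 30 ≈ 33 times per second. below is a minimal C sketch of how such a limit could be applied per frame, assuming the value is a minimum frame time in milliseconds as described above; `frame_wait_ms` is a hypothetical helper, not vkdt's implementation.

```c
/* hypothetical helper: given the configured limit (as in gui/frame_limiter,
 * in milliseconds) and the time already spent on the current frame, returns
 * how long to wait before the next redraw. a limit of 0 disables limiting. */
double frame_wait_ms(int limit_ms, double elapsed_ms)
{
  if(limit_ms <= 0) return 0.0;        // redraw as quickly as possible
  const double wait = limit_ms - elapsed_ms;
  return wait > 0.0 ? wait : 0.0;      // a slow frame never waits extra
}
```

waiting out the remaining time, rather than sleeping a fixed amount, keeps the redraw interval close to the limit even when individual frames take different amounts of time to render.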

where can i ask for support?

try `#vkdt` on `oftc.net` or ask on pixls.us.