This repository contains the implementation of our paper Dual Octree Graph Networks. The experiments are conducted on Ubuntu 18.04 with 4 V100 GPUs (32GB memory). The code is released under the MIT license.

Dual Octree Graph Networks for Learning Adaptive Volumetric Shape Representations

Peng-Shuai Wang, Yang Liu, and Xin Tong

ACM Transactions on Graphics (SIGGRAPH), 41(4), 2022


Install Conda and create a Conda environment:

conda create --name dualocnn python=3.7

conda activate dualocnn

Install PyTorch 1.9.1 with conda according to the official documentation:

conda install pytorch==1.9.1 torchvision==0.10.1 cudatoolkit=10.2 -c pytorch

Install ocnn-pytorch from O-CNN:

git clone https://github.com/microsoft/O-CNN.git

cd O-CNN/pytorch

pip install -r requirements.txt

python setup.py install --build_octree

Clone this repository and install the other requirements:

git clone https://github.com/microsoft/DualOctreeGNN.git

cd DualOctreeGNN

pip install -r requirements.txt
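After installation, a quick sanity check can confirm that the pieces above fit together. The minimal sketch below reports the installed PyTorch version, whether CUDA GPUs are visible, and whether the O-CNN package imports. It assumes that ocnn-pytorch is importable as ocnn; check_environment is a hypothetical helper, not part of this repository.

```python
import importlib


def check_environment(expected_torch="1.9.1"):
    """Hypothetical helper: report whether the packages used in this README import."""
    report = {}
    try:
        torch = importlib.import_module("torch")
        report["torch_version"] = str(torch.__version__)
        # The version string may carry a local suffix such as "1.9.1+cu102".
        report["torch_ok"] = str(torch.__version__).split("+")[0] == expected_torch
        report["cuda_available"] = torch.cuda.is_available()
        report["num_gpus"] = torch.cuda.device_count()
    except ImportError:
        report.update(torch_version=None, torch_ok=False,
                      cuda_available=False, num_gpus=0)
    try:
        importlib.import_module("ocnn")  # assumed import name of ocnn-pytorch
        report["ocnn_ok"] = True
    except ImportError:
        report["ocnn_ok"] = False
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

For the 4-GPU training commands below, num_gpus should report at least 4.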


Download ShapeNetCore.v1.zip (31G) from ShapeNet and place it into the folder data/ShapeNet.

Convert the meshes in ShapeNetCore.v1 to signed distance fields (SDFs):

python tools/shapenet.py --run convert_mesh_to_sdf

Note that this process is relatively slow: it may take several days to convert all the meshes from ShapeNet. For simplicity, we did not use Python multiprocessing to speed it up. If speed matters, you can manually run multiple commands simultaneously, each with its own start and end index of the meshes to be processed. For example:

python tools/shapenet.py --run convert_mesh_to_sdf --start 10000 --end 20000
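The manual splitting described above can be scripted. The sketch below divides the mesh indices into contiguous ranges and launches one tools/shapenet.py process per range. It assumes that --end is exclusive (so consecutive ranges such as 10000 to 20000 and 20000 to 30000 do not overlap) and that total is the actual number of meshes; split_ranges and launch are hypothetical helpers.

```python
import subprocess
import sys


def split_ranges(total, num_jobs):
    """Split [0, total) into at most num_jobs contiguous (start, end) ranges."""
    step = -(-total // num_jobs)  # ceiling division so no mesh is skipped
    return [(s, min(s + step, total)) for s in range(0, total, step)]


def launch(total, num_jobs, dry_run=True):
    """Build one convert_mesh_to_sdf command per range; run them unless dry_run."""
    commands = [
        [sys.executable, "tools/shapenet.py", "--run", "convert_mesh_to_sdf",
         "--start", str(start), "--end", str(end)]
        for start, end in split_ranges(total, num_jobs)
    ]
    if dry_run:
        return commands  # inspect the commands before starting anything
    procs = [subprocess.Popen(cmd) for cmd in commands]
    return [p.wait() for p in procs]  # exit code of each worker
```

Run it from the repository root with dry_run=False once the commands look right, keeping num_jobs at or below the number of CPU cores. If --end turns out to be inclusive, the boundary meshes are merely converted twice.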

ShapeNetCore.v1 contains 57k meshes, which take about 100G after unzipping. The generated SDFs and the repaired meshes take 450G and 90G in total, respectively, so please make sure your hard disk has enough space.
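Added together, keeping the 31G zip, the roughly 100G of unzipped meshes, the 450G of SDFs, and the 90G of repaired meshes needs about 671G, before counting the sampled training data produced by the next step (its size is not given here). The sketch below checks a target folder against that estimate; the helper names are hypothetical, and it treats "G" as GiB (1024^3 bytes), which is an assumption.

```python
import shutil

# Approximate sizes in GB, taken from the text above.
SIZES_GB = {
    "ShapeNetCore.v1.zip": 31,
    "unzipped meshes": 100,
    "generated SDFs": 450,
    "repaired meshes": 90,
}


def required_gb(sizes=SIZES_GB):
    """Total space needed for the listed items."""
    return sum(sizes.values())


def has_enough_space(path=".", sizes=SIZES_GB):
    """Return (enough, free_gb) for the file system that holds path."""
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    return free_gb >= required_gb(sizes), free_gb
```

For example, has_enough_space("data/ShapeNet") checks the disk that will hold the dataset.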

Sample points and ground-truth SDFs for the learning process.

python tools/shapenet.py --run generate_dataset
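To make the idea of ground-truth SDF samples concrete, the sketch below evaluates a dense SDF grid at arbitrary query points with trilinear interpolation. It is a generic illustration, not the code in tools/shapenet.py: it assumes NumPy and a cubic grid of resolution N (N at least 2) whose nodes span [-1, 1]^3, with the first array axis along x. The function name is hypothetical.

```python
import numpy as np


def trilinear_sdf(grid, points):
    """Interpolate an (N, N, N) SDF grid defined on [-1, 1]^3 at (M, 3) query points."""
    n = grid.shape[0]
    # Map coordinates from [-1, 1] to continuous voxel indices in [0, n - 1].
    idx = (np.clip(points, -1.0, 1.0) + 1.0) * 0.5 * (n - 1)
    i0 = np.clip(np.floor(idx).astype(int), 0, n - 2)  # lower corner of the cell
    t = idx - i0  # fractional offsets inside the cell, in [0, 1]
    result = np.zeros(len(points))
    # Accumulate the 8 cell corners, each weighted by its trilinear weight.
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((t[:, 0] if dx else 1 - t[:, 0]) *
                     (t[:, 1] if dy else 1 - t[:, 1]) *
                     (t[:, 2] if dz else 1 - t[:, 2]))
                result += w * grid[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return result
```

Trilinear interpolation reproduces linear fields exactly, so it is most accurate close to the surface, where an SDF is approximately linear.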

If you only want to run the pretrained network, the test point clouds (330M) can be downloaded manually from here. After downloading the zip file, unzip it to the folder data/ShapeNet/test.input.

Train: Run the following command to train the network on 4 GPUs. The training takes 17 hours on 4 V100 GPUs. The trained weights and logs can be downloaded here.

python dualocnn.py --config configs/shapenet.yaml SOLVER.gpu 0,1,2,3
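Options such as SOLVER.gpu 0,1,2,3 and SOLVER.ckpt followed by a path are appended after --config as KEY VALUE pairs that appear to override entries of the YAML file. The sketch below shows one way such overrides can be applied to a nested dictionary; apply_overrides is a hypothetical helper, and the repository's actual configuration system may parse values differently (for example, it may keep 0,1,2,3 as a string).

```python
import ast


def apply_overrides(config, opts):
    """Apply KEY VALUE pairs such as ["SOLVER.gpu", "0,1,2,3"] to a nested dict."""
    if len(opts) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    for key, raw in zip(opts[0::2], opts[1::2]):
        node = config
        *parents, leaf = key.split(".")
        for name in parents:
            node = node[name]  # a KeyError here flags a misspelled section
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        try:
            value = ast.literal_eval(raw)  # "0,1,2,3" -> (0, 1, 2, 3); "300" -> 300
        except (ValueError, SyntaxError):
            value = raw  # keep plain strings such as checkpoint paths
        node[leaf] = value
    return config
```

Rejecting unknown keys, rather than silently adding them, catches typos such as SOLVER.gpus before a long training run starts.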


Test: Run the following command to generate the extracted meshes. You can also use other trained weights by replacing the parameter after SOLVER.ckpt.

python dualocnn.py --config configs/shapenet_eval.yaml SOLVER.ckpt logs/shapenet/shapenet/checkpoints/00300.model.pth
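Checkpoint files appear to follow the pattern NNNNN.model.pth (for example, 00300.model.pth), so the most recent one can be located by its numeric prefix. The sketch below assumes that pattern and that a larger number means a later checkpoint; latest_checkpoint is a hypothetical helper.

```python
import re
from pathlib import Path

# Assumed checkpoint naming: a zero-padded number followed by ".model.pth".
CKPT_PATTERN = re.compile(r"^(\d+)\.model\.pth$")


def latest_checkpoint(folder):
    """Return the *.model.pth file with the largest numeric prefix, or None."""
    best, best_num = None, -1
    for path in Path(folder).iterdir():
        match = CKPT_PATTERN.match(path.name)
        if match and int(match.group(1)) > best_num:
            best, best_num = path, int(match.group(1))
    return best
```

Its result can then be passed as the value of SOLVER.ckpt, e.g. latest_checkpoint("logs/shapenet/shapenet/checkpoints").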

Evaluate: We use the code of ConvONet to compute the evaluation metrics. Follow the instructions here to reproduce our results in Table 1.
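ConvONet's evaluation reports, among other metrics, Chamfer-L1 and F-score. For intuition, the brute-force NumPy sketch below computes both between two point sets, using the common definition of Chamfer-L1 as the mean of accuracy (prediction to ground truth) and completeness (ground truth to prediction). It is not ConvONet's code: its normalization, point counts, and F-score threshold may differ, and the threshold here is only a placeholder parameter.

```python
import numpy as np


def nearest_distances(src, dst):
    """For each point in src (M, 3), the Euclidean distance to its nearest point in dst (K, 3)."""
    diff = src[:, None, :] - dst[None, :, :]  # (M, K, 3): fine for small point sets only
    return np.sqrt((diff ** 2).sum(-1)).min(axis=1)


def chamfer_l1_and_fscore(pred, gt, threshold=0.01):
    """Return (Chamfer-L1, F-score) between predicted and ground-truth points."""
    accuracy_d = nearest_distances(pred, gt)      # prediction -> ground truth
    completeness_d = nearest_distances(gt, pred)  # ground truth -> prediction
    chamfer_l1 = 0.5 * (accuracy_d.mean() + completeness_d.mean())
    precision = (accuracy_d < threshold).mean()
    recall = (completeness_d < threshold).mean()
    if precision + recall == 0:
        return chamfer_l1, 0.0
    return chamfer_l1, 2 * precision * recall / (precision + recall)
```

The pairwise difference array grows quadratically with the number of points, so a KD-tree (for example scipy.spatial.cKDTree) is the practical choice for real meshes.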


Test: Run the following command to test the trained network on the 5 unseen categories of ShapeNet:

python dualocnn.py --config configs/shapenet_unseen5.yaml SOLVER.ckpt logs/shapenet/shapenet/checkpoints/00300.model.pth

Evaluate: Follow the instructions here to reproduce our results on the unseen categories in Table 1.

Download and unzip the synthetic scene dataset (205G in total) and the data-splitting filelists provided by ConvONet via the following command. If needed, the ground-truth meshes can be downloaded from here (90G).

python tools/room.py --run generate_dataset

Train: Run the following command to train the network on 4 GPUs. The training takes 27 hours on 4 V100 GPUs. The trained weights and logs can be downloaded here.

python dualocnn.py --config configs/synthetic_room.yaml SOLVER.gpu 0,1,2,3

Test: Run the following command to generate the extracted meshes.

python dualocnn.py --config configs/synthetic_room_eval.yaml SOLVER.ckpt logs/room/room/checkpoints/00900.model.pth

Evaluate: Follow the instructions here to reproduce our results in Table 5.

Download the DFaust dataset, unzip the raw scans into the folder data/dfaust/scans, and unzip the ground-truth meshes into the folder data/dfaust/mesh_gt. Note that the ground-truth meshes are used only for computing the evaluation metrics, NOT for training.

Run the following command to prepare the dataset.

python tools/dfaust.py --run generate_dataset

For convenience, we also provide the dataset for downloading.

python tools/dfaust.py --run download_dataset


Train: Run the following command to train the network on 4 GPUs. The training takes 20 hours on 4 V100 GPUs. The trained weights and logs can be downloaded here.

python dualocnn.py --config configs/dfaust.yaml SOLVER.gpu 0,1,2,3

Test: Run the following command to generate the meshes with the trained weights.

python dualocnn.py --config configs/dfaust_eval.yaml SOLVER.ckpt logs/dfaust/dfaust/checkpoints/00600.model.pth

Evaluate: To calculate the evaluation metrics, we first need to rescale the meshes to their original size, since the point clouds are scaled during the data processing stage.
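The rescaling step inverts the normalization applied during data processing. The sketch below assumes a common scheme: each shape is centered at its bounding-box midpoint and uniformly scaled so that its longest side spans [-1, 1]. The actual transform in tools/dfaust.py, and where its parameters are stored, are not documented here, so all function names are hypothetical.

```python
import numpy as np


def bbox_center_scale(points, radius=1.0):
    """Center at the bounding-box midpoint; scale so the longest side spans [-radius, radius]."""
    lo, hi = points.min(axis=0), points.max(axis=0)
    center = (lo + hi) / 2
    scale = 2 * radius / (hi - lo).max()
    return center, scale


def normalize(points, center, scale):
    """Assumed forward transform applied to the input point clouds."""
    return (points - center) * scale


def denormalize(vertices, center, scale):
    """Undo normalize(): map predicted mesh vertices back to the original frame."""
    return vertices / scale + center
```

The key requirement is that the same center and scale computed for a shape's point cloud are reused when its predicted mesh is mapped back, so that distances are measured in the ground-truth mesh's units.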

Run the following command to rescale the meshes:

python tools/dfaust.py --mesh_folder logs/dfaust_eval/dfaust --output_folder logs/dfaust_eval/dfaust_rescale --run rescale_mesh

Then run the following command to compute the metrics; our results in Table 6 can be reproduced in the file metrics.csv.

python tools/compute_metrics.py --mesh_folder logs/dfaust_eval/dfaust_rescale --filelist data/dfaust/filelist/test.txt --ref_folder data/dfaust/mesh_gt --filename_out logs/dfaust_eval/dfaust_rescale/metrics.csv
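The layout of metrics.csv is not described here. Assuming it has a header row and one row per test mesh, the sketch below averages every numeric column and skips non-numeric ones such as file names; column_means is a hypothetical helper.

```python
import csv


def column_means(csv_path):
    """Average every column of a metrics CSV whose values all parse as numbers."""
    with open(csv_path, newline="") as f:
        rows = list(csv.DictReader(f))
    means = {}
    for name in (rows[0].keys() if rows else []):
        try:
            values = [float(row[name]) for row in rows]
        except (TypeError, ValueError):
            continue  # skip non-numeric columns, e.g. mesh names
        means[name] = sum(values) / len(values)
    return means
```

For example, column_means("logs/dfaust_eval/dfaust_rescale/metrics.csv") returns one average per numeric column.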

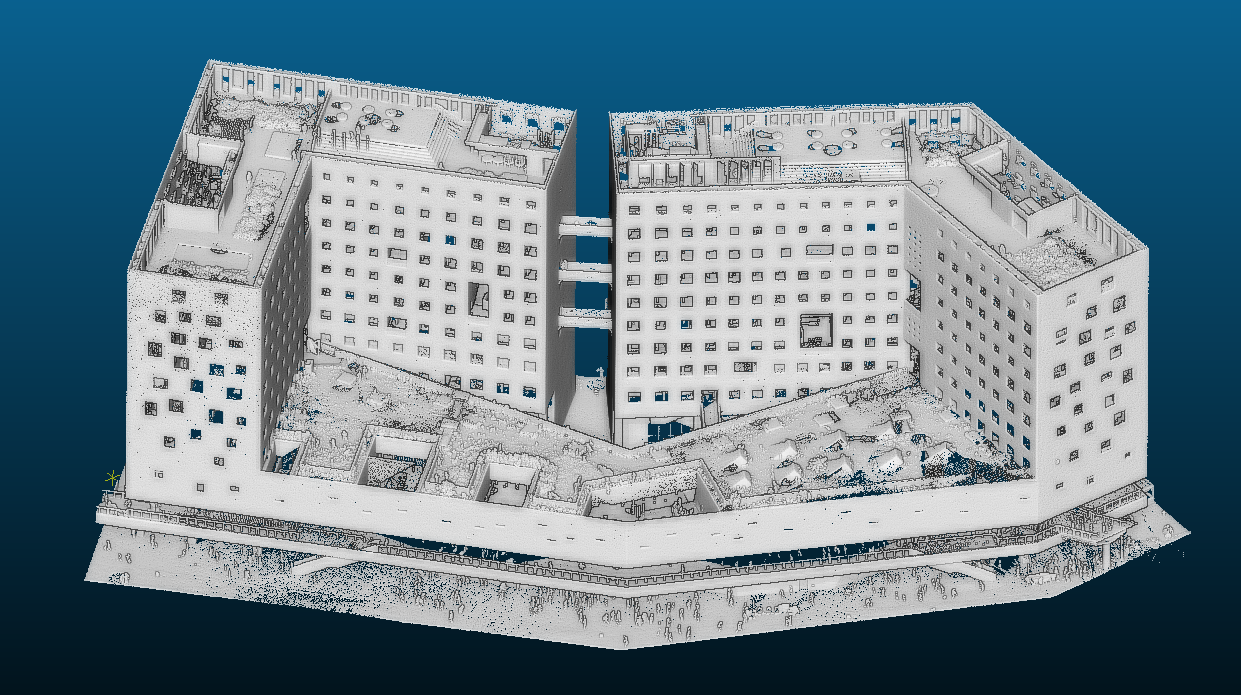

In Figures 1 and 11 of our paper, we test the generalization ability of our network on several out-of-distribution point clouds. Please download the point clouds from here and place the unzipped data into the folder data/shapes. Then run the following command to reproduce the results:

python dualocnn.py --config configs/shapes.yaml SOLVER.ckpt logs/dfaust/dfaust/checkpoints/00600.model.pth

For the autoencoder experiments on ShapeNet, follow the instructions here to prepare the dataset.

Train: Run the following command to train the network on 4 GPUs. The training takes 24 hours on 4 V100 GPUs. The trained weights and logs can be downloaded here.

python dualocnn.py --config configs/shapenet_ae.yaml SOLVER.gpu 0,1,2,3

Test: Run the following command to generate the extracted meshes.

python dualocnn.py --config configs/shapenet_ae_eval.yaml SOLVER.ckpt logs/shapenet/ae/checkpoints/00300.model.pth

Evaluate: Run the following command to evaluate the predicted meshes. Our results in Table 7 can then be reproduced in the file metrics.4096.csv.

python tools/compute_metrics.py --mesh_folder logs/shapenet_eval/ae --filelist data/ShapeNet/filelist/test_im.txt --ref_folder data/ShapeNet/mesh --num_samples 4096 --filename_out logs/shapenet_eval/ae/metrics.4096.csv
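The --num_samples 4096 option suggests that the metrics are computed on a fixed number of points sampled from each mesh surface. As a hedged illustration of one standard way to do this (area-weighted triangle selection plus uniform barycentric sampling, with NumPy), and not necessarily what tools/compute_metrics.py does, consider the sketch below; sample_surface is a hypothetical helper.

```python
import numpy as np


def sample_surface(vertices, faces, num_samples=4096, seed=0):
    """Uniformly sample points on a triangle mesh (area-weighted, barycentric)."""
    rng = np.random.default_rng(seed)
    tri = vertices[faces]  # (F, 3, 3) corner coordinates of every triangle
    edge1, edge2 = tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0]
    areas = 0.5 * np.linalg.norm(np.cross(edge1, edge2), axis=1)
    # Pick triangles with probability proportional to their area.
    face_idx = rng.choice(len(faces), size=num_samples, p=areas / areas.sum())
    # Uniform barycentric coordinates: reflect samples that fall outside the triangle.
    u, v = rng.random(num_samples), rng.random(num_samples)
    flip = u + v > 1
    u[flip], v[flip] = 1 - u[flip], 1 - v[flip]
    a = tri[face_idx, 0]
    return a + u[:, None] * edge1[face_idx] + v[:, None] * edge2[face_idx]
```

Weighting by area keeps the sample density uniform over the surface, so large and small triangles contribute in proportion to the area they cover.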