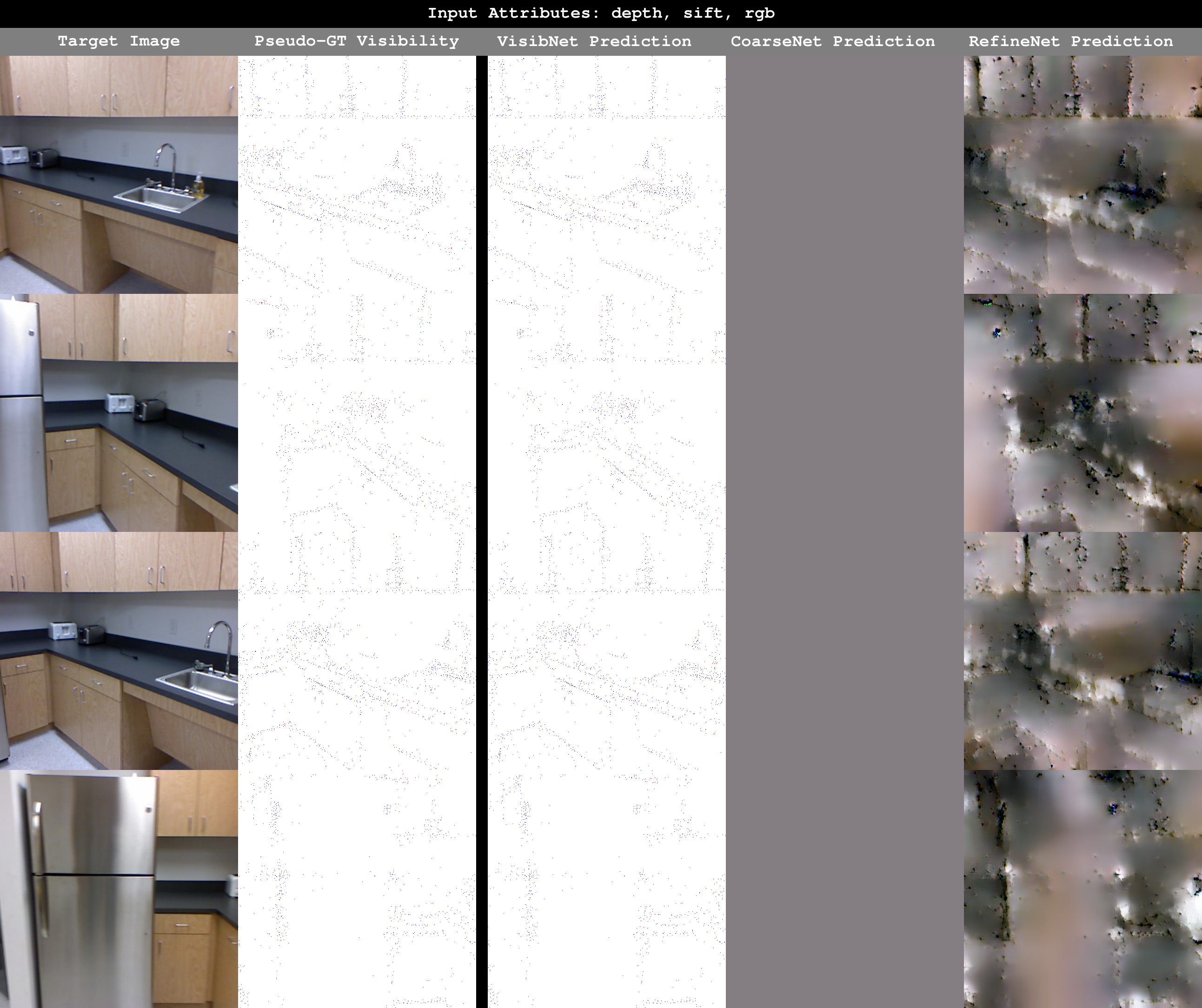

Synthesizing Imagery from an SfM Point Cloud. From left to right: top view of an SfM reconstruction of an indoor scene; 3D points projected into a viewpoint associated with a source image; the image reconstructed using our technique; and the source image.

This repository contains a reference implementation of the algorithms described in the CVPR 2019 paper Revealing Scenes by Inverting Structure from Motion Reconstructions. The paper was selected as a Best Paper Finalist at CVPR 2019. For more details about the project, please visit the main project page.

If you use this code/model for your research, please cite the following paper:

    @inproceedings{pittaluga2019revealing,
      title={Revealing scenes by inverting structure from motion reconstructions},
      author={Pittaluga, Francesco and Koppal, Sanjeev J and Bing Kang, Sing and Sinha, Sudipta N},
      booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
      pages={145--154},
      year={2019}
    }

The code was tested with TensorFlow 1.10 on Ubuntu 16, using NVIDIA Titan X and NVIDIA GTX 1080 Ti GPUs.

See requirements.txt. The training code depends only on tensorflow. The demos additionally depend on Pillow and scikit-image.
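
Before running anything, it can help to confirm that the packages listed above are importable. The following is a minimal sketch, not part of the repository: the helper name `missing_modules` and the two dependency lists are illustrative assumptions derived from the sentence above. Note that Pillow is imported as `PIL` and scikit-image as `skimage`.

```python
import importlib.util

# Import names (assumed from the dependency list above), not pip package names:
# Pillow is imported as "PIL" and scikit-image as "skimage".
TRAINING_DEPS = ["tensorflow"]
DEMO_DEPS = ["tensorflow", "PIL", "skimage"]


def missing_modules(import_names):
    """Return the names in import_names that cannot be located for import."""
    return [name for name in import_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    for label, deps in (("training", TRAINING_DEPS), ("demos", DEMO_DEPS)):
        missing = missing_modules(deps)
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print("{}: {}".format(label, status))
```

The check only looks for the modules and does not import them, so it does not verify versions; requirements.txt remains the authoritative list.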

Run $ bash download_wts.sh to programmatically download and untar wts.tar.gz (1.24 GB). Alternatively, manually download wts.tar.gz from here and untar it in the root directory of the repo.

Run $ bash download_data.sh to programmatically download and untar data.tar.gz (11 GB). Alternatively, manually download data.tar.gz from here and untar it in the root directory of the repo.
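
For the manual route, the untar step can also be done from Python when `tar` is not at hand. This is a minimal sketch using only the standard library; the function name `untar_into` is hypothetical, and the sketch assumes the archive has already been downloaded (it does not fetch anything).

```python
import os
import tarfile


def untar_into(archive_path, dest_dir="."):
    """Extract a gzip-compressed tar archive into dest_dir.

    Returns the sorted top-level entry names found in the archive.
    Only use this on archives from a source you trust.
    """
    with tarfile.open(archive_path, "r:gz") as archive:
        names = archive.getnames()
        archive.extractall(dest_dir)
    # Normalize member paths so "./wts/x" and "wts/x" both report "wts".
    top_level = {os.path.normpath(name).split(os.sep)[0] for name in names}
    top_level.discard(".")
    return sorted(top_level)
```

Run from the root directory of the repo, `untar_into("wts.tar.gz")` and `untar_into("data.tar.gz")` would unpack the two archives in place.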

$ python demo_5k.py

$ python demo_colmap.py

Note: Run $ python demo_5k.py --help and $ python demo_colmap.py --help to see the various demo options available.

$ python train_visib.py

$ python train_coarse.py

$ python train_refine.py

Note: Run $ python train_visib.py --help (and likewise for train_coarse.py and train_refine.py) to see the various training options available.
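
To list the options of all three training scripts in one go, each script needs its own `--help` invocation; a literal shell glob would expand to all three filenames and pass the last two as arguments to the first. The sketch below is an illustration only: `help_commands` and `print_all_help` are hypothetical helpers, and it assumes the script is run from the root directory of the repo.

```python
import subprocess
import sys

# The three training scripts named above.
TRAIN_SCRIPTS = ["train_visib.py", "train_coarse.py", "train_refine.py"]


def help_commands(scripts=TRAIN_SCRIPTS, python=sys.executable):
    """Build one '<python> <script> --help' command per training script."""
    return [[python, script, "--help"] for script in scripts]


def print_all_help():
    """Run each help command in turn; output goes straight to the terminal."""
    for cmd in help_commands():
        subprocess.call(cmd)


if __name__ == "__main__":
    print_all_help()
```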