RAPIDS cuCIM is an open-source, accelerated computer vision and image processing software library for multidimensional images used in biomedical, geospatial, material and life science, and remote sensing use cases.

cuCIM offers:

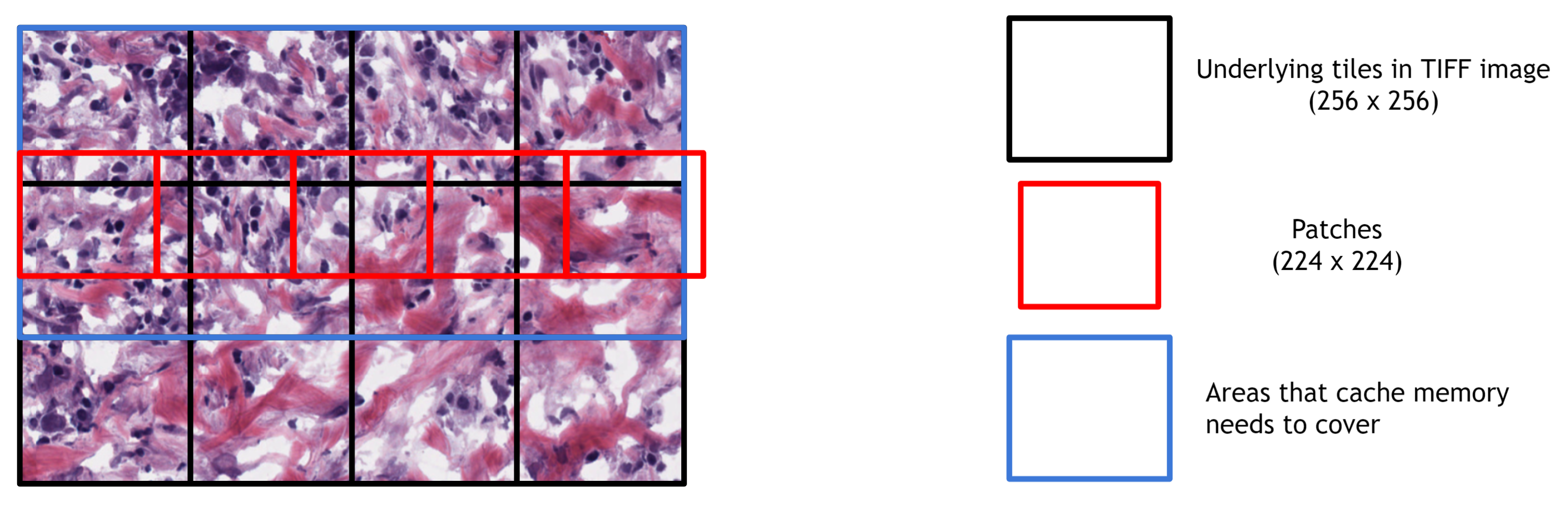

- Enhanced image processing capabilities for large and n-dimensional Tag Image File Format (TIFF) files

- Accelerated performance through Graphics Processing Unit (GPU)-based image processing and computer vision primitives

- A straightforward Pythonic interface with an application programming interface (API) that matches OpenSlide
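As a rough illustration of the OpenSlide-style interface mentioned in the list above, the sketch below reads one patch from a slide and enumerates tile positions. It assumes cuCIM's `CuImage` class and its `read_region` method; the helper names `tile_locations` and `read_patch` are hypothetical and exist only for this example. Keyword arguments are used for `read_region` so the example does not depend on positional argument order.

```python
from typing import Iterator, Tuple


def tile_locations(width: int, height: int, tile: int) -> Iterator[Tuple[int, int]]:
    """Yield the (x, y) top-left corner of each tile covering a width x height image.

    Hypothetical helper for this example; not part of cuCIM.
    """
    for y in range(0, height, tile):
        for x in range(0, width, tile):
            yield (x, y)


def read_patch(path: str, location: Tuple[int, int], size: Tuple[int, int], level: int = 0):
    """Read one region from a slide and return it as a NumPy array.

    Hypothetical helper; assumes cuCIM and NumPy are installed.
    """
    # Imported lazily so tile_locations() stays usable without cuCIM or NumPy.
    import numpy as np
    from cucim import CuImage

    slide = CuImage(path)
    # location is the (x, y) offset, size is (width, height), level selects the resolution.
    region = slide.read_region(location=location, size=size, level=level)
    return np.asarray(region)
```

With a sample slide in place (the path here is only a placeholder), a call such as `read_patch("notebooks/input/image.tif", (0, 0), (256, 256))` would return the top-left 256 x 256 patch at the highest resolution, and `tile_locations` could drive a loop over the whole image.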

cuCIM supports the following formats:

- Aperio ScanScope Virtual Slide (SVS)

- Philips TIFF

- Generic tiled, multi-resolution RGB TIFF files with the following compression schemes:

  - No compression

  - JPEG

  - JPEG2000

  - Lempel-Ziv-Welch (LZW)

  - Deflate

NOTE: For the latest stable README.md, ensure you are on the `main` branch.

- GTC 2022 Accelerating Storage IO to GPUs with Magnum IO [S41347]

  - cuCIM's GDS API examples: https://github.com/NVIDIA/MagnumIO/tree/main/gds/readers/cucim-gds

- SciPy 2021 cuCIM - A GPU image I/O and processing library

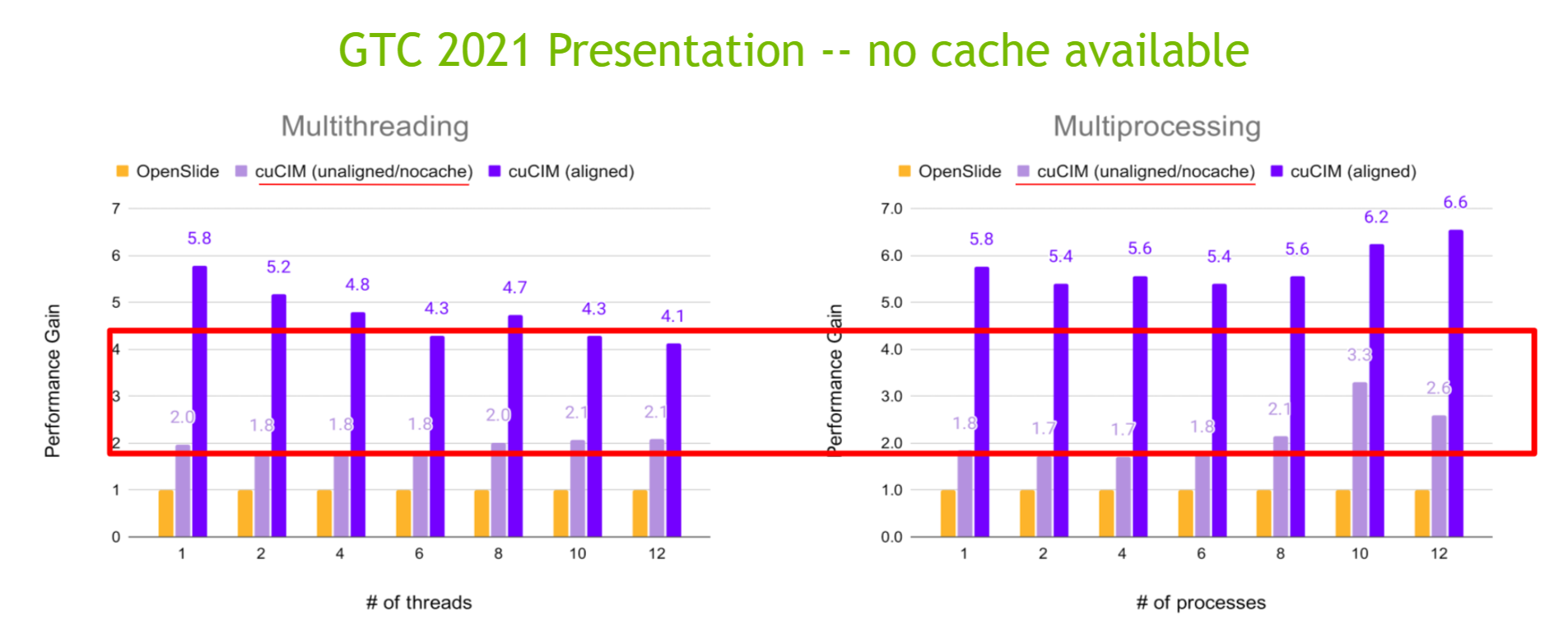

- GTC 2021 cuCIM: A GPU Image I/O and Processing Toolkit [S32194]

Blogs

- Enhanced Image Analysis with Multidimensional Image Processing

- Accelerating Scikit-Image API with cuCIM: n-Dimensional Image Processing and IO on GPUs

- Accelerating Digital Pathology Pipelines with NVIDIA Clara™ Deploy

Webinars

Release notes are available on our wiki page.

Install the stable release with conda:

`conda create -n cucim -c rapidsai -c conda-forge cucim cuda-version=<CUDA version>`

Or install a nightly build from the `rapidsai-nightly` channel:

`conda create -n cucim -c rapidsai-nightly -c conda-forge cucim cuda-version=<CUDA version>`

In both commands, `<CUDA version>` should be 11.2 or later (e.g., 11.2, 12.0).

Install for CUDA 12:

`pip install cucim-cu12`

Alternatively, install for CUDA 11:

`pip install cucim-cu11`

Please check out our Welcome notebook (NBViewer).
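After installing with conda or pip, it can be useful to confirm which cuCIM distribution ended up in the active environment. The following is a minimal sketch using only the Python standard library. The names `cucim-cu12` and `cucim-cu11` come from the pip commands above; treating `cucim` as the distribution name for conda installs is an assumption, and `find_cucim_distribution` is a hypothetical helper.

```python
from importlib import metadata
from typing import Optional, Tuple

# The two pip names come from the install commands above;
# "cucim" is an assumed name for conda-based installs.
CANDIDATES = ("cucim-cu12", "cucim-cu11", "cucim")


def find_cucim_distribution() -> Optional[Tuple[str, str]]:
    """Return (distribution name, version) for the first candidate found, else None."""
    for name in CANDIDATES:
        try:
            return name, metadata.version(name)
        except metadata.PackageNotFoundError:
            continue
    return None


if __name__ == "__main__":
    found = find_cucim_distribution()
    if found is None:
        print("cuCIM does not appear to be installed in this environment")
    else:
        print(f"Found {found[0]} version {found[1]}")
```

This only inspects installed package metadata; it does not import cuCIM or check that a GPU is usable.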

To download the images used in the notebooks, run the following commands from the repository root folder. They copy the sample input images into the `notebooks/input` folder.

(You will need Docker installed on your system.)

`./run download_testdata`

or

`mkdir -p notebooks/input`

`tmp_id=$(docker create gigony/svs-testdata:little-big)`

`docker cp $tmp_id:/input notebooks`

`docker rm -v ${tmp_id}`

See build instructions.

Contributions to cuCIM are more than welcome! Please review the CONTRIBUTING.md file for information on how to contribute code and issues to the project.

Without awesome third-party open source software, this project wouldn't exist.

See LICENSE-3rdparty.md for the third-party open source software used in this project.

Apache-2.0 License (see LICENSE file).

Copyright (c) 2020-2022, NVIDIA CORPORATION.