This tutorial walks through the steps needed to perform pose estimation with a UR3 robotic arm in Unity. You’ll gain experience integrating ROS with Unity, importing URDF models, collecting labeled training data, and training and deploying a deep learning model. By the end of the tutorial, you will be able to perform pick-and-place with a robot arm in Unity, using computer vision to perceive the object the robot picks up.

Want to skip the tutorial and run the full demo? Check out our Quick Demo.

Want to skip the tutorial and focus on collecting training data for the deep learning model? Check out our Quick Data-Collection Demo.

Note: This project has been developed with Python 3 and ROS Noetic.

Table of Contents

- Part 1: Create Unity scene with imported URDF

- Part 2: Setting up the scene for data collection

- Part 3: Data Collection and Model Training

- Part 4: Pick-and-Place

Part 1 covers downloading and installing the Unity Editor, setting up a basic Unity scene, and importing a robot. We will import the UR3 robot arm using the URDF Importer package.

Part 2 focuses on setting up the scene for data collection using the Unity Computer Vision Perception Package. You will learn how to use Perception Package Randomizers to randomize aspects of the scene in order to create variety in the training data.

If you would like to learn more about Randomizers, and apply domain randomization to this scene more thoroughly, check out our further exercises for the reader here.
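To make the idea of domain randomization concrete, here is a minimal, language-agnostic sketch written in Python. It is not the Perception Package API (Randomizers are configured inside the Unity Editor); the `SceneSample` fields, the value ranges, and the function names are all assumptions chosen for illustration. The point is only that each captured frame draws its scene parameters from a random distribution, so the training data covers many object poses and lighting conditions.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class SceneSample:
    """One randomized scene configuration (hypothetical fields)."""
    cube_x: float           # cube position on the table plane, metres
    cube_z: float
    cube_yaw_deg: float     # rotation about the vertical axis, degrees
    light_intensity: float  # arbitrary units


def sample_scene(rng: random.Random) -> SceneSample:
    """Draw each parameter independently from a uniform range.

    The ranges below are illustrative assumptions, not values
    taken from the tutorial.
    """
    return SceneSample(
        cube_x=rng.uniform(-0.2, 0.2),
        cube_z=rng.uniform(-0.2, 0.2),
        cube_yaw_deg=rng.uniform(0.0, 360.0),
        light_intensity=rng.uniform(0.5, 2.0),
    )


def generate_samples(count: int, seed: int = 0) -> List[SceneSample]:
    """Generate `count` scene configurations, reproducible for a given seed."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(count)]
```

Calling `generate_samples(1000)` would yield one configuration per training image. Seeding the generator keeps a dataset reproducible, which helps when comparing models trained on the same data.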

Part 3 covers running data collection with the Perception Package and using that data to train a deep learning model. The training step can take some time. If you'd like, you can skip that step by using our pre-trained model.

To measure grasping success in simulation using our pre-trained pose estimation model, we ran 100 trials and got the following results:

| | Success | Failures | Percent Success |
|---|---|---|---|
| Without occlusion | 82 | 5 | 94 |
| With occlusion | 7 | 6 | 54 |
| All | 89 | 11 | 89 |

Note: Data for the above experiment was collected in Unity 2020.2.1f1.
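The percentages in the table follow directly from the success and failure counts. The short sketch below recomputes them, rounding to the nearest whole percent; the function and variable names are our own and are not part of the project.

```python
from typing import Dict, Tuple


def percent_success(successes: int, failures: int) -> int:
    """Success rate as a whole-number percentage of all trials."""
    return round(100 * successes / (successes + failures))


# (successes, failures) per condition, copied from the results table
trials: Dict[str, Tuple[int, int]] = {
    "Without occlusion": (82, 5),
    "With occlusion": (7, 6),
}

# The "All" row is the sum of the two conditions.
total_successes = sum(s for s, _ in trials.values())
total_failures = sum(f for _, f in trials.values())
trials["All"] = (total_successes, total_failures)

rates = {name: percent_success(s, f) for name, (s, f) in trials.items()}
print(rates)
# {'Without occlusion': 94, 'With occlusion': 54, 'All': 89}
```

Only 13 of the 100 trials involved occlusion, so the 54% figure rests on a small sample.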

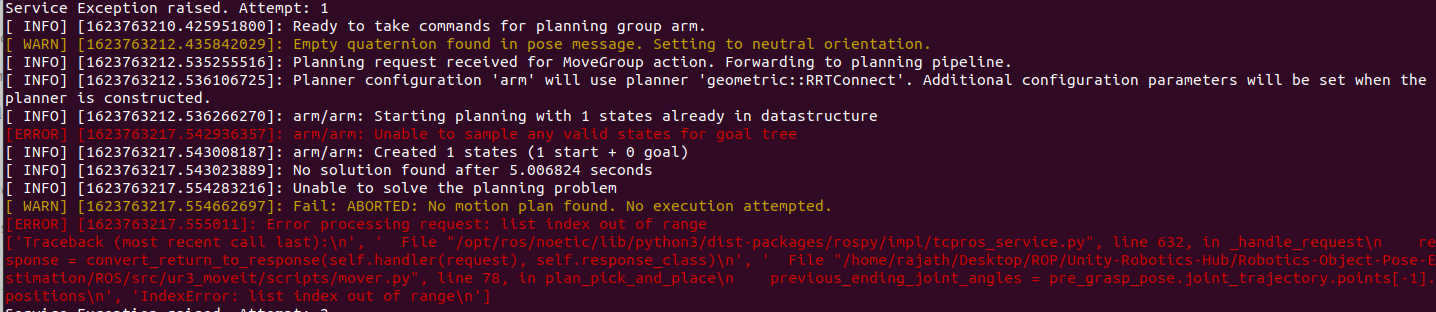

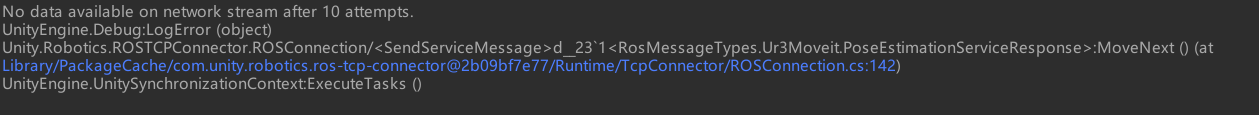

Part 4 covers the preparation and setup necessary to run a pick-and-place task using MoveIt. Here, the cube pose is predicted by the trained deep learning model. Steps covered include:

- Creating and invoking a motion planning service in ROS

- Sending captured RGB images from our scene to the ROS Pose Estimation node for inference

- Using a Python script to run inference on our trained deep learning model

- Moving Unity Articulation Bodies based on a calculated trajectory

- Controlling a gripping tool to successfully grasp and drop an object
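The steps above can be pictured as one control loop. The sketch below is a plain-Python outline of that flow, not the tutorial's actual ROS or Unity code: `Pose`, `run_pick_and_place`, and the four callables passed into it are hypothetical names. In the real project each callable would presumably be backed by a ROS service call or a Unity component, and the details differ.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple


@dataclass
class Pose:
    """Object pose: position in metres plus an (x, y, z, w) quaternion."""
    position: Tuple[float, float, float]
    orientation: Tuple[float, float, float, float]


# A trajectory is a list of joint-angle vectors, one per waypoint.
Trajectory = List[Sequence[float]]


def run_pick_and_place(
    image: Any,
    place_pose: Pose,
    estimate_pose: Callable[[Any], Pose],        # stands in for model inference
    plan_motion: Callable[[Pose], Trajectory],   # stands in for motion planning
    move_joints: Callable[[Sequence[float]], None],
    set_gripper: Callable[[bool], None],         # True closes, False opens
) -> Pose:
    """Run one pick-and-place cycle and return the estimated cube pose."""
    # 1. Predict the cube pose from the captured RGB image.
    cube_pose = estimate_pose(image)

    # 2. Plan and execute a trajectory to the cube, then grasp it.
    for joints in plan_motion(cube_pose):
        move_joints(joints)
    set_gripper(True)

    # 3. Plan and execute a trajectory to the drop location, then release.
    for joints in plan_motion(place_pose):
        move_joints(joints)
    set_gripper(False)

    return cube_pose
```

Passing the stages in as callables keeps the outline independent of any particular middleware, so each stage can be replaced with a stub when testing the sequencing on its own.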

For questions or discussions about Unity Robotics package installations or how to best set up and integrate your robotics projects, please create a new thread on the Unity Robotics forum and make sure to include as much detail as possible.

For feature requests, bugs, or other issues, please file a GitHub issue using the provided templates and the Robotics team will investigate as soon as possible.

For any other questions or feedback, connect directly with the Robotics team at [email protected].

Visit the Robotics Hub for more tutorials, tools, and information on robotics simulation in Unity!