I can't get past this command, and I have had no luck fixing it myself.

    best_index = np.argmax(np.array(history.history['val_angle_metric']) \
                + np.array(history.history['val_direction_metric']))
    best_checkpoint = str("cp-%04d.ckpt" % (best_index+1))
    best_model = utils.load_model(os.path.join(checkpoint_path,best_checkpoint),loss_fn,metric_list)
    best_tflite = utils.generate_tflite(checkpoint_path, best_checkpoint)
    utils.save_tflite (best_tflite, checkpoint_path, "best")
    print("Best Checkpoint (val_angle: %s, val_direction: %s): %s" \
        %(history.history['val_angle_metric'][best_index],\
        history.history['val_direction_metric'][best_index],\
        best_checkpoint))
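
For context, the first two statements of that command only pick a checkpoint file name; the failure happens afterwards, in `utils.load_model`. A minimal sketch of that selection step, assuming made-up metric values in place of `history.history` and plain Python lists in place of the NumPy arrays:

```python
# Made-up validation metrics, one value per epoch (stand-ins for history.history).
val_angle_metric = [0.61, 0.74, 0.70, 0.79]
val_direction_metric = [0.80, 0.85, 0.91, 0.83]

# Sum the two metrics per epoch and pick the epoch with the highest total,
# which mirrors np.argmax over the element-wise sum.
combined = [a + d for a, d in zip(val_angle_metric, val_direction_metric)]
best_index = max(range(len(combined)), key=combined.__getitem__)

# Checkpoints are numbered from 1, so epoch index 3 maps to cp-0004.ckpt.
best_checkpoint = "cp-%04d.ckpt" % (best_index + 1)
print(best_index, best_checkpoint)  # prints: 3 cp-0004.ckpt
```

So the path handed to `utils.load_model` is `checkpoint_path` joined with a name of this form.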

The command returns this error:

`---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

in

2 + np.array(history.history['val_direction_metric']))

3 best_checkpoint = str("cp-%04d.ckpt" % (best_index+1))

----> 4 best_model = utils.load_model(os.path.join(checkpoint_path,best_checkpoint),loss_fn,metric_list)

5 best_tflite = utils.generate_tflite(checkpoint_path, best_checkpoint)

6 utils.save_tflite (best_tflite, checkpoint_path, "best")

~/git/OpenBot/policy/utils.py in load_model(model_path, loss_fn, metric_list)

72

73 def load_model(model_path,loss_fn,metric_list):

---> 74 model = tf.keras.models.load_model(model_path,

75 custom_objects=None,

76 compile=False

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/save.py in load_model(filepath, custom_objects, compile)

188 if isinstance(filepath, six.string_types):

189 loader_impl.parse_saved_model(filepath)

--> 190 return saved_model_load.load(filepath, compile)

191

192 raise IOError(

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in load(path, compile)

114 # TODO(kathywu): Add saving/loading of optimizer, compiled losses and metrics.

115 # TODO(kathywu): Add code to load from objects that contain all endpoints

--> 116 model = tf_load.load_internal(path, loader_cls=KerasObjectLoader)

117

118 # pylint: disable=protected-access

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/saved_model/load.py in load_internal(export_dir, tags, loader_cls)

600 object_graph_proto = meta_graph_def.object_graph_def

601 with ops.init_scope():

--> 602 loader = loader_cls(object_graph_proto,

603 saved_model_proto,

604 export_dir)

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in __init__(self, *args, **kwargs)

186 self._models_to_reconstruct = []

187

--> 188 super(KerasObjectLoader, self).__init__(*args, **kwargs)

189

190 # Now that the node object has been fully loaded, and the checkpoint has

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/saved_model/load.py in __init__(self, object_graph_proto, saved_model_proto, export_dir)

121 self._concrete_functions[name] = _WrapperFunction(concrete_function)

122

--> 123 self._load_all()

124 self._restore_checkpoint()

125

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in _load_all(self)

207 # loaded from config may create variables / other objects during

208 # initialization. These are recorded in _nodes_recreated_from_config.

--> 209 self._layer_nodes = self._load_layers()

210

211 # Load all other nodes and functions.

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in _load_layers(self)

310

311 for node_id, proto in metric_list:

--> 312 layers[node_id] = self._load_layer(proto.user_object, node_id)

313 return layers

314

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in _load_layer(self, proto, node_id)

335 obj, setter = self._revive_from_config(proto.identifier, metadata, node_id)

336 if obj is None:

--> 337 obj, setter = revive_custom_object(proto.identifier, metadata)

338

339 # Add an attribute that stores the extra functions/objects saved in the

~/anaconda3/envs/openbot/lib/python3.8/site-packages/tensorflow/python/keras/saving/saved_model/load.py in revive_custom_object(identifier, metadata)

776 return revived_cls._init_from_metadata(metadata) # pylint: disable=protected-access

777 else:

--> 778 raise ValueError('Unable to restore custom object of type {} currently. '

779 'Please make sure that the layer implements get_config'

780 'and from_config when saving. In addition, please use '

ValueError: Unable to restore custom object of type _tf_keras_metric currently. Please make sure that the layer implements get_configand from_config when saving. In addition, please use the custom_objects arg when calling load_model().

`

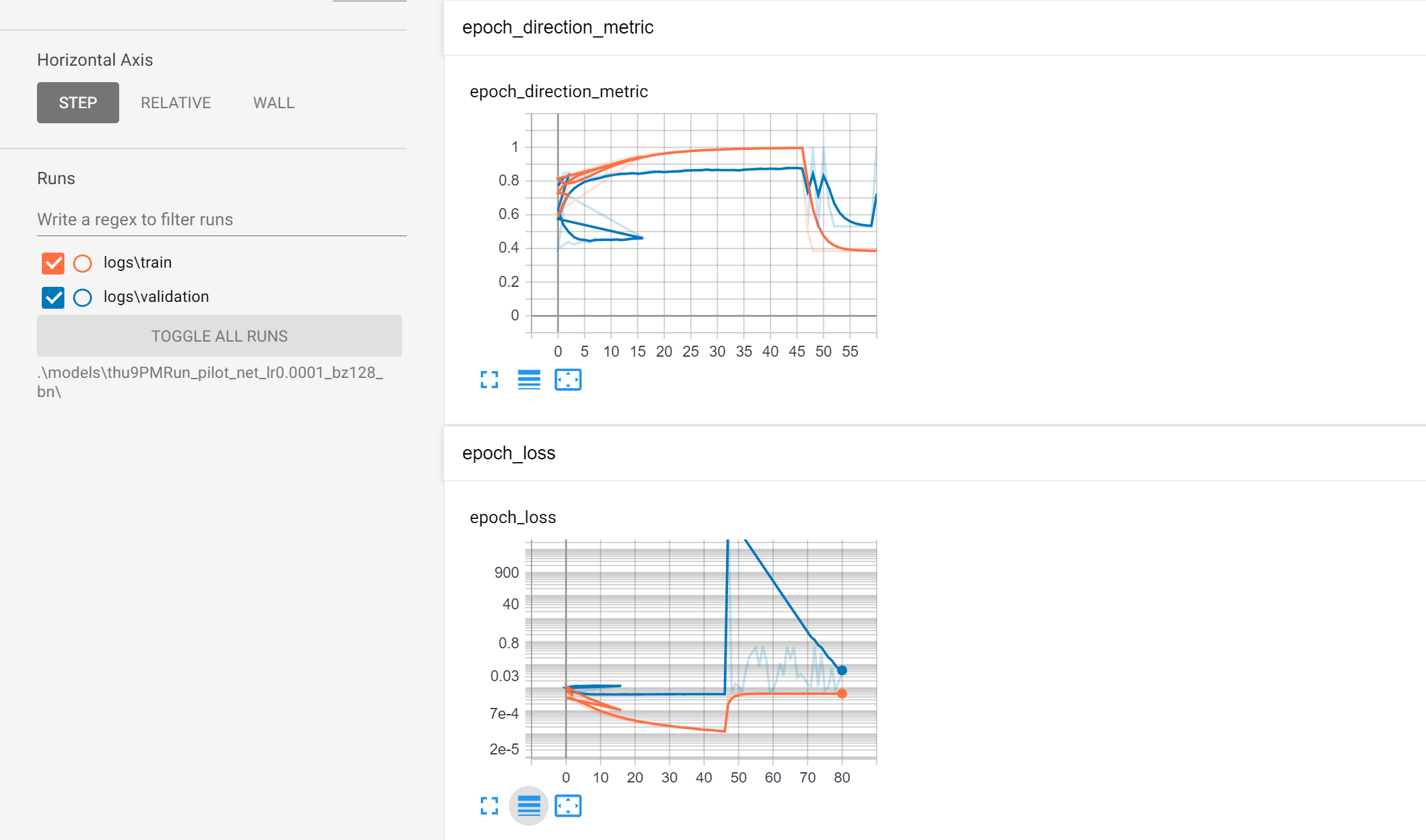

Everything else runs smoothly.