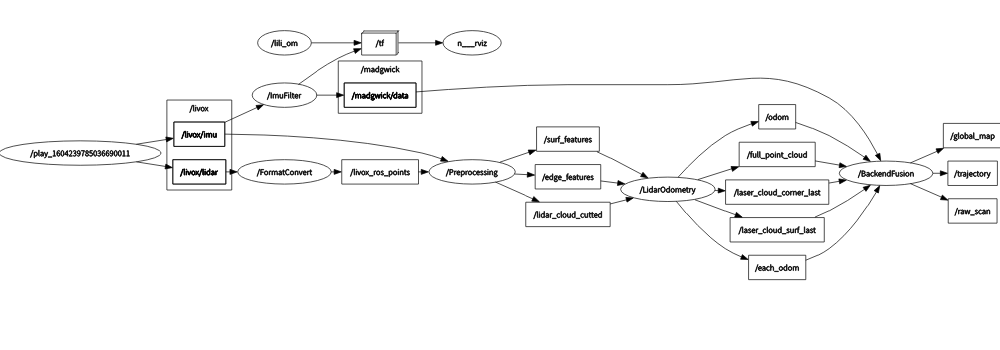

This is the code repository of LiLi-OM, a real-time, tightly-coupled LiDAR-inertial odometry and mapping system for solid-state LiDAR (Livox Horizon) and conventional LiDARs (e.g., Velodyne). It has two variants, each in its own folder:

- LiLi-OM, for the Livox Horizon, with a newly proposed feature extraction module;

- LiLi-OM-ROT, for conventional spinning LiDARs, with a feature extraction module similar to LOAM.

Both variants share the same backend module, which directly fuses LiDAR and (preintegrated) IMU measurements in a keyframe-based sliding-window optimization. Detailed information can be found in the paper below and on YouTube.

If you use any of this code, please cite our LiLi-OM paper, available via IEEE and arXiv:

```bibtex
@article{liliom,
  author={Li, Kailai and Li, Meng and Hanebeck, Uwe D.},
  journal={IEEE Robotics and Automation Letters},
  title={Towards High-Performance Solid-State-LiDAR-Inertial Odometry and Mapping},
  year={2021},
  volume={6},
  number={3},
  pages={5167-5174},
  doi={10.1109/LRA.2021.3070251}
}
```

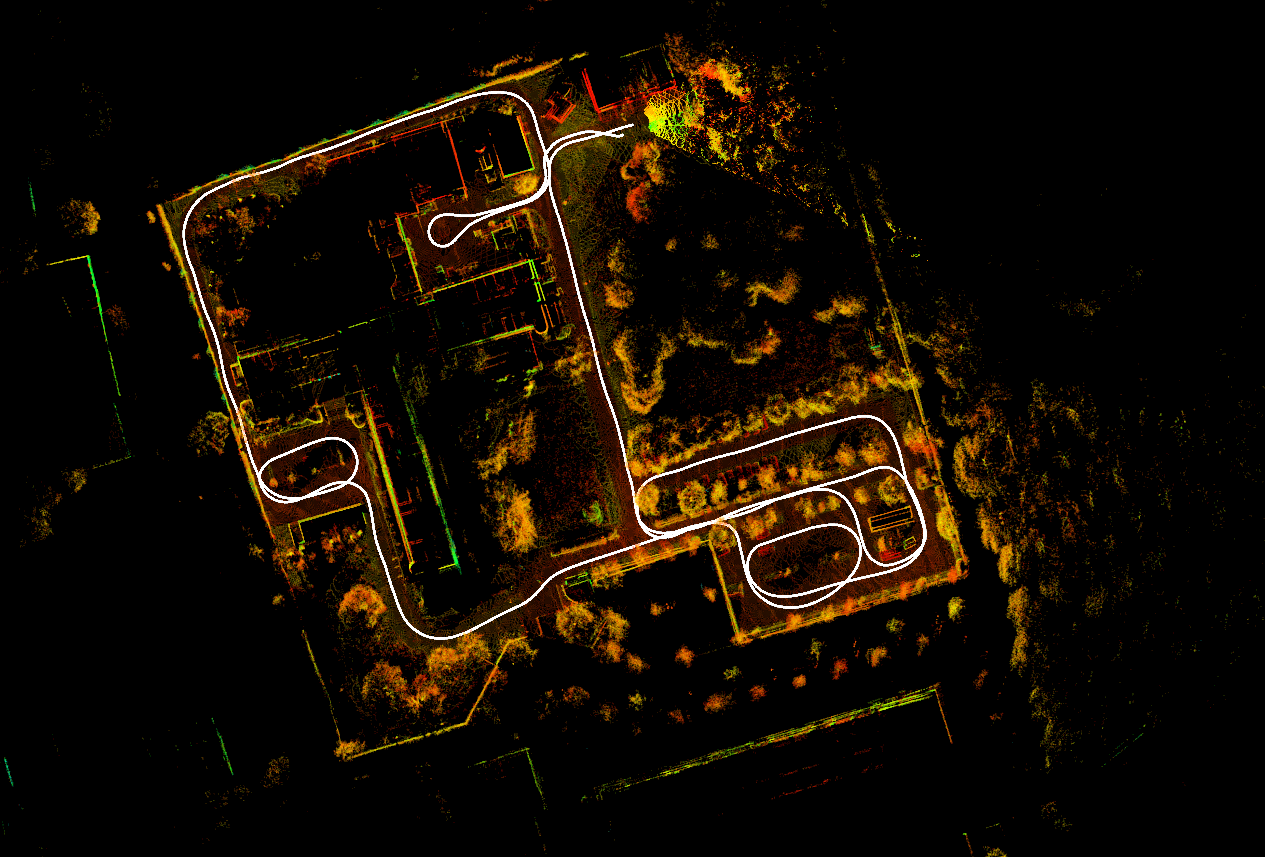

We provide data sets recorded with a Livox Horizon (10 Hz) and an Xsens MTi-670 (200 Hz). They can be downloaded from the isas-server.
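At these rates, roughly 20 IMU samples (200 Hz / 10 Hz) arrive between two consecutive LiDAR scans. A minimal arithmetic sketch; the function name `imu_samples_per_scan` is hypothetical and not part of LiLi-OM:

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of IMU samples arriving during one LiDAR scan,
# computed by integer division of the two sensor rates (both in Hz).
imu_samples_per_scan() {
  local imu_hz="$1" lidar_hz="$2"
  echo $(( imu_hz / lidar_hz ))
}

# Rates of the provided data sets: Xsens MTi-670 at 200 Hz, Livox Horizon at 10 Hz.
imu_samples_per_scan 200 10
```

To check the actual topics and message counts of a downloaded bag, `rosbag info FR_IOSB_Short.bag` lists them.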

System dependencies (tested on Ubuntu 18.04/20.04)

In the ROS workspace:

- livox_ros_driver (v2.5.0), the ROS driver for the Livox Horizon

Compile with catkin_tools:

```shell
cd ~/catkin_ws/src
git clone https://github.com/KIT-ISAS/lili-om
cd ..
catkin build livox_ros_driver
catkin build lili_om
catkin build lili_om_rot
```
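The same build steps can be wrapped in a small shell function, a minimal sketch assuming the workspace lives at `~/catkin_ws` (overridable via `WS`), has already been initialized (e.g., with `catkin init`), and already contains livox_ros_driver in `src/`. The file name `build_lili_om.sh`, the function `build_lili_om`, and the `DRY_RUN` switch (print the commands instead of running them) are hypothetical conveniences, not part of the repository:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the build steps above.
# Assumptions: catkin workspace at $WS (default ~/catkin_ws), already initialized,
# with livox_ros_driver already present in $WS/src.

WS="${WS:-$HOME/catkin_ws}"

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

build_lili_om() {
  local pkg
  run cd "$WS/src" || return 1
  # Clone the repository only if it is not already in the workspace.
  if [ ! -d "$WS/src/lili-om" ]; then
    run git clone https://github.com/KIT-ISAS/lili-om || return 1
  fi
  run cd "$WS" || return 1
  # Build the Livox driver first, then both LiLi-OM variants.
  for pkg in livox_ros_driver lili_om lili_om_rot; do
    run catkin build "$pkg" || return 1
  done
}
```

For example, `source build_lili_om.sh && DRY_RUN=1 build_lili_om` previews the commands and `build_lili_om` runs them (note that it changes the current directory). After building, `source ~/catkin_ws/devel/setup.bash` (default catkin_tools devel layout) has to be run in every new terminal so that `roslaunch` can find the packages.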

- Run a launch file for lili_om or lili_om_rot

- Play the bag file

- Example for running lili_om (Livox Horizon):

```shell
roslaunch lili_om run_fr_iosb.launch
rosbag play FR_IOSB_Short.bag -r 1.0 --clock --pause
```

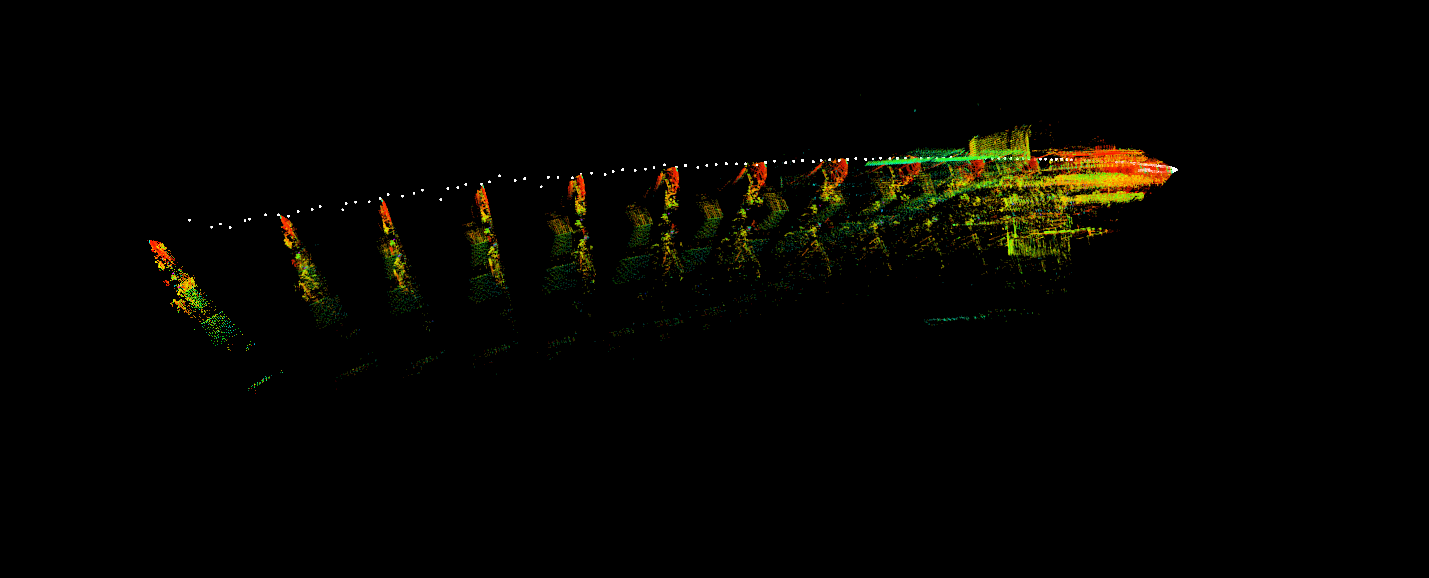

- Example for running lili_om_rot (spinning LiDAR like the Velodyne HDL-64E in the FR_IOSB data set):

```shell
roslaunch lili_om_rot run_fr_iosb.launch
rosbag play FR_IOSB_Short_64.bag -r 1.0 --clock --pause
```

- Example for running lili_om using the internal IMU of Livox Horizon:

```shell
roslaunch lili_om run_fr_iosb_internal_imu.launch
rosbag play FR_IOSB_Short.bag -r 1.0 --clock --pause --topics /livox/lidar /livox/imu
```

For live tests or your own recorded data sets, the system should start from a stationary state.
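Before starting on live data, it can help to confirm that the sensor is actually at rest. The following is a minimal heuristic sketch, not LiLi-OM's own initialization logic: record a short burst of IMU messages with `rostopic echo -p` (CSV-style output with a header row) and check that the largest angular-rate magnitude stays below a threshold. The function name `imu_is_stationary`, the default threshold of 0.05 rad/s, and the sample count are assumptions to adapt to the IMU at hand:

```shell
#!/usr/bin/env bash
# Hypothetical stationarity pre-check (heuristic, not LiLi-OM's own logic).
# Reads `rostopic echo -p` CSV output of a sensor_msgs/Imu topic on stdin.
# Returns 0 if every gyro magnitude is below the threshold (rad/s, assumed),
# 1 if the sensor appears to move, 2 if the input could not be parsed.
imu_is_stationary() {
  local threshold="${1:-0.05}"
  awk -F, -v thr="$threshold" '
    BEGIN { thr += 0 }
    NR == 1 {
      # Locate the angular velocity columns by name in the header row.
      for (i = 1; i <= NF; i++) {
        if ($i == "field.angular_velocity.x") cx = i
        if ($i == "field.angular_velocity.y") cy = i
        if ($i == "field.angular_velocity.z") cz = i
      }
      if (!(cx && cy && cz)) { err = 1; exit }
      next
    }
    {
      n = sqrt($cx * $cx + $cy * $cy + $cz * $cz)
      if (n > max) max = n
      count++
    }
    END {
      if (err || count == 0) exit 2
      printf "max |gyro| = %.4f rad/s over %d samples\n", max, count
      if (max < thr) exit 0
      exit 1
    }'
}
```

For example, `rostopic echo -p -n 200 /livox/imu | imu_is_stationary 0.05 && echo "at rest"` checks about one second of data for a 200 Hz IMU; replace `/livox/imu` with the topic of the IMU actually in use.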

Meng Li (Email: [email protected])

Kailai Li (Email: [email protected])

We recommend VINS-Fusion and LIO-mapping for further reference.

The source code is released under the GPLv3 license.

We are constantly working on improving our code. For technical issues or commercial use, please contact Kailai Li ([email protected]) at the Intelligent Sensor-Actuator-Systems Lab (ISAS), Karlsruhe Institute of Technology (KIT).